If you are deploying services that require fast data, then you might need to use shared SSD storage for your data disks. This is made possible by using a Premium Storage Account with DS-Series or GS-Series virtual machines. Read on to learn more.

More Speed, Scottie!

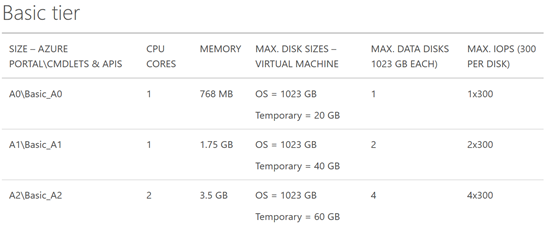

A typical virtual machine will offer up to 300 IOPS (Basic A-Series) or 500 IOPS (Standard A-Series and up) per data disk. There are a few ways to improve data performance:

- More data disks: You can deploy a VM spec that supports more than 1 data disk. If each disk has 500 IOPS, then aggregating the disks multiplies the IOPS. If I store my data across 4 data disks then I have a raw potential 2000 IOPS.

- Disk caching: You can use a D-Series or G-Series to store a cache of frequently accessed data on the SSD-based temporary drive. SSD is a nice way to improve data performance.

- Memory caching: Some applications offer support for caching in RAM. A large-memory VM type such as the G-Series offers up to 448 GB of RAM to hold data sets. Nothing is faster than RAM!
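The "more data disks" arithmetic is simple enough to sketch in a few lines of Python. This is a minimal illustration that assumes identical disks with the data spread evenly across them, and it ignores any per-VM limit; `aggregate_iops` is a hypothetical helper name, not part of any Azure tool.

```python
# Per-disk IOPS figures from the text above.
BASIC_DISK_IOPS = 300     # Basic A-Series
STANDARD_DISK_IOPS = 500  # Standard A-Series and up


def aggregate_iops(disk_count: int, iops_per_disk: int) -> int:
    """Hypothetical helper: raw combined IOPS of identical data disks.

    Assumes the data is spread evenly across the disks, so the raw
    potential is simply the per-disk figure multiplied by the count.
    """
    if disk_count < 1:
        raise ValueError("at least one data disk is required")
    return disk_count * iops_per_disk


if __name__ == "__main__":
    print(aggregate_iops(4, STANDARD_DISK_IOPS))  # 4 x 500
    print(aggregate_iops(4, BASIC_DISK_IOPS))     # 4 x 300
```

With 4 standard data disks this gives the raw potential of 2000 IOPS mentioned above; real-world results depend on how evenly the workload actually hits each disk.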

Shared SSD Storage

Although there is nothing faster than RAM, there are a couple of gotchas:

- If you have a large data set then you might not have enough RAM to cache in.

- G-Series VMs are expensive – the cloud is all about more, smaller VMs.

If an SSD cache is not big enough either, then shared SSD storage for data disks might offer a happy medium: lots of IOPS and low latency. It’s not as fast as RAM, but it’s still plenty fast! This is why Microsoft gave us the DS- and GS-Series virtual machines, which use Premium Storage.

Premium Storage

Shared SSD-based storage is possible only with the DS- and GS-Series virtual machines – note that DS- and GS-Series VMs can use standard storage too. Each spec offers support for a different number of data disks. There are some things to note with Premium Storage:

- OS disk: By default, the OS disk is stored in the same premium storage account as the premium data disks if you just go next-next-next. It’s possible to create the OS disk in a standard storage account to save money – remember that data needs the speed, not the OS.

- Spanning storage accounts: You can exceed the limits (35 TB) of a single premium storage account by attaching data disks from multiple premium storage accounts.

- VM spec performance limitations: Each VM spec limits the amount of throughput that it supports to premium storage – some VMs will run slower than the potential of the data disks. Make sure that you choose a spec that supports enough throughput.

- Page blobs: Premium storage can only be used to store VM virtual hard disks.

- Resiliency: Premium Storage is LRS only. Consider snapshots or VM backups if you need more insurance.

- Region support: Only a subset of regions support shared SSD storage at this time: East US2, West US, West Europe, Southeast Asia, Japan East, Japan West, Australia East.

- Premium storage account: You must deploy a premium storage account (PowerShell or Preview Portal); you cannot use a standard storage account which is bound to HDD-based resources.
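The "VM spec performance limitations" point boils down to taking the lesser of two numbers. Here is a minimal Python sketch, assuming the ceiling is simply the smaller of the VM spec's premium storage cap and the combined throughput of the attached premium data disks; `effective_throughput_mbps` is a hypothetical name. The DS1 (32 MB/second), DS3 (128 MB/second) and P10 (100 MB/second) figures come from this post.

```python
from typing import Sequence


def effective_throughput_mbps(vm_cap_mbps: float,
                              disk_throughputs_mbps: Sequence[float]) -> float:
    """Hypothetical helper: the usable premium storage throughput ceiling.

    The VM spec caps throughput to premium storage, so the result is the
    lesser of that cap and the sum of what the data disks could deliver.
    """
    return min(vm_cap_mbps, sum(disk_throughputs_mbps))


if __name__ == "__main__":
    P10_MBPS = 100
    print(effective_throughput_mbps(32, [P10_MBPS]))             # DS1, one P10
    print(effective_throughput_mbps(128, [P10_MBPS]))            # DS3, one P10
    print(effective_throughput_mbps(128, [P10_MBPS, P10_MBPS]))  # DS3, two P10s
```

A single P10 disk on a DS1 is held back to 32 MB/second by the VM, while two P10 disks on a DS3 are held back to 128 MB/second by the VM rather than by the disks.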

The maximum sizes and bandwidth of Azure premium storage


Premium Storage Data Disks

Standard storage data disks are actually quite simple compared to premium storage data disks. If you use the UI, then you can only create data disks of the following sizes and specifications:

The 3 premium storage disk size baselines


However, you can create a premium storage data disk of your own size, up to 1023 GB (the normal Azure VHD limit). Note that Azure will round up the size of the data disk to determine the performance profile based on the above table. So if I create a 50 GB premium storage VHD, it will have the same performance profile as a P10 (128 GB) VHD with 500 IOPS and 100 MB per second potential throughput (see VM spec performance limitations, above).
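The rounding rule can be sketched in Python as "pick the smallest tier that holds the requested size." The P10 figures (128 GB, 500 IOPS, 100 MB/second) and the 1023 GB limit are from the text; the P20 and P30 figures are assumed from Microsoft's published table of the time and may have changed, so check the current documentation. `tier_for_size` is a hypothetical helper name.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PremiumTier:
    name: str
    size_gb: int
    iops: int
    throughput_mbps: int


# P10 is from the text; P20/P30 values are assumptions (Microsoft's table of the time).
TIERS = (
    PremiumTier("P10", 128, 500, 100),
    PremiumTier("P20", 512, 2300, 150),
    PremiumTier("P30", 1024, 5000, 200),
)

MAX_VHD_GB = 1023  # the normal Azure VHD limit mentioned above


def tier_for_size(size_gb: int) -> PremiumTier:
    """Hypothetical helper: round a requested size up to the smallest tier that holds it."""
    if not 1 <= size_gb <= MAX_VHD_GB:
        raise ValueError(f"size must be between 1 and {MAX_VHD_GB} GB")
    for tier in TIERS:  # ordered smallest to largest
        if size_gb <= tier.size_gb:
            return tier
    raise RuntimeError("unreachable: P30 covers the 1023 GB maximum")


if __name__ == "__main__":
    tier = tier_for_size(50)
    print(tier.name, tier.iops, tier.throughput_mbps)
```

A 50 GB disk lands on P10, matching the example above; a 129 GB disk would jump to the next tier.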

Pricing

You can find the pricing for premium storage on the same page as standard storage. Billing is based on the 3 models of data disk: P10, P20, and P30. As with performance, the size of your disk is rounded up to the next model, and you are charged for that model's size rather than for the amount of storage actually consumed.

If you use snapshots then there is an additional billing rate.

Example

I have been asked to deploy an Azure DS-Series virtual machine in Western Europe with 100 GB of storage. I must be able to support up to 100 MB/second. The virtual machine only needs 1 vCPU and 3.5 GB RAM.

So, let’s start with the VM. 1 vCPU and 3.5 GB RAM steers me towards the DS1 virtual machine. If I check out that spec I find that the VM meets the CPU and RAM requirements. But check out the last column: the DS1 only supports a throughput of 32 MB/second, which is well below the 100 MB/second that is required. I need to upgrade to a more expensive DS3 that has 4 vCPUs and 14 GB RAM, and supports up to 128 MB/second.
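The sizing decision above is really "walk the specs from smallest to largest and stop at the first one that meets every requirement." Here is a minimal Python sketch of that logic. The DS1 and DS3 figures are from this post; the DS2 figures (2 vCPUs, 7 GB RAM, 64 MB/second) are assumed from Microsoft's DS-Series table of the time. `VmSpec` and `smallest_spec` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class VmSpec:
    name: str
    vcpus: int
    ram_gb: float
    premium_throughput_mbps: int


# Ordered smallest to largest. DS2 values are an assumption (published table of the time).
DS_SPECS = (
    VmSpec("DS1", 1, 3.5, 32),
    VmSpec("DS2", 2, 7, 64),
    VmSpec("DS3", 4, 14, 128),
)


def smallest_spec(vcpus: int, ram_gb: float,
                  throughput_mbps: int) -> Optional[VmSpec]:
    """Hypothetical helper: first (smallest) spec meeting all three requirements."""
    for spec in DS_SPECS:
        if (spec.vcpus >= vcpus
                and spec.ram_gb >= ram_gb
                and spec.premium_throughput_mbps >= throughput_mbps):
            return spec
    return None  # nothing in this short list is big enough


if __name__ == "__main__":
    choice = smallest_spec(vcpus=1, ram_gb=3.5, throughput_mbps=100)
    print(choice.name if choice else "no match")
```

Even though CPU and RAM only call for a DS1, the 100 MB/second requirement pushes the answer up to the DS3.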

Note: I have searched high and low and cannot find a public price for DS- or GS-Series virtual machines. As far as I know, the only pricing is in the “Ibiza” preview portal. There I could see that the DS3 will cost around €399/month, compared to around €352/month for the D3.

[EDIT] A comment from Samir Farhat (below) made me go back and dig. So, the pricing page does mention DS- and GS-Series virtual machines. GS-Series are the same price as G-Series. However, the page incorrectly says that DS-Series pricing is based on that of the D-Series. That might have been true once, but the D-Series was reduced in price and the DV2-Series was introduced. Now, the D-Series is cheaper than the DS-Series. The DS-Series is the same price as the DV2-Series. I’ve checked the pricing in the Azure Preview Portal to confirm.

If I use PowerShell, I can create a 100 GB data disk in the premium storage account. Azure will round this disk up to the P10 size to determine the pricing and the performance. My 100 GB disk will offer:

- 500 IOPS

- 100 MB/second (which was more than the DS1 or DS2 could offer)

The pricing will be €18.29 per month for the disk. But don’t forget that there are other elements in the VM pricing, such as the OS disk, temporary disk, and more.

One could use storage account snapshots to “backup” the VM, but the last I heard this was disruptive to service and not supported. There’s also a steep per-GB cost. Use Azure Backup for IaaS VMs instead, and you can use much cheaper blobs in standard storage to perform policy-based, non-disruptive backups of the entire VM.