In this Festive Tech Calendar post, I am going to explain how to get Private Endpoints working in the real world.

Thank you to the team that runs Festive Tech Calendar every year for the work that they do and for raising funds for worthy causes.

Private Endpoints

When The Cloud was first envisioned, it was built as a platform that didn’t really take network security seriously. The resources that developers want to use, Platform-as-a-Service (PaaS), were built to have only public endpoints. In the case of Microsoft Azure, if I deploy an App Service Plan, the compute that is provisioned for me shares one or more public IP addresses with plans from other tenants. The App Service Plan is accessible directly on the Internet – that’s even true when you enable “firewall rules” in an App Service, because those rules only control which HTTP/S requests will be responded to, so raw TCP connections (zero day attacks) are still possible.

If I want to protect that App Service Plan I need to make it truly private by connecting it to a virtual network, using a private IP address, and maybe placing a Web Application Firewall in the flow of the client connection.

The purpose of Private Endpoint is to alter the IP address that is used to connect to a platform resource. The public endpoint is, preferably, disabled for inbound connections and clients are redirected to a private IP address.

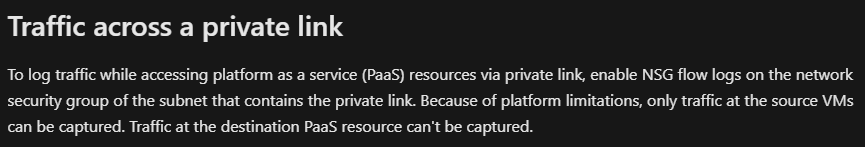

When we enable a Private Endpoint for a PaaS resource, a Private Endpoint resource is added and a NIC is created. The NIC is connected to a subnet in a virtual network and obtains or is supplied with an IP address for that subnet. All client connections will be via that private IP address. And this is where it all goes wrong in the real world.

If I browse myapp.azurewebsites.net my PC will resolve that name to the public endpoint IP address – even after I have implemented a Private Endpoint. That means that I have to redirect my client to the new IP address. Nothing on The Internet knows that private IP address mapping. The only way to map the FQDN of the App Service to the private endpoint is to use Private DNS.
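To make that redirection concrete, here is a minimal sketch of the lookup in Python. It is a toy model, not real DNS: the two dictionaries stand in for public DNS and an Azure Private DNS Zone, the IP addresses are made up, and the CNAME from the public name to a privatelink name is a simplification of how I understand Azure publishes these records.

```python
# Toy model of name resolution with and without Private DNS.
# All records and IP addresses below are illustrative assumptions.
PUBLIC_DNS = {
    "myapp.azurewebsites.net": ("CNAME", "myapp.privatelink.azurewebsites.net"),
    "myapp.privatelink.azurewebsites.net": ("A", "203.0.113.10"),  # public endpoint
}

PRIVATE_ZONE = {
    "myapp.privatelink.azurewebsites.net": ("A", "10.1.2.4"),  # Private Endpoint NIC
}


def resolve(name, use_private_dns):
    """Follow CNAMEs until an A record is found.

    When the client can see the private zone, a record in that zone
    takes priority over the public record of the same name.
    """
    for _ in range(10):  # guard against CNAME loops
        record = PRIVATE_ZONE.get(name) if use_private_dns else None
        if record is None:
            record = PUBLIC_DNS.get(name)
        if record is None:
            raise LookupError(f"no record for {name}")
        record_type, value = record
        if record_type == "A":
            return value
        name = value  # follow the CNAME
    raise LookupError("CNAME chain too long")


print(resolve("myapp.azurewebsites.net", use_private_dns=False))
print(resolve("myapp.azurewebsites.net", use_private_dns=True))
```

The client asks for the same FQDN in both cases. The only difference is whether its DNS path can see the private zone, and that is the whole problem that the rest of this post is about.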

You might remember this phrase for troubleshooting on-premises networks: “it’s always DNS”. In Azure, “it’s always routing, then it’s always DNS”, but the DNS part is what we need to figure out, not just for this App Service but for all workloads/resource types.

The Problems

There are three main issues:

- Microsoft Documentation

- Developers don’t do infrastructure

- Who does DNS in The Cloud?

Microsoft Documentation

The documentation for Private Endpoint ranges from excellent to awful. That variance depends on the team/resource type that is covered by the documentation. Each resource team is responsible for their own implementation/documentation. And that means some documentation is good and clear, while some documentation should never have made it past a pull request.

The documentation on how to use Private Endpoint focuses on single workloads. You’ll find the same is true in the certification exams on Microsoft networking. In the real world, we have many workloads. Clients need to access those workloads over virtual networks. Those workloads integrate with each other, and that means that they must also resolve each other’s names. This name resolution must work for resources inside of individual workloads, for workload-to-workload communications, and for on-premises client-to-workload communications. You can eventually figure out how to do this from Microsoft documentation but, in my experience, many organisations give up during this journey and assume that Private Endpoint does not work.

Developers Don’t Do Infrastructure

Imagine asking a developer to figure out virtual networks and subnetting! OK, let’s assume you have reimagined IT processes and structures (like you are supposed to) and have all that figured out.

Now you are going to ask a developer to understand how DNS works. In the real world, most devs know their market verticals, language(s) and (quite complex) IDE toolset, and everything else is not important. I’ve had the pleasure of talking devs through running NSLOOKUP (something we IT pros often consider simple) and I basically ran a mini-class.

Assuming that a dev knows how DNS works and should be architected in The Cloud is a path to failure.

Who Does DNS In The Cloud?

I have lost track of how many cloud journeys I have been a part of, either from the start or where I joined a struggling project. A common wish for many of those customers is that they won’t run any virtual machines (some organisations even “ban” VMs) – I usually laugh and promise them some VMs later. Their DNS is usually based on Windows Server/Active Directory and, with no VMs in their future, they assume that they don’t need any DNS system.

If there is no DNS architecture, then how will a system, such as Private Endpoint, work?

A Working Architecture

I’m going to jump straight to a working architecture. I’ll start with a high-level design and then talk about some of the low-level design options.

This design works. It might not be exactly what you require but simple changes can be made for specific scenarios.

High-Level Design

A Private DNS Zone is created for each resource type, and for each service type within that resource, for which a Private Endpoint is deployed. Those zones are deployed centrally and are associated with a virtual network/subnet that will be dedicated to a DNS service.

The DNS service of your choice will be deployed to the DNS virtual network/subnet. Forwarders will be configured on that DNS service to point to the “magic Azure virtual IP address” 168.63.129.16. That is an address that is dedicated to Azure services – if you send DNS requests to it then they will be handled by:

- Azure Private DNS zones, looking for a matching zone/record

- Azure DNS, which can resolve Azure Public DNS Zones or resolve Internet requests – ah you don’t need proxy DNS servers in a DMZ now because Azure becomes that proxy DNS server!

Depending on the detailed design, your DNS servers can also resolve on-premises records to enable Azure-to-on-premises connections – important for migration windows while services exist in two locations, connections to partners via private connections, and when some services will stay on-premises.
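The forwarding logic on those DNS servers can be sketched in a few lines of Python. This is only an illustration of the decision that the DNS server makes, not the configuration of any particular DNS product: the on-premises zone name and server addresses are hypothetical, and only 168.63.129.16 comes from the design above.

```python
AZURE_DNS_VIP = "168.63.129.16"

# Hypothetical conditional forwarders for zones that live on-premises.
CONDITIONAL_FORWARDERS = {
    "corp.example.com": ["192.168.10.5", "192.168.10.6"],
}


def pick_forwarders(query_name):
    """Return the upstream DNS servers for a query.

    The longest matching zone suffix wins. Anything without a match
    goes to the Azure virtual IP, which answers from Azure Private DNS
    Zones first and from Azure DNS otherwise.
    """
    labels = query_name.rstrip(".").lower().split(".")
    for i in range(len(labels)):
        suffix = ".".join(labels[i:])
        if suffix in CONDITIONAL_FORWARDERS:
            return CONDITIONAL_FORWARDERS[suffix]
    return [AZURE_DNS_VIP]


print(pick_forwarders("fileserver.corp.example.com"))
print(pick_forwarders("mystorage.blob.core.windows.net"))
```

Note that there is nothing Private Endpoint-specific in the default case. Every query that is not for an on-premises zone is sent to the same address, and Azure works out whether a linked Private DNS Zone should answer it.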

All other virtual networks in your deployment (my design assumes you have a hub & spoke for a mid/large scale deployment) will have custom DNS servers configured to point at the DNS servers in the DNS Workload.

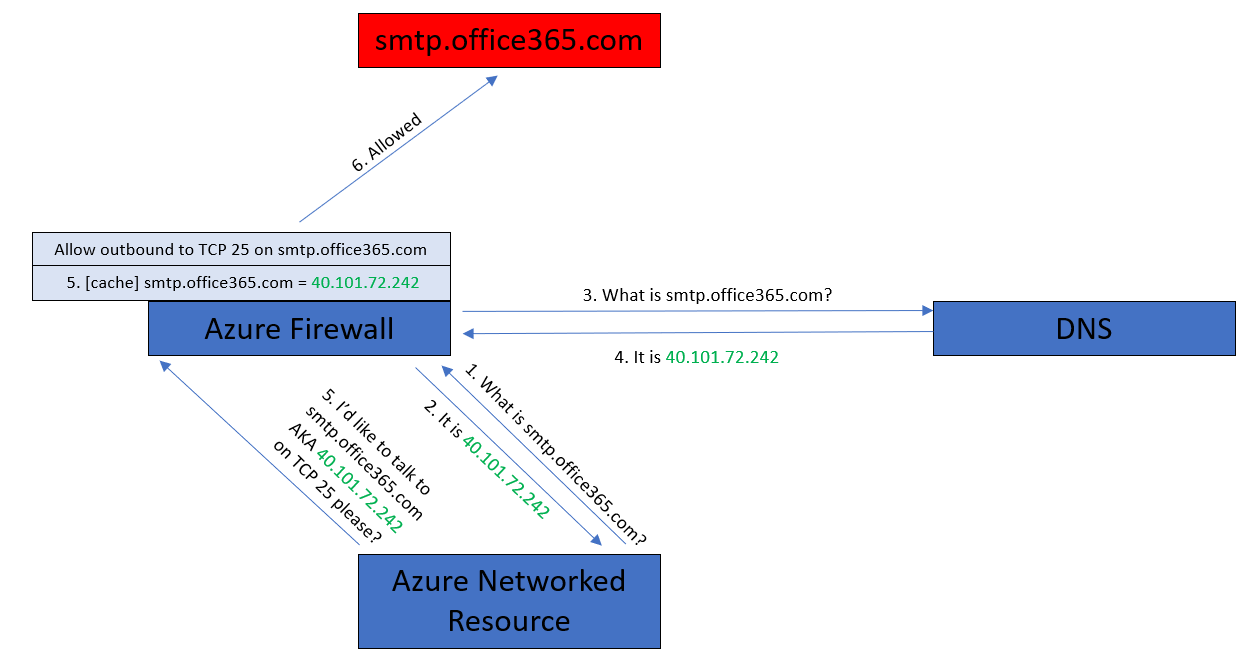

One interesting option here is Azure Firewall in the hub. If you want to enable FQDNs in Network Rules then you will:

- Enable DNS Proxy mode in the Azure Firewall.

- Configure the DNS server IP addresses in the Azure Firewall.

- Use the private IP address of the Azure Firewall (a HA resource type) as your DNS server in the virtual networks.

Low-Level Design

There are different options for your DNS servers:

- Azure Private DNS Resolver

- Active Directory Domain Services (ADDS) Domain Controllers

- Simple DNS Servers

In an ideal world, you would choose Azure Private DNS Resolver. This is a pure PaaS resource that can be managed as code – remember “VMs are banned”. You can forward to Azure Private DNS Zones and forward to on-premises/remote DNS servers. Unfortunately, Azure Private DNS Resolver is a relatively expensive resource and the design and requirements are complex. I haven’t really used Azure Private DNS Resolver in the real world so I cannot comment on compatibility with complex on-premises DNS architectures, but I can imagine there being issues with organisations such as universities where every DNS technology known to mankind since the early 1990’s is probably employed.

Most of the customers that I have worked with have opted to use Domain Controllers (DCs) in Azure as their DNS servers. The DCs store all the on-premises AD-integrated zones and can resolve records independently of on-premises DNS servers. The interface is familiar to Windows admins and easily configured and managed. This increases usability and compatibility. If you choose a modest B-series SKU then the cost will be quite a bit lower than Azure Private DNS Resolver. You’ll also have an ADDS presence in Azure enabling legacy workloads to use their required authentication/authorisation methods.

The third option is to just use a simple Windows or Linux VM as the DNS server. This is a good choice where ADDS is not required or where Linux DNS is required.

The Private Endpoint

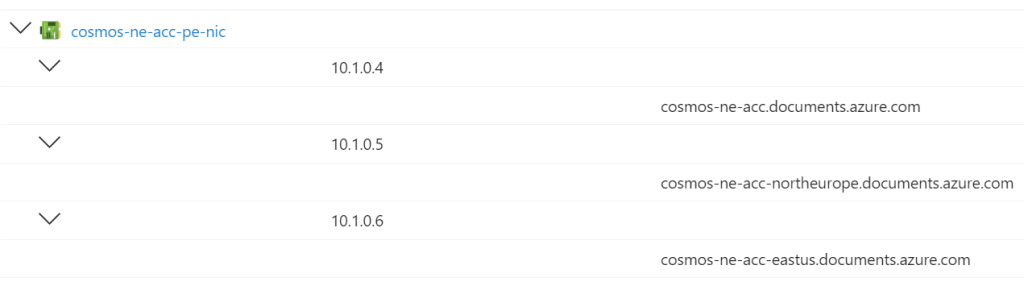

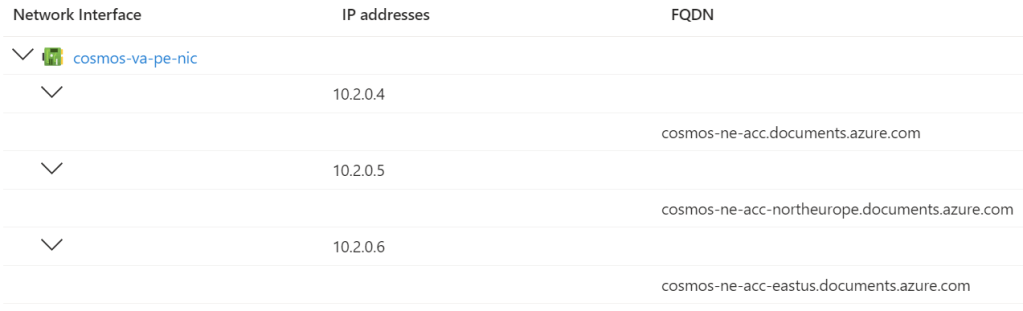

I mentioned that a Private Endpoint/NIC combination would be deployed for each resource/service type that requires private connectivity. For example, a Storage Account can have blob, table, queue, web, file, dfs, afs, and disks services. We need to be able to redirect the client to the specific service – that means creating a DNS record in the correct Azure Private DNS Zone, such as privatelink.blob.core.windows.net. Some workloads, such as Cosmos DB, can require multiple DNS records – how do you know what to create?

Luckily, there is a feature in Private Endpoint that handles auto-registration for you:

- All of the required DNS records are created in the correct DNS zones – you must have the Azure Private DNS Zones deployed beforehand.

- If your resource changes IP address, the DNS records will be updated automatically.

Sadly, I could not find any documentation for this feature while writing this article. However, it’s an easy feature to configure. Open your new Private Endpoint and browse to DNS Configuration. There you can see the required DNS records for this Private Endpoint.

Click Add Configuration and supply the requested information. From now on, that Private Endpoint will handle record registration/updates for you. Nice!
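To give a feel for what that registration saves you from maintaining by hand, here is a rough Python sketch of the record that is needed for one Storage Account service. The blob zone name is the one mentioned above; the other zone names follow the same pattern as I understand it, and the account name and IP address are made up. The function is my own illustration, not an Azure API.

```python
# Storage Account service -> Azure Private DNS Zone (partial list).
STORAGE_ZONES = {
    "blob": "privatelink.blob.core.windows.net",
    "table": "privatelink.table.core.windows.net",
    "queue": "privatelink.queue.core.windows.net",
    "file": "privatelink.file.core.windows.net",
    "web": "privatelink.web.core.windows.net",
    "dfs": "privatelink.dfs.core.windows.net",
}


def private_record(account, service, private_ip):
    """Describe the A record that points a storage service at a Private Endpoint."""
    zone = STORAGE_ZONES[service]  # KeyError for a service not listed above
    return {
        "zone": zone,
        "name": account,
        "type": "A",
        "value": private_ip,
        "fqdn": f"{account}.{zone}",
    }


record = private_record("mystorage", "blob", "10.1.2.5")
print(record["fqdn"], "->", record["value"])
```

One record per service per resource adds up quickly, and that is before you reach the resource types that need several records each. Letting the Private Endpoint do the registration is the sensible default.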

With a central handler for DNS name resolution, on-premises clients have the ability to connect to your Private Endpoints – subject to network security rules. On-premises DNS servers should be configured with conditional forwarders (one for each Private Link Azure Private DNS Zone) to point at your Azure DNS servers – they can point at an Azure Firewall if the previously mentioned DNS options are used.
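The list of conditional forwarders is easy to derive from the list of zones that you have deployed. The Python sketch below only builds that list as data; the zone names and DNS server addresses are assumptions for illustration, and how you apply the result depends on your on-premises DNS product.

```python
def conditional_forwarders(zones, azure_dns_servers):
    """Build one conditional forwarder entry per zone.

    Duplicate zones are dropped and the result is sorted so that the
    output is stable between runs.
    """
    return [
        {"zone": zone, "forward_to": list(azure_dns_servers)}
        for zone in sorted(set(zones))
    ]


# Hypothetical inputs: the zones deployed centrally and the private IP
# addresses of the DNS servers (or the Azure Firewall) in Azure.
deployed_zones = [
    "privatelink.blob.core.windows.net",
    "privatelink.azurewebsites.net",
    "privatelink.blob.core.windows.net",
]
azure_dns_servers = ["10.0.1.4", "10.0.1.5"]

for entry in conditional_forwarders(deployed_zones, azure_dns_servers):
    print(entry["zone"], "->", ", ".join(entry["forward_to"]))
```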

Some Complexities

Like everything, this design is not perfect. Centralised anything comes with authorisation/governance issues. Anyone deploying a Private Endpoint will require the rights to access the Azure Private DNS Zones/records. In the wrong hands, that could become a ticketing nightmare where simple tasks take 6 weeks – far from the agility that we dream of in The Cloud.

Conclusion

The above design is one that I have been using for years. It has evolved a little as new features/resources have been added to Azure but the core design has remained the same. It works and it is scalable. Importantly, once it is built, there is little for the devs to know about – just enable DNS Configuration in the Private Endpoint.

Tweaks can be made. I’ve discussed some DNS server options – some choose to dispense with DNS Servers altogether and use Azure Firewall as the DNS server, which forwards to the default Azure DNS services. On-premises DNS servers can forward to Azure Firewall or to the DNS servers. But the core design remains the same.