September is a month of storms. There appears to have been lots of activity in the Azure cloud last month too. Everyone working on Azure should pay attention to the PAY ATTENTION! section.

PAY ATTENTION!

Default outbound access for VMs in Azure will be retired: transition to a new method of internet access

On 30 September 2025, default outbound access connectivity for virtual machines in Azure will be retired. After this date, all new VMs that require internet access will need to use explicit outbound connectivity methods such as Azure NAT Gateway, Azure Load Balancer outbound rules, or a directly attached Azure public IP address.

There will be more communications on this from Microsoft. But this is more than a “don’t worry about your existing VMs” situation. What happens when you add more VMs to an existing old network? What happens when you do a restore? What happens when you do an Azure Site Recovery failover? Those are all new VMs in old networks and they are affected. Everyone should do some work to see if they are affected and prepare remediations in advance – not on the day when they are stressed out by a restore or a Black Friday expansion.
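One way to start that check is to list your VMs and flag any that have none of the three explicit methods named in the announcement. The following is a minimal Python sketch under stated assumptions: the `VmInfo` record and its fields are hypothetical stand-ins for whatever inventory export you have, not an Azure SDK type, and the sample data is made up.

```python
from dataclasses import dataclass


@dataclass
class VmInfo:
    """Hypothetical inventory record; the field names are illustrative only."""
    name: str
    has_public_ip: bool            # public IP attached directly to the VM
    subnet_has_nat_gateway: bool   # NAT Gateway associated with the VM's subnet
    lb_outbound_rule: bool         # VM is covered by a load balancer outbound rule


def relies_on_default_outbound(vm: VmInfo) -> bool:
    """True when the VM has none of the three explicit outbound methods."""
    return not (vm.has_public_ip or vm.subnet_has_nat_gateway or vm.lb_outbound_rule)


def audit(vms: list[VmInfo]) -> list[str]:
    """Return the names of VMs that need a closer look, sorted for stable output."""
    return sorted(vm.name for vm in vms if relies_on_default_outbound(vm))


# Made-up sample inventory.
inventory = [
    VmInfo("web01", has_public_ip=False, subnet_has_nat_gateway=True, lb_outbound_rule=False),
    VmInfo("sql01", has_public_ip=False, subnet_has_nat_gateway=False, lb_outbound_rule=False),
    VmInfo("jump01", has_public_ip=True, subnet_has_nat_gateway=False, lb_outbound_rule=False),
]
print(audit(inventory))
```

The sketch only checks the three methods listed in the announcement; anything it flags still needs a human to confirm how that VM really reaches the internet.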

App Service Environment version 1 and version 2 will be retired on 31 August 2024

After 31 August 2024, App Service Environment v1 and v2 will no longer be supported. These App Service Environments and the applications running on them will be deleted, and any application data associated with them will be lost.

Oh yeah, you’d better start working on migrations now.

Azure Kubernetes Service

Application Gateway for Containers vs Application Gateway Ingress Controller – What’s changed?

Application Gateway for Containers is a new application (layer 7) load balancing and dynamic traffic management product for workloads running in a Kubernetes cluster. At the time of writing, this service is in public preview. In this article we will look at the differences between AGIC and Application Gateway for Containers and some of the great new features available through this new offering.

I know little about AKS but this subject seems to have excited some AKS users.

A Bucket Load Of Stuff

Too much for me to get into and I don’t know enough about this stuff:

- GA: Dedicated table support for AKS diagnostics logs

- GA: AKS image cleaner

- GA: Vertical Pod Autoscaling add-on for AKS

- GA: Support for Python 3.11 in Azure Functions

- GA: Node OS patching – NodeImage feature in AKS

App Services

Announcing Public Preview of Free Hosting Plan for WordPress on App Service

We announced the General Availability of WordPress on App Service one year ago, in August 2022, with 3 paid hosting plans. We learnt that sometimes you might need to try out the service before you migrate your production applications. So, we are offering you a playground for a limited period – a free hosting plan to explore and experiment with WordPress on App Service. This will help you understand the offering better before you make a long-term investment.

They really want you to try this out – note that this plan is not for production workloads.

Hybrid

Announcing the General Availability of Jumpstart HCIBox

Almost one year ago the Jumpstart team released the public preview of HCIBox, our self-contained sandbox for exploring Azure Stack HCI capabilities without the need for physical hardware. Feedback from the community has been fantastic, with dozens of feature requests and issues submitted and resolved through our open-source community.

Today, the Jumpstart team is excited to announce the general availability of HCIBox!

It’s one thing to test out the software functionality of Azure Stack HCI. But the reality is that this is a hardware-centric solution and there is no simulating the performance, stability, or operations of something this complex.

Generally Available: Windows Server 2012 and 2012 R2 Extended Security Updates enabled by Azure Arc

Windows Server 2012 and 2012 R2 Extended Security Updates (ESUs) enabled by Azure Arc is now Generally Available. Windows Server 2012 and 2012 R2 are going End of Support on October 10, 2023. With ESUs, customers who are running Windows Server 2012 on-premises or in other clouds can get three more years of critical security updates from Microsoft to protect their End of Life infrastructure.

This is not free. This is tied into the news about Azure Update Manager (below).

Miscellaneous

Detailed CSP to EA Migration guidance and crucial considerations

In this blog, I’ve shared insights drawn from real-world migration experiences. This article can help you meticulously plan your own CSP to EA migration, ensuring a smoother transition while incorporating critical considerations into your migration strategy.

One really wishes that CSP, EA, etc. were just differences in billing and not Azure APIs. Changing billing should be like changing a phone plan.

Top 10 Considerations for running your workload successfully on Azure this Holiday Season

Black Friday, Small Business Saturday and Cyber Monday will test your app’s limits, and so it’s time for your Infrastructure and Application teams to ensure that your platforms deliver when they are needed the most. Be it shopping applications on the web and mobile or payment gateways or banking systems supporting payments or inventory systems or billing systems – anything and everything associated with the shopping season should be prepared to face the load for this holiday season.

The “holiday season” starts earlier every year. Tesco Ireland started in August. Amazon has a Prime Day next Tuesday (October 10). These events test systems harder than ever and monolithic on-prem designs will not handle it. It’s time to get ready – if it’s not already too late!

Ungated Public Preview: Azure API Center

We’re thrilled to share that Azure API Center is now open for everyone to try during our ungated public preview! Azure API Center is a new Azure service that is part of the Azure API Management platform. It is the central hub where you can effortlessly keep track of all your APIs company-wide, making them readily discoverable, reusable, and manageable.

Managing a catalog of APIs could be challenging. Tooling is welcome.

Generally available: Secure critical infrastructure from accidental deletions at scale with Policy

We are thrilled to announce the general availability of DenyAction, a new effect in Azure Policy! With the introduction of DenyAction, policy enforcement now expands into blocking requests based on actions to the resource. These DenyAction policy assignments can safeguard critical infrastructure by blocking unwarranted delete calls.

Can you believe that Azure was deliberately designed not to have a deny permission? Adding it afterwards is not easy. The idea here is that delete locks on resources/resource groups are too easy to remove, and are frequently removed. Something like a policy, which is enforced in the API (between you and the resources), is always applied, is not easy to remove, and can be easily deployed at scale.
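For illustration, here is roughly what the rule portion of such a policy might look like, built as a Python dictionary and printed as JSON. Treat it as a sketch, not a tested definition: the `denyAction` effect and the `delete` action come from the announcement, but the resource type and the tag filter are placeholder assumptions, so check the Azure Policy documentation before deploying anything like it.

```python
import json

# Sketch of a policy rule that uses the denyAction effect.
# The resource type and tag below are illustrative assumptions only.
policy_rule = {
    "if": {
        "allOf": [
            {"field": "type", "equals": "Microsoft.Network/virtualNetworks"},
            {"field": "tags.environment", "equals": "prod"},
        ]
    },
    "then": {
        "effect": "denyAction",
        "details": {
            # Block delete calls against resources that match the "if" block.
            "actionNames": ["delete"],
        },
    },
}

print(json.dumps(policy_rule, indent=2))
```

Scoping the rule with a tag, as assumed here, is one way to protect only the resources that matter while leaving test environments free to be torn down.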

Virtual Machines

Generally available: Azure Premium SSD v2 Disk Storage is now available in more regions

Azure Premium SSD v2 Disk Storage is now available in Australia East, France Central, Norway East and UAE North regions. This next-generation storage solution offers advanced general-purpose block storage with the best price performance, delivering sub-millisecond disk latencies for demanding IO-intensive workloads at a low cost.

Expanded region availability makes this more interesting. But Azure Backup support has been in very limited preview since the spring.

Announcing the general availability of new Azure burstable virtual machines

We are announcing the general availability of the latest generations of Azure Burstable virtual machine (VM) series – the new Bsv2, Basv2, and Bpsv2 VMs based on the Intel® Xeon® Platinum 8370C, AMD EPYC™ 7763v, and Ampere® Altra® Arm-based processors respectively.

Faster and cheaper than the previous editions of B-Series VMs and they include ARM support too. The new virtual machines support all remote disk types such as Standard SSD, Standard HDD, Premium SSD and Ultra Disk storage.

Generally Available: Azure Update Manager

We are pleased to announce that Azure Update Manager, previously known as Update Management Center, is now generally available.

The controversial news is that Arc-managed machines will cost $5/month. I’m still not sold on this solution – it still feels less capable than legacy solutions like WSUS.

Announcing Public Preview of NVMe-enabled Ebsv5 VMs offering 400K IOPS and 10GBps throughput

Today, we are announcing a Public Preview of accelerated remote storage performance using Azure Premium SSD v2 or Ultra disk and selected sizes within the existing NVMe-enabled Ebsv5 family. The higher storage performance is offered on the E96bsv5 and E112ibsv5 VM sizes and delivers up to 400K IOPS (I/O operations per second) and 10GBps of remote disk storage throughput.

Even the largest SQL VM that I have worked with comes nowhere near these specs. The customer(s) that have justified this investment by Microsoft must be huge.

Azure savings plan for compute: How the benefit is applied

Organizations are benefiting from Azure savings plan for compute to save up to 65% on select compute services – and you could too. By committing to spending a fixed hourly amount for either one year or three years, you can save on plans tailored to your budget needs. But you may wonder how Azure applies this benefit.

It’s simple really. The system looks at your VMs, calculates the theoretical savings, and first applies your discount to the machines where you will save the most money, and then repeats until your discount is used.
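That ordering can be sketched as a greedy allocation. The Python below is a simplified model of a single hour, not the billing system: the `Usage` type, the function name, and every rate are hypothetical, and ranking by percentage saving is an assumption about what "save the most" means.

```python
from dataclasses import dataclass


@dataclass
class Usage:
    """One hour of usage for a resource; all rates are made-up example numbers."""
    name: str
    payg_rate: float   # pay-as-you-go price for the hour
    plan_rate: float   # discounted savings plan price for the hour (must be > 0)


def apply_savings_plan(usages, hourly_commitment):
    """Greedy sketch: spend the commitment on the biggest percentage savings first.

    Returns (pay-as-you-go charges per resource, unused commitment).
    """
    remaining = hourly_commitment
    payg_charges = {}
    ranked = sorted(usages, key=lambda u: 1 - u.plan_rate / u.payg_rate, reverse=True)
    for u in ranked:
        # Fraction of this resource's hour that the remaining commitment can cover.
        covered = min(1.0, remaining / u.plan_rate)
        remaining -= covered * u.plan_rate
        # Whatever is not covered is billed at the pay-as-you-go rate.
        payg_charges[u.name] = (1 - covered) * u.payg_rate
    return payg_charges, remaining


charges, unused = apply_savings_plan(
    [Usage("vm-a", payg_rate=1.00, plan_rate=0.50),
     Usage("vm-b", payg_rate=2.00, plan_rate=1.50)],
    hourly_commitment=1.25,
)
print(charges, unused)
```

In this example vm-a offers the larger percentage saving, so it is covered in full first; the leftover commitment covers half of vm-b, and the other half of vm-b is billed at the pay-as-you-go rate.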

General Availability: Share VM images publicly with community gallery – Azure Compute Gallery feature

With community gallery, a new feature of Azure Compute Gallery, you can now easily share your VM images with the wider Azure community. By setting up a ‘community gallery’, you can group your images and make them available to other Azure customers. As a result, any Azure customer can utilize images from the community gallery to create resources such as virtual machines (VMs) and VM scale sets.

This is a cool idea.

Trusted Launch for Azure VMware Solution virtual machines

Azure VMware Solution proudly introduces Public Preview of Trusted Launch for Virtual Machines. This advanced feature comprises Secure Boot, Virtual Trusted Platform Module (vTPM), and Virtualization-based Security (VBS), collectively forming a formidable defense against modern cyber threats.

A feature that was introduced in Windows Server 2016 Hyper-V.

Infrastructure-As-Code

Introduction to Azure DevOps Workload identity federation (OIDC) with Terraform

Workload identity federation is an OpenID Connect implementation for Azure DevOps that allows you to use short-lived, credential-free authentication to Azure without the need to provision self-hosted agents with managed identity. You configure a trust between your Azure DevOps organisation and an Azure service principal. Azure DevOps then provides a token that can be used to authenticate to the Azure API.

This looks like a more secure way to authenticate your pipelines. No secrets are stored and a trust between your DevOps organisation and Azure enables short-lived authentication with desired access rights/scopes.

Quickstart: Automate an existing load test with CI/CD

In this article, you learn how to automate an existing load test by creating a CI/CD pipeline in Azure Pipelines. Select your test in Azure Load Testing, and directly configure a pipeline in Azure DevOps that triggers your load test with every source code commit. Automate load tests with CI/CD to continuously validate your application performance and stability under load.

This is not something that I have played with but I suspect that you don’t want to do this against production systems!

General Availability: GitHub Advanced Security for Azure DevOps

Starting September 20th, 2023, the core scanning capabilities of GitHub Advanced Security for Azure DevOps can now be self-enabled within Azure DevOps and connect to Microsoft Defender for Cloud. Customers can automate security checks in the developer workflow using:

- Code Scanning: locates vulnerabilities in source code and provides remediation guidance.

- Secret Scanning: identifies high-confidence secrets and blocks developers from pushing secrets into code repositories.

- Dependency Scanning: discovers vulnerabilities with open-source dependencies and automates update alerts for developers.

This seems like a good direction to go but I’m told it’s quite pricey.

Networking

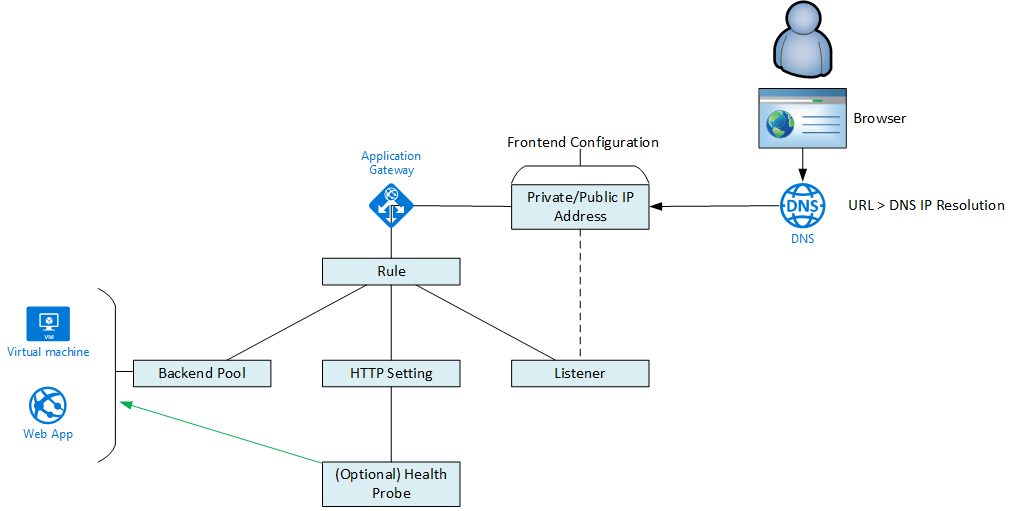

General availability: Sensitive Data Protection for Application Gateway Web Application Firewall

WAF running on Application Gateway now supports sensitive data protection through log scrubbing. When a request matches the criteria of a rule, and triggers a WAF action, that event is captured within the WAF logs. WAF logs are stored as plain text for debuggability, and any matching patterns with sensitive customer data like IP address, passwords, and other personally identifiable information could potentially end up in logs as plain text. To help safeguard this sensitive data, you can now create log scrubbing rules that replace the sensitive data with “******”.
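Conceptually, a scrubbing rule masks selected values before the log entry is stored. The Python below is only a toy illustration of that idea, assuming a log entry is a flat dictionary; the field names and the `scrub` helper are hypothetical and are not how the WAF is configured or implemented.

```python
MASK = "******"


def scrub(log_entry: dict, sensitive_fields: set) -> dict:
    """Return a copy of the log entry with the sensitive fields masked."""
    return {
        key: MASK if key in sensitive_fields else value
        for key, value in log_entry.items()
    }


# Made-up log entry; the keys are illustrative, not real WAF log fields.
entry = {"clientIp": "203.0.113.7", "requestUri": "/login", "password": "hunter2"}
print(scrub(entry, {"clientIp", "password"}))
```

The original entry is left untouched, so the unmasked values only ever exist in memory and never reach the stored log.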

Sounds good to me!

General availability: Gateway Load Balancer IPv6 Support

Azure Gateway Load Balancer now supports IPv6 traffic, enabling you to distribute IPv6 traffic through Gateway Load Balancer before it reaches your dual-stack applications.

With this support, you can now add IPv6 frontend IP addresses and backend pools to Gateway Load Balancer. This allows you to inspect, protect, or mirror both IPv4 and IPv6 traffic flows using third-party or custom network virtual appliances (NVAs).

Useful for security architectures where NVAs are being used.

Azure Backup

Preview: Cross Region Restore (CRR) for Recovery Services Agent (MARS) using Azure Backup

We are announcing the support of Cross Region Restore for Recovery Services Agent (MARS) using Azure Backup.

This makes sense. Let’s say I back up my on-prem data, located in Virginia, to Azure East US, in Boydton, Virginia. And then there’s a disaster in VA that wipes out my office and Azure East US. Now I can restore to a new location from the paired region replica.

Preview: Save Azure Backup Recovery Services Agent (MARS) passphrase to Azure Key Vault

Now, you can save your Azure Recovery Services Agent encryption passphrase in Azure Key Vault directly from the console, making the Recovery Services Agent installation seamless and secure.

This beats the old default option of saving it as a text file on the machine that you were backing up.

General availability: Selective Disk Backup and Restore in Enhanced Policy for Azure VM Backup

We are adding the “Selective Disk Backup and Restore” capability in Enhanced Policy of Azure VM Backup.

Be careful out there!

Storage

General Availability: Malware Scanning in Defender for Storage

Malware Scanning in Defender for Storage will be generally available September 1, 2023.

Please make sure that you read up on how much this will cost you. The Defender for Cloud (DfC) plans changed recently, and the pricing model for Storage plans changed to include this feature.

Azure Monitor

Public preview: Alerts timeline view

Azure Monitor alerts is previewing a new timeline view that simplifies the consumption experience of fired alerts. The new view has the following advantages:

- Shows fired alerts on a timeline

- Helps identify co-occurrence of alerts

- Displays alerts in the context of the resources they fired on

- Focuses on showing counts of alerts to better understand impact

- Supports viewing alerts by severity

- Provides a more intuitive discovery and investigation path

This might be useful if you are getting a lot of alerts.

Azure Virtual Desktop

Announcing general availability of Azure Virtual Desktop Custom Image Templates

Custom image templates allow admins to build a custom “golden image” using the Azure Virtual Desktop management user interface. Leverage a variety of built-in customizations or add your own customization scripts to install applications or configurations.

Why are they not using Azure Image Builder like I do?