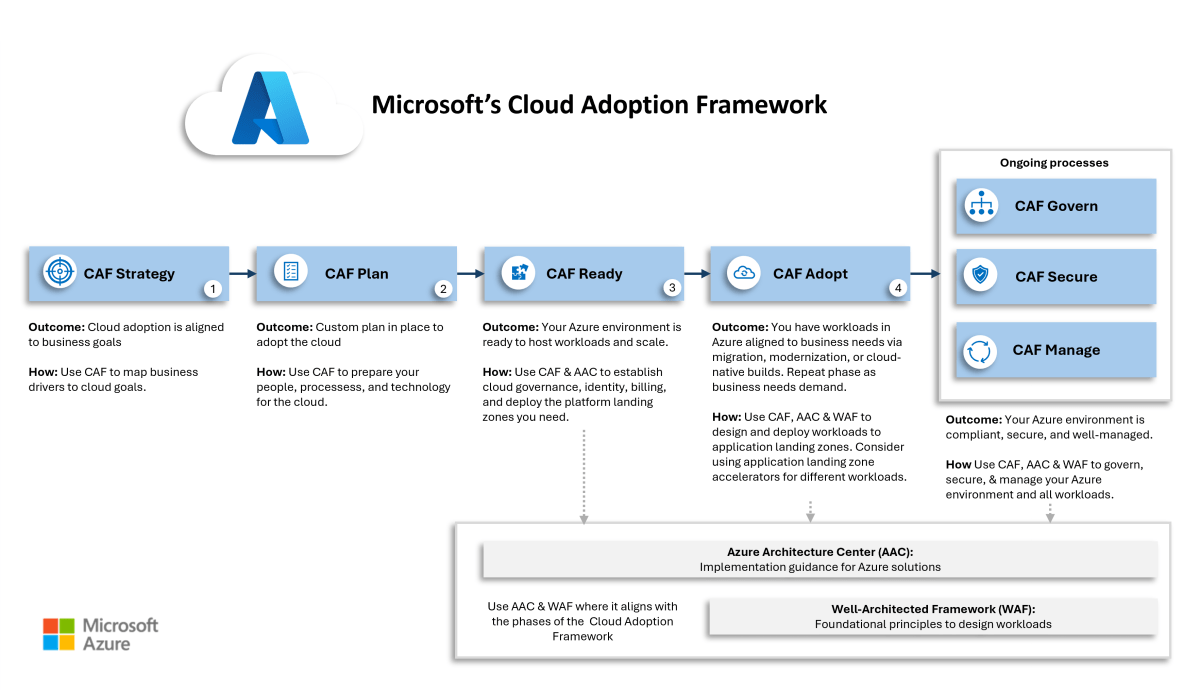

In this post, I will explain how I have interpreted the Cloud Adoption Framework for Microsoft Azure and how I apply it with my company, Cloud Mechanix.

Taking Theory Into Practice

In my last post, I explained two things:

- The value of the Cloud Adoption Framework (CAF)

- It is never too late to apply the CAF

I strongly believe in the value of the CAF, mostly because:

- I’ve seen what happens when an organisation rushes into an IT-driven cloud migration project.

- The CAF provides a process to avoid the issues caused by that rush.

The CAF does have an issue – it is not opinionated. The CAF has lots of discussion, but can be light on direction. That’s why I have slightly tweaked the CAF to:

- Take into account what I believe an organisation should do.

- Include the deliverables of each phase.

- Indicate the dependencies and flow between the phases.

- Highlight where there will be continuous improvement after the adoption project is complete.

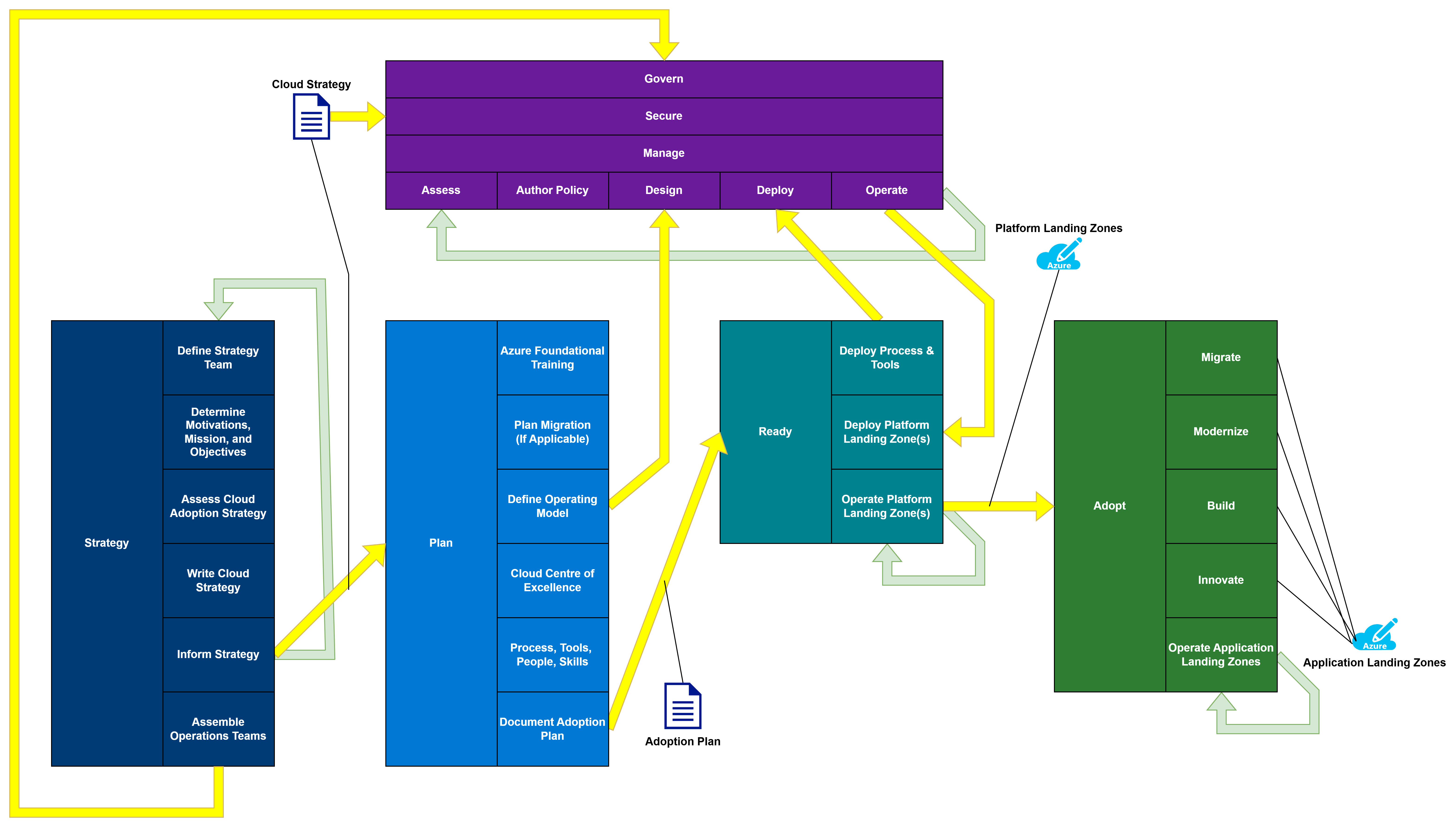

The Cloud Mechanix CAF

Here is a diagram of the Cloud Mechanix version of the Azure Cloud Adoption Framework:

There are two methodologies:

- Foundational

- Operational

Foundational Methodology

There are four phases in the Foundational Methodology:

- Strategy

- Plan

- Ready

- Adopt

Strategy

The Strategy phase is the key to making the necessary changes in the organisation. When an IT (infrastructure) manager starts a migration project, they:

- Have little to no knowledge of the organisation-wide needs of IT services.

- Have no influence outside their department, particularly with other departments/divisions/teams, to make changes.

- Possibly have little interest in any process/organisational/tool changes to how IT services are delivered.

The process will run sequentially as follows:

| Task | Description | Deliverable |
| --- | --- | --- |
| Define Strategy Team | Select the members who will participate in this phase. They should know the organisational needs/strategy. They must have authority to speak for the organisation. | A team that will review and publish the Cloud Strategy. |
| Determine Motivations, Mission, and Objectives | Identify and rank the organisation’s reasons to adopt the cloud. Create a mission statement to summarise the project. Define objectives to accomplish the mission statement/motivations and assign “definitions of success”. | Ranked motivations. A mission statement. Objectives with KPIs. |
| Assess Cloud Adoption Strategy | Review the existing cloud adoption strategy, if one exists. | A review of the cloud strategy, contrasting it with the identified motivations, mission statement, and objectives. |
| Write Cloud Strategy | A cloud strategy document will be created using the gathered information. This will record the information and provide a high-level plan, with timelines for the rest of the cloud adoption project. | A non-technical document that can be read and understood by members of the organisation. |
| Inform Strategy | The Cloud Strategy will be published. A clear communication from the Strategy Team will inform all staff of the mission statement and objectives, authorising the necessary changes. | A clear communication that will be understood by all staff. Note that the steps to produce and publish this strategy will be repeated on a regular basis to keep the cloud strategy up-to-date. |
| Assemble Operations Teams | The leadership of the Operational Methodology tracks will be selected and authorised to perform their project duties. | The team leaders will initiate their tracks, based on instructions from the Cloud Strategy. |

The Cloud Strategy is the primary parameter for the tracks in the Operational Methodology and the Plan phase of the Foundational Methodology.

Plan

The Plan phase is primarily focused on designing the organisational changes to how holistic IT services (not just IT infrastructure) are delivered.

| Task | Description | Deliverable |
| --- | --- | --- |
| Azure Foundational Training | The entry level of Azure training should be delivered to any staff participating in the Plan/Ready phases of the project. | The AZ-900 equivalent of knowledge should be learned by the staff members. |
| Plan Migration | An assessment of workloads should begin for any workloads that are candidates for migration to the cloud. This is optional, depending on the Cloud Strategy. | A detailed migration plan for each workload. |
| Define Operating Model | Define the new way that IT services (not just infrastructure) will be delivered. | An authorised plan for how IT services will be delivered in Azure. The operating model will be a parameter for the Design task in the Govern/Secure/Manage tracks in the Operational Methodology. |
| Cloud Centre of Excellence | A “special forces” team will be created to be the early adopters of Azure. They will be the first learners/users and will empower/teach other users over time. | A list of cross-functional IT staff with the necessary roles to deliver the operating model. |
| Process, Tools, People, and Skills | The processes for delivering the new operating model will be defined. The tools that will be used for the operating model will be tested, selected, and acquired. People will be identified for roles and reorganised (actually or virtually) as required. Skills gaps will be identified and resolved through training/acquisition. | The necessary changes to deliver the operating model will be planned and documented. Skills will be put in place to deliver the operating model. |
| Document Adoption Plan | A plan will be created to: (1) deploy the new tools, (2) build platform landing zones, and (3) prepare for Adopt. | An adoption plan is created and published to the agreed scope. |

The Adoption Plan will be the primary parameter for the Ready phase.

Ready

The purpose of Ready is to:

- Get the tooling in place.

- Prepare the platform landing zones to enable application landing zones.

There is a co-dependency between Ready and the Operational Methodology. The Operational Methodology will:

- Require the tooling to deploy the governance, security and management features, especially if an infrastructure-as-code approach will be used.

- Provide the governance, security, and management systems that will be required for the platform landing zones.

This means that there is a required ordering:

- Govern, Secure, and Manage must design their features.

- Ready must prepare the tooling.

- Govern, Secure, and Manage will deploy their features.

- Ready can continue.
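The ordering above is a small dependency graph, and it can be sketched in code. The following is a minimal illustration, assuming Python 3.9+ for the standard-library `graphlib` module. The task labels are my own shorthand for the four steps in the list, not official CAF names.

```python
from graphlib import TopologicalSorter

# Each task maps to the set of tasks that must finish before it can start.
# The labels are illustrative shorthand for the four steps listed above.
dependencies = {
    "Govern/Secure/Manage: Design": set(),
    "Ready: Deploy Process & Tools": {"Govern/Secure/Manage: Design"},
    "Govern/Secure/Manage: Deploy": {"Ready: Deploy Process & Tools"},
    "Ready: Deploy Platform Landing Zones": {"Govern/Secure/Manage: Deploy"},
}


def execution_order(deps):
    """Return one valid order in which the tasks can run."""
    return list(TopologicalSorter(deps).static_order())


order = execution_order(dependencies)
for step, task in enumerate(order, start=1):
    print(f"{step}. {task}")
```

If someone later adds a dependency that creates a loop (for example, making Design wait for the platform landing zones), `TopologicalSorter` raises a `CycleError`, which is a cheap way to catch a plan that cannot be sequenced.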

| Task | Description | Deliverable |
| --- | --- | --- |
| Deploy Process & Tools | The tools and processes for the operating model will be deployed and made ready. | This is required to enable Govern, Secure, and Manage to deploy their features. |
| Deploy Platform Landing Zones | Landing zones for features such as hubs, domain controllers, DNS, shared Web Application Firewalls, and so on, will be deployed. | The infrastructure features that are required by application landing zones will be prepared. |
| Operate Platform Landing Zones | Each platform landing zone is operated in accordance with the Well-Architected Framework. | Continuous improvement for performance, reliability, cost, management, and functionality. |

The platform landing zones are a technical delivery parameter for the Adopt phase.

Adopt

The nature of Adopt will be shaped by the cloud strategy. For example, an organisation might choose to do a simple migration because of a technical motivation. Another organisation might decide to build new applications in the cloud, while keeping old ones in on-premises hosting. Another might choose to focus entirely on market disruption by innovating new services. No one strategy is right, and a blend may be used. All of this is dictated by the mission statement and objectives that are defined during Strategy.

| Task | Description | Deliverable |
| --- | --- | --- |
| Migrate | A structured process will migrate the applications based on the migration plan generated during Plan. | An application landing zone for each migrated application. |
| Modernise | Applications are rearchitected/rebuilt based on the migration plan generated during Plan. | An application landing zone for each migrated application. |
| Build | New applications are built in Azure. | An application landing zone is created for each workload. |
| Innovate | New services to disrupt the market are researched, developed, and put into production. | An innovation process will eventually generate an application landing zone for each new service. |
| Operate Application Landing Zones | Each application landing zone is operated in accordance with the Well-Architected Framework. | Continuous improvement for performance, reliability, cost, management, and functionality. |

Operational Methodology

The Operational Methodology must not be overlooked. Its three tracks run in parallel with the Foundational Methodology and perform the functions needed to design, and then continuously operate and improve, the systems that protect the organisation.

The three tracks, each with identical tasks, are:

- Govern: Build, maintain, and improve governance systems.

- Secure: Build, maintain, and improve security systems.

- Manage: Build, maintain, and improve systems guidelines and management systems.

This approach assigns ownership of the Well-Architected Framework pillars to the three tracks:

- Govern: Cost optimisation

- Secure: Security

- Manage: Reliability, operational excellence, and performance efficiency
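As a small sketch, the ownership mapping can be written down as data and checked so that no pillar is left without an owner. The structure and function names below are illustrative assumptions; only the track-to-pillar assignments come from the list above.

```python
# The five pillars of the Azure Well-Architected Framework.
WAF_PILLARS = {
    "Reliability",
    "Security",
    "Cost optimisation",
    "Operational excellence",
    "Performance efficiency",
}

# Ownership as listed above: each Operational Methodology track owns
# one or more pillars. The dictionary layout itself is an assumption.
PILLAR_OWNERS = {
    "Govern": ["Cost optimisation"],
    "Secure": ["Security"],
    "Manage": ["Reliability", "Operational excellence", "Performance efficiency"],
}


def owner_of(pillar):
    """Return the track that owns the given pillar."""
    for track, pillars in PILLAR_OWNERS.items():
        if pillar in pillars:
            return track
    raise KeyError(pillar)


def unowned_pillars():
    """Return any pillar that no track has taken ownership of."""
    owned = {p for pillars in PILLAR_OWNERS.values() for p in pillars}
    return WAF_PILLARS - owned


print(owner_of("Reliability"))
print(unowned_pillars())
```

An empty result from `unowned_pillars()` confirms that the three tracks cover all five pillars between them.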

Each track has a separate team with:

- A leader

- Stakeholders

- An architect

- Implementors

Each is a separate track, but there is much crossover. For example, Azure Policy is perceived as a governance solution. However, Azure Policy might be used:

- By Govern to apply compliance requirements.

- By Secure to harden the Azure resources.

- By Manage to automate desired systems configurations.

Azure Policy assignments are inherited down through the Management Group hierarchy, so all three tracks will need to collaborate to design a governance architecture. For this reason, the same architect should sit in each team. The implementors may also be shared.
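To illustrate why the tracks must collaborate, here is a minimal sketch of inheritance in plain Python. It does not use the Azure SDK. The `Scope` class, the scope names, and the policy names are all hypothetical, and the model ignores exclusions and exemptions.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class Scope:
    """A management group or subscription in a simplified hierarchy."""
    name: str
    parent: Optional["Scope"] = None
    # Each assignment is (owning track, policy name); names are hypothetical.
    assignments: List[Tuple[str, str]] = field(default_factory=list)


def effective_policies(scope):
    """Collect assignments from the root down to the given scope."""
    chain = []
    node = scope
    while node is not None:
        chain.append(node)
        node = node.parent
    result = []
    for node in reversed(chain):  # root first, target scope last
        result.extend(node.assignments)
    return result


root = Scope("Root", assignments=[("Govern", "allowed-locations")])
platform = Scope("Platform", parent=root,
                 assignments=[("Secure", "deny-public-ip")])
hub = Scope("hub-subscription", parent=platform,
            assignments=[("Manage", "deploy-diagnostic-settings")])

for track, policy in effective_policies(hub):
    print(f"{track}: {policy}")
```

In this sketch the hub subscription ends up subject to policies from all three tracks, even though only one was assigned directly to it. A hierarchy designed by one track alone would shape what the other two can enforce.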

| Task | Description | Deliverable |
| --- | --- | --- |
| Assess | Perform an assessment of the current/future requirements and risks. | A risk assessment with a statement of measurable objectives. |
| Author Policy | A new policy is written, or an existing policy is updated to enforce the objectives from the assessment. | A policy document is written and published. |
| Design | A solution to implement the policy is designed. The goal is to automate as much of the policy as possible. Remaining exceptions should be clearly documented and communicated with guidelines. | High-level and low-level design documentation for the technical implementation. Clearly written and communicated guidelines for other requirements. |
| Deploy | This depends on Deploy Process & Tools from Ready. Deploy the technical solution. | The technical Azure (platform landing zones) and any third-party resources are deployed to implement governance, security, and management based on the published policies. |
| Operate | The systems are run and maintained. | Continuous improvement for performance, reliability, cost, management, and functionality. The Deploy Platform Landing Zone(s) in Ready can proceed. |

Note that Govern, Secure, and Manage should never finish. They should deliver a minimum viable product (MVP) to quickly enable Ready with a baseline of governance, security, and management best practices, as defined by the organisation. A regular review process will assess the policy against new risks/requirements/experience. This will start a new cycle of continuous improvement.

This approach should already be the method used for continuous risk assessment in IT Security or compliance. If it is, then the new Azure process can be blended with those existing processes.

Final Thoughts

The partners of a 3- or 4-letter consulting franchise do not have to get rich from your cloud journey. The Cloud Adoption Framework does not have to be a process that generates tens of thousands of pages of reports that will never be read. The focus of this approach is to:

- Enable cloud adoption.

- Use a rapid light-touch approach that avoids change friction.

For example, a Cloud Strategy workshop can be completed in 1.5 days. A high-level design for a minimum viable security policy can be discussed in under 1 day. The Cloud Strategy will, and should, evolve. The IT Security policy will evolve with regular (risk) assessments.

If You Like This Approach …

As I stated, this is the approach that I use with Cloud Mechanix. The focus is on results, including speed and correct delivery. This process can be done during the cloud journey, or it can be done afterwards if you realise that the cloud is not working for your organisation. Contact Cloud Mechanix if you would like to learn how I can facilitate your experience of the Cloud Adoption Framework.