Microsoft is finally making updates to Azure to reduce downtime for virtual machines when a host is rebooted.

Microsoft sent out the following announcement via the regular pricing and features update email to customers last night:

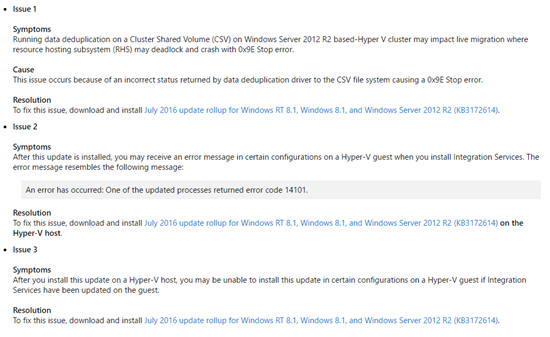

That sounds like Quick Migration. So Azure has caught up with Windows Server 2008 Hyper-V.

And it sounds like later in 2016, we’ll get Live Migration … yay … Windows Server 2008 R2 Hyper-V.

Seriously, though, Azure was never designed for the kind of high availability that we build into an on-premises Hyper-V cluster. Azure is cloud scale, with over 1 million physical hosts. A cluster has around 1000 hosts! When you build at that scale, HA is done in a different way. You encourage customers to design for an army of ants … lots of small deployments where HA is achieved through software design that leverages cloud fabric features, rather than through hardware. But when you have customers (from small to huge) with lots of legacy applications (e.g. a file server) that cannot be clustered in Azure without redesign, re-deployment, and expense, you start losing customers.

So Microsoft needed to make changes that acknowledged that many customer workloads are not cloud ready … and to be honest, at most of the prospects I’ve encountered where code was being written, the developers weren’t cloud ready either – they were sticking to the one DB server and one web server model that has plagued businesses since the 1990s.

These improvements are great news … and they’re just the tip of last night’s very big and busy iceberg.