These are my notes from watching the session online, presented by the man who runs Azure engineering. This high-level session is all about the hybrid cloud nature of Microsoft’s offerings. You choose where to run things, not the cloud vendor.

Data Centre

Microsoft expects that a high percentage of customers will want to deploy hybrid solutions: a mixture of on-premises, hosting partners, and public cloud (like Azure, O365, etc). IDC reckons 80% of enterprises will run a hybrid strategy by 2017. This has been Microsoft’s offering since day 1, and it continues that way. Microsoft believes there are legitimate scenarios that will continue to run on-premises.

A lot of learning from Azure has fed back into Hyper-V over the years. This continues in WS2016:

- Distributed QoS

- Network Controller

- Discrete device assignment (DDA)

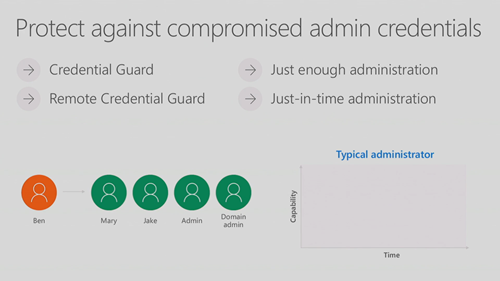

Security

The threats have evolved to target all the new endpoints that modern computing has enabled. Security starts with the basics: patching. Once an attacker is in, they expand their reach. Threats are from all over – internal and external. Advanced persistent threats from zero days and organized & financed attackers are a legit threat. It takes only 24-48 hours for an attacker to get from intrusion to domain admin access, and then they sit there, undiscovered, stealing and damaging, for a mean of 150 days!

Windows Server philosophy is to defend in depth:

Shielded Virtual Machines

The goal is that even if someone gets physical access to a host (internal threat), they cannot get into the virtual machines.

- VMs are encrypted at rest and in transit.

- A host must be trusted by a secured independent authority.

Jeff Woolsey, Principal Program Manager, comes on stage to do a demo. Admins can be bad guys! Imagine your SAN admin … he can copy VM virtual hard disks, mount them, and get your data. If that’s a DC VM, then he can steal the domain’s secrets very easily. That’s the easiest ID theft ever. Shielded VMs prevent this. The VMs are a black box that the VM owner controls, not the compute/networking/storage admins.

Jeff does a demo … easy mount and steal from un-shielded VHDX files. Then he goes to a shielded VM … wait no those are VMware VMDK files and “these guys don’t have shielded virtual machines”. He goes to the right folder, and mounting a VHDX fails because it’s encrypted using BitLocker, even though he is a full admin. He goes to Hyper-V Manager and tries a console connection to a shielded VM. There’s no console thumbnail and the console is not available – you have to use RDP. The shielded VM uses a unique virtual TPM chip to seal the key that protects the virtual disks. The VM processes are protected, which means that you cannot attach a debugger or inspect the RAM of the VM from the host.

This is a truly unique security feature. If you want secured VMs, then WS2016 Hyper-V (and it really requires VMM 2016 for real world) is the only way to do it – forget vSphere.

Software Defined

You get the same Hyper-V on-prem that Microsoft uses in Azure – no one else does that. Scalability has increased. Linux is a first class citizen – 30% of existing Azure VMs are Linux and they think that 60% of new virtual machines are Linux. The software defined networking in WS2016 came from Azure. Load Balancing is tested in Azure and running in the fabric for VMs in WS2016. VXLAN was added too. Storage has been re-invented with Storage Spaces Direct (S2D), lowering costs and increasing performance.

Management

System Center 2016 will be generally available with WS2016 in mid October (it actually isn’t GA yet, despite the misleading tweets). Noise control has been added to SCOM, allowing you to tune alerts more easily.

Application Platform

We have new cloud-first ways to deploy applications.

Nano Server is a refactored Windows Server with no UI – you manage this installation option (it’s not a license) remotely. You can deploy it quickly, it boots up in seconds, and it uses fewer resources than any other Windows option. You can use Nano Server on hosts, storage nodes, in VMs, or in containers. The application workload is where I think Nano makes the most sense.

Containers are native in WS2016, managed by Docker or PowerShell. Deploying applications is extremely fast with containers. Coupled with orchestration, the app people stop caring about servers – all compute is abstracted away for them. Windows Server Containers are the same kind of containers that people might already be aware of. Hyper-V Containers give each container its own kernel, so there is no shared kernel, and they are isolated by the DEP-backed Hyper-V hypervisor. Docker provides enterprise-ready management for containers, including WS2016. Anyone buying WS2016 gets the Docker Engine, with support from both Docker and MSFT.

Ben Golub, CEO of Docker, comes out. Chat show time … we’ll skip that.

Azure

The tenets of Azure are global, trusted, and hybrid. Note that last one.

Global:

This is Amsterdam (West Europe). The white buildings are data centers. One is the size of an American Football field (120 yards x around 53 yards). This isn’t one of the big data centers.

There are 1.6 million miles of fibre around the world, with a new mid-Atlantic one on the way. There are roughly 90 ExpressRoute (WAN connection) PoPs around the world. The platform is broad … CSP has over 5,000 line items in the price list. Over 600 new services and features shipped in the last 12 months.

Some new announcements are highlighted.

- New H-Series VMs are live on Azure. H is for high performance or HPC.

- L-Series VMs: Storage optimized.

- N-Series (already announced): NVIDIA GPU enabled.

An in-demand application is SAP HANA. Microsoft has worked with SAP to create purpose-built infrastructure for HANA with up to 32 TB OLAP and 3 TB OLTP.

New Networking Capabilities

Field-programmable gate arrays (FPGAs) have gone live in Azure, enabling network acceleration up to 25 Gbps. IPv6 support has been added. Also:

- Web application firewall (added to the Application Gateway)

- Azure DNS is GA

Compliance

Azure has the largest compliance portfolio in cloud scale computing. Don’t just look at the logo – look at what is supported by that certification. Azure has 50% more than AWS in PCI. 300% more than AWS in FedRAMP. 3 more certs were announced:

Azure was the first to get the EU-US Privacy Shield certification.

Hybrid

Microsoft means run it on-prem or in the cloud when they say hybrid (choice). Other vendors are limited to a network connection to hoover up all your systems and data (no choice).

SQL Server 2016 stretch database allows a table to span on-prem and Azure SQL. That’s a perfect example of hybrid in action.

Azure Stack Technical Preview 2 was launched. You can run it on-prem or with a partner service provider. Scenarios include:

- Data sovereignty

- Industrial: A private cloud running a factory

- Temporarily isolated environments

- Smart cities

The 2 big hurdles are software and hardware. This is why Microsoft is partnering with DellEMC, HPE and Lenovo on solutions for Microsoft Azure Stack. We see behind the HPE rack – 8 x 2U servers with SFP+ networking. There will be quarter rack stacks for getting started and bigger solutions.

Azure + Azure Stack

Bradley Bartz, Principal Group Program Manager, comes out on stage. He’s talking through a scenario. A company in Atlanta runs a local data center. Applications are moving to containers. Dev/test will be done in Azure (pay as you go). Production deployment will be done on-prem. An Azure WS2016 VM runs as a container host. OMS is being used by Ops to monitor all items in both clouds. Ops use desired state configuration (DSC) to automate the deployment of OMS management to everything by policy. This policy also stores credentials in Key Vault. When devs deploy a new container host VM, it is automatically managed by OMS.

He now logs in as an operator in the Azure Stack portal. We are shown a demo of the Add Image dialog. A new feature that will come is syndication of the Azure Marketplace from Azure to Azure Stack. Now when you create a new image in Azure Stack, you can draw down an image from the Azure Marketplace – this increases inventory for Azure Stack customers, and the market for those selling via the Marketplace. He adds the WS2016 with Containers image from the Marketplace. Now when the devs go into production, they can use the exact same template for their dev/test in Azure as they do for production on-prem.

When a dev is deploying from Visual Studio, they can pick the appropriate resource group in Azure, in Azure Stack, or even to a hosted Azure Stack elsewhere in the world. With Marketplace syndication, you get a consistent compute experience.
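The "same template, different target" idea from the demo can be sketched roughly as follows. This is only an illustration: the template shape, the target names, and the `plan_deployment` helper are all hypothetical, and nothing here is a real ARM template schema or Azure API call.

```python
from copy import deepcopy

# Hypothetical, simplified template; NOT a real ARM template schema.
CONTAINER_HOST_TEMPLATE = {
    "image": "WS2016 with Containers",
    "role": "container-host",
    "parameters": {"vm_name": None, "resource_group": None},
}

# Hypothetical deployment targets; the names are illustrative only.
TARGETS = {
    "dev-test": {"cloud": "Azure", "resource_group": "rg-devtest"},
    "production": {"cloud": "Azure Stack", "resource_group": "rg-prod"},
}


def plan_deployment(template, target_name, vm_name):
    """Combine the shared template with target-specific parameters."""
    target = TARGETS[target_name]
    plan = deepcopy(template)  # leave the shared template untouched
    plan["parameters"]["vm_name"] = vm_name
    plan["parameters"]["resource_group"] = target["resource_group"]
    plan["cloud"] = target["cloud"]
    return plan


dev = plan_deployment(CONTAINER_HOST_TEMPLATE, "dev-test", "ctr-host-01")
prod = plan_deployment(CONTAINER_HOST_TEMPLATE, "production", "ctr-host-01")
print(dev["cloud"], "->", dev["image"])
print(prod["cloud"], "->", prod["image"])
```

Only the target-specific parameters differ between the two plans; the image and role come from the one shared template, which is the consistency that Marketplace syndication is meant to make possible.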

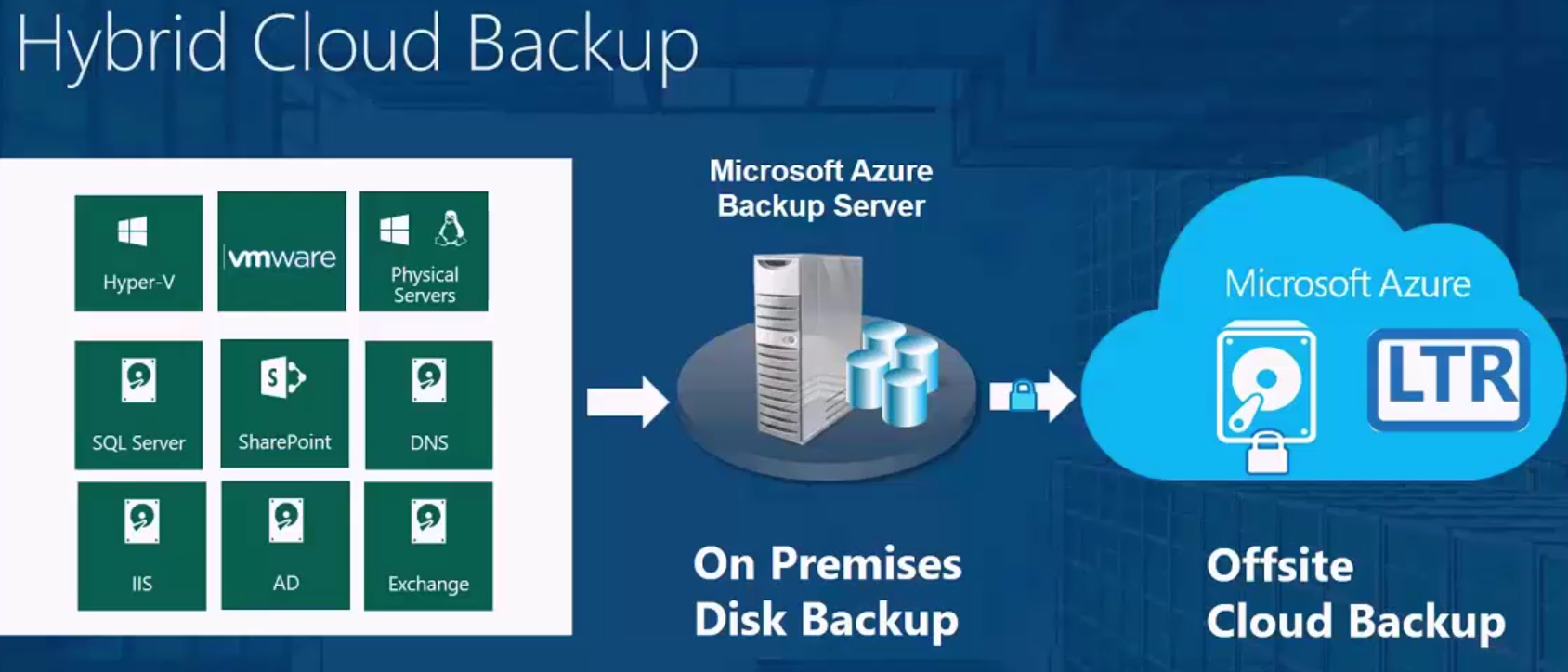

Hybrid Cloud Management

There’s more to hybrid than deployment. You need to be able to manage the footprints, including others such as AWS, vSphere and Hyper-V, as one. Microsoft’s strategy is OMS working with or without System Center. New features:

- Application dependency mapping allows you to link the components that make up your service, and identify failing pieces and their impacts.

- Network performance monitoring allows you to see an application’s view of network bottlenecks or link failures.

- Automation & Control. Patch management is coming to Linux. Patch management will also have crowd sourced feedback on patches.

- Azure Security Center will be converging with OMS “by the end of the year” – no mention if that was MSFT year or calendar year.

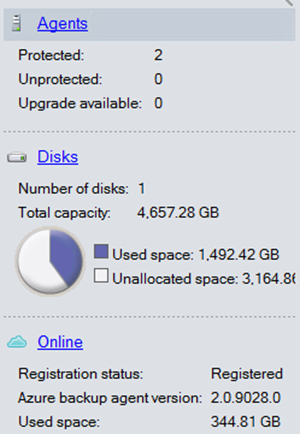

- Backup and DR have had huge improvements over the last 6 months.

Jeff Woolsey comes back out to do an OMS demo. He goes into Alert Management (integration with SCOM) to see various kinds of alerts. He drills into an alert, and there are nice graphics that show a clear performance issue. Application Dependency Monitor shows all the pieces that make up a service. This is presented graphically, and one of the boxes has an alert. There is a SQL assessment alert. He drills into a performance alert. We see that there’s a huge chunk of knowledge, based on Microsoft’s interactions with customers. The database needs to be scaled out. Instead of doing this by hand, he makes a runbook to remediate the problem automatically (it was created from a Microsoft gallery item). He associates the runbook with the alert – the runbook will run automatically after an alert.

3 clicks in a new alert and he allows an incident to be created in a third-party service desk. He associates the alert with another click or two. The problem can now auto-remediate, and an operator is notified and can review it.
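The flow in that demo (associate a runbook with an alert, let it run automatically, and raise an incident for an operator to review) might look something like this minimal sketch. Every name here is hypothetical; it does not call any OMS, Azure Automation, or service desk API.

```python
# Hypothetical names throughout; no real OMS or Azure Automation API is used.
runbooks = {}   # alert name -> remediation function
incidents = []  # stand-in for a third-party service desk queue


def associate(alert_name, runbook):
    """Link a runbook to an alert so it runs when that alert fires."""
    runbooks[alert_name] = runbook


def scale_out_database(alert):
    """Placeholder remediation: a real runbook would scale out the database."""
    return f"scaled out {alert['resource']}"


def handle_alert(alert):
    """Run the associated runbook (if any) and log an incident for review."""
    runbook = runbooks.get(alert["name"])
    result = runbook(alert) if runbook else "no runbook associated"
    incident = {
        "alert": alert["name"],
        "resource": alert["resource"],
        "remediation": result,
    }
    incidents.append(incident)
    return incident


associate("SQL performance degraded", scale_out_database)
print(handle_alert({"name": "SQL performance degraded", "resource": "orders-db"}))
```

An incident is recorded whether or not a runbook exists, so an operator still sees alerts that nothing has been associated with yet.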

He goes into the Security and Audit area and a map shows malicious outbound traffic, identified using intelligence from Microsoft’s Digital Crimes Unit. Notable issues highlight actions that IT needs to take care of (missing updates, malware scanning, suspicious logins, etc). Re patching, he creates an update run in Updates to patch on-prem servers.