Speaker: Ajay Jagannathan, Principal PM Manager, Microsoft Data Platform Group. He leads the @mssqltiger team.

I think that this is the first ever SQL Server session that I’ve attended in person at a TechEd/Ignite. I was going to go to a PaaS session instead, but I’ve got so many customers running SQL Server on Azure VMs that I thought that this was important for me to see. I also thought it might be useful for a lot of readers.

Microsoft Data Platform

Starting with SQL 2016, the goal was to make the platform consistent on-premises, with Azure VMs, or in Azure SQL. With Azure, scaling is possible using VM features such as scale sets. You can offload database loads, so analytics can be on a different tier:

- On-premises: SQL Server and SQL Server (DW) Reference architecture

- IaaS: SQL Server in Azure VM with SQL Server (DW) in Azure VM.

- PaaS: Azure SQL database with Azure SQL data warehouse

Common T-SQL surface area. Simple cloud migration. Single vendor for support. Develop once and deploy anywhere.

Azure VM

- Azure load balancer routes traffic to the VM NIC.

- The compute is separate from the storage.

- The virtual machine issues operations to the storage.

SQL Server in Azure VM – Deployment Options

- Microsoft gallery images: SQL Server 2008 R2 – 2017, SQL Web, Std, Ent, Dev, Express. Windows Server 2008 R2 – WS2016. RHEL and Ubuntu.

- SQL Licensing: PAYG based on number of cores and SQL edition. Pay per minute.

- Bring your own license: Software Assurance required to move/license SQL to the cloud if not doing PAYG.

- Creates in ~10 minutes.

- Connect via RDP, ADO.NET, OLEDB, JDBC, PHP …

- Manage via Portal, SSMS, PowerShell, CLI, System Center …

It’s a VM, so nothing really changes from an on-premises VM in terms of management.

Every time there’s a critical update or service pack, they update the gallery images.

VM Sizes

They recommend the DS_v2- or FS-Series with Premium Storage. For larger loads, they recommend the GS- and LS-Series.

For other options, there’s the ES_v3 series (memory optimized DS_v3), and the M-Series for huge RAM amounts.

VM Availability

Availability sets distribute VMs across fault and update domains in a single cluster/data centre. You get a 99.95% SLA on the service for valid configurations. Use this for SQL clusters.
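To put the 99.95% figure in perspective, here is a quick calculation of the downtime that such an SLA permits. This is my own arithmetic rather than something shown in the session, and the 30-day month is an assumption.

```python
# Downtime budget implied by an availability SLA.
# Assumption: a 30-day month. The 99.95% figure is the one quoted in the session.

def allowed_downtime_minutes(sla_percent: float, days: int = 30) -> float:
    """Return the minutes of downtime permitted over `days` days."""
    total_minutes = days * 24 * 60
    return total_minutes * (100 - sla_percent) / 100


if __name__ == "__main__":
    # 43,200 minutes in 30 days, of which 0.05% may be downtime.
    print(round(allowed_downtime_minutes(99.95), 1))  # 21.6
```

In other words, the SLA still allows roughly 20 minutes of outage per month, which is one reason to pair availability sets with SQL-level high availability.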

Managed disks offer easier IOPS management, particularly with Premium Disks (storage account has a limit of 20,000 IOPS). Disks are distributed to different storage stamps when the VM is in an availability set – better isolation for SQL HA or AlwaysOn.
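The storage account limit is the reason that unmanaged disks needed careful placement. A rough sketch of the arithmetic follows. The 20,000 IOPS limit is the figure above; the 500 IOPS per disk is the Standard disk figure quoted in the demo later in these notes, and the function name is just for illustration.

```python
# How many fully loaded disks fit under a storage account's IOPS limit.
# Assumption: every disk is driven at its maximum IOPS at the same time.

def max_disks_per_account(account_iops_limit: int, iops_per_disk: int) -> int:
    """Return the number of disks that can run at full IOPS in one account."""
    if iops_per_disk <= 0:
        raise ValueError("iops_per_disk must be positive")
    return account_iops_limit // iops_per_disk


if __name__ == "__main__":
    # 20,000 IOPS per account, 500 IOPS per Standard disk.
    print(max_disks_per_account(20_000, 500))  # 40
```

With managed disks, Azure handles this placement for you, so the calculation only matters if you are still using storage accounts directly.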

High Availability

Provision a domain controller replica in a different availability set to your SQL VMs. This can be in the same domain as your on-prem domain (ExpressRoute or site-to-site VPN).

Use (Get-Cluster).SameSubnetThreshold = 20 to relax Windows Cluster failure detection for transient network failure.

There is no shared storage in Azure, so configure the cluster to ignore storage. They recommend AlwaysOn. New-Cluster -Name $ClusterName -NoStorage -Node $LocalMachineName

Configure Azure load balancer and backend pool. Register the IP address of listener.

There are step-by-step instructions in the Microsoft documentation.

SQL Server Disaster Recovery

Store database backups in geo-replicated readable storage. Restore backups in a remote region (~30 min).

Availability group options:

- Configure Azure as remote region for on-premises

- Configure On-prem as DR for Azure

- Replicate in Azure Remote region – failover to remote in ~30s. Offload remote reads.

Automated Configuration

Some of these are provided by MS in the portal wizard:

- Optimization to a target workload: OLTP/DW

- Automated patching and shutdown – the latter is very new, and is intended to reduce costs for dev/test workloads by shutting VMs down at the end of the workday.

- Automated backup to a storage account, including user and system databases. Useful for a few databases, but there’s another option coming for larger collections.

Storage Options

They recommend LRS only, to keep write performance at a maximum. GRS storage is slower, and could lead to database files being written/replicated before the log files.

Premium Storage: high IOPS and low latency. Use Storage Spaces to increase capacity and performance. Enable host-based read caching on data disks for better IOPS/latency.

Backup to Premium Storage is 6x faster. Restore is 30x faster.

Azure VM Connectivity

- Over the Internet.

- Over site-to-site tunnel: VPN or ExpressRoute

- Apps can connect transparently via a listener, e.g. Load Balancer.

Demo: Deployment

The speaker shows a PowerShell script. Not much point in blogging this. I prefer JSON anyway.

http://aka.ms/tigertoolbox is the script/tools/demos repository.

Security

- Physical security of the datacenter

- Infrastructure security: virtual network isolation, and storage encryption including bring-your-own-key self-service encryption with Key Vault. Best practices and monitoring by Security Center.

- Many certifications

- SQL Security: auto-patching, database/backup encryption, and more.

VM Configuration for SQL Server

- Use D-Series or higher.

- Use Storage Spaces for performance of disks. Use Simple disks: the number of columns should equal the number of disks. For OLTP use 64KB interleave and use 256KB for data warehouse.

- Do not use the system drive.

- Put TempDB, logs, and databases on different volumes because of their different write patterns.

- 64K allocation unit size.

- Enable read caching on disks for data files and TempDB.

- Do not use GRS storage.
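The Storage Spaces guidance above (columns equal to the number of disks, 64KB interleave for OLTP, 256KB for data warehouse) can be sketched as a small planning helper. This is my own illustration, not something from the session; the names `StripePlan` and `plan_simple_space` are hypothetical, and the full-stripe size is simply columns multiplied by interleave for a Simple (striped, no resiliency) layout.

```python
from dataclasses import dataclass

# Interleave sizes quoted in the session, keyed by workload type.
INTERLEAVE_KB = {"oltp": 64, "dw": 256}


@dataclass(frozen=True)
class StripePlan:
    columns: int           # one column per data disk
    interleave_kb: int     # data written to one disk before moving to the next
    interleave_bytes: int  # same value in bytes
    full_stripe_kb: int    # data written across all columns in one pass


def plan_simple_space(disk_count: int, workload: str) -> StripePlan:
    """Return a Simple-layout striping plan for the given disks and workload."""
    if disk_count < 1:
        raise ValueError("disk_count must be at least 1")
    key = workload.lower()
    if key not in INTERLEAVE_KB:
        raise ValueError(f"unknown workload {workload!r}; expected 'oltp' or 'dw'")
    interleave = INTERLEAVE_KB[key]
    return StripePlan(
        columns=disk_count,
        interleave_kb=interleave,
        interleave_bytes=interleave * 1024,
        full_stripe_kb=disk_count * interleave,
    )


if __name__ == "__main__":
    print(plan_simple_space(4, "oltp"))
    print(plan_simple_space(8, "dw"))
```

As far as I know, the column count and the interleave in bytes are what you would hand to the -NumberOfColumns and -Interleave parameters of New-VirtualDisk when building the virtual disk in PowerShell.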

SQL Configuration

- Enable instant file initialization

- Enable locked pages

- Enable data page compression

- Disable auto-shrink for your databases

- Backup to URL with compressed backups – useful for a few VMs/databases. SQL 2016 does this very quickly.

- Move all databases to data disks, including system databases (separate data and log). Use read caching.

- Move SQL Server error log and trace file directories to data disks

Demo: Workload Performance of Standard Versus Premium Storage

A scripted demo. 2 scripts doing the same thing – one targeting a DB on Standard disk (up to 500 IOPS) and the second targets a DB on a Premium P30 (4,500 IOPS) disk. There’s table creation, 10,000 rows, inserts, more tables, etc. The scripts track the time required.

It takes a while – he has some stats from previous runs. There’s only a 25% difference in the test. Honestly – that’s not indicative of the differences. He needs a better demo.
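As a rough sanity check on why 25% seems low, here is the gap you would expect if the workload were limited purely by disk IOPS. That is an assumption that the demo evidently did not meet. The IOPS figures are the ones quoted for the demo, and the I/O count is a made-up number for illustration only.

```python
# Best-case comparison of two disks for a purely IOPS-bound workload.
# Assumption: the workload issues a fixed number of I/Os and nothing else
# (CPU, logging, network) limits it. Real workloads rarely behave like this.

def seconds_for_ios(io_count: int, iops: int) -> float:
    """Return the time to complete `io_count` I/Os at a sustained `iops` rate."""
    if iops <= 0:
        raise ValueError("iops must be positive")
    return io_count / iops


def ideal_speedup(slow_iops: int, fast_iops: int) -> float:
    """Return how many times faster the fast disk is in the IOPS-bound case."""
    return fast_iops / slow_iops


if __name__ == "__main__":
    io_count = 90_000  # hypothetical number of I/Os, for illustration only
    print(seconds_for_ios(io_count, 500))    # 180.0 seconds on Standard
    print(seconds_for_ios(io_count, 4_500))  # 20.0 seconds on Premium P30
    print(ideal_speedup(500, 4_500))         # 9.0
```

A 9x ceiling versus a measured 25% suggests that the demo scripts were bound by something other than disk IOPS.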

An IFI test shows that the bigger the database file is, the bigger the difference is in terms of performance – this makes sense considering the performance nature of flash storage.

Seamless Database Migration

There is a migration guide, and tools/services. http://datamigration.microsoft.com. One-stop shop for database migrations. Guidance to get from source to target. Recommended partners and case studies.

Tools:

- Data Migration Assistant: An analysis tool to produce a report.

- Azure Database Migration Service (free service that runs in a VM): Works with Oracle, MySQL, and SQL Server to SQL Server, Azure SQL, Azure SQL Managed Instance. It works by backing up the DB on the source, moving the backup to the cloud, and restoring the backup.

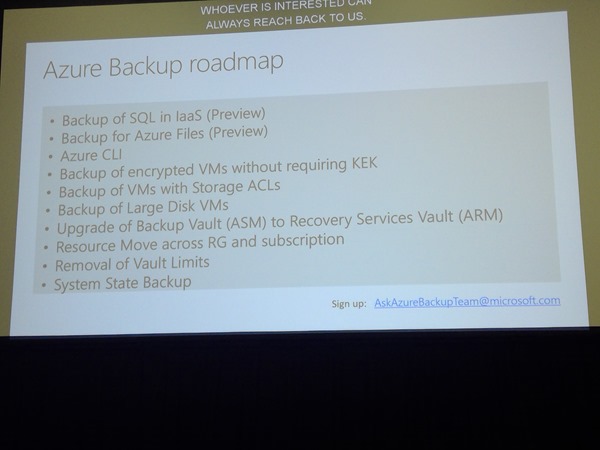

Azure Backup

Today, SQL Server can back up from the SQL VM (Azure or on-prem) to a storage account in Azure. It’s all managed from SQL Server. Very distributed, no centralized reporting, difficult/no long-term retention. Very cheap.

Azure Backup will offer centralized management of SQL Backup in an Azure VM. In preview today. Managed from the Recovery Services Vault. You select the type of backup, and a discovery will detect all SQL instances in Azure VMs, and their databases. A service account is required for this and is included in the gallery images. You must add this service for custom VMs. You then configure a backup policy for selected DBs. You can define a full backup policy, incremental, and transactional backup policy with SQL backup compression option. The retention options are the familiar ones from Azure Backup (up to 99 years by the looks of it). The backup is scheduled and you can do ad-hoc/manual backups as usual with Azure Backup.

You can restore databases too – there’s a nice GUI for selecting a restore date/time. It looks like quite a bit of work went into this. This will be the recommended solution for centralized backup of lots of databases, and for those wanting long term retention.

Backup Verification is not in this solution yet.