I’ve talked about our lab in the past, and I’ve recorded and shown a few demos from it. Building a demo is one thing; building a lab environment is a whole other thing. I need to be able to build lots of different demos: W2008 R2 (the OS currently supported by System Center 2012), Windows Server 8 Hyper-V, OS deployment, and maybe even other things. Beyond demos, I also want to learn for myself and use the lab to teach techies from our customer accounts. That means I need something that I can wipe and rebuild quickly.

I could have used WDS, MDT, or ConfigMgr for this. But this is a lab, and I want as few dependencies as possible. I also want to isolate the physical lab environment from the demo environment. Here’s how I’m doing it:

I’ve installed Windows Server 2008 R2 SP1 Datacenter as the standard OS on the lab hardware. Why not Windows Server 8 Beta? For reliability, I want a supported RTM release as the basis of everything. This doesn’t prevent Windows Server 8 Beta from being deployed, as you’ll see soon enough.

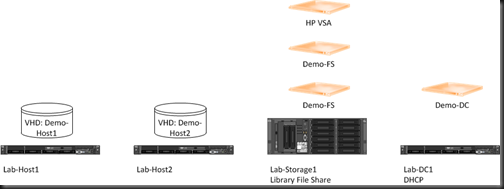

Lab-DC1 is a physical machine: it’s actually an HP 8200 Elite Microtower PC with some extra drives. It is the AD DC (forest called lab.internal) for the lab environment and provides DHCP for the network. I use a remote control product so I can get to it easily, because the ADSL line in the lab doesn’t allow inbound HTTPS for an RDS Gateway. This DC role is intended only for the lab environment. For demos, I’ve enabled Hyper-V on this machine (not a supported configuration), and I’ll run a virtual DC for the demos that I build, with a forest called demo.internal (nothing to do with lab.internal).

Lab-Storage1 is an HP DL370 G7 with two 300 GB drives, twelve 2 TB drives, and 16 GB of RAM. This box serves a few purposes:

- It hosts the library share with all the ISOs, tools, scripts, and so forth.

- Hyper-V is enabled, which lets me run an HP P4000 Virtual SAN Appliance (VSA) to provide an iSCSI SAN that I can use for clustering and backup scenarios.

- I have spare capacity to create storage VMs for demos, e.g. a scale-out file server for SMB Direct (SMB 2.2).

Then we get on to Lab-Host1 and Lab-Host2. As the names suggest, these are intended to be Hyper-V hosts. I’ve installed Windows Server 2008 R2 SP1 on these machines, but the Hyper-V role isn’t enabled. It’s literally an OS with network access, which is enough for me to copy a VHD from the storage server. Here’s what I’ve done:

- There’s a folder called C:\VHD on Lab-Host1 and Lab-Host2.

- I’m enabling boot-from-VHD for the two hosts from C:\VHD\boot.vhd; pay attention to the bcdedit commands in this post by Hans Vredevoort.

- I’m using Wim2VHD to create VHD files from the Windows Server ISO files.

- I can copy any VHD to the C:\VHD folder on the two hosts, and rename it to boot.vhd.

- I can then reboot the physical host into the OS in boot.vhd and configure it as required. Maybe I create a template from it: generalize it and store it back in the library.

- The OS in boot.vhd can be configured as a Hyper-V host, clustered if required, and connected to the VSA iSCSI SAN.
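The boot-from-VHD step comes down to a few bcdedit calls: copy the current boot entry, then point its `device` and `osdevice` at the VHD. Here’s a minimal Python sketch that only assembles and runs those commands. It assumes the C:\VHD\boot.vhd layout above, English-language bcdedit output when parsing the new entry’s GUID, and an elevated prompt on the physical host; the function names are hypothetical, and Hans’s post remains the reference for the exact commands.

```python
import re
import subprocess

# Assumed location of the VHD on each host. "[C:]" is bcdedit's way of
# naming the partition that holds the VHD file.
VHD_PATH = r"[C:]\VHD\boot.vhd"

# A GUID in braces: 32 hex digits plus 4 hyphens = 36 characters.
GUID_RE = re.compile(r"\{[0-9a-fA-F-]{36}\}")


def parse_copied_guid(bcdedit_output: str) -> str:
    """Return the GUID of the entry created by `bcdedit /copy`.

    Assumes English output such as:
    'The entry was successfully copied to {0cb3b571-...}.'
    """
    match = GUID_RE.search(bcdedit_output)
    if match is None:
        raise ValueError("no GUID found in bcdedit output")
    return match.group(0)


def vhd_boot_commands(guid: str, vhd: str = VHD_PATH) -> list[list[str]]:
    """Build the bcdedit calls that point a boot entry at the VHD."""
    return [
        ["bcdedit", "/set", guid, "device", f"vhd={vhd}"],
        ["bcdedit", "/set", guid, "osdevice", f"vhd={vhd}"],
        # Forces HAL detection at boot, as in Microsoft's native VHD boot
        # steps for Windows 7 / Windows Server 2008 R2.
        ["bcdedit", "/set", guid, "detecthal", "on"],
    ]


def create_vhd_boot_entry(description: str = "Boot from VHD") -> str:
    """Copy the current boot entry, repoint it at the VHD, return its GUID.

    Windows only; must run from an elevated prompt on the physical host.
    """
    result = subprocess.run(
        ["bcdedit", "/copy", "{current}", "/d", description],
        check=True, capture_output=True, text=True,
    )
    guid = parse_copied_guid(result.stdout)
    for cmd in vhd_boot_commands(guid):
        subprocess.run(cmd, check=True)
    return guid
```

Splitting the GUID parsing and command building out of `create_vhd_boot_entry` means you can print the commands and check them by eye before letting anything touch the BCD store.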

Building a new demo now is a matter of:

- Replace the virtual DC on Lab-DC1 and configure it as required.

- Provision storage on the iSCSI SAN as required.

- Deploy any virtual file servers if required, and configure them.

- Replace the boot.vhd on the two hosts with one from the library. Boot it up and configure it as required.
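Swapping the boot.vhd on a host is just a file copy and a rename, done while the host is running its physical Windows Server 2008 R2 install (you can’t overwrite the VHD you’re currently booted from). Here’s a minimal Python sketch of that step. `stage_boot_vhd` and the temporary `boot.vhd.partial` name are hypothetical, and copying under a temporary name first is an assumption of this sketch rather than part of the setup described here: it means an interrupted copy never leaves a half-written boot.vhd.

```python
import os
import shutil
from pathlib import Path


def stage_boot_vhd(library_vhd: str, vhd_dir: str = r"C:\VHD") -> Path:
    """Copy a VHD from the library and make it the host's boot.vhd.

    Run this from the physical host OS, not from inside boot.vhd.
    """
    target_dir = Path(vhd_dir)
    target_dir.mkdir(parents=True, exist_ok=True)
    staging = target_dir / "boot.vhd.partial"  # hypothetical temp name
    final = target_dir / "boot.vhd"
    # Copy the whole file under the temporary name first...
    shutil.copyfile(library_vhd, staging)
    # ...then swap it in; os.replace overwrites any existing boot.vhd.
    os.replace(staging, final)
    return final
```

Usage would be something like `stage_boot_vhd(r"\\Lab-Storage1\Library\WS8-Beta.vhd")`, where the share and file names are placeholders for wherever your library lives.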

Basically, I get whole new OSs just by copying VHD files around the network, with the hosts and storage primarily connected by 10 GbE.
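To put the 10 GbE point in rough numbers, copy time is about size × 8 ÷ link speed. The sketch below assumes a hypothetical 40 GB VHD and adds an efficiency factor for how much of the line rate you actually achieve; in practice the disks at either end can become the bottleneck well before a 10 GbE link does, so treat these figures as best cases.

```python
def copy_seconds(size_gb: float, link_gbps: float, efficiency: float = 1.0) -> float:
    """Rough wall-clock time to copy a file over a network link.

    size_gb: file size in decimal gigabytes (10**9 bytes).
    link_gbps: nominal link speed in gigabits per second.
    efficiency: assumed fraction of line rate actually achieved
        (protocol overhead, disk speed); 1.0 is the theoretical best case.
    """
    if size_gb < 0 or link_gbps <= 0 or not 0 < efficiency <= 1:
        raise ValueError("invalid size, link speed, or efficiency")
    # Bytes -> bits (x8), divided by the effective bits per second.
    return size_gb * 8 / (link_gbps * efficiency)


# Hypothetical 40 GB VHD:
print(copy_seconds(40, 10))  # 32.0 seconds at full 10 GbE line rate
print(copy_seconds(40, 1))   # 320.0 seconds on 1 GbE
```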

If I were working with just a single VHD all the time, I’d check out Mark Minasi’s Steadier State.