In this post, I want to discuss how one should design network security in Microsoft Azure, dispensing with past patterns and combatting threats that are crippling businesses today.

The Past

Network security did not change much for a very long time. The classic network design is focused on an edge firewall. “All the bad guys are trying to penetrate our network from the Internet” so we’ll put up a very strong wall at the edge. With that approach, you’ll commonly find the “DMZ” network; a place where things like web proxies and DNS proxies isolate interior users and services from the Internet.

The internal network might be made up of two or more VLANs. For example, one or more client device VLANs and a server VLAN. While the route between those VLANs might pass through the firewall, it probably doesn’t; they are really “routed” through a smart core switch stack and there is limited to no firewall isolation between those VLANs.

This network design is fertile soil for malware. Ports usually are not left open to attack on the edge firewall. Hackers aren’t normally going to brute force their way through a firewall. There are easier ways in such as:

- Send an “invoice” PDF to the accounting department that delivers a trojan horse.

- Impersonate someone, ideally someone that travels and shouts a lot, to convince a helpful IT person to reset a password.

- Target users via phishing or spear phishing.

- Compromise some upstream include that developers use and use it to attack from the servers.

- Use a SQL injection attack to open a command prompt on an internal server.

- And on and on and …

In each of those cases, the attack comes from within. The spread of the blast (the attack) is unfettered. The blast area (a term used to describe the spread of an attack) is the entire network.

Secure Zones To The Rescue!

Government agencies love a nice secure zone architecture. This is a design where sensitive systems, such as those storing GDPR data or secrets, are placed on an isolated network.

Some agencies will even create a whole duplicate network that is isolated, forcing users to have two PCs – one “regular” one on the Internet-connected network and a “secure” PC that is wired onto an isolated network with limited secret services.

Realistically, that isolated network is of little value to most, but if you have that extreme a need – then good luck. By the way, that won’t work in The Cloud 🙂 Back to the more regular secure zone …

A special VLAN will be deployed and firewall rules will block all traffic into and out of that secure zone. The user experience might be to use Citrix desktops, hosted in the secure zone, to access services and data in that secure zone. But then reality starts cracking holes in the firewall’s deny all rules. No line of business app lives alone. They all require data from somewhere. Or there are integrations. Printers must be used. Scanners need to scan and share data. And legacy apps often use:

- Domain (ADDS) credentials (how many ports do you need for that!!!)

- SMB (TCP 445) for data transfer and integration

Over time, “deny all” becomes a long list of allow * from X to *, and so on, with absolutely no help from the app vendors.

The theory is that if an attack is commenced, then the blast area will be limited to the client network and, if it reaches the servers, it will be limited to the Internal network. But this design fails to understand that:

- An attack can come from within. Consider the scenario where compromised runtimes are used or a SQL injection attack breaks out from a database server.

- All the required integrations open up holes between the secure zone and the other networks, including those legacy protocols that things like ransomware live on.

- If one workload in the secure zone is compromised, they all are because there is no network segmentation inside of the VLAN.

And eventually, the “secure zone” is no more secure than the Internal network.

Don’t Block The Internet!!!

I’m amazed how many organisations do not block outbound access to the Internet. It’s just such hard work to open up firewall rules for all these applications that have Internet dependencies. I can understand that for a client VLAN. But the server VLAN should be a controlled space – if it’s not known & controlled (i.e. governed) then it should not be permitted.

A modern attack, an advanced persistent threat (APT), isn’t just some dumb blast, grab, and run. It is a sneaky process of:

- Penetration

- Discovery, often manually controlled

- Spread, often manually controlled

- Steal

- Destroy/encrypt/etc

Once an APT gets in, it usually wants to call home to pull instructions down from a rogue IP address or compromised bot. When the APT wants to steal data, to be used as blackmail and/or to be sold on the Darknet, the malware will seek to upload data to the Internet. Both of these actions are taking advantage of the all-too-common open access to the Internet.

Azure is Different

Years of working with clients has taught me that there are three kinds of people when it comes to Azure networking:

- Those who managed on-premises networks: These folks struggle with Azure networking.

- Those who didn’t do on-premises networking, but knew what to ask for: These folks take to Azure networking quite quickly.

- Everyone else: Irrelevant to this topic

What makes Azure networking so difficult for the network admins? There is no cabling in the fabric – obviously there is cabling in the data centres but it’s all abstracted by the VXLAN software-defined networks. Packets are encapsulated on the source virtual machine’s host, transmitted over the physical network, decapsulated on the destination virtual machine’s host, and presented to the destination virtual machine’s NIC. In short, packets leave the source NIC and magically arrive on the destination NIC with no hops in between – this is why traceroute is pointless in Azure and why the default gateway doesn’t really exist.
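To make the encapsulation idea concrete, here is a minimal sketch of wrapping and unwrapping a frame with a VXLAN header. The 8-byte header layout follows RFC 7348; the function names and the VNI value are my own for illustration, and the outer Ethernet/IP/UDP headers that carry the packet across the physical network are left out. Treat it as a picture of the concept, not as a description of how the Azure fabric is actually built.

```python
import struct

VXLAN_FLAG_VNI_VALID = 0x08  # the "I" flag defined in RFC 7348


def encapsulate(vni: int, inner_frame: bytes) -> bytes:
    """Prefix an inner frame with an 8-byte VXLAN header (hypothetical helper)."""
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    # Word 1: flags (8 bits) + reserved (24 bits).
    # Word 2: VNI (24 bits) + reserved (8 bits).
    header = struct.pack("!II", VXLAN_FLAG_VNI_VALID << 24, vni << 8)
    return header + inner_frame


def decapsulate(packet: bytes) -> tuple[int, bytes]:
    """Strip the VXLAN header and return (vni, inner_frame)."""
    if len(packet) < 8:
        raise ValueError("packet is shorter than a VXLAN header")
    flags_word, vni_word = struct.unpack("!II", packet[:8])
    if not (flags_word >> 24) & VXLAN_FLAG_VNI_VALID:
        raise ValueError("VNI flag is not set")
    return vni_word >> 8, packet[8:]


# The source host wraps the VM's frame; the destination host unwraps it.
# The VM at either end only ever sees the inner frame.
wire_packet = encapsulate(5001, b"inner ethernet frame")
vni, frame = decapsulate(wire_packet)
print(vni, frame)
```

The point for a network admin is that the virtual machine never sees the wrapper, which is why the path between two NICs looks like it has no hops.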

“I’m not going to use virtual machines, Aidan. I’m doing PaaS and serverless computing.” In Azure, everything is based on virtual machines, unless it is explicitly hosted on physical hosts (Azure VMware Solution and some SAP stuff, for example). Even Functions run on a VM somewhere hidden in the platform. Serverless means that you don’t need to manage it.

The software-defined thing is why:

- Partitioned subnets for a firewall appliance (front, back, VPN, and management) offer nothing from a security perspective in Azure.

- ICMP isn’t as useful as you’d imagine in Azure.

- The concept of partitioning workloads for security using subnets is not as useful as you might think – it’s actually counter-productive over time.

Transformation

I like to remind people during a presentation or a project kickoff that going on a cloud journey is supposed to result in transformation. You now re-evaluate everything and find better ways to do old things using cloud-native concepts. And that applies to network security designs too.

Micro-Segmentation Is The Word

Forget “Grease”, get on board with what you need to counter today’s threats: micro-segmentation. This is a concept where:

- We protect the edge, inbound and outbound, permitting only required traffic.

- We apply network isolation within the workload, permitting only required traffic.

- We route traffic between workloads through the edge firewall, permitting only required traffic.

Yes, more work will be required when you migrate existing workloads to Azure. I’d suggest using Azure Migrate to map network flows. I never get to do that – I always get the “messy migration projects” and I never get to use Azure Migrate – so testing, and being able to access and understand NSG Traffic Analytics and the Azure Firewall/firewall logs via KQL, is a necessary skill.

Security Classification

Every workload should go through a security classification process. You need to weigh risk versus complexity. If you max the security, you will increase costs and difficulty for otherwise simple operations. For example, a dev won’t be able to connect Visual Studio straight to an App Service if you deploy that App Service on a private or isolated App Service Plan. You also will have to host your own DevOps agents/GitHub runners because the Microsoft-hosted containers won’t be able to reach your SCM endpoints.

Every piece of compute is a potential attack vector: a VM, an App Service, a Function, a Container, a Logic App. The question is, if it is compromised, will the attacker be able to jump to something else? Will the data that is accessible be secret, be subject to regulation, or cause reputational damage if it leaks?

This measurement process will determine if a workload should use resources that:

- Have public endpoints (cheapest and easiest).

- Use private endpoints (medium levels of cost, complexity, and security).

- Use full VNet integration, such as an App Service Environment or a virtual machine (highest cost/complexity but most secure).

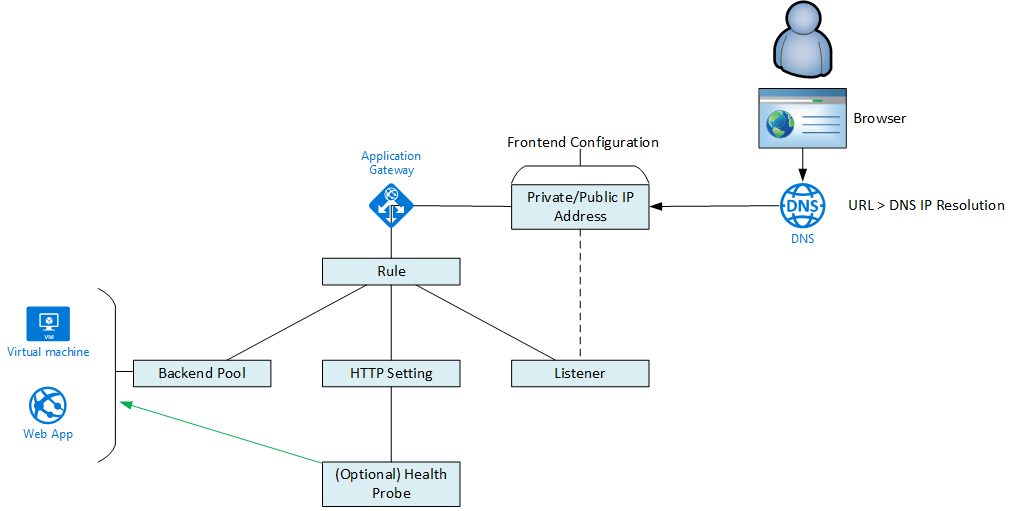

The Virtual Network & Subnet

Imagine you are building a 3-tier workload that will be isolated from the Internet using Azure virtual networking:

- Web servers on the Internet

- Middle tier

- Databases

Not that long ago, we would have deployed that workload on 3 subnets, one for each tier. Then we would have built isolation using Network Security Groups (NSGs), one for each subnet. But you just learned that a software-defined network routes packets directly from NIC to NIC. An NSG is a Hyper-V Port ACL that is implemented at the NIC, even if applied at the subnet level. We can create all the isolation we want using an NSG within the subnet. That means we can flatten the network design for the workload to one subnet. A subnet-associated NSG will restrict communications between the tiers – and ideally between nodes within the same tier. That level of isolation should block everything … should 🙂

Tips for virtual networks and subnets:

- Deploy 1 virtual network per workload: Not only will this follow Azure Cloud Adoption Framework concepts, but it will help your overall security and governance design. Each workload is placed into a spoke virtual network and peered with a hub. The hub is used only for external connectivity, the firewall, and Azure Bastion (assuming this is not a vWAN hub).

- Assign a single prefix to your hub & spoke: Firewall and NSG rules will be easier.

- Keep the virtual networks small: Don’t waste your address space.

- Flatten your subnets: Only deploy subnets when there is a technical need, for example VMs and private endpoints are in one subnet, VNet integration for an App Service plan is in another, and a SQL managed instance is in a third.
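Here is a small sketch of the “single prefix” and “keep it small” tips using Python’s standard `ipaddress` module. The `10.10.0.0/16` prefix, the `/24` size, and the workload names are all made up for illustration; the idea is simply that every virtual network is carved from one range, so one firewall rule or route entry for that range covers the hub and all of its spokes.

```python
import ipaddress

# Hypothetical prefix reserved for one hub & spoke deployment.
HUB_AND_SPOKE_PREFIX = ipaddress.ip_network("10.10.0.0/16")


def carve_vnets(prefix, new_prefix=24):
    """Return a generator of small, non-overlapping ranges inside the prefix."""
    return prefix.subnets(new_prefix=new_prefix)


vnets = carve_vnets(HUB_AND_SPOKE_PREFIX)
hub = next(vnets)  # first range goes to the hub
spokes = {name: next(vnets) for name in ("workload-a", "workload-b", "workload-c")}


def covered_by_prefix(network):
    """True if a single rule for the hub & spoke prefix also covers this network."""
    return network.subnet_of(HUB_AND_SPOKE_PREFIX)


print(f"hub: {hub}")
for name, vnet in spokes.items():
    print(f"{name}: {vnet} covered={covered_by_prefix(vnet)}")
```

If a spoke were later given a range outside the prefix, `covered_by_prefix` would return `False`, and that is the moment your firewall and NSG rules start needing exceptions.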

Resource Firewalls

It’s sad to see how many people disable operating system firewalls. For example, Group Policy is used to disable Windows Firewall. Don’t you know that Microsoft and Linux added those firewalls to protect machines from internal attacks? Those firewalls should remain operational and only permit required traffic.

Many Azure resources also offer firewalls. App Services have firewalls. Azure SQL has a firewall. Use them! The one messy resource is the storage account. The location of the endpoints for storage clusters is in a weird place – and this causes interesting situations. For example, a Logic App’s storage account with a configured firewall will prevent workflows from being created/working correctly.

Network Security Groups

Take a look at the default inbound rules in an NSG. You’ll find there is a Deny All rule which is the lowest possible priority. Just up from that rule is a built-in rule to allow traffic from VirtualNetwork. VirtualNetwork includes the subnet, the virtual network, and all routed networks, including peers and site-to-site connections. So all traffic from internal networks is … permitted! This is why every NSG that I create has a custom DenyAll rule with a priority of 4000. Higher priority rules are created to permit required traffic and only that required traffic.
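The effect of that custom DenyAll rule is easier to see in a toy model. The sketch below is my own simplification, not the real NSG engine: it matches only on a source tag and a destination port, while real rules also consider protocol, addresses, and destination. The three default rule names and priorities are the built-in inbound defaults; `AllowHttpsFromVnet` is a hypothetical example of a required flow.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    name: str
    priority: int  # lower number = evaluated first
    source: str    # simplified: a service tag or "*"
    port: str      # simplified: a port number as text or "*"
    allow: bool


DEFAULT_INBOUND = [
    Rule("AllowVnetInBound", 65000, "VirtualNetwork", "*", True),
    Rule("AllowAzureLoadBalancerInBound", 65001, "AzureLoadBalancer", "*", True),
    Rule("DenyAllInBound", 65500, "*", "*", False),
]


def evaluate(rules, source, port):
    """Return (rule name, allowed) for the first rule that matches the flow."""
    for rule in sorted(rules, key=lambda r: r.priority):
        if rule.source in ("*", source) and rule.port in ("*", str(port)):
            return rule.name, rule.allow
    return "NoMatch", False


# With only the defaults, RDP from anywhere inside the routed network is allowed.
print(evaluate(DEFAULT_INBOUND, "VirtualNetwork", 3389))

# Add one required flow plus the custom DenyAll at priority 4000.
hardened = DEFAULT_INBOUND + [
    Rule("AllowHttpsFromVnet", 1000, "VirtualNetwork", "443", True),  # hypothetical
    Rule("DenyAll", 4000, "*", "*", False),
]
print(evaluate(hardened, "VirtualNetwork", 443))
print(evaluate(hardened, "VirtualNetwork", 3389))
```

Because the DenyAll at 4000 is evaluated before the built-in allow at 65000, internal traffic only gets through when a higher priority rule explicitly permits it.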

Tips with your NSGs:

- Use 1 NSG per subnet: Where the subnet resources will support an NSG. You will reduce your overall complexity and make troubleshooting easier. Remember, all NSG rules are actually applied at the source (outbound rules) or target (inbound rules) NIC.

- Limit the use of “any”: Rules should be as accurate as possible. For example: Allow TCP 445 from source A to destination B.

- Consider the use of Application Security Groups: You can abstract IP addresses with an Application Security Group (ASG) in an NSG rule. ASGs can be used with NICs – virtual machines and private endpoints.

- Enable NSG Flow Logs & Traffic Analytics: Great for troubleshooting networking (not just firewall stuff) and for feeding data to a SIEM. VNet Flow Logs will be a superior replacement when it is ready for GA.
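To show how a single flat subnet can still isolate tiers, here is a sketch of ASG-style rules in one NSG. Everything in it is hypothetical: the ASG names, the IP addresses, the ports, and the simplified matching logic. It assumes a three-tier workload in one subnet, where only web-to-app and app-to-database flows are required and a DenyAll at priority 4000 catches the rest.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class AsgRule:
    priority: int         # lower number = evaluated first
    source_asg: str       # "*" matches any source
    dest_asg: str         # "*" matches any destination
    port: Optional[int]   # None matches any port
    allow: bool


# Hypothetical NIC-to-ASG membership; every NIC sits in the same subnet.
MEMBERSHIP = {
    "10.10.1.4": "asg-web",
    "10.10.1.5": "asg-web",
    "10.10.1.20": "asg-app",
    "10.10.1.40": "asg-db",
}

RULES = [
    AsgRule(1000, "asg-web", "asg-app", 8443, True),
    AsgRule(1100, "asg-app", "asg-db", 1433, True),
    AsgRule(4000, "*", "*", None, False),  # custom DenyAll
]


def is_allowed(src_ip, dst_ip, port):
    """Evaluate rules in priority order; the first match decides the outcome."""
    src = MEMBERSHIP.get(src_ip)
    dst = MEMBERSHIP.get(dst_ip)
    for rule in sorted(RULES, key=lambda r: r.priority):
        if (rule.source_asg in ("*", src)
                and rule.dest_asg in ("*", dst)
                and rule.port in (None, port)):
            return rule.allow
    return False


print(is_allowed("10.10.1.4", "10.10.1.20", 8443))  # web to app
print(is_allowed("10.10.1.4", "10.10.1.40", 1433))  # web straight to database
print(is_allowed("10.10.1.4", "10.10.1.5", 443))    # web node to web node
```

The rules never mention an IP address, so adding a fourth web server is a membership change rather than a rule change, and nodes in the same tier still cannot talk to each other unless a rule says so.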

The Hub

As I’ve implied already, you should employ a hub & spoke design. The hub should be simple, small and free of compute. The hub:

- Makes connections using site-to-site networking using SD-WAN, VPN, and/or ExpressRoute.

- Hosts the firewall. The firewall blocks everything in every direction by default.

- Hosts Azure Bastion, unless you are running Azure Virtual WAN – then deploy it to a spoke.

- Is the “Public IP” for egress traffic for workloads trying to reach the Internet. All egress traffic is via the firewall. Azure Policy should be used to restrict Public IP Addresses to just those resources that require it – things like Azure Bastion require a public IP and you should create a policy override for each required resource ID.

My preference is to use Azure Firewall. That’s a long conversation so let’s move on to another topic; Azure Bastion.

Most folks will go into Azure thinking that they will RDP/SSH straight to their VMs. RDP and SSH are not perfect. This is something that the secure zone concept recognised. It was not unusual for admins/operators to use a bastion host to hop via RDP or SSH from their PC to the required server via another server. RDP/SSH were not open directly to the protected machines.

Azure Bastion should offer the same isolation. Your NSG rules should only permit RDP/SSH from:

- The AzureBastionSubnet

- Any other bastion hosts that might be employed, typically by developers who will deploy specialist tools.

Azure Bastion requires:

- An Entra ID sign-in, ideally protected by features such as conditional access and MFA, to access the bastion service.

- The destination machine’s credentials.

Routing

Now we get to one of my favourite topics in Azure. In the on-prem world we can control how packets get from A to B using cables. But as you’ve learned, we can’t run cables in Azure. What we can do is control the next hop of a packet.

We want to control flows:

- Ingress from site-to-site networking to flow through the hub firewall: A route in the GatewaySubnet to use the hub firewall as the next hop.

- All traffic leaving a spoke (workload virtual network) to flow through the hub firewall: A route to 0.0.0.0/0 using the firewall backend/private IP as the next hop.

- All traffic between hub & spokes to flow through the remote hub firewall: A route to the remote hub & spoke IP prefix (see above tip) with a next hop of the remote hub firewall.

If you follow my tips, especially with the simple hub, then the routing is actually quite easy to implement and maintain.
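A rough way to picture the spoke route is longest prefix match: the most specific route that contains the destination wins. The sketch below models only that part of route selection. The firewall IP and address ranges are hypothetical, and the route list is a cut-down stand-in for what you would see in a NIC’s effective routes.

```python
import ipaddress

FIREWALL_IP = "10.10.0.4"  # hypothetical private IP of the hub firewall

# Simplified effective routes for a spoke subnet: (prefix, next hop).
SPOKE_ROUTES = [
    ("10.10.1.0/24", "VnetLocal"),  # system route for the spoke's own address space
    ("0.0.0.0/0", FIREWALL_IP),     # user-defined route: everything else via the firewall
]


def next_hop(routes, destination):
    """Pick the next hop of the most specific route containing the destination."""
    dest = ipaddress.ip_address(destination)
    matches = [
        (ipaddress.ip_network(prefix), hop)
        for prefix, hop in routes
        if dest in ipaddress.ip_network(prefix)
    ]
    if not matches:
        return None
    return max(matches, key=lambda match: match[0].prefixlen)[1]


print(next_hop(SPOKE_ROUTES, "10.10.1.7"))     # same spoke: stays local, NSGs do the isolation
print(next_hop(SPOKE_ROUTES, "10.10.2.5"))     # another spoke: goes to the firewall
print(next_hop(SPOKE_ROUTES, "203.0.113.10"))  # Internet: goes to the firewall
```

Traffic inside the spoke never reaches the firewall, which is why the NSG inside the workload has to carry the isolation there.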

Tips:

- Keep the hub free of compute.

- NSG Traffic Analytics helps to troubleshoot.

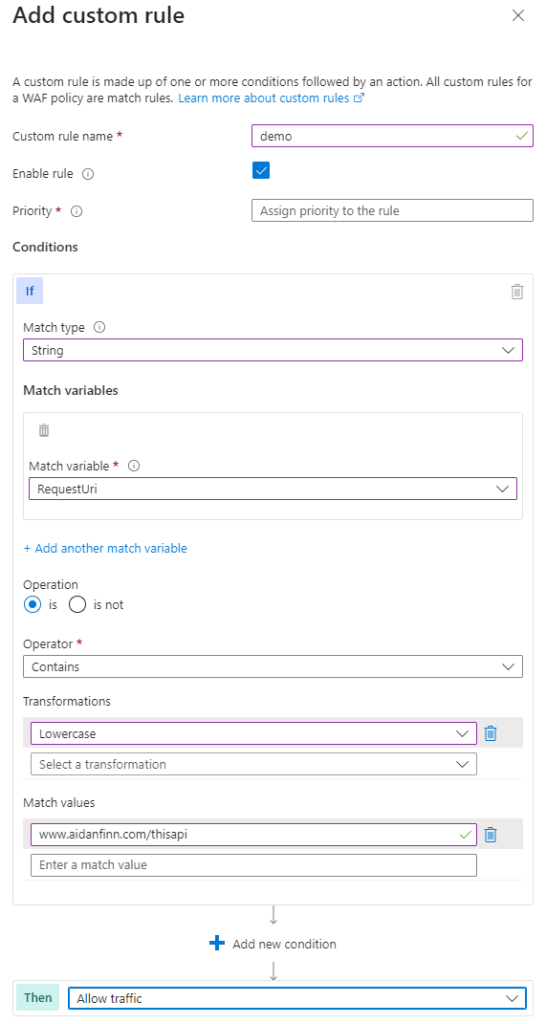

Web Application Firewall

The hub firewall should not be used to present web applications to the Internet. If a web app is classified as requiring network security, then it should be reverse proxied using a Web Application Firewall (WAF). This specialised firewall inspects traffic at the application layer and can block threats.

The WAF will have a lot of false positives. Heavy traffic applications can produce a lot of false positives in your logs; in the case of Log Analytics, the ingestion charge can be huge so get to optimising those false positives as quickly as you can.

My preference is to route the WAF through the hub firewall to the backend applications. The WAF is a form of compute, even the Azure WAF. If you do not need end-to-end TLS, then the firewall could be used to inspect the HTTP traffic from the WAF to the backend using its Intrusion Detection and Prevention System (IDPS), offering another layer of protection.

Azure offers a couple of WAF options. Front Door with WAF is architecturally interesting, but the default design is that the backend has a public endpoint that limits access to your Front Door instance at the application layer. What if the backend is network connected for max protection? Then you get into complexities with Private Link/Private Endpoint.

A regional WAF is network connected and offers simpler networking, but it sacrifices the performance boosts from Front Door. You can combine Front Door with a regional WAF, but there are more costs with this.

Third party solutions are possible. Services such as Cloudflare offer performance and security features. One could argue that Cloudflare offers more features. From the performance perspective, keep in mind that Cloudflare has only a few peering locations with the Microsoft WAN, so a remote user might have to take a detour to get to your Azure resources, increasing latency.

You can seek out WAF solutions from the likes of F5 and Citrix in the Azure Marketplace. Keep in mind that NVAs can prolong skills challenges by siloing the skill – native cloud skills are easier to develop and contract/hire.

Summary

I was going to type something like “this post gives you a quick tour of the micro-segmentation approach/features that you can use in Azure” but then I realised that I’ve had keyboard diarrhea and this post is quite Sinofskian. What I’ve tried to explain is that the ways of the past:

- Don’t do much for security anymore

- Are actually more complex in architecture than Azure-native patterns and solutions that will work.

If you implement security at three layers, assuming that a breach will happen and could happen anywhere then you limit the blast area of a threat:

- The edge, using the firewall and a WAF

- The NIC, using a Network Security Group

- The resource, using a guest OS/resource firewall

This trust-no-one approach that denies all but the minimum required traffic will make life much harder for an attacker. Including logging and the use of a well configured SIEM will create trip wires that an attacker must trip over to attempt an expansion. You will make their expansion harder & slower, and make it easier to detect them. You will also limit how far they can spread and how much damage the attack can create. Furthermore, you will be following the guidance that the likes of the FBI are recommending.

There is so much more to consider when it comes to security, but I’ve focused on micro-segmentation in a network context. People do think about Entra ID and management solutions (such as Defender for Cloud and/or SIEM) but they rarely think through the network design by assuming that what they did on-prem will still be fine. It won’t because on-prem isn’t fine right now! So take my advice, transform your network, and protect your assets, shareholders, and your career.