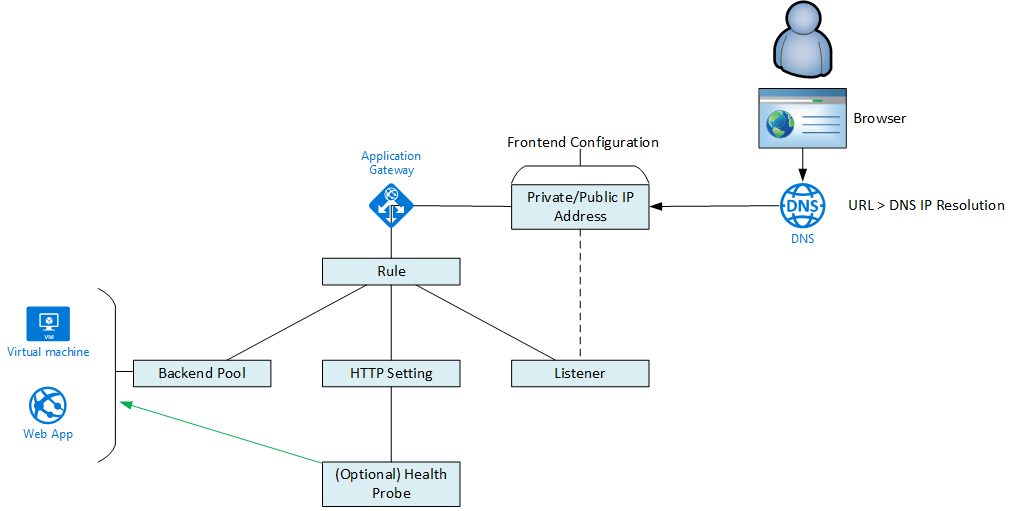

In this post, I will explain how things such as frontend configurations, listeners, HTTP settings, probes, backend pools, and rules work together to enable service publication in the Azure Web Application Gateway (WAG)/Web Application Firewall (WAF).

Introduction

The WAF/WAG is a scary beast at first. When you open one up there are just so many settings to be tweaked. If you are publishing just a simple test HTTP server, it’s easy: you populate the default backend pool and things just start to work. But if you want HTTPS, or to service many pools/sites, then things get complicated. And frustratingly slow 🙂 – Things have improved in v1 and v2 is significantly faster to configure, although it has architectural limitations (force public IP address and lack of support for route tables) that prevent me from using v2 in my large network deployments. Hopefully, the above map and following text will simplify things by explaining what all the pieces do and how they work together.

The below is not feature complete, and things will change in the future. But for 99% of you, this should (hopefully) be helpful.

Backend Pool

The backend pool describes a set of machines/services that will work together. The members of a backend pool must be all of the same type from one of these types:

- IP address/hostname: a common choice in large Azure deployments – you can span peering connections to other VNets

- Virtual machine: Select a machine from the same VNet as the WAG/WAF

- VMSS: Virtual machine scale sets in the same VNet as the WAG/WAF

- App Services: In the same subscription as the WAG/WAF

From here on out, I’ll be using the term “web server” to describe the above.

Note that this are the machines that host your website/service. They will all run the same website/service. And you can configure an optional custom probe to test the availability of the service on these machines.

(Optional) Health Probe

You can create a HTTP/HTTPS probe to do deeper probe tests of a service running on a backend pool. The probe is configured for HTTP or HTTPS and tests a hostname on the web server. You specify a path on the website, a frequency, timeout and allowed number of retries before designating a web site on a web server as being unhealthy and no longer a candidate for load balancing.

HTTP Setting

The HTTP setting configures how the WAG/WAF will talk to the members of the backend pool. It does not configure how clients talk to the site (Listener). So anything you see below here is for configuring WAG/WAF to web server communications (see HTTPS).

- Control cookie-based affinity for load balancing

- Configure connection draining when a machine is removed from a backend pool

- Specify if this is for a HTTP or a HTTPS connection to the webserver. This is for end-to-end encryption.

- For HTTPS, you will upload a certificate that will match the web servers’ certificate.

- The port that the web server is listening on.

- Override the path

- Override the hostname

- Use a custom probe

Remember that the above HTTPS setting is not required for website to be published as SSL. It is only required to ensure that encryption continues from the WAG/WAF to the web servers.

Frontend IP Configuration

A WAG/WAF can have public or private frontend IP addresses – the variation depends on if you are using V1 (you have a choice on the mix) or V2 (you must use public and private). The public front end is a single public IP address used for publishing services publicly. The private frontend is a single virtual network address used for internal service publication, requiring virtual network connectivity (virtual network, VPN, ExpressRoute, etc).

The DNS records for your sites will point at the frontend IP address of the WAG/WAF. You can use third-party or Azure DNS – Azure DNS has the benefit of being hosted in every Azure region and in edge sites around the world so it is faster to resolve names than some DNS hoster with 3 servers in a single continent.

A single frontend can be shared by many sites. http://www.aidanfinn.com, http://www.cloudmechanix.com and http://www.joeeleway.com can all point to the same IP address. The hostname configuration that you have in the Listener will determine what happens to the incoming traffic afterwards.

Listener

A Listener is configured to listen for traffic destined to a particular hostname and port number and forward it, eventually, to the correct backend pool. There are two kinds of listener:

- Basic: For very simple configurations where a site has exclusive ownership over a port number on one of the frontends. Typically this is for point solutions where a WAG/WAF is dedicated to a service.

- Multi-Site: A listener shares a frontend configuration with other listeners, and is looking for traffic destined to a specific hostname/port/protocol.

Note that the Listner is where you place the certificate to secure client > WAG/WAF communications. This is known as SSL offloading. If you enable HTTPS you will place the “site certificate” on the WAG/WAF via the Listener. You can optionally re-encrypt traffic to the webserver from the WAG/WAF using the previously discussed HTTP Setting. WAGv2/WAFv2 have a no-support preview to use certs that are securely stored in Key Vault.

The configuration of a basic listener is:

- Frontend

- Frontend port

- HTTP or HTTPS protocol

- The certificate for securing client > WAG/WAF traffic

- Optional custom error pages

The multi-site listener is adds an extra configuration: hostname. This is because now the listener is sharing the frontend and is only catching traffic for its website. So if I want 3 websites on my WAG/WAF sharing a frontend, I will have 3 x HTTPS listeners and maybe 3 x HTTP listeners.

Rules

A rule glues together the configuration. A basic rule is pretty easy:

- Traffic comes into a Listener

- The HTTP Setting determines how to forward that traffic to the backend pool

- The Backend Pool lists the web servers that host the site

A path-based rule allows you to extend your site across many backend pools. You might have a set of content for /media on pool1. Therefore all http://www.aidanfinn.com/media content is pulled from that pool1. All video content might be on http://www.aidanfinn.com/video, so you’ll redirect /video to pool2. And so on. And you can have individual HTTP settings for each redirection.

My Tips

- There’s nothing like actually setting this up at scale to try this out. You will need a few DNS names to be able to work with.

- Remember to enable the protection mode of WAF. I have audited deployments and found situations where people thought they had Layer-7 security but only had the default “alert-only” configuration of WAFv1.

- In large environments, don’t forget to ensure that the NSGs protecting any webservers allow traffic in from the WAG/WAF’s subnet into the web servers on the port(s) specified in the HTTP Setting(s). Also ensure that any guest OS firewall is similarly configured.

- Possibly the biggest issue you will have is with devs not assigning hostnames to websites in their webservers. If you’re using shared WAGs/WAFs you must use multi-site listeners and the websites should be configured with the hostname.

- And the biggest tip I can give is to work out a naming standard for each of the above components so you know what piece is associated with what site. I can’t share what we’re using at work, but we have some big configurations and they are very easy to troubleshoot because of how we have named things.