This session (original here) introduces WS2016 and SysCtr 2016 at a high level. The speakers were:

- Mike Neil: Corporate VP, Enterprise Cloud Group at Microsoft

- Erin Chapple: General Manager, Windows Server at Microsoft

A selection of other people will come on stage to do demos.

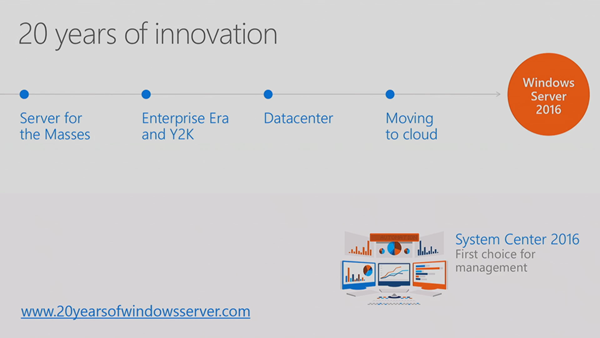

20 Years Old

Windows Server is 20 years old. Here’s how it has evolved:

The 2008 release brought us the first version of Hyper-V. Server 2012 brought us the same Hyper-V that was running in Azure. And Windows Server 2016 brings us the cloud on our terms.

The Foundation of Our Cloud

The investment that Microsoft made in Azure is being returned to us. Lots of what’s in WS2016 came from Azure, and combined with Azure Stack, we can run Azure on-prem or in hosted clouds.

There are over 100 data centers in Azure across 24 regions. Windows Server is the platform that is used for Azure across all that capacity.

IT is Being Pulled in Two Directions – Creating Stresses

- Provide secure, controlled IT resources (on prem)

- Support business agility and innovation (cloud / shadow IT)

By 2017, 50% of IT spending will be outside of the organization.

Stress points:

- Security

- Data centre efficiency

- Modernizing applications

Microsoft’s solution is to use unified management to deliver:

- Advanced multi-layer security

- Azure-inspired, software-defined infrastructure

- Cloud-ready application platform

Security

Mike shows a number of security breach headlines. IT security is a CEO issue – costs to a business of a breach are shown. And S*1t rolls downhill.

Multi-layer security:

- Protect identity

- Secure virtual machines

- Protect the OS on-prem or in the cloud

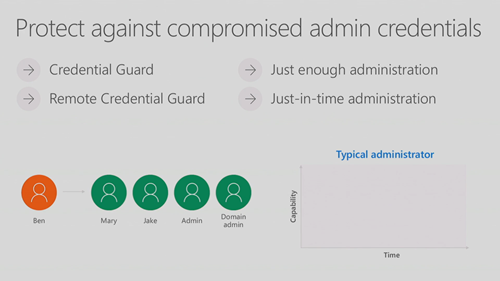

Challenges in Protecting Credentials

Attack vectors:

- Social engineering is the one they see the most

- Pass the hash

- Admin = unlimited rights. Too many rights given to too many people for too long.

To protect against compromised admin credentials:

- Credential Guard will protect ID in the guest OS

- JEA limits rights to just enough to get the job done

- JITA limits the time that an admin can have those rights

The solution closes the door on admin ID vulnerabilities.

Ryan Puffer comes on stage to do a demo of JEA and JITA. The demo is based on PowerShell:

- He runs Enter-PSSession to log into a domain controller (DNS server). Local logon rights normally mean domain admin.

- He cannot connect to the DC, because his current logon doesn’t have DC rights, so it fails.

- He tries again, this time adding -ConfigurationName to Enter-PSSession to specify a JEA config, and he can get in. The JEA config was set up by a more trusted admin. The JEA authentication is done using a temporary virtual local account on the DC that resides nowhere else. This account exists only for the duration of the login session. Malware cannot use this account because it has limited rights (to this machine) and will disappear quickly.

- The JEA configuration has also limited rights – he can do DNS stuff but he cannot browse the file system, create users/groups, etc. His ISE session only shows DNS Get- cmdlets.

- He needs some modify rights. He browses to a Microsoft Identity Manager (MIM) portal and has some JITA roles that he can request – one of these will give his JEA temp account more rights so he can modify DNS (via a group membership). He selects one and has to enter details to justify the request. He puts in a time-out of 30 minutes – 31 minutes later he will return to having just DNS viewer rights. MFA via Azure can be used to verify the user, and manager approval can be required.

- He logs in again using Enter-PSSession with the JEA config. Now he has DNS modify rights. Note: you can whitelist and blacklist cmdlets in a role.
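To make the JEA + JITA idea concrete, here is a minimal Python sketch of the logic in the demo: a session that only permits whitelisted cmdlets, plus an elevation that lapses after a time-out. This is a toy model, not how PowerShell or MIM implement it. The class name, the role contents, and the start time are all made up for illustration.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional

# Illustrative role contents only; the real roles in the demo were not listed.
DNS_VIEWER = frozenset({"Get-DnsServerZone", "Get-DnsServerResourceRecord"})
DNS_EDITOR = DNS_VIEWER | {"Add-DnsServerResourceRecordA"}


@dataclass
class JeaSession:
    """Toy model of a constrained session with an optional timed elevation."""
    base_cmdlets: frozenset
    elevated_cmdlets: frozenset = frozenset()
    elevation_expires: Optional[datetime] = None

    def elevate(self, cmdlets, now, minutes):
        # JITA: grant extra cmdlets, but only until the time-out passes.
        self.elevated_cmdlets = frozenset(cmdlets)
        self.elevation_expires = now + timedelta(minutes=minutes)

    def allowed(self, cmdlet, now):
        # JEA: anything not whitelisted is refused.
        if cmdlet in self.base_cmdlets:
            return True
        elevated = self.elevation_expires is not None and now < self.elevation_expires
        return elevated and cmdlet in self.elevated_cmdlets


start = datetime(2016, 1, 1, 9, 0)  # arbitrary start time
session = JeaSession(base_cmdlets=DNS_VIEWER)
print(session.allowed("Add-DnsServerResourceRecordA", start))  # False
session.elevate(DNS_EDITOR, now=start, minutes=30)
print(session.allowed("Add-DnsServerResourceRecordA", start + timedelta(minutes=10)))  # True
print(session.allowed("Add-DnsServerResourceRecordA", start + timedelta(minutes=31)))  # False
```

The point of the sketch is that both limits apply together: the whitelist bounds what can be done, and the expiry bounds for how long.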

Back to Mike.

Challenges Protecting Virtual Machines

VMs are files:

- Easy to modify/copy

- Too many admins have access

Someone can mount a VM’s disks or copy a VM to gain access to the data. Microsoft believes that attackers (internal and external) are interested in attacking the host OS to gain access to VMs, so they want to prevent this.

This is why Shielded Virtual Machines was invented – secure the guest OS by default:

- The VM is encrypted at rest and in transit

- The VM can only boot on authorised hosts

Azure-Inspired, Software-Defined

Erin Chapple comes on stage.

This is a journey that has been going on for several releases of Windows Server. Microsoft has learned a lot from Azure, and is bringing that learning to WS2016.

Increase Reliability with Cluster Enhancements

- Cloud means more updates, with feature improvements. OS upgrades weren’t possible in a cluster. In WS2016, we get cluster rolling upgrades. This allows us to rebuild a cluster node within a cluster, and run the cluster temporarily in mixed-version mode. Now we can introduce changes without buying new cluster h/w or incurring VM downtime. Risk isn’t an upgrade blocker.

- VM resiliency deals with transient errors in storage, meaning a brief storage outage pauses a VM instead of crashing it.

- Fault domain-aware clusters allow us to control how errors affect a cluster. You can spread a cluster across fault domains (racks) just like Azure does. This means your services can be spread across fault domains, so a rack outage doesn’t bring down a HA service.
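The fault domain idea can be sketched in a few lines of Python. This is only an illustration of the principle (rotate instances of a service across racks so that one rack failure cannot take them all out). It is not how Failover Clustering makes placement decisions, and the rack and node names are invented.

```python
def place_replicas(replica_count, nodes_by_rack):
    """Assign replicas to nodes, rotating through racks round-robin."""
    if not nodes_by_rack:
        raise ValueError("at least one rack is required")
    racks = sorted(nodes_by_rack)
    next_index = {rack: 0 for rack in racks}
    placement = []
    for i in range(replica_count):
        rack = racks[i % len(racks)]
        nodes = nodes_by_rack[rack]
        node = nodes[next_index[rack] % len(nodes)]
        next_index[rack] += 1
        placement.append((rack, node))
    return placement


def survivors(placement, failed_rack):
    """Replicas that are still running after a whole rack goes down."""
    return [(rack, node) for rack, node in placement if rack != failed_rack]


# Hypothetical three-rack cluster with two nodes per rack.
cluster = {
    "rack-a": ["node1", "node2"],
    "rack-b": ["node3", "node4"],
    "rack-c": ["node5", "node6"],
}
placement = place_replicas(3, cluster)
print(placement)                            # [('rack-a', 'node1'), ('rack-b', 'node3'), ('rack-c', 'node5')]
print(len(survivors(placement, "rack-a")))  # 2
```

With three replicas across three racks, losing any single rack still leaves two replicas running.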

24 TB of RAM on a physical host and 12 TB RAM in a guest OS are supported. 512 physical LPs on a host, and 240 virtual processors in a VM. This is “driven by Azure” not by customer feedback.

Complete Software-Defined Storage Solution

Evolving Storage Spaces from WS2012/R2. Storage Spaces Direct (S2D) takes DAS and uses it as replicated/shared storage across servers in a cluster, that can either be:

- Shared over SMB 3 with another tier of compute (Hyper-V) nodes

- Used in a single tier (CSV, no SMB 3) of hyper-converged infrastructure (HCI)

Storage Replica introduces per-volume, block-level replication (synchronous or asynchronous) beneath the file system to Windows Server. It doesn’t care what the source and destination storage are (they can be different in each site), as long as they are cluster-supported.

Storage QoS guarantees an SLA with min and max rules, managed from a central point.

The owner of S2D, Claus Joergensen, comes on stage to do an S2D demo.

- The demo uses latest Intel CPUs and all-Intel flash storage on 16 nodes in a HCI configuration (compute and storage on a single cluster, shared across all nodes).

- There are 704 VMs run using an open source tool called VMFleet.

- They run a profile similar to Azure P10 storage (each VHD has 500 IOPS). That’s 350,000 IOPS – which is trivial for this system.

- They change this to Azure P20: now each disk has 2,300 IOPS, summing 1.6 million IOPS in the system – it’s 70% read and 30% write. Each S2D cluster node (all 16 of them) is hitting over 100,000 IOPS, which is about the max that most HCI solutions claim.

- Claus changes the QoS rules on the cluster to unlimited – each VM will take whatever IOPS the storage system can give it.

- Now we see a total of 2.7 million IOPS across the cluster, with each node hitting 157,000 to 182,000 IOPS, at least 50% more than the HCI vendors claim.

Note the CPU usage for the host, which is modest. That’s under 10% utilization per node to run the infrastructure at max speed! Thank Storage Spaces and SMB Direct (RDMA) for that!

- Now he switches the demo over to read IO only.

- The stress test hits 6.6 million read IOPS, with each node offering between 393,000 and 433,000 IOPS – that’s 16 servers, no SAN!

- The CPU still stays under 10% per node.

- Throughput numbers will be shown later in the week.
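The headline numbers in the demo are easy to sanity check. The short Python sketch below redoes the arithmetic, assuming one VHD per VM (which is what the quoted totals imply) and an even spread across the 16 nodes when computing averages.

```python
VM_COUNT = 704
NODE_COUNT = 16


def cluster_iops(per_disk_iops, vm_count=VM_COUNT):
    """Total IOPS when every VM drives one disk at the given rate."""
    return per_disk_iops * vm_count


def per_node_average(total_iops, node_count=NODE_COUNT):
    """Average IOPS per node, assuming an even spread across the cluster."""
    return total_iops / node_count


p10_total = cluster_iops(500)
p20_total = cluster_iops(2300)

print(p10_total)                    # 352000
print(p20_total)                    # 1619200
print(per_node_average(p20_total))  # 101200.0
print(per_node_average(2_700_000))  # 168750.0
print(per_node_average(6_600_000))  # 412500.0
```

The results line up with what was shown on stage: roughly 350,000 and 1.6 million IOPS for the two profiles, just over 100,000 IOPS per node at P20, and per-node averages that fall inside the quoted ranges for the unlimited (157,000 to 182,000) and read-only (393,000 to 433,000) runs.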

If you want to know where to get certified S2D hardware, then you can get DataON from MicroWarehouse in Dublin (www.mwh.ie):

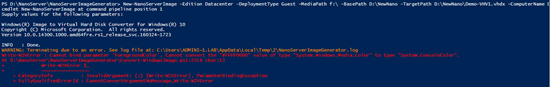

Nano Server

Nano Server is not an edition – it is an installation option. You can install a deeply stripped down version of WS2016, that can only run a subset of roles, and has no UI of any kind, other than a very basic network troubleshooting console.

It consumes just 460 MB disk space, compared to 5.4 GB of Server Core (command prompt only). It boots in less than 10 seconds and has a smaller attack surface. Ideal scenario: born in the cloud applications.

Nano Server is serviced under the Current Branch for Business (CBB) model. If you install Nano Server, then you are forced into installing updates as Microsoft releases them, which they expect to do 2-3 times per year. Nano will be the basis of Microsoft’s cloud infrastructure going forward.

Azure-Inspired Software-Defined Networking

A lot of stuff from Azure here. The goal is that you can provision new networks in minutes instead of days, and have predictable/secure/stable platforms for connecting users/apps/data that can scale – the opposite of VLANs.

Three innovations:

- Network Controller: From Azure, a fabric management solution

- VXLAN support: Added to NVGRE, making the underlying transport less important and focusing more on the virtual networks

- Virtual network functions: Also from Azure, getting firewall, load balancing and more built into the fabric (no, it’s not NLB or Windows Firewall – see what Azure does)

Greg Cusanza comes on stage – Greg has a history with SDN in SCVMM and WS2012/R2. He’s going to deploy the following:

That’s a virtual network with a private address space (NAT) with 3 subnets that can route and an external connection for end user access to a web application. Each tier of the service (file and web) has load balancers with VIPs, and AD in the back end will sync with Azure AD. This is all familiar if you’ve done networking in Azure Resource Manager (ARM).

- A bunch of VMs have been created with no network connections.

- He opens a PoSH script that will run against the network controller – note that you’ll use Azure Stack in the real world.

- The script runs in just over 29 seconds – all the stuff in the screenshot is deployed, and the VMs are networked and have Internet connectivity. He can browse the Net from a VM, and can browse the web app from the Internet – he proves that load balancing (virtual network function) is working.

Now an unexpected twist:

- Greg browses a site and enters a username and password – he has been phished by a hacker and now pretends to be the attacker.

- He has discovered that the application can be connected to using remote desktop and attempts to sign in using the phished credentials. He signs into one of the web VMs.

- He uploads a script to do stuff on the network. He browses shares on the domain network. He copies ntds.dit from a DC and uploads it to OneDrive for a brute force attack. Woops!

This leads us to dynamic security (network security groups or firewall rules) in SDN – more stuff that ARM admins will be familiar with. He’ll also add a network virtual appliance (a specialised VM that acts as a network device, such as an app-aware firewall) from a gallery – which we know that Microsoft Azure Stack will be able to syndicate from:

- Back in PoSH, he runs another script to configure network security groups, to filter traffic on a TCP/UDP port level.

- Now he repeats the attack – and it fails. He cannot RDP to the web servers, he couldn’t browse shared folders if he did, and he prevented outbound traffic from the web servers anyway (stateful inspection).
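For anyone who hasn't worked with network security groups, here is a rough Python sketch of port-level filtering. It assumes priority-ordered, first-match rules (the way Azure NSGs behave) and a default deny. The rule set is my guess at what would block the attack in the demo, not the actual script, and this toy is stateless, so it does not model the stateful inspection mentioned above.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Rule:
    priority: int          # lower number is evaluated first (assumption)
    direction: str         # "inbound" or "outbound"
    protocol: str          # "tcp", "udp", or "*" for any
    port: Optional[int]    # None means any port
    action: str            # "allow" or "deny"


def evaluate(rules, direction, protocol, port, default="deny"):
    """Return the action of the first matching rule, else the default."""
    for rule in sorted(rules, key=lambda r: r.priority):
        if rule.direction != direction:
            continue
        if rule.protocol not in ("*", protocol):
            continue
        if rule.port is not None and rule.port != port:
            continue
        return rule.action
    return default


# Hypothetical rules for the web tier.
web_tier = [
    Rule(100, "inbound", "tcp", 80, "allow"),    # web traffic from the Internet
    Rule(200, "inbound", "tcp", 3389, "deny"),   # no RDP to the web servers
    Rule(300, "outbound", "*", None, "deny"),    # web servers cannot call out
]

print(evaluate(web_tier, "inbound", "tcp", 80))     # allow
print(evaluate(web_tier, "inbound", "tcp", 3389))   # deny
print(evaluate(web_tier, "inbound", "tcp", 445))    # deny
print(evaluate(web_tier, "outbound", "tcp", 443))   # deny
```

SMB on TCP 445 is refused by the default rule rather than an explicit one, which is why browsing shares fails even though nobody wrote a rule for it.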

The virtual appliance is a network device that runs a customized Linux.

- He launches SCVMM.

- We can see the network in Network Service – so System Center is able to deploy/manage the Network Controller.

Erin finished by mentioning the free WS2016 Datacenter license offer for retiring vSphere hosts “a free Datacenter license for every vSphere host that is retired”, good until June 30, 2017 – see www.microsoft.com/vmwareshift

Cloud-Ready Application Platform

Back to Mike Neil. We now have a diverse set of infrastructure that we can run applications on.

WS2016 adds new capabilities for cloud-based applications. Containers was a huge thing for MSFT.

A container virtualizes the OS, not the machine. A single OS can run multiple Windows Server Containers – 1 container per app. So that’s a single shared kernel – that’s great for internal & trusted apps, similar to containers that are available on Linux. Deployment is fast and you can get great app density.

But if you need security, you can deploy compatible Hyper-V Containers. The same container images can be used. Each container has a stripped down mini-kernel (see Nano) isolated by a Hyper-V partition, meaning that untrusted or external apps can be run safely, isolated from each other and the container host (either physical or a VM – we have nested Hyper-V now!). Another benefit of Hyper-V Containers is staggered servicing. Normal (Windows Server) Containers share the kernel with the container host – if you service the host then you have to service all of the containers at the same time. Because they are partitioned/isolated, you can stagger the servicing of Hyper-V Containers.

Taylor Brown (ex- of Hyper-V and now Principal Program Manager of Containers) comes on stage to do a demo.

- He has a VM running a simple website – a sample ASP.NET site in Visual Studio.

- In IIS Manager, he does a Deploy > Export Application, and exports a .ZIP.

- He copies that to a WS2016 machine, currently using 1.5 GB RAM.

- He shows us a “Docker File” (above) to configure a new container. Note how EXPOSE publishes TCP ports for external access to the container on TCP 80 (HTTP) and TCP 8172 (management). A PowerShell snap-in will run webdeploy and it will restore the exported ZIP package.

- He runs docker build -t mysite … with the location of the docker file.

- A few seconds later a new container is built.

- He starts the container and maps the ports.

- And the container is up and running in seconds – the .NET site takes a few seconds to compile (as it always does in IIS) and the thing can be browsed.

- He deploys another 2 instances of the container in seconds. Now there are 3 websites and only .5 GB extra RAM is consumed.

- He uses docker run --isolation=hyperv to get an additional Hyper-V Container. The same image is started … it takes an extra second or two because of “cloning technology that’s used to optimize deployment of Hyper-V Containers”.

- Two Hyper-V containers and 3 normal containers (that’s 5 unique instances of IIS) are running in a couple of minutes, and the machine has gone from using 1.5 GB RAM to 2.8 GB RAM.
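A quick back-of-the-envelope calculation in Python on the RAM figures from the demo. I'm assuming the 0.5 GB covers all three Windows Server Containers and that everything after that was consumed by the two Hyper-V Containers. The notes don't spell that out, so treat the per-container numbers as rough.

```python
BASELINE_GB = 1.5                  # host before any containers
AFTER_WINDOWS_CONTAINERS_GB = 2.0  # baseline + the 0.5 GB quoted for 3 containers
AFTER_HYPERV_CONTAINERS_GB = 2.8   # final figure with 2 Hyper-V Containers added


def per_container_gb(before_gb, after_gb, count):
    """Average RAM per container, rounded to two decimal places."""
    return round((after_gb - before_gb) / count, 2)


windows_each = per_container_gb(BASELINE_GB, AFTER_WINDOWS_CONTAINERS_GB, 3)
hyperv_each = per_container_gb(AFTER_WINDOWS_CONTAINERS_GB, AFTER_HYPERV_CONTAINERS_GB, 2)

print(windows_each)  # 0.17
print(hyperv_each)   # 0.4
```

Under those assumptions, a Hyper-V Container costs a bit more than twice the RAM of a Windows Server Container in this demo, which is the price of the extra isolation.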

Microsoft has been a significant contributor to the Docker open source project and one MS engineer is a maintainer of the project now. There’s a reminder that Docker’s enterprise management tools will be available to WS2016 customers free of charge.

On to management.

Enterprise-Class Data Centre Management

System Center 2016:

- 1st choice for Windows Server 2016

- Control across hybrid cloud with Azure integrations (see SCOM/OMS)

SCOM Monitoring:

- Best of breed Windows monitoring and cross-platform support

- N/w monitoring and cloud infrastructure health

- Best-practice for workload configuration

Mahesh Narayanan, Principal Program Manager, comes on stage to do a demo of SCOM. IT pros struggle with alert noise. That’s the first thing he wants to show us – it’s really a way to find what needs to be overridden or customized.

- Tune Management Packs allows you to see how many alerts are coming from each management pack. You can filter this by time.

- He clicks the Tune Alerts action. We see the alerts, and a count of each. You can then do an override (object or group of objects).

Maintenance cycles create a lot of alerts. We expect monitoring to suppress these alerts – but it hasn’t yet! This is fixed in SCOM 2016:

- You can schedule maintenance in advance (yay!). You could match this to a patching cycle so WSUS/SCCM patch deployments don’t break your heart at 3am on a Saturday morning.

- Your objects/assets will automatically go into maintenance mode and have a not-monitored status according to your schedules.
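The logic behind scheduled maintenance is simple enough to sketch in Python: if an alert for an object lands inside that object's maintenance window, it is suppressed. This is just the concept. It is not the SCOM API, and the server names and times are invented.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class MaintenanceWindow:
    target: str
    start: datetime
    end: datetime

    def covers(self, target, when):
        # The window applies only to its own target, start inclusive, end exclusive.
        return target == self.target and self.start <= when < self.end


def should_raise_alert(target, when, windows):
    """An alert is raised only if no scheduled window covers it."""
    return not any(w.covers(target, when) for w in windows)


# Hypothetical patching window: 02:00 to 04:00 for one web server.
windows = [
    MaintenanceWindow("web01", datetime(2016, 1, 2, 2, 0), datetime(2016, 1, 2, 4, 0)),
]

print(should_raise_alert("web01", datetime(2016, 1, 2, 3, 0), windows))  # False
print(should_raise_alert("web01", datetime(2016, 1, 2, 5, 0), windows))  # True
print(should_raise_alert("sql01", datetime(2016, 1, 2, 3, 0), windows))  # True
```

Only the object in maintenance goes quiet. A different server failing at 3am still raises an alert, which is exactly what you want during a patching cycle.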

All those MacGyver solutions we’ve cobbled together for stopping alerts while patching can be thrown out!

That was all for System Center? I am very surprised!

PowerShell

PowerShell is now open source.

- DevOps-oriented tooling in PoSH 5.1 in WS2016

- vNext Alpha on Windows, macOS, and Linux

- Community supported releases

Joey Aiello, Program Manager, comes up to do a demo. I lose interest here. The session wraps up with a marketing video.