An unfortunately common scenario is one where you must create a site-to-site network connection from your Azure network to a third-party network using NAT. This post explains a few solutions.

The Scenario

There are those out there who think that every implementation in The Cloud is 100% under your control and is cloud-ready. But sometimes, you must fit in with other people’s designs and you can’t use cool integrations such as Private Link or API. Sometimes you need to connect your network to a third party and they dictate the terms of the connection.

The connection is typically a site-to-site connection, usually VPN but I have seen ExpressRoute used. VPN means there are messy bits – you can control that with your own on-premises firewalls but you have no control over the VPN configuration of an externally owned firewall.

Site-to-site connections with a service provider mean that there could be IP address overlap. The only way to handle that is to use NAT, and that is not always possible natively in the platform, or it is really badly documented.

Solution 1: On-Premises Relay

In this scenario, the third-party will make a connection to your on-premises network. NAT is implemented on the on-premises network to translate your private Azure address to some “public address” (it is routed only over the private connections).

The connection between on-premises and Azure could be VPN or ExpressRoute.

This design is useful in two situations:

- You are using ExpressRoute – the ExpressRoute Gateway does not offer NAT functionality.

- The third-party insists that you use some kind of VPN configuration that is not supported in Azure, for example, GRE.

The downside with this design is that there might be additional latency between the third-party and your Azure network.

Solution 2: AWS Relay

Oh – did this post by an Azure MVP just mention AWS? Sure – there is a time and a place for everything.

This solution is similar to the on-premises relay solution but it replaces on-premises with AWS. This can be useful where:

- You want to minimise on-premises resources. AWS does support GRE so a VPN connection to a third-party that requires GRE can be handled in this way.

- You can use an AWS region that is close to the third-party, your Azure region, or both, and minimise latency.

Note that the connection between AWS and Azure could be either VPN or ExpressRoute (with an ISP that supports both Azure ExpressRoute and AWS Direct Connect).

The downside is that there is still “more stuff” and a requirement for skills that you might not have. On the plus side, it offers compatibility with reduced latency.

Solution 3: Azure Relay

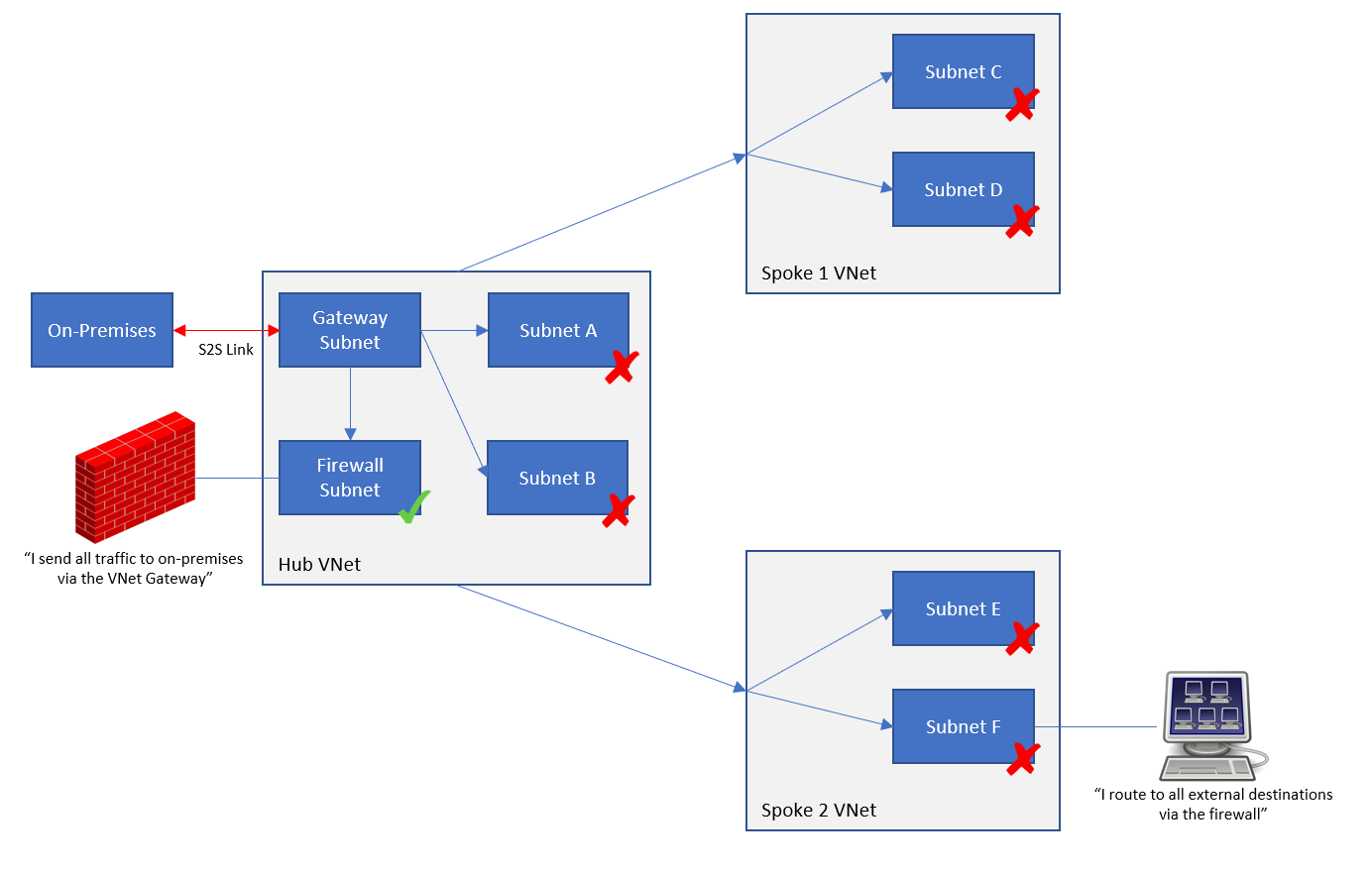

In this design, the third-party makes a connection to your Azure network(s) using ExpressRoute. But as usual, you must implement a NAT rule. The ExpressRoute Gateway cannot natively implement NAT, so you must deploy “an appliance” (NVA or Linux VM with NAT tables).

In the above design, there is a route table associated with the GatewaySubnet of the ExpressRoute Gateway. A user-defined route with a prefix of 40.40.40.4 forwards traffic to the appliance as the next hop. A user-defined route on the VM’s subnet with a prefix of the third-party network(s) also uses the appliance as the next hop.
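The two user-defined routes could be sketched in Terraform roughly as follows. This is a standalone fragment under stated assumptions, not the exact configuration behind the diagram: the appliance IP, the third-party prefix, the resource names, and the input variables are all hypothetical, and 40.40.40.4 is written as a /32 prefix.

```hcl
# Hypothetical inputs: replace with values from your own environment.
variable "resource_group_name" { type = string }
variable "location" { type = string }
variable "gateway_subnet_id" { type = string }  # ID of the GatewaySubnet
variable "workload_subnet_id" { type = string } # ID of the VM's subnet

variable "appliance_ip" {
  description = "Private IP of the NAT appliance (assumed value)"
  type        = string
  default     = "10.10.1.4"
}

variable "third_party_prefix" {
  description = "Address space of the third-party network (assumed value)"
  type        = string
  default     = "192.168.100.0/24"
}

# Route table for the GatewaySubnet: the NAT address goes to the appliance.
resource "azurerm_route_table" "gateway" {
  name                = "rt-gatewaysubnet"
  location            = var.location
  resource_group_name = var.resource_group_name
}

resource "azurerm_route" "nat_address_via_appliance" {
  name                   = "nat-address-via-appliance"
  resource_group_name    = var.resource_group_name
  route_table_name       = azurerm_route_table.gateway.name
  address_prefix         = "40.40.40.4/32"
  next_hop_type          = "VirtualAppliance"
  next_hop_in_ip_address = var.appliance_ip
}

resource "azurerm_subnet_route_table_association" "gateway" {
  subnet_id      = var.gateway_subnet_id
  route_table_id = azurerm_route_table.gateway.id
}

# Route table for the VM's subnet: third-party prefixes go to the appliance.
resource "azurerm_route_table" "workload" {
  name                = "rt-workload"
  location            = var.location
  resource_group_name = var.resource_group_name
}

resource "azurerm_route" "third_party_via_appliance" {
  name                   = "third-party-via-appliance"
  resource_group_name    = var.resource_group_name
  route_table_name       = azurerm_route_table.workload.name
  address_prefix         = var.third_party_prefix
  next_hop_type          = "VirtualAppliance"
  next_hop_in_ip_address = var.appliance_ip
}

resource "azurerm_subnet_route_table_association" "workload" {
  subnet_id      = var.workload_subnet_id
  route_table_id = azurerm_route_table.workload.id
}
```

Both routes point at the same appliance so that traffic passes through the NAT in each direction.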

This design allows you to use ExpressRoute to connect to the third-party but it also allows you to implement NAT.

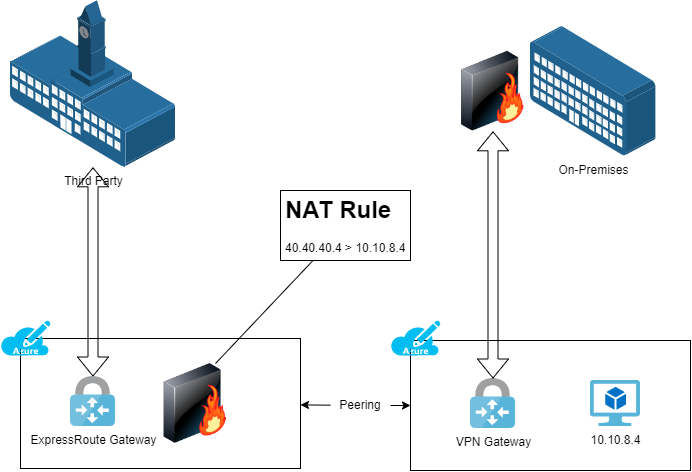

Solution 4: VPN Gateway & NAT

Other than using some modern solution, such as authenticated API over HTTPS, this is probably “the best” scenario in terms of Azure resource simplicity.

The third-party connects to your Azure network using a site-to-site VPN. The connection is terminated in Azure using a VPN Gateway. The Azure VPN Gateway is capable of supporting NAT rules. Unfortunately, that’s where things begin to fall apart because of the documentation (quality and completeness).

This is a simple scenario where the third-party needs access to an IP address (VM or otherwise) hosted in your Azure network. That internal address of your Azure resource must be translated to a different external IP address.

As long as your VPN Gateway is VpnGw2/VpnGw2AZ or higher, you can create NAT rules in the Gateway. The scenario that I have described requires a confusingly-named egress NAT rule: you are translating an internal IP address(es) to an external IP address(es) to abstract the internal address(es) for ingress traffic. An ingress NAT rule translates an external IP address(es) to an internal address(es) to abstract the external address(es) for egress traffic.

The Terraform code for my scenario is shown below: I want to make my Azure resource with 10.10.8.4 available externally as 40.40.40.4 on TCP 443:
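As a rough sketch (not necessarily the exact code used for this scenario), an egress NAT rule for that mapping might look like the following. It assumes the `azurerm` provider's `azurerm_virtual_network_gateway_nat_rule` resource; the resource name, rule name, and input variables are hypothetical, and the fragment is written to stand on its own.

```hcl
# Hypothetical inputs: replace with values from your own environment.
variable "resource_group_name" { type = string }
variable "vpn_gateway_id" { type = string } # ID of the existing VPN Gateway

# Static egress NAT rule: internal 10.10.8.4 is presented as 40.40.40.4.
resource "azurerm_virtual_network_gateway_nat_rule" "third_party_egress" {
  name                       = "egress-10-10-8-4-as-40-40-40-4"
  resource_group_name        = var.resource_group_name
  virtual_network_gateway_id = var.vpn_gateway_id
  mode                       = "EgressSnat"
  type                       = "Static"

  # Address of the resource inside the Azure network.
  internal_mapping {
    address_space = "10.10.8.4/32"
    port_range    = "443"
  }

  # Address that the third-party sees and connects to.
  external_mapping {
    address_space = "40.40.40.4/32"
    port_range    = "443"
  }
}
```

The rule is static because it maps one internal address to one external address.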

Once you have the NAT rule, you will associate it with the Connection resource for the VPN.
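The association could be sketched like this, assuming the `egress_nat_rule_ids` argument of the `azurerm_virtual_network_gateway_connection` resource. The connection name and every input variable here are hypothetical placeholders, and the fragment is written to stand on its own.

```hcl
# Hypothetical inputs: replace with values from your own environment.
variable "resource_group_name" { type = string }
variable "location" { type = string }
variable "vpn_gateway_id" { type = string }           # Azure VPN Gateway
variable "local_network_gateway_id" { type = string } # Represents the third-party end
variable "egress_nat_rule_id" { type = string }       # ID of the egress NAT rule

variable "shared_key" {
  type      = string
  sensitive = true
}

resource "azurerm_virtual_network_gateway_connection" "third_party" {
  name                       = "con-third-party"
  location                   = var.location
  resource_group_name        = var.resource_group_name
  type                       = "IPsec"
  virtual_network_gateway_id = var.vpn_gateway_id
  local_network_gateway_id   = var.local_network_gateway_id
  shared_key                 = var.shared_key

  # Only connections that list the rule here have it applied.
  egress_nat_rule_ids = [var.egress_nat_rule_id]
}
```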

And that’s it – 10.10.8.4 will be available as 40.40.40.4 on TCP 443 to the third-party – no other connection can use this NAT rule unless it is associated with it.

Solution 5: NVA & NAT

This is almost the same as the previous example, but an NVA is used instead of the Azure VPN Gateway, maybe because you like their P2S VPN solution or you are using SD-WAN. The NAT rules are implemented in the NVA.