This post is a collection of my notes from the Ben Armstrong’s (Principal Program Manager Lead in Hyper-V) session (original here) on the features of WS2016 Hyper-V. The session is an overview of the features that are new, why they’re there, and what they do. There’s no deep-dives.

A Summary of New Features

Here is a summary of what was introduced in the last 2 versions of Hyper-V. A lot of this stuff still cannot be found in vSphere.

And we can compare that with what’s new in WS2016 Hyper-V (in blue at the bottom). There’s as much new stuff in this 1 release as there were in the last 2!

Security

The first area that Ben will cover is security. The number of attack vectors is up, attacks are on the rise, and the sophistication of those attacks is increasing. Microsoft wants Windows Server to be the best platform. Cloud is a big deal for customers – some are worried about industry and government regulations preventing adoption of the cloud. Microsoft wants to fix that with WS2016.

Shielded Virtual Machines

Two basic concepts:

- A VM can only run on a trusted & healthy host – a rogue admin/attacker cannot start the VM elsewhere. A highly secured Host Guardian Service must authorize the hosts.

- A VM is encrypted by the customer/tenant using BitLocker – a rogue admin/attacker/government agency cannot inspect the VM’s contents by mounting the disk(s).

There are levels of shielding, so it’s not an all or nothing.

Key Storage Drive for Generation 1 VMs

Shielding, as above, required Generation 2 VMs. You can also offer some security for Generation 1 virtual machines: Key Storage Drive. Not as secure as shielded virtual machines or virtual TPM, but it does give us a safe way to use BitLocker inside a Generation 1 virtual machine – required for older applications that depend on older operating systems (older OSs cannot be used in Generation 2 virtual machines).

Virtual Secure Mode (VSM)

We also have Guest Virtual Secure Mode:

- Credential Guard: protecting ID against pass-the-hash by hiding LSASS in a secured VM (called VSM) … in a VM with a Windows 10 or Windows Server 2016 guest OS! Malware running with admin rights cannot steal your credentials in a VM.

- Device Guard: Protect the critical kernel parts of the guest OS against rogue s/w, again, by hiding them in a VSM in a Windows 10 or Windows Server 2016 guest OS.

Secure Boot for Linux Guests

Secure boot was already there for Windows in Generation 2 virtual machines. It’s now there for Linux guest OSs, protecting the boot loader and kernel against root kits.

Host Resource Protection (HRP)

Ben hopes you never see this next feature in action in the field ![]() This is because Host Resource Protection is there to protect hosts/VMs from a DOS attack against a host by someone inside a VM. The scenario: you have an online application running in a VM. An attacker compromises the application (example: SQL injection) and gets into the guest OS of the VM. They’re isolated from other VMs by the hypervisor and hardware/DEP, so they attack the host using DOS, and consume resources.

This is because Host Resource Protection is there to protect hosts/VMs from a DOS attack against a host by someone inside a VM. The scenario: you have an online application running in a VM. An attacker compromises the application (example: SQL injection) and gets into the guest OS of the VM. They’re isolated from other VMs by the hypervisor and hardware/DEP, so they attack the host using DOS, and consume resources.

A new feature, from Azure, called HRP will determine that the VM is aggressively using resources using certain patterns, and start to starve it of resources, thus slowing down the DOS attack to the point of being pointless. This feature will be of particular interest to:

- Companies hosting external facing services on Hyper-V/Windows Azure Pack/Azure Stack

- Hosting companies using Hyper-V/Windows Azure Pack/Azure Stack

This is another great example of on-prem customers getting the benefits of Azure, even if they don’t use Azure. Microsoft developed this solution to protect against the many unsuccessful DOS attacks from Azure VMs, and we get it for free for our on-prem or hosted Hyper-V hosts. If you see this happening, the status of the VM will switch to Host Resource Protection.

Security Demos

Ben starts with virtual TPM. The Windows 10 VM has a virtual TPM enabled and we see that the C: drive is encrypted. He shuts down the VM to show us the TPM settings of the VM. We can optionally encrypt the state and live migration traffic of the VM – that means a VM is encrypted at rest and in transit. There is a “performance impact” for this optional protection, which is why it’s not on by default. Ben also enables shielding – and he loses console access to the VM – the only way to connect to the machine is to remote desktop/SSH to it.

Note: if he was running the full host guardian service (HGS) infrastructure then he would have had no control over shielding as a normal admin – only the HGS admins would have had control. And even the HGS admins have no control over BitLocker.

He switches to a Generation 1 virtual machine with Key Storage Drive enabled. BitLocker is running. In the VM settings (Generation 1) we see Security > Key Storage Drive Enabled. Under the hood, an extra virtual hard disk is attached to the VM (not visible in the normal storage controller settings, but visible in Disk Management in the guest OS). It’s a small 41 MB NTFS volume. The BitLocker keys are stored there instead of a TPM – virtual TPM is only in Generation 2, but it’s using the same sorts of tech/encryption/methods to secure the contents in the Key Storage Drive, but it cannot be as secure as virtual TPM, but it is better than not having BitLocker. Microsoft can make the same promises with data at rest encryption for Generation 1 VMs, but it’s still not as good as a Generation 2 VM with vTPM or even a shielded VM (requires Generation 2).

Availability

The next section is all about keeping services up and running in Hyper-V, whether it’s caused by upgrades or infrastructure issues. Everyone has outages and Microsoft wants to reduce the impact of these. Microsoft studied the common causes, and started to tackle them in WS2016

Cluster OS Rolling Upgrades

Microsoft is planning 2-3 updates per year for Nano Server, plus there’ll be other OS upgrades in the future. You cannot upgrade a cluster node. And in the past we could only do cluster-cluster migrations to adopt new versions of Windows Server/Hyper-V. Now, we can:

- Remove cluster node 1

- Rebuild cluster node 1 with the new version of Windows Server/Hyper-V

- Add cluster node 1 to the old cluster – the cluster runs happily in mixed-mode for a short period of time (weeks), with failover and Live Migration between the old/new OS versions.

- Repeat steps 1-3 until all nodes are up to date

- Upgrade the cluster functional level – Update-ClusterFunctionalLevel (see below for “Emulex incident”)

- Upgrade the VMs’ version level

Zero VM downtime, zero new hardware – 2 node cluster, all the way to a 64 node cluster.

If you have System Center:

- Upgrade to SCVMM 2016.

- Let it orchestrate the cluster upgrade (above)

Supports starts with WS2012 R2 to WS2016. Re-read that statement: there is no support for W2008/W2008 R2/WS2012. Re-read that last statement. No need for any questions now ![]()

To avoid an “Emulex incident” (you upgrade your hosts – and a driver/firmware fails even though it is certified, and the vendor is going to take 9 months to fix the issue) then you can actually:

- Do the node upgrades.

- Delay the upgrade to the cluster functional level for a week or two

- Test your hosts/cluster for driver/firmware stability

- Rollback the cluster nodes to the older OS if there is an issue –> only possible if the cluster functional level is on the older version.

And there’s no downtime because it’s all leveraging Live Migration.

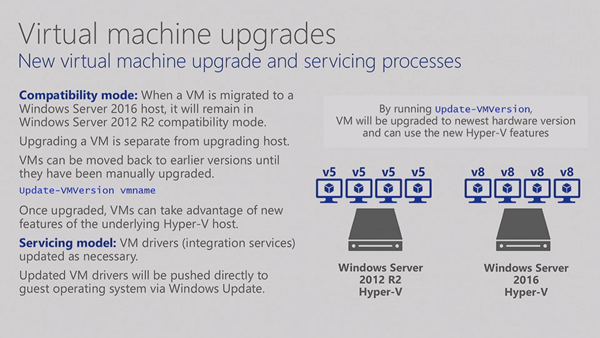

Virtual Machine Upgrades

This was done automatically when you moved a VM from version X to version X+1. Now you control it (for the above to work). Version 8 is WS2016 host support.

Failover Clustering

Microsoft identified two top causes of outages in customer environments:

- Brief storage “outages” – crashing the guest OS of a VM when an IO failed. In WS2016, when an IO fails, the VM is put in a paused-critical state (for up to 24 hours, by default). The VM will resume as soon as the storage resumes.

- Transient network errors – clustered hosts being isolated causing unnecessary VM failover (reboot), even if the VM was still on the network. A very common 30 seconds network outage will cause a Hyper-V cluster to panic up to and including WS2012 R2 – attempted failovers on every node and/or quorum craziness! That’s fixed in WS2016 – the VMs will stay on the host (in an unmonitored state) if they are still networked (see network protection from WS2012 R2). Clustering will wait (by default) for 4 minutes before doing a failover of that VM. If a host glitches 3 times in an hour it will be automatically quarantined, after resuming from the 3rd glitch, (VMs are then live migrated to other nodes) for 2 hours, allowing operator inspection.

Guest Clustering with Shared VHDX

Version 1 of this in WS2012 R2 was limited – supported guest clusters but we couldn’t do Live Migration, replication, or backup of the VMs/shared VHDX files. Nice idea, but it couldn’t really be used in production (it was supported, but functionally incomplete) instead of virtual fibre channel or guest iSCSI.

WS2016 has a new abstracted form of Shared VHDX – it’s even a new file format. It supports:

- Backup of the VMs at the host level

- Online resizing

- Hyper-V Replica (which should lead to ASR support) – if the workload is important enough to cluster, then it’s important enough to replicate for DR!

One feature that does not work (yet) is Storage Live Migration. Checkpoint can be done “if you know what you are doing” – be careful!!!

Replica Support for Hot-Add VHDX

We could hot-add a VHDX file to a VM, but we could not add that to replication if the VM was already being replicated. We had to re-replicate the VM! That changes in WS2016, thanks to the concept of replica sets. A new VHDX is added to a “not-replicated” set and we can move it to the replicated set for that VM.

Hot-Add Remove VM Components

We can hot-add and hot-remove vNICs to/from running VMs. Generation 2 VMs only, with any supported Windows or Linux guest OS.

We can also hot-add or hot-remove RAM to/from a VM, assuming:

- There is free RAM on the host to add to the VM

- There is unused RAM in the VM to remove from the VM

This is great for those VMs that cannot use Dynamic Memory:

- No support by the workload

- A large RAM VM that will benefit from guest-aware NUMA

A nice GUI side-effect is that guest OS memory demand is now reported in Hyper-V Manager for all VMs.

Production Checkpoints

Referring to what used to be called (Hyper-V) snapshots, but were renamed to checkpoints to stop dumb people from getting confused with SAN and VSS snapshots – yes, people really are that stupid – I’ve met them.

Checkpoints (what are now called Standard Checkpoints) were not supported by many applications in a guest OS because they lead to application inconsistency. WS2016 adds a new default checkpoint type called a Production Checkpoint. This basically uses backup technology (and IT IS STILL NOT A BACKUP!) to create an application consistent checkpoint of a VM. If you apply (restore) the checkpoint the VM:

- The VM will not boot up automatically

- The VM will boot up as if it was restoring from a backup (hey dumbass, checkpoints are STILL NOT A BACKUP!)

For the stupid people, if you want to backup VMs, use a backup product. Altaro goes from free to quite affordable. Veeam is excellent. And Azure Backup Server gives you OPEX based local backup plus cloud storage for the price of just the cloud component. And there are many other BACKUP solutions for Hyper-V.

Now with production checkpoints, MSFT is OK with you using checkpoints with production workloads …. BUT NOT FOR BACKUP!

Demos

Ben does some demos of the above. His demo rig is based on nested virtualization. He comments that:

- The impact of CPU/RAM is negligible

- There is around a 25% impact on storage IO

Storage

The foundation of virtualization/cloud that makes or breaks a deployment.

Storage Quality of Service (QOS)

We had a basic system in WS2012 R2:

- Set max IOPS rules per VM

- Set min IOPS alerts per VM that were damned hard to get info from (WMI)

And virtually no-one used the system. Now we get storage QoS that’s trickled down from Azure.

In WS2016:

- We can set reserves (that are applied) and limits on IOPS

- Available for Scale-Out File Server and block storage (via CSV)

- Metrics rules for VHD, VM, host, volume

- Rules for VHD, VM, service, or tenant

- Distributed rule application – fair usage, managed at storage level (applied in partnership by the host)

- PoSH management in WS2016, and SCVMM/SCOM GUI

You can do single-instance or multi-instance policies:

- Single-instance: IOPS are shared by a set of VMs, e.g. a service or a cluster, or this department only gets 20,000 IOPS.

- Multi-instance: the same rule is applied to a group of VMs, the same rule for a large set of VMs, e.g. Azure guarantees at least X IOPS to each Standard storage VHD.

Discrete Device Assignment – NVME Storage

DDA allows a virtual machine to connect directly to a device. An example is a VM connects directly to extremely fast NVME flash storage.

Note: we lose Live Migration and checkpoints when we use DDA with a VM.

Evolving Hyper-V Backup

Lots of work done here. WS2016 has it’s only block change tracking (Resilient Change Tracking) so we don’t need a buggy 3rd party filter driver running in the kernel of the host to do incremental backups of Hyper-V VMs. This should speed up the support of new Hyper-V versions by the backup vendors (except for you-know-who-yellow-box-backup-to-tape-vendor-X, obviously!).

Large clusters had scalability problems with backup. VSS dependencies have been lessened to allow reliable backups of 64 node clusters.

Microsoft has also removed the need for hardware VSS snapshots (a big source of bugs), but you can still make use of hardware features that a SAN can offer.

ReFS Accelerated VHDX Operations

Re-FS is the preferred file system for storing VMs in WS2016. ReFS works using metadata which links to data blocks. This abstraction allows very fast operations:

- Fixed VHD/X creation (seconds instead of hours)

- Dynamic VHD/X expansion

- Checkpoint merge, which impacts VM backup

Note, you’ll have to reformat WS2012 R2 ReFS to get the new version of ReFS.

Graphics

A lot of people use Hyper-V (directly or in Azure) for RDS/Citrix.

RemoteFX Improvements

The AVC444 thing is a lossless codec – lossless 3D rendering, apparently … that’s gobbledegook to me.

DDA Features and GPU Capabilities

We can also use DDA to connect VMs directly to CPUs … this is what the Azure N-Series VMs are doing with high-end NVIDIA GFX cards.

- DirectX, OpenGL, OpenCL, CUDA

- Guest OS: Server 2012 R2, Server 2016, Windows 10, Linux

The h/w requirements are very specific and detailed. For example, I have a laptop that I can do RemoteFX with, but I cannot use for DDA (SRIOV not supported on my machine).

Headless Virtual Machine

A VM can be booted without display devices. Reduces the memory footprint, and simulates a headless server.

Operational Efficiency

Once again, Microsoft is improving the administration experience.

PowerShell Direct

You can now to remote PowerShell into a VM via the VMbus on the host – this means you do not need any network access or domain join. You can do either:

- Enter-PSSession for an interactive session

- Invoke-Command for a once-off instruction

Supports:

- Host: Windows 10/WS2016

- Guest: Windows 10/WS2016

You do need credentials for the guest OS, and you need to do it via the host, so it is secure.

This is one of Ben’s favourite WS2016 features – I know he uses it a lot to build demo rigs and during demos. I love it too for the same reasons.

PowerShell Direct – JEA and Sessions

The following are extensions of PowerShell Direct and PowerShell remoting:

- Just Enough Administration (JEA): An admin has no rights with their normal account to a remote server. They use a JEA config when connecting to the server that grants them just enough rights to do their work. Their elevated rights are limited to that machine via a temporary user that is deleted when their session ends. Really limits what malware/attacker can target.

- Justin-Time Administration (JITA): An admin can request rights for a short amount of time from MIM. They must enter a justification, and company can enforce management approval in the process.

vNIC Identification

Name the vNICs and make that name visible in the guest OS. Really useful for VMs with more than 1 vNIC because Hyper-V does not have consistent device naming.

Hyper-V Manager Improvements

Yes, it’s the same MMC-based Hyper-V Manager that we got in W2008, but with more bells and whistles.

- Support for alternative credentials

- Connect to a host IP address

- Connect via WinRM

- Support for high-DPI monitors

- Manage WS2012, WS2012 R2 and WS2016 from one HVM – HVM in Win10 Anniversary Update (The big Redstone 1 update in Summer 2016) has this functionality.

VM Servicing

MS found that the vast majority of customers never updated the Integration services/components (ICs) in the guest OS of VMs. It was a horrible manual process – or one that was painful to automate. So customers ran with older/buggy versions of ICs, and VMs often lacked features that the host supported!

ICs are updated in the guest OS via Windows Update on WS2016. Problem sorted, assuming proper testing and correct packaging!

MSFT plans to release IC updates via Windows Update to WS2012 R2 in a month, preparing those VMs for migration to WS2016. Nice!

Core Platform

Ben was running out of time here!

Delivering the Best Hyper-V Host Ever

This was the Nano Server push. Honestly – I’m not sold. Too difficult to troubleshoot and a nightmare to deploy without SCVMM.

I do use Nano in the lab. Later, Ben does a demo. I’d not seen VM status in the Nano console before, which Ben shows – the only time I’ve used the console is to verify network settings that I set remotely using PoSH ![]() There is also an ability to delete a virtual switch on the console.

There is also an ability to delete a virtual switch on the console.

Nested Virtualization

Yay! Ben admits that nested virtualization was done for Hyper-V Containers on Azure, but we people requiring labs or training environments can now run multiple working hosts & clusters on a single machine!

VM Configuration File

Short story: it’s binary instead of XML, improving performance on dense hosts. Two files:

- .VMCX: Configuration

- .VMRS: Run state

Power Management

Client Hyper-V was impacted badly by Windows 8 era power management features like Connected Standby. That included Surface devices. That’s sorted now.

Development Stuff

This looks like a seed for the future (and I like the idea of what it might lead to, and I won’t say what that might be!). There is now a single WMI (Root\HyperVCluster\v2) view of the entire Hyper-V cluster – you see a cluster as one big Hyper-V server. It really doesn’t do much now.

And there’s also something new called Hyper-V sockets for Microsoft partners to develop on. An extension of the Windows Socket API for “fast, efficient communication between the host and the guest”.

Scale Limits

The numbers are “Top Gear stats” but, according to a session earlier in the week, these are driven by Azure (Hyper-V’s biggest customer). Ben says that the numbers are nuts and we normals won’t ever have this hardware, but Azure came to Hyper-V and asked for bigger numbers for “massive scale”. Apparently some customers want massive super computer scale “for a few months” and Azure wants to give them an OPEX offering so those customers don’t need to buy that h/w.

Note Ben highlights a typo in max RAM per VM: it should say 12 TB max for a VM … what’s 4 TB between friends?!?!

Ben wraps up with a few demos.