Speakers: Saurabh Sensharma & Shivam Garg

Saurabh starts. He shows a real ransomware email. The ransom was 1.7 bitcoins for 1 PC or 29 bitcoins for all PCs. Part of the restore process was to send files to the attacker to prove that decryption works. The two files the customer sent contained customer data! An incident like this has GDPR implications, damages the brand, and so on.

Secure Backup is Your Last Line of Defense

Azure Backup – a built-in service. Lower and predictable TCO. Can be zero-infrastructure. And it offers trust-no-one encryption and secure backups.

Shivam comes up. He’s going to play the role of the customer in this session.

Question: Backup is decades old – what has changed?

Digital transformation. People using the cloud to transform on-prem IT, even if it stays on-prem.

Shivam: Backup should be like a checkbox. Customers want a seamless experience. Backup should not be a distraction.

Azure Backup releases you from the management of a backup infrastructure. Azure Backup is built on:

- Scalability

- Availability

- Resilience

Shivam: What does this “built-in” mean if I have a three-tier .Net app running in the cloud?

We see a demo of restoring a SQL Server database in an Azure VM. We see that point-in-time restore is an option because there are log backups. Saurabh shows the process to back up SQL Server in Azure VMs. He highlights “auto-protect” – if the instance is being protected then all the databases (even new ones that are created later) are backed up.
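Why do log backups make point-in-time restore possible? Here is a minimal sketch of the general idea, not Azure Backup's implementation: start from the latest full backup at or before the target time, then replay log backups in order until one reaches the target. The function name `restore_chain` and the timestamps are my own illustration, and differential backups are ignored for simplicity.

```python
from datetime import datetime
from typing import List, Tuple


def restore_chain(
    full_backups: List[datetime],
    log_backups: List[datetime],
    target: datetime,
) -> Tuple[datetime, List[datetime]]:
    """Pick the full backup and the log backups needed to reach `target`.

    Each timestamp is the moment a backup was taken. A log backup taken at
    time t is assumed to cover every transaction up to t.
    """
    bases = [f for f in full_backups if f <= target]
    if not bases:
        raise ValueError("no full backup at or before the target time")
    base = max(bases)
    if base == target:
        return base, []

    chain: List[datetime] = []
    for log in sorted(l for l in log_backups if l > base):
        chain.append(log)
        if log >= target:
            # This log backup contains the target time; stop replaying here.
            return base, chain
    raise ValueError("log backups do not cover the target time")
```

Without any log backups, the only restorable points would be the full backups themselves.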

Saurabh demos creating a new VM. He highlights the option to enable backup during the VM creation – something many didn’t know was possible when this option wasn’t in the VM creation process. VMs are backed up using a snapshot in local storage. 7 of those are kept, and the incremental is sent to the recovery services vault. If you want to restore from a recent backup, you can restore very quickly from the snapshot.
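To make the snapshot-versus-vault distinction concrete, here is a small sketch of how a restore could be routed. This is my own illustration: I am assuming "7 are kept" means the seven most recent recovery points still have a local snapshot, and the names `SNAPSHOTS_KEPT` and `restore_source` are hypothetical.

```python
SNAPSHOTS_KEPT = 7  # figure quoted in the session


def restore_source(point_index: int, snapshots_kept: int = SNAPSHOTS_KEPT) -> str:
    """Say where a recovery point would be restored from.

    point_index 0 is the most recent recovery point, 1 the one before, etc.
    """
    if point_index < 0:
        raise ValueError("point_index must be zero or greater")
    # Recent points still have a local snapshot, so the restore is fast;
    # older points only exist as incremental data in the vault.
    return "local-snapshot" if point_index < snapshots_kept else "vault"
```

So `restore_source(0)` would come from the local snapshot, while the eighth-newest point (`restore_source(7)`) would have to come from the recovery services vault.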

A new restore option is coming soon – Replace Existing (virtual machine). They place the existing disks of the VM into a staging location – this gives them a rollback if something goes wrong. Then the disks of the VM are replaced from backup. So this solves the availability set issue.

Under the Covers – SQL

Anything that has a native backup engine is referred to as enlightened. Azure Backup talks to the SQL Backup Engine using native APIs via the Azure Backup plugin for SQL (a VM extension). They ask the SQL Backup Engine to create the backup. Data is temporarily stored in VM storage. Then there is an HTTPS transfer, using incremental backups, to the RSV, where the data is encrypted at rest using SSE.

It’s all built-in. No manual agents, no backup servers, etc.

Non-Enlightened VM Workloads

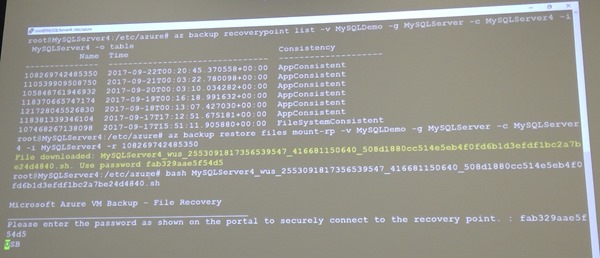

E.g. MySQL in a VM. Azure Backup can call a pre-script. This can instruct MySQL to freeze transactions to disk. When you recover, there’s no need to do a fixup. A snapshot of the disks is taken, enabling a backup. And then a post-script is called and the database is thawed. Application providers typically share these pre- and post-scripts on GitHub.
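The ordering described above (pre-script, snapshot, post-script) can be sketched as follows. This is only an illustration of the sequence; the callables stand in for whatever the real scripts do, and `consistent_snapshot` is a name I made up. The `finally` is my own design choice so the database is thawed even if the snapshot fails.

```python
from typing import Callable, TypeVar

T = TypeVar("T")


def consistent_snapshot(
    pre_script: Callable[[], None],
    take_snapshot: Callable[[], T],
    post_script: Callable[[], None],
) -> T:
    """Run freeze -> snapshot -> thaw, always thawing afterwards."""
    pre_script()  # e.g. ask the database to freeze
    try:
        return take_snapshot()  # disks are in a consistent state here
    finally:
        post_script()  # thaw the database, even if the snapshot failed
```

A quick way to try it is to pass three lambdas that append "freeze", "snapshot", and "thaw" to a list and check the order afterwards.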

VM Backup

An extension is in every Azure VM. The extension associates itself with a backup policy that you select in the RSV. A command is sent to the backup extension. This executes a snapshot (VSS for Windows). It’s an Instant Recovery Snapshot in the VM storage. Then an HTTPS transfer sends incremental blocks to SSE storage.
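What does "incremental blocks" mean in practice? The session did not say how Azure Backup detects changed blocks, so the following is a generic sketch of one common approach (comparing per-block hashes), not the product's method. The tiny `BLOCK_SIZE` and the function names are assumptions for the demo.

```python
import hashlib
from typing import Dict, List

BLOCK_SIZE = 4  # unrealistically small, just to keep the demo readable


def block_hashes(data: bytes, block_size: int = BLOCK_SIZE) -> List[str]:
    """Hash each fixed-size block of `data`."""
    return [
        hashlib.sha256(data[i:i + block_size]).hexdigest()
        for i in range(0, len(data), block_size)
    ]


def changed_blocks(
    previous: bytes, current: bytes, block_size: int = BLOCK_SIZE
) -> Dict[int, bytes]:
    """Return {block index: block bytes} for blocks that are new or changed."""
    old = block_hashes(previous, block_size)
    changed: Dict[int, bytes] = {}
    for index, start in enumerate(range(0, len(current), block_size)):
        block = current[start:start + block_size]
        if index >= len(old) or hashlib.sha256(block).hexdigest() != old[index]:
            changed[index] = block
    return changed
```

Only the blocks returned by `changed_blocks` would need to cross the wire, which is why an incremental transfer is much smaller than a full copy of the disk.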

Azure Disk Encryption

KEK and BEK keys are stored in Azure Key Vault. These are also protected when you back up the VM. This ensures that the files can be unlocked when restored.

Up to 1000 VMs can be protected in a single RSV now.

Azure VM Restore

VM restore options:

- Files

- Disks

- VM

- Replace Disks

Replace Disks (new):

- They snapshot-copy the VM’s disks to a staging location. This allows a rollback if the process breaks.

- They replace the disks by restore.

This (confirmed) is how restoring a VM will allow you to keep availability set membership.
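The Replace Disks flow (stage the existing disks, swap in the restored ones, roll back on failure) can be sketched like this. The dictionary shape, the `restore` callable, and the function name are all hypothetical; the point is only that the VM object itself, and so its availability set membership, is never recreated.

```python
from typing import Callable, Dict, List


def replace_disks(
    vm: Dict[str, object],
    backup_disks: List[str],
    restore: Callable[[List[str]], List[str]],
) -> List[str]:
    """Swap the VM's disks for restored ones, keeping a staged copy."""
    staged = list(vm["disks"])  # copy of the existing disks (the "staging location")
    try:
        vm["disks"] = restore(backup_disks)
    except Exception:
        vm["disks"] = staged  # roll back to the disks we staged
        raise
    return staged  # caller can discard these once the restore is verified
```

Because only `vm["disks"]` changes, every other property of the VM, such as a hypothetical `vm["availability_set"]` entry, is untouched by the restore.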

Azure File Sync

The MS sync/tiering solution. Everything is stored in the cloud. So you can move on-prem backup for file servers to the cloud. Demo of deleting a file and restoring it. Saurabh clicks Manage Backups in the Azure File Share and clicks File Recovery and goes through the process.

When the backup API triggers a backup of Files, it pauses sync to create a snapshot. After the snapshot, the sync resumes. That gives them a file-consistent backup.

On-Prem Resources

There is no Azure File Sync in this scenario, but they want to use cloud backup without a backup server. This scenario is Azure Backup MARS agent with Windows Admin Center. A demo of enabling Azure Backup protection via the WAC.

Deleting Backup

- Malware cannot delete your backups because this task requires you to manually generate a PIN in the Azure Portal (human authentication)

- If a human maliciously deletes a backup, Azure Backup retains backups for 14 days. And it will send an email to the registered notification address(es).
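The 14-day retention after a deletion boils down to simple date arithmetic. Here is a sketch using the figure from the session; the names `RETENTION`, `purge_time`, and `can_recover` are mine, and the exact cut-off behavior in the real service is an assumption.

```python
from datetime import datetime, timedelta

RETENTION = timedelta(days=14)  # retention period quoted in the session


def purge_time(deleted_at: datetime) -> datetime:
    """When the deleted backup data would finally be removed."""
    return deleted_at + RETENTION


def can_recover(deleted_at: datetime, now: datetime) -> bool:
    """True while the deleted backup is still inside the retention window."""
    return now < purge_time(deleted_at)
```

For a backup deleted on 1 October, `can_recover` stays true until 15 October, which gives the notification email time to reach someone who can act on it.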

Security

- Security PIN for critical tasks

- Azure Disk Encryption support

- SSE encryption at rest, with TLS 1.2 in transit

- RBAC for roles

- Alerts in the portal and via notifications

- On-server encryption (on-prem) before transport to Azure

Rich Management

Questions:

- What’s my storage consumption?

- Are my backups healthy?

- Can I get insights by looking at trends?

This is the sort of stuff that normally requires a lot of on-prem infrastructure. Azure Backup leverages Azure features, such as a Storage Account. No infrastructure, enterprise-wide, and it uses an open data model (published online on docs.microsoft.com) that anyone can use (Kusto, etc.). You can also integrate with ITSM tools such as Service Manager, ServiceNow, and more.

Custom reports.

And … cross-tenant support! Yay! This is a big deal for partners. It’s a Power BI solution. It’s a content pack that you can import. It ingests Azure reporting data from a storage account.

Once you set this up, it takes up to 24 hours to get data moving, and then it’s real-time after that.

Roadmap

Cloud resources:

- Azure VM backup – Standard SSD, resource improvements, 16+ disks, cross-region support

- Azure Files Backup: Premium Files, 5 TB+ shares, ACL, secondary backups.

- Workloads: SAP HANA, SQL in Azure VM GA.

Availability Zones:

- ZRS

- Recovery from cross-zone backups

And more that I couldn’t grab in time.