I’m going to do my best (no guarantees – I only have one body and pair of ears/eyes and NDA stuff is hard to track!) to update this page with a listing of each new feature in Windows Server 2016 (WS2016) Hyper-V and Hyper-V Server 2016 after they are discussed publicly by Microsoft. The links will lead to more detailed descriptions of each feature.

Note that the features of WS2012 can be found here and the features of WS2012 R2 can be found here.

This list was last updated on 25/May/2015 (during Technical Preview 2).

Active memory dump

Windows Server 2016 introduces a dump type of “Active memory dump”, which filters out most memory pages allocated to VMs, making the memory.dmp file much smaller and easier to save/copy.

Azure Stack

A replacement for Windows Azure Pack (WAPack), bringing the code of the “Ibiza” “preview portal” of Azure to on-premises for private cloud or hosted public cloud. Uses providers to interact with Windows Server 2016. Does not require System Center, but you will want management for some things (monitoring, Hyper-V Network Virtualization, etc).

Azure Storage

A post-RTM update (flight) will add support for blobs, tables, and storage accounts, allowing you to deploy Azure storage on-premises or in hosted solutions.

Backup Change Tracking

Microsoft will include change tracking so third-party vendors do not need to update/install dodgy kernel level file system filters for change tracking of VM files.

Binary VM Configuration Files

Microsoft is moving away from text-based files to increase scalability and performance.

Cluster Cloud Witness

You can use Azure storage as a witness for quorum for a multi-site cluster. Stores just an incremental sequence number in an Azure Storage Account, secured by an access key.
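To show why a third vote hosted outside both sites matters, here is a minimal sketch of majority voting. It assumes a static vote count with one vote per node plus one for the witness. The function name and parameters are made up for illustration, and it ignores the dynamic quorum behaviour of a real cluster.

```python
def has_quorum(nodes_up: int, total_nodes: int, witness_reachable: bool) -> bool:
    """Return True if this partition holds a strict majority of all votes.

    Simplified model (an assumption, not the real cluster algorithm):
    every node has one vote and the cloud witness has one vote.
    """
    total_votes = total_nodes + 1  # all nodes plus the witness
    votes_held = nodes_up + (1 if witness_reachable else 0)
    return votes_held > total_votes // 2


# Two sites with two nodes each; the inter-site link fails.
site_a_survives = has_quorum(nodes_up=2, total_nodes=4, witness_reachable=True)
site_b_survives = has_quorum(nodes_up=2, total_nodes=4, witness_reachable=False)
```

In this model only the site that can still reach the witness keeps running, which is the point of putting the witness somewhere that neither site hosts.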

Cluster Compute Resiliency

Prevents the cluster from failing a host too quickly after a transient error. A host will go into isolation, allowing services to continue to run without disruptive failover.

Cluster Functional Level

A rolling upgrade requires mixed-mode clusters, i.e. WS2012 R2 and Windows Server vNext hosts in the same cluster. The cluster will stay at the WS2012 R2 functional level until you finish the rolling upgrade and then manually increase the cluster functional level (one-way).
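The one-way nature of the functional level can be sketched as a tiny state model. Everything here is hypothetical: the `Cluster` class, its method names, and the level numbers are placeholders for illustration and are not the clustering API.

```python
from dataclasses import dataclass, field
from typing import List

# Placeholder level numbers, chosen only for this illustration.
LEVEL_WS2012R2 = 8
LEVEL_VNEXT = 9


@dataclass
class Cluster:
    functional_level: int = LEVEL_WS2012R2
    node_versions: List[int] = field(default_factory=list)

    def add_node(self, version: int) -> None:
        # Once the level has been raised, older hosts can no longer join.
        if version < self.functional_level:
            raise RuntimeError("node OS is older than the cluster functional level")
        self.node_versions.append(version)

    def rebuild_node(self, index: int) -> None:
        # Models rebuilding one host in place with the newer OS.
        self.node_versions[index] = LEVEL_VNEXT

    def raise_functional_level(self) -> None:
        # Manual step, allowed only when no down-level host remains.
        if not self.node_versions or any(v < LEVEL_VNEXT for v in self.node_versions):
            raise RuntimeError("mixed-mode cluster: finish the rolling upgrade first")
        self.functional_level = LEVEL_VNEXT
```

While any host is still on the older OS the level cannot be raised, and after it is raised there is no method to lower it again, which mirrors the one-way behaviour described above.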

Cluster Quarantine

If a cluster node is flapping (going into & out of isolation too often) then the cluster will quarantine that node and drain it of resources (Live Migration – see MoveTypeThreshold and DefaultMoveType).
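Flapping detection boils down to counting isolation events inside a sliding time window. The sketch below assumes a threshold of 3 events per hour purely for illustration. The class, its attributes, and the default values are hypothetical and are not taken from the cluster's real settings.

```python
from collections import deque


class NodeHealth:
    """Tracks isolation events for one node (illustrative model only)."""

    def __init__(self, threshold: int = 3, window_seconds: float = 3600.0):
        self.threshold = threshold      # assumed value, for illustration
        self.window = window_seconds    # assumed value, for illustration
        self.isolations = deque()       # timestamps of recent isolation events
        self.quarantined = False

    def record_isolation(self, now: float) -> bool:
        """Record one isolation event; return True if the node is quarantined."""
        self.isolations.append(now)
        # Forget events that have fallen out of the sliding window.
        while self.isolations and now - self.isolations[0] > self.window:
            self.isolations.popleft()
        if len(self.isolations) >= self.threshold:
            self.quarantined = True
        return self.quarantined
```

A node that goes into isolation three times in a few minutes would be quarantined in this model, while a node that has one transient blip every couple of hours would not.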

Cluster Rolling Upgrade

You do not need to create a new cluster or do a cluster migration to get from WS2012 R2 to Windows Server vNext. The new process allows hosts in a cluster to be rebuilt IN THE EXISTING cluster with Windows Server vNext.

Containers

Deploy born-in-the-cloud stateless applications using Windows Server Containers or Hyper-V Containers.

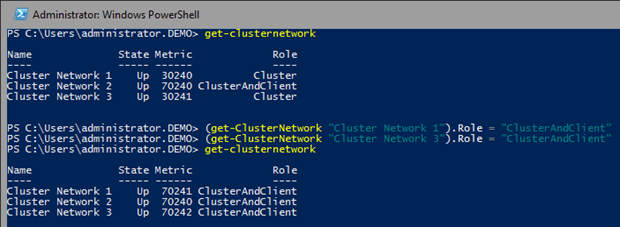

Converged RDMA

Remote Direct Memory Access (RDMA) NICs (rNICs) can be converged to share both tenant and host storage/clustering traffic roles.

Delivery of Integration Components

This will be done via Windows Update.

Differential Export

Export just the changes between 2 known points in time. Used for incremental file-based backup.

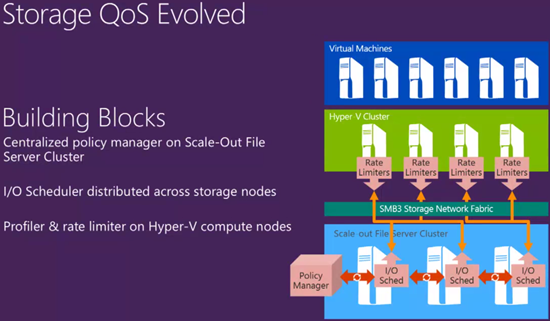
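The idea behind a differential export can be sketched by modelling a virtual disk at each point in time as a mapping of block number to block contents. This is a toy model under that assumption: the function names are made up, and it says nothing about how Hyper-V actually tracks or stores the changes.

```python
from typing import Dict


def differential_export(older: Dict[int, bytes], newer: Dict[int, bytes]) -> Dict[int, bytes]:
    """Return only the blocks that were added or changed between two points in time."""
    return {block: data for block, data in newer.items() if older.get(block) != data}


def apply_export(base: Dict[int, bytes], delta: Dict[int, bytes]) -> Dict[int, bytes]:
    """Rebuild the newer state from the older state plus the exported changes.

    Blocks removed between the two points are not handled in this sketch.
    """
    merged = dict(base)
    merged.update(delta)
    return merged


monday = {0: b"boot", 1: b"data-v1", 2: b"logs"}
tuesday = {0: b"boot", 1: b"data-v2", 2: b"logs", 3: b"new"}
delta = differential_export(monday, tuesday)
```

Here `delta` holds only blocks 1 and 3, so an incremental backup needs to copy two blocks rather than four.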

Distributed Storage QoS

Enable per-virtual hard disk QoS for VMs stored on a Scale-Out File Server, possibly also available for SANs.

File-Based Backup

Hyper-V is decoupling from volume backup for scalability and reliability reasons.

Host Resource Protection

An automated process for restricting resource availability to VMs that display unwanted “patterns of access”.

Hot-Add & Hot-Remove of vNICs

You can hot-add and hot-remove virtual NICs to/from a running virtual machine.

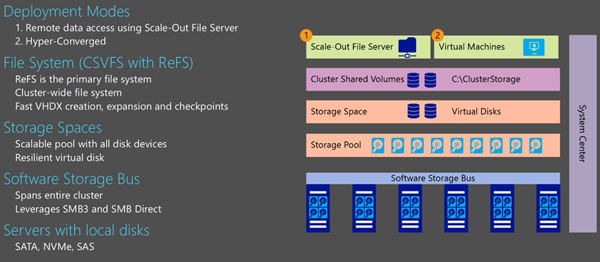

Hyper-convergence

This is made possible with Storage Spaces Direct and is aimed initially at smaller deployments.

Hyper-V Cluster Management

A new administration model that allows tools to abstract the cluster as a single host. Enables much easier VM management, visible initially with PowerShell (e.g. Get-VM, etc).

Hyper-V Replica & Hot Add of Disks

You can add disks to a virtual machine that is already being replicated. Later you can add the disks to the replica set using Set-VMReplication.

Hyper-V Manager Alternative Credentials

With CredSSP-enabled PCs and hosts, you can connect to a host with alternative credentials.

Hyper-V Manager Down-Level Support

You can manage Windows Server vNext, WS2012 R2 and WS2012 Hyper-V from a single console.

Hyper-V Manager WinRM

WinRM is used to connect to hosts.

MS-SQOS

This is a new protocol for Microsoft Storage QoS. It uses SMB 3.0 as a transport, and it describes the conversation between Hyper-V compute nodes and the SOFS storage nodes. IOPS, latency, initiator names, and initiator node information are sent from the compute nodes to the storage nodes. The storage nodes send back the enforcement commands to limit flows, etc.

Nested Virtualization

Yes, you read that right! Required for Hyper-V containers in a hosted environment, e.g. Azure. A side effect is that WS2016 Hyper-V can run in WS2016 via virtualization of VT-x.

Network Controller

A new fabric management feature built into Windows Server, offering many new features that we see in Azure. Examples are a distributed firewall and software load balancer.

Online Resize of Memory

Change memory of running virtual machines that don’t have Dynamic Memory enabled.

Power Management

Hyper-V has expanded support for power management, including Connected Standby.

PowerShell Direct

Target PowerShell at VMs via the hypervisor (VMbus) without requiring network access. You still need local admin credentials for the guest OS.

Pre-Authentication Integrity

This is a security feature for when one machine talks to another via SMB 3.1.1. It uses checks on the sender & recipient sides to ensure that there is no man-in-the-middle.
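The check works by having both ends keep a running SHA-512 hash over the negotiation messages they send and receive, so that any tampering in transit leaves the two sides with different values. A minimal sketch of that hash chain follows. The message bytes are placeholders rather than real SMB packets, and the function name is made up for illustration.

```python
import hashlib
from typing import Iterable


def preauth_integrity_hash(messages: Iterable[bytes]) -> bytes:
    """Chain SHA-512 over each message, starting from 64 zero bytes."""
    value = bytes(64)
    for message in messages:
        value = hashlib.sha512(value + message).digest()
    return value


# Placeholder byte strings standing in for the negotiate exchange.
sent_by_client = [b"negotiate-request", b"negotiate-response"]
seen_by_server = [b"negotiate-request", b"negotiate-response"]
tampered_view = [b"negotiate-request (downgraded)", b"negotiate-response"]

client_hash = preauth_integrity_hash(sent_by_client)
server_hash = preauth_integrity_hash(seen_by_server)
tampered_hash = preauth_integrity_hash(tampered_view)
```

If a man-in-the-middle alters a message, for example to downgrade the negotiated capabilities, the two ends arrive at different hash values and the session cannot be validated.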

Production Checkpoints

Using VSS in the guest OS to create consistent snapshots that workload services should be able to support. Applying a checkpoint is like performing a VM restore from backup.

Nano Server

A new installation option that allows you to deploy headless Windows Servers with a tiny install footprint and no UI of any kind. Intended for storage and virtualization scenarios at first. There will be a web version of admin tools that you can deploy centrally.

RDMA to the Host

Remote Direct Memory Access will be supported to the management OS virtual NICs via converged networking.

ReFS Accelerated VHDX Operations

Operations are accelerated by converting them into metadata operations: fixed VHDX creation, dynamic VHDX extension, merge of checkpoints (better file-based backup).

RemoteFX

The OpenGL 4.4 and OpenCL 1.1 APIs are supported.

Replica Support for Hot-Add of VHDX

When you hot-add a VHDX to a running VM that is being replicated by Hyper-V Replica, the VHDX is available to be added to the replica set (MSFT doesn’t assume that you want to replicate the new disk).

Replica support for Cross-Version Hosts

Your hosts can be of different versions.

Runtime Memory Resize

You can increase or decrease the memory assigned to Windows Server vNext guests.

Secure Boot for Linux

Enable protection of the boot loader in Generation 2 VMs.

Shared VHDX Improvements

You will be able to do host-based snapshots of Shared VHDX (so you get host-level backups) and guest clusters. You will be able to hot-resize a Shared VHDX.

Shared VHDX will have its own hardware category in the UI. Note that there is a new file format for Shared VHDX. There will be a tool to upgrade existing files.

Shielded Virtual Machines

A new security model that hardens Hyper-V and protects virtual machines against unwanted tampering at the fabric level.

SMB 3.1.1

This is a new version of the data transport protocol. The focus has been on security. There is support for mixed mode clusters so there is backwards compatibility. SMB 3.02 is now called SMB 3.0.2.

SMB Negotiated Encryption

Moving from AES CCM to AES GCM (Galois Counter Mode) for efficiency and performance. It will leverage modern CPUs that have instructions for AES encryption to offload the heavy lifting.

SMB Forced Encryption

In older versions of SMB, SMB encryption was opt-in on the client side. This is no longer the case in the next version of Windows Server.

Storage Accounts

A later release of WS2016 will bring support for hosting Azure-style Storage accounts, meaning that you can deploy Azure-style storage on-premises or in a hosted cloud.

Storage Replica

Built-in, hardware-agnostic, synchronous and asynchronous replication of Windows storage, performed at the block level (volume-based). Enables campus or multi-site clusters.

Requires GPT. Source and destination need to be the same size. Need low latency. Finish the solution with the Cluster Cloud Witness.

Storage Spaces Direct (S2D)

A “low cost” solution for VM storage. A cluster of nodes using internal (DAS) disks (SAS or SATA, SSD, HDD, or NVMe) to create consistent Storage Spaces pools that stretch across the servers. Compute is normally on a different cluster (converged) but it can be on one tier (hyper-converged).

Storage Transient Failures

Avoid VM bugchecks when storage has a transient issue. The VM freezes while the host retries to get storage back online.

Stretch Clusters

The preferred term for when Failover Clustering spans two sites.

System Center 2016

Those of you who can afford the per-host SMLs will be able to get System Center 2016 to manage your shiny new Hyper-V hosts and fabric.

System Requirements

The system requirements for a server host have been increased. You now must have support for Second-Level Address Translation (SLAT), known as Intel EPT or AMD RVI or NPT. Previously SLAT (Intel Nehalem and later) was recommended but not required on servers and required on Client Hyper-V. It shouldn’t be an issue for most hosts because SLAT has been around for quite some time.

Virtual Machine Groups

Group virtual machines for operations such as orchestrated checkpoints (even with shared VHDX) or group checkpoint export.

Virtual Machine ID Management

Control whether a VM keeps the same ID as before or gets a new one when you import it.

Virtual Network Adapter Identification

Not vCDN! You can create/name a vNIC in the settings of a VM and see the name in the guest OS.

Virtual Secure Mode (VSM)

A feature of Windows 10 Enterprise that protects LSASS (secret keys) from pass-the-hash attacks by storing the process in a stripped-down Hyper-V virtual machine.

Virtual TPM (vTPM)

A feature of shielded virtual machines that enables secure boot, disk encryption within the virtual machine, and VSC.

VM Storage Resiliency

A VM will pause when the physical storage of that VM goes offline. Allows the storage to come back (maybe Live Migration) without crashing the VM.

VM Upgrade Process

VM versions are upgraded manually, allowing VMs to be migrated back down to WS2012 R2 hosts with support from Microsoft.

VXLAN Support

The new Network Controller will support VXLAN as well as the incumbent NVGRE for network virtualization.

Windows Containers

This is Docker in Windows Server, enabling services to run in containers on a shared set of libraries on an OS, giving you portability, per-OS density, and fast deployment.