Here are my notes from TechEd NA session WSV410, by Claus Joergensen. A really good deep session – the sort I love to watch (very slowly, replaying bits over). It took me 2 hours to watch the first 50 or so minutes 🙂

For Server Applications

The Scale-Out File Server (SOFS) is not for direct sharing of user data. MSFT intend it for:

- Hyper-V: store the VMs via SMB 3.0

- SQL Server database and log files

- IIS content and configuration files

Required a lot of work by MSFT: change old things, create new things.

Benefits of SOFS

- Share management instead of LUNs and Zoning (software rather than hardware)

- Flexibility: Dynamically reallocate servers in the data centre without reconfiguring network/storage fabrics (SAN fabric, DAS cables, etc)

- Leverage existing investments: you can reuse what you have

- Lower CapEx and OpEx than traditional storage

Key Capabilities Unique to SOFS

- Dynamic scale with active/active file servers

- Fast failure recovery

- Cluster Shared Volume cache

- CHKDSK with zero downtime

- Simpler management

Requirements

Client and server must be WS2012:

- SMB 3.0

- It is application workload, not user workload.

Setup

I’ve done this a few times. It’s easy enough:

- Install the File Server and Failover Clustering features on all nodes in the new SOFS

- Create the cluster

- Create the CSV(s)

- Create the File Server role – a clustered role that has its own CAP (including associated computer object in AD) and IP address.

- Create file shares in Failover Cluster Manager. You can manage them in Server Manager.

Simple!

Personally speaking: I like the idea of having just 1 share per CSV. Keeps the logistics much simpler. Not a hard rule from MSFT AFAIK.

And here’s the PowerShell for it:

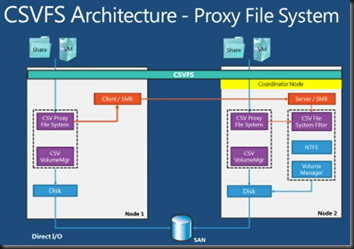

CSV

- Fundamental and required. It’s a cluster file system that is active/active.

- Supports most of the NTFS features.

- Direct I/O support for file data access: whatever node a client comes in via (e.g. Node 2), that node has direct access to the back end storage.

- Caching of CSVFS file data (controlled by oplocks)

- Leverages SMB 3.0 Direct and Multichannel for internode communication

Redirected IO:

- Metadata operations – hence not for end user data direct access

- For data operations when a file is being accessed simultaneously by multiple CSVFS instances.

CSV Caching

- Windows Cache Manager integration: Buffered read/write I/O is cached the same way as NTFS

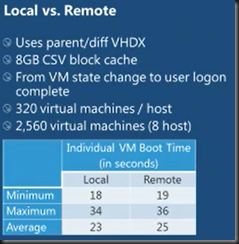

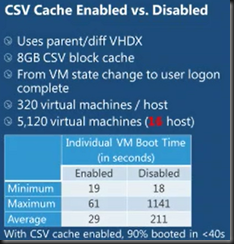

- CSV Block Caching – read only cache using RAM from nodes. Turned on per CSV. Distributed cache guaranteed to be consistent across the cluster. Huge boost for pooled VDI deployments – esp. during boot storm.

CHKDSK

Seamless with CSV. Scanning is online and separated from repair. CSV repair is online.

- Cluster checks once/minute to see if chkdsk spotfix is required

- Cluster enumerates NTFS $corrupt (contains listing of fixes required) to identify affected files

- Cluster pauses the affected CSVFS to pend I/O

- Underlying NTFS is dismounted

- CHKDSK spotfix is run against the affected files for a maximum of 15 seconds (usually much quicker) to ensure the application is not affected

- The underlying NTFS volume is mounted and the CSV namespace is unpaused

The only time an application is affected is if it had a corrupted file.

If it could not complete the spotfix of all the $corrupt records in one go:

- Cluster will wait 3 minutes before continuing

- Enables a large set of corrupt files to be processed over time with no app downtime – assuming the apps’ files aren’t corrupted – where obviously they would have had downtime anyway
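To get a feel for how the 15 second budget and the 3 minute wait interact, here is a minimal Python simulation of the repair passes. It is only a sketch of the behaviour described in the session: the per-file repair costs are made up, and the assumption that a pass stops at a file boundary (rather than mid-repair) is mine, not something the session stated.

```python
from collections import deque

SPOTFIX_BUDGET_SECONDS = 15   # max pause per pass (from the session)
RETRY_WAIT_SECONDS = 180      # wait before the next pass (from the session)


def run_spotfix_passes(corrupt_records, fix_cost_seconds):
    """Simulate spotfix passes over the records listed in $corrupt.

    corrupt_records: file names needing repair.
    fix_cost_seconds: hypothetical dict of file name -> seconds to repair.
    Returns a list of (files_fixed, pause_seconds), one entry per pass.
    """
    pending = deque(corrupt_records)
    passes = []
    while pending:
        elapsed = 0.0
        fixed = []
        # Assumption: a repair only starts if it fits in the remaining budget.
        while pending and elapsed + fix_cost_seconds[pending[0]] <= SPOTFIX_BUDGET_SECONDS:
            name = pending.popleft()
            elapsed += fix_cost_seconds[name]
            fixed.append(name)
        if not fixed:
            # Simulation guard: one repair alone exceeds the budget.
            raise ValueError(f"{pending[0]} cannot be fixed within the budget")
        passes.append((fixed, elapsed))
    return passes


def total_wall_clock(passes):
    """CSV pause time plus the 3 minute waits between passes."""
    pauses = sum(pause for _, pause in passes)
    waits = RETRY_WAIT_SECONDS * max(len(passes) - 1, 0)
    return pauses + waits


if __name__ == "__main__":
    costs = {"a.vhdx": 6, "b.vhdx": 6, "c.vhdx": 6}
    result = run_spotfix_passes(costs.keys(), costs)
    for files, pause in result:
        print(f"paused {pause:.0f}s to fix {files}")
    print(f"total elapsed: {total_wall_clock(result):.0f}s")
```

The point of the model: the CSV is never paused for more than 15 seconds at a time, so a long list of $corrupt records costs elapsed time rather than application downtime.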

Distributed Network Name

- A CAP (client access point) is created for an SOFS. It’s a DNS name for the SOFS on the network.

- Security: creates and manages AD computer object for the SOFS. Registers credentials with LSA on each node

The actual cluster nodes are used in SOFS for client access. All of them are registered with the CAP.

DNN & DNS:

- DNN registers the node IP for all nodes. A virtual IP is not used for the SOFS (as it was previously)

- DNN updates DNS when: the resource comes online and every 24 hours; a node is added to or removed from the cluster; a cluster network is enabled/disabled as a client network; the IP address of a node changes. Use Dynamic DNS … a lot of manual work if you do static DNS.

- DNS will round robin DNS lookups: The response is a list of sorted addresses for the SOFS CAP with IPv6 first and IPv4 done second. Each iteration rotates the addresses within the IPv6 and IPv4 blocks, but IPv6 is always before IPv4. Crude load balancing.

- When a client does a lookup, it gets the list of addresses. The client will try each address in turn until one responds.

- A client will connect to just one cluster node per SOFS. Can connect to multiple cluster nodes if there are multiple SOFS roles on the cluster.
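The round robin and "try each address in turn" behaviour can be sketched in a few lines of Python. This is a toy model of the notes above, not how the DNS server or SMB client is actually implemented; the function names and the addresses are made up for illustration.

```python
import ipaddress


def round_robin_response(addresses, iteration):
    """Return the address list IPv6 first, with each block rotated by `iteration`."""
    v6 = [a for a in addresses if ipaddress.ip_address(a).version == 6]
    v4 = [a for a in addresses if ipaddress.ip_address(a).version == 4]

    def rotate(block):
        if not block:
            return []
        k = iteration % len(block)
        return block[k:] + block[:k]

    # Rotation happens inside each block; IPv6 always precedes IPv4.
    return rotate(v6) + rotate(v4)


def connect(response, is_reachable):
    """Try each address in order and return the first that responds, else None."""
    for addr in response:
        if is_reachable(addr):
            return addr
    return None


if __name__ == "__main__":
    # Hypothetical node addresses registered for the SOFS CAP.
    registered = ["10.0.0.1", "10.0.0.2", "fd00::1", "fd00::2"]
    for i in range(3):
        print(i, round_robin_response(registered, i))
    # A client that can only reach IPv4 falls through to the first IPv4 entry.
    print(connect(round_robin_response(registered, 1), lambda a: "." in a))
```

It shows why the load balancing is crude: which node a client lands on depends only on where the rotation happens to be at lookup time, not on how busy each node is.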

SOFS

Responsible for:

- Online shares on each node

- Listen to share creations, deletions and changes

- Replicate changes to other nodes

- Ensure consistency across all nodes for the SOFS

It can take the cluster a couple of seconds to converge changes across the cluster.

SOFS implemented using cluster clone resources:

- All nodes run an SOFS clone

- The clones are started and stopped by the SOFS leader – why am I picturing Homer Simpson in a hammock while Homer Simpson mows the lawn?!?!?

- The SOFS leader runs on the node where the SOFS resource is actually online – this is just the orchestrator. All nodes run independently – a move or crash of the leader doesn’t affect the shares’ availability.

Admin can constrain what nodes the SOFS role is on – possible owners for the DNN and SOFS resource. Maybe you want to reserve other nodes for other roles – e.g. asymmetric Hyper-V cluster.

Client Redirection

SMB clients are distributed at connect time by DNS round robin. No dynamic redistribution.

SMB clients can be redirected manually to use a different cluster node:

Cluster Network Planning

- Client Access: clients use the cluster nodes’ public networks that are enabled for client access

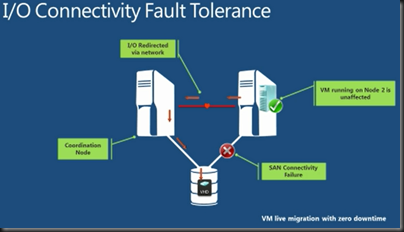

CSV traffic IO Redirection:

- Metadata updates – infrequent

- CSV is built using mirrored storage spaces

- A host loses direct storage connectivity

Redirected IO:

- Prefers cluster networks not enabled for client access

- Leverages SMB Multichannel and SMB Direct

- iSCSI Networks should automatically be disabled for cluster use – ensure this is so to reduce latency.

Performance and Scalability

SMB Transparent Failover

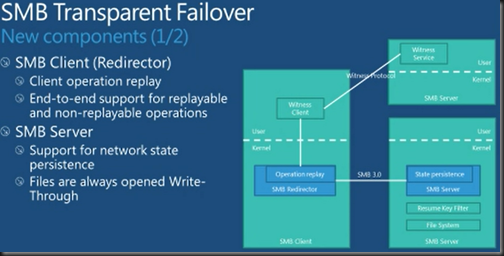

Zero downtime with small IO delay. Supports planned and unplanned failovers. Resilient for both file and directory operations. Requires WS2012 on client and server with SMB 3.0.

Client operation replay – If a failover occurs, the SMB client reissues the operations that were in flight. This is only done with certain operations. Others, like a delete, are not replayed because they are not safe. The server maintains persistence of file handles. All write-throughs happen straight away – doesn’t affect Hyper-V.
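The replay rule is easy to picture as a filter over the operations that were in flight at failover. Below is a minimal Python sketch under my own assumptions: the `replay_safe` flag is hypothetical, and the only classification taken from the session is that a delete is not safe to replay. Marking a read as safe is my illustration, not a statement about the protocol.

```python
from dataclasses import dataclass


@dataclass
class Operation:
    name: str
    replay_safe: bool  # hypothetical flag; the session only named delete as unsafe


def split_for_replay(in_flight):
    """Return (reissued, not_replayed) operation names after a failover."""
    reissued = [op.name for op in in_flight if op.replay_safe]
    not_replayed = [op.name for op in in_flight if not op.replay_safe]
    return reissued, not_replayed


if __name__ == "__main__":
    pending = [
        Operation("read block 42", replay_safe=True),   # illustrative assumption
        Operation("delete old.log", replay_safe=False), # unsafe per the session
    ]
    reissued, not_replayed = split_for_replay(pending)
    print("reissued:", reissued)
    print("not replayed:", not_replayed)
```

The intuition for why a delete is unsafe: if the first attempt succeeded just before the node failed, replaying it could act on a different file that has since taken the same name.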

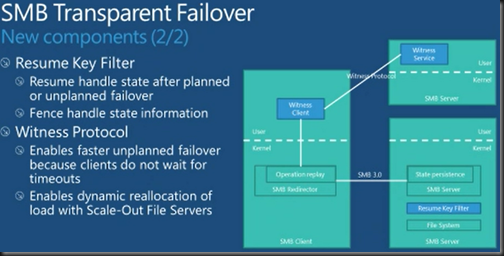

The Resume Key Filter fences off file handle state after failover to prevent other clients grabbing files when the original clients expect to have access when they are failed over by the witness process. Protects against namespace inconsistency – file rename in flight. Basically deals with handles for activity that might be lost/replayed during failover.

Interesting: when a CSV comes online initially or after failover, the Resume Key Filter locks the volume for a few seconds (less than 3 seconds) for a database (state info stored in the system volume folder) to be loaded from a store. Namespace protection then blocks all rename and create operations for up to 60 seconds to allow for local file handles to be established. Create is blocked for up to 60 seconds as well to allow remote handles to be resumed. After all this (up to a total of 60 seconds) all unclaimed handles are released. Typically, the entire process is around 3-4 seconds. The 60 seconds is a per volume configurable timeout.

Witness Protocol (do not confuse with Failover Cluster File Share Witness):

- Faster client failover. Normal SMB time out could be 40-45 seconds (TCP-based). That’s a long timeout without IO. The cluster informs the client to redirect when the cluster detects a failure.

- Witness does redirection at client end. For example – dynamic reallocation of load with SOFS.

Client SMB Witness Registration

- Client SMB connects to share on Node A

- Witness on client obtains list of cluster members from Witness on Node A

- Witness client removes Node A as the witness and selects Node B as the witness

- Witness registers with Node B for notification of events for the share that it connected to

- The Node B Witness registers with the cluster for event notifications for the share

Notification:

- Normal operation … client connects to Node A

- Unplanned failure on Node A

- Cluster informs Witness on Node B (thanks to registration) that there is a problem with the share

- The Witness on Node B notifies the client Witness that Node A went offline (no SMB timeout)

- Witness on client informs SMB client to redirect

- SMB on client drops the connection to Node A and starts connecting to another node in the SOFS, e.g. Node B

- Witness starts all over again to select a new Witness in the SOFS. Will keep trying every minute to get one in case Node A was the only possibility
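The registration and notification steps above can be modelled as a small state machine on the client. This Python sketch is my own simplification: the class and method names are invented, picking "the first other node" as the witness is an assumption, and handling a failure of the witness node itself is something I added that the session did not cover.

```python
class WitnessClient:
    """Toy model of the client side Witness: one SMB connection, one witness."""

    def __init__(self, cluster_nodes):
        # Membership list, as obtained from the Witness on the connected node.
        self.cluster_nodes = list(cluster_nodes)
        self.connected = None
        self.witness = None

    def connect(self, node):
        """SMB connects to `node`, then a witness is chosen from the other nodes."""
        self.connected = node
        self.witness = self.pick_witness()

    def pick_witness(self):
        """Assumption: take the first known node that is not serving SMB."""
        for node in self.cluster_nodes:
            if node != self.connected:
                return node
        return None  # no candidate; the real client retries every minute

    def on_node_failed(self, failed):
        """Notification that `failed` went offline."""
        if failed in self.cluster_nodes:
            self.cluster_nodes.remove(failed)
        if failed == self.connected:
            if not self.cluster_nodes:
                self.connected = None
                self.witness = None
                return
            # Drop the old connection, redirect, and start witness selection again.
            self.connect(self.cluster_nodes[0])
        elif failed == self.witness:
            # Not covered in the session: re-pick if the witness node itself dies.
            self.witness = self.pick_witness()


if __name__ == "__main__":
    client = WitnessClient(["NodeA", "NodeB", "NodeC"])
    client.connect("NodeA")
    print(client.connected, client.witness)
    client.on_node_failed("NodeA")
    print(client.connected, client.witness)
```

The key design point it illustrates is that the witness is always a different node from the one serving the SMB connection, so the node that fails is never the one that has to report the failure.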

Event Logs

All under Applications and Services Logs – Microsoft – Windows:

- SMBClient

- SMBServer

- ResumeKeyFilter

- SMBWitnessClient

- SMBWitnessService