It’s taken me nearly all day to fast-read through this lot. Here’s a dump of info from Build, Ignite, and since Ignite. Have a nice weekend!

Hyper-V

- What’s New in Windows Server 2016 Hyper-V: A post I wrote for Petri.com

- Linux Integration Services 4.0 Announcement: As part of this release Microsoft has expanded the access to Hyper-V features and performance to the latest Red Hat Enterprise Linux, CentOS, and Oracle Linux versions.

- Linux Integration Services Version 4.0 for Hyper-V: The new version of LIS for supported Linux distros.

- Windows Server 2016 Failover Cluster Troubleshooting Enhancements – Active Dump: This enhancement has significant advantages when you are troubleshooting and getting memory.dmp files from servers running Hyper-V.

- What’s New in Windows Server Hyper-V: The official statement from Microsoft.

- PowerShell Direct – Running PowerShell inside a virtual machine from the Hyper-V host: I wish I had this feature for my WS2012 R2 demos at Ignite.

Windows Server

- How to Install Windows Server 2016 Nano in a VM: How to install Nano, the refactored minimal install option for Windows Server designed for cloud apps and micro services.

- How Many CSVs Should a Scale-Out File Server Have? A post I wrote for Petri.com

- Local Administrator Password Solution (LAPS): The “Local Administrator Password Solution” (LAPS) provides management of local account passwords of domain-joined computers. Passwords are stored in Active Directory (AD) and protected by ACL, so only eligible users can read them or request a reset.

- Security Thoughts: Microsoft Local Administrator Password Solution (LAPS, KB3062591): A step by step guide.

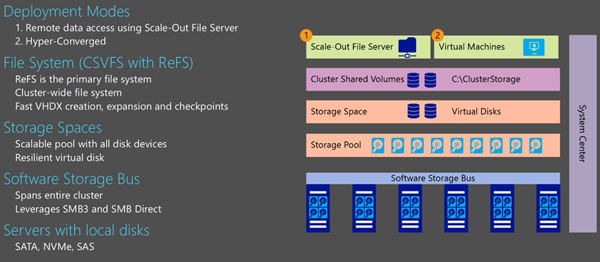

- Storage Spaces Direct: Get the story straight from the PM of this new storage architecture, S2D.

- Next-generation storage for the software-defined datacenter: Hear from the boss of the PM of S2D.

- Windows Server 2016 Failover Cluster Troubleshooting Enhancements – Cluster Log: This log is valuable for Microsoft’s support as well as those out there who have expertise at troubleshooting failover clusters.

- What’s new in SMB 3.1.1 in the Windows Server 2016 Technical Preview 2: In this blog post, you’ll see what changed with the new version of SMB that comes with the Windows 10 Insider Preview released in late April 2015 and the Windows Server 2016 Technical Preview 2 released in early May 2015.

- How to display ipconfig on Nano Server every time it boots: At least you’d have some indication that the thing has powered up!

- Data Deduplication in Windows Server Technical Preview 2: See what’s currently in the works for WS2016.

- What’s new in Windows Server 2016 Technical Preview 2: A subset of features.

Windows Client

- Windows 10 and Azure Active Directory – Embracing the Cloud: Since Windows 10’s capabilities to leverage AAD are now starting to appear in the Windows 10 preview builds, this is a great time to explore them in more detail.

- Azure AD on Windows 10 Personal Devices: How to use Windows 10 with both a personal and a work account at the same time.

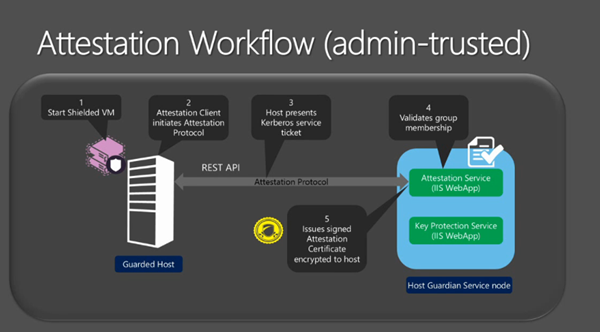

- Windows 10 Security: Microsoft Passport and Virtual Secure Mode – the latter is based on Hyper-V Shielded VM technology.

System Center

- Announcing the availability of System Center 2012 R2 Configuration Manager SP1 and System Center 2012 Configuration Manager SP2: Both are now generally available and can be downloaded from the Microsoft Evaluation Center. These service packs deliver full compatibility with existing features for Windows 10 deployment, upgrade, and management. Also included in these service packs are new hybrid features for customers using System Center Configuration Manager integrated with Microsoft Intune to manage devices.

- Microsoft System Center Operations Manager Management Pack for Windows Server Storage Spaces 2012 R2: Finally!

- Windows Azure Pack Websites V2 Update Rollup 6: This release introduces a new MMC application that can be used for install, upgrade and configuration.

- Announcing the Microsoft Azure Stack: The new cloud solution from Microsoft, replacing Windows Azure Pack.

- Azure Stack – What’s new and what’s changed: A nice description of Azure Stack.

- Update Rollup 6 for System Center 2012 R2 Virtual Machine Manager is now available: And allegedly has bugs – I’m stunned!

Azure

- Disaster Recovery to Azure enhanced, and we’re listening: Support for Generation 2 VMs, vSphere, physical servers, and more changes coming.

- ASR Now Supports NetApp Private Storage for Microsoft Azure: Replicate an on-premises NetApp SAN to a NetApp Private Storage appliance in Azure, and orchestrate it using Azure Site Recovery.

- New features and innovation: Azure Resource Manager allows deployment of IaaS from JSON templates.

- Microsoft Azure Marketplace – new features and enhancements: Making the store bigger and more interactive.

- Introducing App Service Environment: A new scalable and secure environment for Web Apps, spanning the worlds of IaaS and PaaS.

- IaaS Just Got Easier. Again: I don’t know about “easier” but JSON templates should be quicker – once you learn this language and syntax.

- Build 2015 Azure Storage Announcements! Premium Storage is GA. Azure Files has support.

- Azure shines bright at Ignite! A host of announcements from Ignite, including Azure DNS.

- Application-Aware Availability Solutions with Azure Site Recovery: Azure Site Recovery solutions have been tested and are now supported for SharePoint, Dynamics AX, Exchange 2013 (single server – AF), Remote Desktop Services, SQL Server (non-replica/cluster members – AF), IIS applications, and System Center family members such as Operations Manager.

- Azure Automation – New Graphical and Textual Authoring Features: Revealing Automation through the Ibiza Preview Portal.

- Azure Site Recovery at Ignite 2015: The breakout sessions that featured ASR.

- Avoid Running Out of Azure Open Credits: A post by me on Petri.com

- 5 Things That Would Improve Microsoft Azure: A post by me on Petri.com

- System Center Orchestrator Migration Toolkit: A collection of tools for migrating integration packs, standard activities, and runbooks from System Center 2012 – Orchestrator to Azure Automation and Service Management Automation.

- Azure Cloud App Discovery GA and our new Privileged Identity Management service: How Azure AD can help you and your organization detect and manage risk related to access to cloud resources.

- RDP to a Linux Server in Azure: Very handy guide on how to get onto the GUI of a Linux VM.

- April updates to Azure RemoteApp: Plenty of updates here, some of which made the service much easier to use.
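
For a feel of what the Azure Resource Manager JSON templates mentioned in the list above look like, here is a minimal sketch in Python that builds a template as a dictionary and prints it as JSON. The top-level keys (`$schema`, `contentVersion`, `parameters`, `resources`) follow the general ARM template layout; the storage account resource, its `apiVersion`, the parameter name `storageAccountName`, and the helper `build_template` are illustrative assumptions and are not taken from any of the linked posts.

```python
import json


def build_template(account_type="Standard_LRS"):
    """Return a minimal ARM-style deployment template as a Python dict.

    The resource type, apiVersion, and parameter name are assumptions
    chosen for illustration only.
    """
    return {
        "$schema": "https://schema.management.azure.com/schemas/2015-01-01/deploymentTemplate.json#",
        "contentVersion": "1.0.0.0",
        # Values supplied at deployment time.
        "parameters": {
            "storageAccountName": {"type": "string"}
        },
        # The things to deploy; here a single (hypothetical) storage account.
        "resources": [
            {
                "type": "Microsoft.Storage/storageAccounts",
                "apiVersion": "2015-06-15",
                # Bracketed strings are template expressions evaluated by ARM.
                "name": "[parameters('storageAccountName')]",
                "location": "[resourceGroup().location]",
                "properties": {"accountType": account_type},
            }
        ],
    }


if __name__ == "__main__":
    # Print the template as the JSON document you would hand to a deployment.
    print(json.dumps(build_template(), indent=2))
```

The point of the declarative format is that the same JSON file can be deployed repeatedly with different parameter values, rather than scripting each resource by hand.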

Office 365

- Modern productivity–Office news at Ignite: What the Office group had to launch at the conference.

- Manage change and stay informed in Office 365: Microsoft is adding a new “Select people” option to First Release.

Intune

- Announcing support for Windows 10 management with Microsoft Intune: Microsoft announced that Intune now supports the management of Windows 10. All existing Intune features for managing Windows 8.1 and Windows Phone 8.1 will work for Windows 10.

- Announcing the Mobile Device Management Design Considerations Guide: If you’re an IT Architect or IT Professional and you need to design a mobile device management (MDM) solution for your organization, there are many questions that you have to answer prior to recommending the best solution for the problem that you are trying to solve. Microsoft has many new options available to manage mobile devices that can match your business and technical requirements.

- Mobile Application Distribution Capabilities in Microsoft Intune: Microsoft Intune allows you to upload and deploy mobile applications to iOS, Android, Windows, and Windows Phone devices. In this post, Microsoft will show you how to publish iOS apps, select the users who can download them, and also show you how people in your organization can download these apps on their iOS devices.

- Microsoft Intune App Wrapping Tool for Android: Use the Microsoft Intune App Wrapping Tool for Android to modify the behavior of your existing line-of-business (LOB) Android apps. You will then be able to manage certain app features using Intune without requiring code changes to the original application.

Licensing

- Disaster Recovery Rights and License Mobility: Fail-over server rights do not apply in the case of software moved to shared third-party servers under License Mobility through Software Assurance.

Miscellaneous

- Microsoft Ignite 2015 Keynote Highlights for IT Pros: A post I wrote for Petri.com

- Getting Started with Microsoft Operations Management Suite: I don’t know if this falls under Azure or not!

- Populating machines in Microsoft Operations Management Suite: The next step after getting started.

- Protecting your datacenter and cloud from emerging threats: This level 100 post covers a number of areas.