I am live blogging this session so please hit refresh to get the latest notes.

Pre-show, everything is running nice and smoothly. I got in at 7am and check-in was running nicely (lots of desks) but I was even luckier by being able to register at the media desk. One breakfast later and we were let into the keynote hall after just a few minutes’ wait, and I went into the press reserved area up to the left of the front. We had lots of handlers there … handy when my ultrabook refused to see the TechEd network and I had to find other means to connect.

Rock music was playing, and then out came a classic New Orleans brass band to liven things up. All we needed was some beer.

Lots of well-known media types start appearing in the press section as the band plays “When the Saints Go Marching In” (at least until the 49ers D crushes them).

TechEd 2014 is in Houston next year. Hopefully there is a route that does not include Dallas Fort Worth airport.

Brad Anderson

A pre-video where “the bits have been stolen” and Brad goes all James Bond to get them back, chasing the baddies in an Aston Martin while wearing a tux. The Windows USB key is being unsuccessfully uploaded (BitLocker To Go?). And he recovers his shades. And he drives out onto the stage with the Aston Martin. Best keynote entrance ever.

All new versions of datacenter products:

-Devices

-Services to light up devices and enable users (BYOD)

-Azure and Visual Studio to create great apps

-SQL Server to unlock insights into data

-The cloud platform: what enables the entire stack

Iain McDonald (Windows Core)

Makes the kernel, virtualisation, ID, security, and file system for all the products using Windows Core (Azure, Windows 8, Phone, Xbox One, etc). Windows is our core business, he says. In other words, Windows lets you get your stuff. Windows 8 has been out for 8 months and has sold 100,000,000 copies in that time.

A Windows 8 blurb video, and during that a table full of Windows 8 devices comes out. Confirms that Windows 8.1 will be compatible, out this year, and free. Preview bits out on June 26th. Personalized background on the Start Screen. Some biz features will be shown:

- Start Screen control: We can lock down tile customization. You can specify the apps and the layout. Set up a template machine, then export its layout to an XML file with Export-StartLayout. Set a GPO: Start Screen Layout. Paste a UNC path to the XML file. After a GPO refresh on the user machine, the Start Screen is locked down. Windows 8.1 Industry line (embedded) does a lot of lock down and customization stuff for hard appliances.

- Miracast: a wireless display technology. He pairs a machine with a wireless streaming device. Now he presents from a tablet. I want this now. I need this now. Much better than VGA over Wifi – which just flat out doesn’t work with animated systems like Windows 8 Start Screen.

- Wifi Printer with NFC. Tap the tablet and it pairs with the printer, and adds the device/printer. The demo gods are unkind.

Eventually he goes into Mail and can open an attachment side-by-side (50/50 split). And he sends the attachment to a printer. This is why wifi in big demo rooms does not work: the air is flooded – the print doesn’t appear as expected.

- Surface Pro is up next. Can build VPN into apps in 8.1. Can work with virtual smart card for multi-factor authentication.

On the security front:

- Moving from a defensive posture to an offensive posture in the security space.

- 8” Atom powered Acer tablet (see below).

- Toshiba super hi-res Kira ultrabook

Back to Brad

1.2 billion consumer devices sold since last TechEd. 50% of companies told to support them. 20-somethings think BYOD is a right not a privilege. IT budgets are not expanding to support these changes.

Identity: Windows Server AD syncs with and blends with Windows Azure Active Directory (WAAD). Windows Intune connects to on-premise ConfigMgr (System Center). Manage your devices where they live, with a single user ID. Don’t try to manage BYOD or mobile devices using on-premise systems – that just flat-out doesn’t work.

Aston Martin has lots of widely distributed and small branch offices (retail). Windows Intune is perfect to manage this, and they use it for BYOD.

Windows Server and System Center 2012 R2 are announced, as is a new release of Windows Intune (wave E). Get used to the name of Windows Server and System Center. Microsoft has designed for the cloud, and brought it on-premises. Scalability, flexibility, and dependability.

Out comes Molly Brown, Principal Development Lead.

Workplace Join: She is going to show some new solutions in 2012 R2. Users can work on the devices they want while you remain in control. She has a Windows 8.1 tablet and logs into a SharePoint site. Her access is denied. She can “join her workplace”. This is like joining a domain. Policy is applied to her identity rather than to the device. Think of this as a modern domain join – Anderson. She joins the workplace in Settings > Network > Workplace. She enters her corporate email address and password, and then she has to prove herself, via multifactor authentication, e.g. a phone call. All she has to do is press the # key when prompted. Now she can view the SharePoint site.

To get IT apps, she can enrol her device for management via Workplace (into Intune). Now she can (if the demo works – wifi) access IT published apps through Intune.

Work Folders: A new feature of WS2012 R2. Users have access to all their files across all their devices. Files replicated to file servers in the datacenter and out to all devices owned by the user. Relies on the device being enrolled.

You can easily leave the workplace and turn off management with 2 taps. All your personal stuff is left untouched. BYOD is made much easier.

Remote wipe is selective, only removing corporate assets from personal devices.

App and device management is Intune. You brand your service to the business, and manage cross-platform devices including Apple and Android (I found iOS device management to actually be easier than Windows!).

So you empower end users, unify the environment, and secure the business.

Back to Brad

Apps. Devs want rapid lifecycles and flexibility. Need support for cross-platform deployment. And data, any size. And make it secure while being highly available.

On to the public cloud and Azure sales pitch. A dude from Easyjet comes out. I hope everyone has paid to use the priority lane to exit the hall. He talks about cloud scalability.

Scott Guthrie

Corp VP for Windows Azure. Cloud great for dev/test because of agility without waiting on someone to do something for you. Same hypervisor on premise in Hyper-V as in Azure, so you can choose where your app is deployed (hybrid cloud).

No charge for stopped VMs in Windows Azure from now on. You can stop it and start it, knowing that you’ve saved money by shutting it down. Now there is pro-rated per-minute billing. Great for elastic workload. You can use MSDN licenses on Azure for no charge. Or you can deploy pre-created images in the portal. A new rate for MSDN subscribers to run any number of VMs in Azure at up to 97% discount. MSDN subscribers get monthly credits ($50 pro, $100 premium, $150 ultimate), and you can use these VMs for free for dev/test purposes. The portal has been updated today to see what your remaining credit balance is. I might finally fire up an Azure VM.
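To put a rough number on why per-minute billing matters for stop/start dev/test VMs, here is a minimal sketch. The hourly rate is made up (it is not Azure pricing), and I am assuming the older model billed every started hour in full.

```python
import math

def cost_hourly_rounding(minutes_running: int, hourly_rate: float) -> float:
    """Bill every started hour in full (assumed older, coarser model)."""
    return math.ceil(minutes_running / 60) * hourly_rate

def cost_per_minute(minutes_running: int, hourly_rate: float) -> float:
    """Bill only the minutes the VM was actually running."""
    return minutes_running * hourly_rate / 60

# Hypothetical figures for illustration only, not real Azure rates.
HOURLY_RATE = 0.12
minutes = 95  # a dev/test VM used for 1h35m, then stopped

print(f"hourly rounding: ${cost_hourly_rounding(minutes, HOURLY_RATE):.2f}")
print(f"per minute:      ${cost_per_minute(minutes, HOURLY_RATE):.2f}")
```

A stopped VM accrues zero running minutes, so under the per-minute model it costs nothing for compute until you start it again.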

http://aka.ms/azurecontest .. MSDN competition for subscribers that deploy an Azure app. Could win an Aston Martin.

Brian Harry

Technical Fellow – Application lifecycle management

Next version of Visual Studio and TFS 2013 later this year. Preview on June 26th in line with Build. How to help devs get from idea, to implementation, into customer hands, to feedback, and all over again. New cloud-based load test service. Create the test in VS/TFS, upload it to the cloud, and it runs from there.

SQL Server 2014 is announced. Hybrid scenarios for Azure. Lots of memory work – transaction processing in RAM. Edgenet is an early adopter. They need reliable stock tracking, without human verification. This feature has moved them away from once/day stock inventory batch jobs to realtime.

PixelSense monster touch TV comes out. And they start doing touch-driven analytics on the attendees. A cool 3D map of the globe allows them to visualize attendees based on regions.

Back to Brad

Windows Server 2012 R2 and System Center 2012 R2 out at the end of the year, and the previews out in June. These are based on the learnings from Azure for you to use on-premise or to build your own public cloud. Same Hyper-V as in Azure. This gives us consistency across clouds – ID, data, services across all clouds with no conversion.

Windows Azure Pack for Windows Server. This layers on top of Windows Server and System Center. This is the new name for Katal by the looks of it. Same portal as Azure. Get density and Service Bus on top of WSSC 2012 R2. Users deploy services on the cloud of choice.

Clare Henry, Director of Product Management, comes out. You get a stack to build your clouds. Demo: we see the Katal portal, renamed to Windows Azure Pack. Creates a VM from a gallery as a self-service user. Can deploy different versions of a VM template. All the usual number/scalability and network configuration options.

The self-service empowers the end user, builds on top of WSSC for automation, and allows the admin hands-off total control.

On to the fabric and the infrastructure. Here’s the cool stuff.

Jeff Woolsey

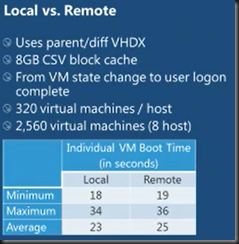

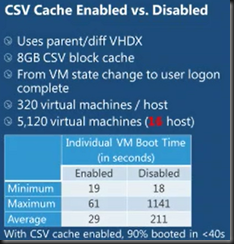

WSSC 2012 R2 is about agility. Storage Spaces. Automated storage tiering is coming to Storage Spaces using SSD and HDD. Bye bye EMC. That gave 16x performance improvement from 7K to 124K IOPS.

Deduplication. Enabling Dedup will actually improve the performance of VDI. We now have a special VDI mode for Hyper-V VDI. It is NOT FOR SERVER VMs. Dedup will actually 2x the performance of those VDI VMs.
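Why VDI is such a good fit for dedup: every desktop VM carries nearly the same OS blocks. Here is a toy sketch of content-hash dedup to show the idea. It uses fixed-size chunks and made-up disk images, which is my simplification and not how the Windows Server feature is implemented, and it only shows the space side, not the performance claim.

```python
import hashlib

CHUNK_SIZE = 4096  # assumption: fixed-size chunks keep the sketch short

def dedup_stats(images: list[bytes], chunk_size: int = CHUNK_SIZE) -> tuple[int, int]:
    """Return (logical_chunks, unique_chunks) across all disk images."""
    seen: set[bytes] = set()
    logical = 0
    for image in images:
        for offset in range(0, len(image), chunk_size):
            chunk = image[offset:offset + chunk_size]
            # Identical chunks hash to the same digest and are stored once.
            seen.add(hashlib.sha256(chunk).digest())
            logical += 1
    return logical, len(seen)

def make_desktop(user_id: int) -> bytes:
    """Hypothetical VDI disk: 8 shared OS chunks plus 1 per-user chunk."""
    os_part = b"".join(bytes([i]) * CHUNK_SIZE for i in range(8))
    user_part = user_id.to_bytes(4, "big") * (CHUNK_SIZE // 4)
    return os_part + user_part

desktops = [make_desktop(u) for u in range(1, 51)]  # 50 pretend desktops
logical, unique = dedup_stats(desktops)
print(f"{logical} chunks stored logically, {unique} unique after dedup")
```

With 50 near-identical desktops the shared OS chunks are kept once, and only the per-user chunks add to the total.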

Live Migration just got unreal. WS2012 R2 Live Migration can use resources of the host to do compression (for 10 GbE or less). It’ll use some resources if available … it won’t compress if there’s resource contention – to prioritise VMs.

Now LM can use SMB Direct over RDMA. And SMB Multichannel. You get even faster LMs over 10 GbE or faster networks using RDMA.
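My reading of the two Live Migration options, written down as a rule of thumb. This is not how Hyper-V chooses anything (the option is something you configure); the function name, the 0.8 CPU ceiling, and the idea of falling back to plain TCP are my own assumptions for illustration.

```python
from enum import Enum

class Transport(Enum):
    TCP = "plain TCP/IP"
    COMPRESSION = "compression"
    SMB_DIRECT = "SMB Direct (RDMA) with SMB Multichannel"

def pick_transport(link_gbps: float, has_rdma: bool, host_cpu_busy: float,
                   cpu_ceiling: float = 0.8) -> Transport:
    """Hypothetical rule of thumb based on the keynote notes.

    link_gbps      speed of the live migration network
    has_rdma       True if the NICs support RDMA
    host_cpu_busy  fraction of host CPU already in use (0.0 to 1.0)
    cpu_ceiling    made-up threshold above which VMs get priority
    """
    # RDMA on 10 GbE or faster: let the NICs do the work.
    if has_rdma and link_gbps >= 10:
        return Transport.SMB_DIRECT
    # 10 GbE or less with spare CPU: spend idle cycles on compression.
    if link_gbps <= 10 and host_cpu_busy < cpu_ceiling:
        return Transport.COMPRESSION
    # Contended host or no better option: do not steal CPU from the VMs.
    return Transport.TCP

print(pick_transport(1, has_rdma=False, host_cpu_busy=0.2).value)
print(pick_transport(10, has_rdma=True, host_cpu_busy=0.2).value)
print(pick_transport(10, has_rdma=False, host_cpu_busy=0.95).value)
```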

Hyper-V Replica now supports: Site A – Site B – Site C replication, e.g. replicate to local DR, and from local DR to remote DR.

I wonder how VMware’s Eric Gray will try to tap dance and spin that faster Live Migration isn’t needed. They don’t have anything close to this.

Hyper-V Recovery Manager gives you orchestration via the cloud. DR was never this easy.

Brad is back

Blue led a new development cadence. What they’ve accomplished in 9 months is simply amazing.

We can reduce the cost of infrastructure again, increase flexibility, and be heroes.

Post Event Press Conference

Hybrid cloud was the core design principle from day 1 – Brad Anderson. Organizations should demand consistency – it gives flexibility to move workloads anywhere. It’s not just virtualization – storage, identity, networks, the whole stack.

Scott Guthrie: private cloud will probably continue forever. But don’t make forks in the road that limit your flexibility.

Windows Azure Pack is confirmed as the renamed next generation version of Katal. A new feature is the ability to use Service Bus on Windows Server, with a common management portal for private and public. No preview release date.

Thanks to Didier Van Hoye for this one. Stockholders not too confident in VMware this morning. Is it a coincidence that Microsoft stole their lunch money this morning?

To quote Thomas Maurer: we are entering the post-VMware era.

What is in Windows 8.1 for the enterprise? It is the "next vision of Windows 8". "No compromises to corporate IT".

Making your PC a hotspot is a new feature. BYOD is huge in the 8.1 release, enabled by Windows Intune. The Workplace join and selective resets are great. And the file sync feature controlled by the biz is also a nice one. XP End of Life: what is the guidance… the official line will be “the easiest path to Windows 8.1 is Windows 8”. Actually they are being realistic about Windows 7 deployment being the norm. Mobility and touch scenarios should be future proofed with the right devices. Windows 8 is the natural OS choice for this.

On System Center, it is now WSSC, Windows Server and System Center as a combined solution, designed to work at data center scale. It’s one holistic set of capabilities. Watch for networking and storage being lit up at scale via System Center. The new version of Orchestrator is entirely based on PowerShell.