Welcome to the Wednesday keynote at WPC, the Microsoft partner conference. This keynote usually focuses heavily on business, strategy and competition. It was usually the stage for COO (and head of sales) Kevin Turner, who recently left Microsoft to become the CEO of a finance company. We’ll see how his replacements handle this presentation in the fuzzier, warmer world of the new Satya Nadella Microsoft.

Gavriella Schuster

The corporate vice president of the Worldwide Partner Group kicks things off by thanking repeat attendees and welcoming first-timers too.

Washington DC will be the venue in 2017. There are a bunch of speakers today at the keynote. Gavriella hands over and will return later.

Brad Smith

The chief lawyer comes on stage.

I like him as a blogger/speaker … very plain spoken, which is unusual for a legal person, especially for someone of his rank, and he strikes me as being honestly passionate.

He starts to talk about the first industrial revolution, which was driven by steam power. We had mass manufacturing and transport that could start to replace the horse. In the late 1800s we had the second revolution. He shows a photo of Broadway, NY. At that time, 25% of all agriculture went to feeding horses … lots of horse-drawn transport. 25 years later, Broadway is filled with trams and cars and no horses. And then we had the PC – the 3rd revolution. We are now at the start of the 4th:

- Advances in physical computing: machines, 3D printing, etc.

- Biology: Genomes, treatment, engineering.

- Digital: IoT, Blockchain, disruptive business models.

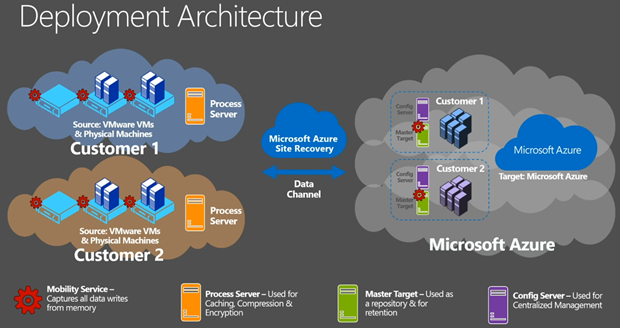

Each revolution was driven by 1 or 2 techs. The 4th revolution has one connection between everything: The Cloud, which explains Microsoft’s investments in the last decade: over 100 data centres in 40 countries, opening the world to new possibilities.

Toni Townes-Whitley

While there’s economic opportunity, we also need to address societal impact. Growth of business doesn’t need to be irresponsible. 7.4 billion people can be positively impacted by digital transformation – just not by Cortana at the moment. There’s a video on how Azure data analytics is used by a school district to counsel kids.

Back to Smith.

What do we need to do?

- Build a cloud that people can trust. People need confidence that rights and protections that they’ve enjoyed will persist. Microsoft will engineer to protect customers from governments, but Microsoft will assist governments with legal searches, e.g. taking 30 minutes to do searches after the Paris attacks. More transparency. Microsoft is suing the US government to allow customers to know that their data is being seized. Protect people globally. The US believes that US law applies everywhere else. They play a video of a testimony where a questioner rips apart a government witness about the FBI/Microsoft/Ireland mailbox case. We need an Internet that is governed by good law. We need to practice what we preach. Cloud vendors need to respect people’s privacy.

- A responsible cloud. The environment – Azure consumes more electricity than the state of Vermont. Soon its consumption could match that of a mid-size European country. This is why Microsoft is going to be transparent about consumption and plans. R&D will be focused on consuming less electricity. They are going to use renewable electricity more – I think that’s where Europe North is sourced (wind).

- An inclusive cloud. It’s one of the defining issues of our time. Humans have been displaced from so many jobs of 100 years ago. What jobs will disappear in the next 10, 20, 50 years? Where will the new jobs come from and where will those people come from? Business needs to lead – and remember that the western population is getting older! Coding and computer science need to start earlier in school. Broader bridges are better than higher walls – diversity is better for everyone. We need to reach every country with public cloud.

Cool videos up. One is about a village in rural Kenya that gets affordable high-speed Internet via UHF whitespace. A young man there works tech support for a US start-up. Next is a school where OneNote is being used for special needs teaching. A kid with dyslexia and dysgraphia started out reading 4 words per minute and calling himself stupid. One year later he reads way better and knows he’s not stupid, he just needed the right help.

Gavriella Schuster

Back to talk to us again. The only constant in life is change … welcome to IT 🙂 It is not only constant, but faster, and self-driven.

Cloud speeds drive the pace of change faster than ever before. Industries have changed faster than ever: Airbnb, Netflix and Uber. Customers change too. More than 1 cloud feature improvement per day last year in MSFT.

The greater cloud model will top $500B by 2020. Cloud is the new normal. IDC says that 80% of business buyers have deployed or fully embraced the cloud. You need to be quick to capture this opportunity: embrace, innovate and be agile … or be left behind.

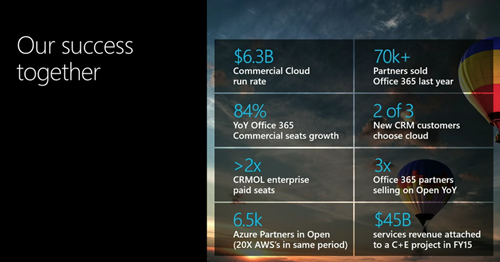

Triple growth on Azure this year. 17,000 partners are transacting CSP. 3 million seats sold. In May alone, CSP sales exceeded those of Open, Advisor, and syndication.

Microsoft asked their most profitable partners what it is that they do to be so profitable.

65% of buyers make their decision before talking to a sales person. I see that in the questions I get asked. Often the wrong question is being asked. Microsoft partners need to be where their customers are, and influence that decision/question earlier in the process.

In the next 2 years, customer cloud maturity will go from 10% to 50%. In the next 3 years, 60% of CIOs expect themselves to be the chief innovation (not just IT) officer. Now is the time to invest in new ways of doing business, not just “sell some cloud”.

Steve Guggenheimer

I guess he’s a fan of New Zealand’s All Blacks. I wonder if we’ll get a Microsoft Haka?

The chief dev evangelist and owner of the MVP program comes out. This will be dev-centric, I’m guessing, so I might tune out.

He announces Microsoft Professional Degree. Some sort of mixture of self- and class-based learning to become a data scientist (huge industry shortage).

There is an “intellectual property” 5 minute break here.

Judson Althoff

Freshly promoted to partly replace Kevin Turner as COO, now the Executive Vice President Worldwide Commercial Business.

He reaffirms the message that Microsoft will continue to be led by partners. CSP is their preferred channel, and CSP is an exclusively partner-sold and -invoiced channel.

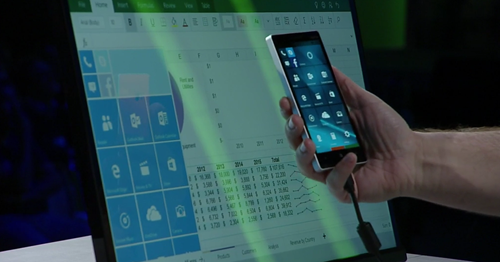

Very interesting video where MSFT partnered with a smart-glasses company to make a vision assistant for visually impaired people, that is paired with a phone, and driven by the cloud. For example, it guides him to take a photo of a menu, and then reads out the items. He can get descriptions of people around him, including facial expressions – “a 40 year old man with a surprised expression”.

6 priorities that MSFT sales force will work with partners on over the next year:

86% of CEOs think digital is their number 1 priority. You have to speak in the vernacular of business outcomes, not tech features.

I like a line from Judson in a video: We can’t do this stuff ourselves. This is a joint opportunity.

You have to rethink your own customer engagement, and not live on the old transactional engagement of the past. Embrace the cloud and move forward. Focus on customer lifetime value, not just a sale (love this line, and it applies to a lot of partners who really misunderstand the capabilities of the cloud).

Now for the fun: competition 🙂 First, Azure.

The number 1 reason that customers are leaving AWS, not considering Google, and coming to Azure is the partner-ability with Microsoft.

Office 365:

True cross-platform capability. Office 2016 was out on the Apple platforms before Windows!

Microsoft is differentiating with security from the device/user to the data center (a unique selling point):

“Data is the new black”. Microsoft does everything from relational data on-prem to unstructured data in the cloud. Data is the ticket to the C-suite (the board).

And that’s all folks!