Microsoft announced that Azure Backup for Azure IaaS virtual machines (VMs) was released to general availability yesterday. Personally, I think this removes a substantial roadblock to deploying VMs in Azure for most businesses (forget the legal stuff for a moment).

No Backup – Really?

I’ve mentioned many times that I once worked in the hosting business. My first job was as a senior engineer with what was then a large Irish-owned company. We ran three services:

- Websites: for a few Euros a month, you could get a plan that allowed 10+ websites. We also offered SQL Server and MySQL databases.

- Physical servers: Starting from a few hundred Euros, you got one or more physical servers

- Virtual machines: I deployed the VMware (yeah, VMware) farm running on HP blades and EVA, and customers got their own VNET with one or more VMs

The official line on websites was that there was no backup of websites or databases. You lose it, you LOST it. In reality we retained 1 daily backup to cover our own butts. Physical servers were not backed up unless a customer paid extra for it, and they got an Ahsay agent and paid for storage used. The same went for VMware VMs – pay for the agent + storage and you could get a simple form of cloud backup.

Backup-less Azure

Until very recently there was no backup of Azure VMs. How could that be? This line says a lot about how Microsoft thinks:

Treat your servers like cattle, not pets

When Azure VMs originally launched in beta, the VMs were stateless, much like containers. If you rebooted the VM it reset itself. You were supposed to write your applications so that they used Azure storage accounts or Azure SQL databases. There was no DC or SQL Server VM in the cloud – that was silly because no one deploys or uses stateful machines anymore. Therefore you shouldn’t care if a VM dies, gets corrupted, or is accidentally removed – you just deploy a new one and carry on.

Except …

Almost no one deploys servers like that.

I can envision some companies, like an eBay or an Amazon, running stateless application or web servers. But in my years of working in large and small/medium businesses, I’ve never seen stateless machines, and I’ve never encountered anyone with a need for that style of application – the web server/database server configuration still dominates AFAIK.

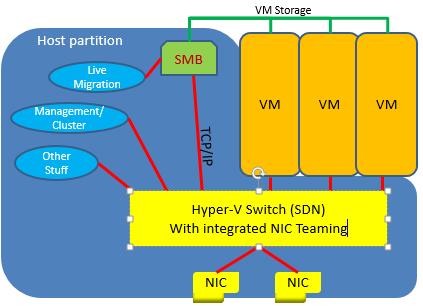

So this is why Azure never had a backup service for VMs. A few years ago, Microsoft changed Azure VMs to be stateful (Hyper-V) virtual machines that we are familiar with and started to push this as a viable alternative to traditional machine deployments. I asked the question: what happens if I accidentally delete a VM – and I got the old answer:

Prepare your CV/résumé.

Mark Minasi quoted me at TechEd North America in one of his cloud Q&As with Mark Russinovich 2 years ago – actually he messed up the question a little and Russinovich gave a non-answer. The point was: how could I possibly deploy a critical VM into Azure if I could not back it up?

Use DPM!

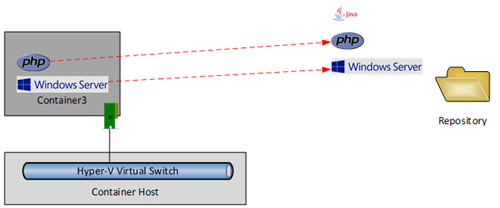

Yeah, Microsoft last year blogged that customers should use System Center Data Protection Manager to protect VMs in Azure. You’d install an agent into the guest OS (you have no access to Azure hosts and there is no backup API) and back up files, folders, and databases to DPM running in another VM. The only problem with this would be the cost:

- You’d need to deploy an Azure VM for DPM.

- You would have to use Page Blobs & Disks instead of Block Blobs, doubling the cost of Azure storage required.

- The cost of System Center SMLs would have been horrific. A Datacenter SML ($3,607 on Open NL) would cover up to 8 Azure virtual machines.
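To put a rough number on the licensing bullet alone, here is a minimal sketch of the arithmetic. It uses only the two figures quoted above ($3,607 per Datacenter SML, up to 8 VMs per SML) and assumes licenses are bought in whole units; the function name `sml_cost_usd` is made up for illustration, and the DPM VM and storage costs are deliberately left out.

```python
import math

# Figures quoted in the post; everything else here is illustrative.
SML_PRICE_USD = 3607   # Datacenter SML on Open NL
VMS_PER_SML = 8        # one SML covers up to 8 Azure VMs


def sml_cost_usd(vm_count: int) -> int:
    """Licensing cost for protecting vm_count VMs with DPM.

    Assumes SMLs can only be bought whole, so the VM count is
    rounded up to the next multiple of VMS_PER_SML.
    """
    if vm_count < 0:
        raise ValueError("vm_count must not be negative")
    licenses_needed = math.ceil(vm_count / VMS_PER_SML)
    return licenses_needed * SML_PRICE_USD


if __name__ == "__main__":
    for vms in (1, 8, 9, 20):
        print(f"{vms} VMs: ${sml_cost_usd(vms):,}")
```

The step function is the painful part: under these assumptions a ninth VM costs as much in licensing as the first eight combined.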

Not to mention that you could not simply restore a VM:

- Create a new VM

- Install applications, e.g. SQL Server

- Install the DPM agent

- Restore files/folders/databases

- Pray to your god and any others you can think of

Azure Backup

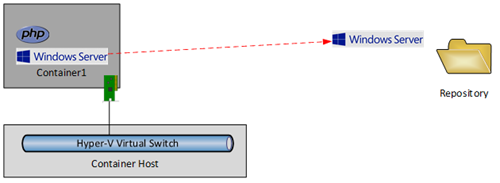

Azure has a backup service called Azure Backup. This was launched as a hybrid cloud service, enabling you to back up machines (PCs, servers) to the cloud using an agent (MARS). You can also install the MARS agent onto an on-premises DPM server to forward all or a subset of your backup data to the cloud for off-site storage. Azure Backup uses Block Blob storage (LRS or GRS) so it’s really affordable.

Earlier this year, Microsoft launched a preview of Azure Backup for Azure IaaS VMs. With this service you can protect Azure VMs (Windows or Linux) using a very simple VM backup mechanism:

- Create a backup policy – when to back up and how long to retain data

- Register VMs – installs an extension to consistently back up running VMs

- Protect VMs – associate registered VMs with a policy

- Monitor backups
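The order of those four steps matters, and a toy model makes the dependency explicit. The sketch below is plain in-memory Python, not the Azure API or the PowerShell cmdlets: `BackupPolicy`, `BackupVault`, and every method and field name are hypothetical, and the "backup" is simulated by appending a job record.

```python
from dataclasses import dataclass, field
from datetime import time


@dataclass(frozen=True)
class BackupPolicy:
    """When to back up and how long to retain data (hypothetical model)."""
    name: str
    backup_time: time
    retention_days: int


@dataclass
class BackupVault:
    """Toy in-memory stand-in for a backup vault; not the Azure API."""
    policies: dict = field(default_factory=dict)
    registered: set = field(default_factory=set)
    protected: dict = field(default_factory=dict)  # VM name -> policy name
    jobs: list = field(default_factory=list)       # (VM name, status) pairs

    def create_policy(self, policy: BackupPolicy) -> None:
        self.policies[policy.name] = policy

    def register(self, vm: str) -> None:
        # In the real service this is where the extension gets installed.
        self.registered.add(vm)

    def protect(self, vm: str, policy_name: str) -> None:
        # Protection only makes sense for registered VMs and known policies.
        if vm not in self.registered:
            raise ValueError(f"{vm} must be registered before it is protected")
        if policy_name not in self.policies:
            raise KeyError(f"unknown policy: {policy_name}")
        self.protected[vm] = policy_name

    def run_scheduled_backups(self) -> None:
        # Simulated: every protected VM gets a completed job record.
        for vm in sorted(self.protected):
            self.jobs.append((vm, "Completed"))

    def monitor(self) -> list:
        return list(self.jobs)


# Walk through the four steps in order with made-up names.
vault = BackupVault()
vault.create_policy(BackupPolicy("daily-2200", time(22, 0), retention_days=30))
vault.register("web01")
vault.protect("web01", "daily-2200")
vault.run_scheduled_backups()
```

Calling `protect` on a VM that was never registered raises an error in this model, which mirrors why registration comes before protection in the list above.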

The preview wasn’t perfect. In the first week or so, registration was hit and miss. Backup of large VMs was quite slow too. But the restore process worked – this blog exists today only because I was able to restore the Azure VM that it runs on from an Azure backup – every other restore method I had for the MySQL database failed.

Generally Available

Microsoft made Azure Backup for IaaS VMs generally available yesterday. This means that you can now, in a supported, simple, and reliable manner, back up your Windows/Linux VMs that are running in Azure, and if you lose one, you can easily restore it from backup.

A number of improvements were included in the GA release:

- A set of PowerShell-based cmdlets has been released – update your Azure PowerShell module!

- You can restore a VM with an Azure VM configuration of your choice to a storage account of your choice.

- The time required to register a VM or back it up has been reduced.

- Azure Backup is in all regions that support Azure VMs.

- There is improved logging for auditing purposes.

- Notification emails can be sent to administrators or an email address of your choosing.

- Errors include troubleshooting information and links to documentation.

- A default policy is included in every backup vault.

- You can create simple or complex retention policies (similar to hybrid cloud backup in the MARS agent) that can keep data for up to 99 years.
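To illustrate what a "complex" retention policy might look like, here is a minimal sketch of tiered retention with a 99-year cap. The daily/weekly/monthly/yearly tiers, the rule that Sunday, first-of-month, and 1 January backups are the ones promoted, and all names (`RetentionPolicy`, `is_retained`) are assumptions made for this example; the service's actual policy options may be defined differently.

```python
from dataclasses import dataclass
from datetime import date

MAX_RETENTION_YEARS = 99  # upper bound quoted in the post


@dataclass(frozen=True)
class RetentionPolicy:
    """Hypothetical tiered retention; a 'simple' policy sets only daily_days."""
    daily_days: int = 30
    weekly_weeks: int = 0    # keep Sunday backups for this many weeks
    monthly_months: int = 0  # keep first-of-month backups for this many months
    yearly_years: int = 0    # keep 1 January backups for this many years

    def __post_init__(self):
        if self.yearly_years > MAX_RETENTION_YEARS:
            raise ValueError("retention is capped at 99 years")


def is_retained(backup_day: date, today: date, policy: RetentionPolicy) -> bool:
    """Return True if a backup taken on backup_day should still exist today.

    Months and years are approximated as 31 and 366 days, so this
    errs on the side of keeping data slightly longer.
    """
    age_days = (today - backup_day).days
    if age_days < 0:
        return False  # a backup from the future cannot exist
    if age_days <= policy.daily_days:
        return True
    if backup_day.weekday() == 6 and age_days <= policy.weekly_weeks * 7:
        return True
    if backup_day.day == 1 and age_days <= policy.monthly_months * 31:
        return True
    if (backup_day.month, backup_day.day) == (1, 1):
        return age_days <= policy.yearly_years * 366
    return False
```

With a policy such as `RetentionPolicy(daily_days=7, weekly_weeks=4, monthly_months=12, yearly_years=10)`, an ordinary weekday backup ages out after a week, while a 1 January backup survives for a decade.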

Summary

With this release, Microsoft has solved my biggest concern with running production workloads in Azure VMs – we can now back up and restore stateful machines that have huge value to the business.