WS2016 TPv3 (Technical Preview 3) includes a new feature called Switch Embedded Teaming (SET) that will allow you to converge RDMA (remote direct memory access) NICs and virtualize RDMA for the host. Yes, you’ll be able to converge SMB Direct networking!

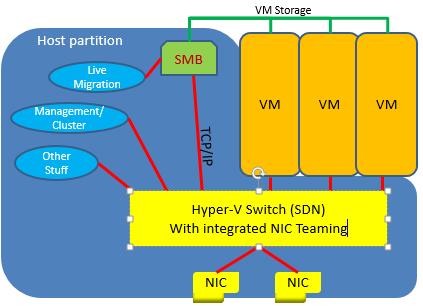

In the diagram below you can see a host with WS2012 R2 networking and a similar host with WS2016 networking. Note how:

- There is no NIC team in the WS2016 host: this is SET in action, providing teaming by aggregating the virtual switch uplinks.

- RDMA is converged: DCB is enabled, as is recommended; it’s even recommended for iWARP, where it is not required.

- Management OS vNICs use RDMA: you can run SMB Direct over converged networks.

Note, according to Microsoft:

In Windows Server 2016 Technical Preview, you can enable RDMA on network adapters that are bound to a Hyper-V Virtual Switch with or without Switch Embedded Teaming (SET).

Right now in TPv3, SET does not support Live Migration, which is confusing considering the diagram above.

What is SET?

SET is an alternative to NIC teaming. It allows you to converge between 1 and 8 physical NICs (pNICs) using the virtual switch. The pNICs can be connected to the same or different physical switches. Obviously, the pNICs must have the same network connectivity to allow link aggregation and failover.

No – SET does not span hosts.

Physical NIC Requirements

SET is much fussier about NICs than NIC teaming is. (NIC teaming continues as a Windows Server networking technology because SET requires a virtual switch, i.e. Hyper-V.) The NICs must be:

- On the HCL, i.e. they must have “passed the Windows Hardware Qualification and Logo (WHQL) test in a SET team in Windows Server 2016 Technical Preview”.

- All NICs in a SET team must be identical: same manufacturer, same model, same firmware and driver.

- There can be between 1 and 8 NICs in a single SET team (same switch on a single host).
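The count and identical-hardware rules above are easy to check before you build a switch. Here is a minimal Python sketch, assuming you have already collected each NIC's manufacturer, model, firmware and driver version by some other means. The `Nic` class and `validate_set_team` function are hypothetical names, and the HCL/WHQL requirement cannot be checked this way.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Nic:
    """Hypothetical inventory record for one physical NIC."""
    name: str
    manufacturer: str
    model: str
    firmware: str
    driver: str


def validate_set_team(nics: list[Nic]) -> list[str]:
    """Return the rule violations found; an empty list means these checks pass."""
    problems = []
    if not 1 <= len(nics) <= 8:
        problems.append(f"SET needs 1 to 8 NICs, got {len(nics)}")
    if len({n.name for n in nics}) != len(nics):
        problems.append("the same NIC is listed more than once")
    # Every member must match on all of these attributes
    for attr in ("manufacturer", "model", "firmware", "driver"):
        values = {getattr(n, attr) for n in nics}
        if len(values) > 1:
            problems.append(f"members differ in {attr}: {sorted(values)}")
    return problems


a = Nic("pNIC1", "VendorA", "Model10G", "1.2", "5.0")
b = Nic("pNIC2", "VendorA", "Model10G", "1.3", "5.0")
print(validate_set_team([a, b]))  # ["members differ in firmware: ['1.2', '1.3']"]
```

A firmware mismatch like this one is exactly the kind of thing that slips in when one server in a batch was patched separately.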

SET Compatibility

SET is compatible with the following networking technologies in Windows Server 2016 Technical Preview.

- Datacenter bridging (DCB)

- Hyper-V Network Virtualization – NVGRE and VXLAN are both supported in Windows Server 2016 Technical Preview.

- Receive-side Checksum offloads (IPv4, IPv6, TCP) – These are supported if any of the SET team members support them.

- Remote Direct Memory Access (RDMA)

- SDN Quality of Service (QoS)

- Transmit-side Checksum offloads (IPv4, IPv6, TCP) – These are supported if all of the SET team members support them.

- Virtual Machine Queues (VMQ)

- Virtual Receive Side Scaling (vRSS)
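The two checksum rules in that list differ in an important way. Receive-side offloads survive if any member supports them, while transmit-side offloads need every member to support them. A small Python sketch of that set logic, assuming each member's capabilities are given as sets of strings such as "IPv4", "IPv6" and "TCP" (a hypothetical representation, not a Windows API):

```python
def effective_checksum_offloads(members: list[dict[str, set[str]]]) -> dict[str, set[str]]:
    """Receive-side: union over members. Transmit-side: intersection over members."""
    if not members:
        return {"rx": set(), "tx": set()}
    rx = set().union(*(m["rx"] for m in members))
    tx = set.intersection(*(m["tx"] for m in members))
    return {"rx": rx, "tx": tx}


team = [
    {"rx": {"IPv4", "TCP"}, "tx": {"IPv4", "TCP"}},
    {"rx": {"IPv6"}, "tx": {"IPv4"}},
]
result = effective_checksum_offloads(team)
print(result)  # rx contains IPv4, IPv6 and TCP; tx contains only IPv4
```

Since SET members must be identical anyway, the two rules should normally give the same answer. The difference only shows up if capabilities diverge, for example if an offload has been turned off on one member.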

SET is not compatible with the following networking technologies in Windows Server 2016 Technical Preview.

- 802.1X authentication

- IPsec Task Offload (IPsecTO)

- QoS in host or native OSs

- Receive side coalescing (RSC)

- Receive side scaling (RSS)

- Single root I/O virtualization (SR-IOV)

- TCP Chimney Offload

- Virtual Machine QoS (VM-QoS)
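To sanity-check a host design against the two lists above, a plain lookup is enough. This Python sketch uses short labels chosen for the sketch (hypothetical, not Windows feature names) and reports which planned features are allowed, blocked, or not on either TPv3 list:

```python
# Short labels chosen for this sketch; they mirror the TPv3 lists above
SET_COMPATIBLE = {"DCB", "HNV", "RX checksum offload", "RDMA", "SDN QoS",
                  "TX checksum offload", "VMQ", "vRSS"}
SET_INCOMPATIBLE = {"802.1X", "IPsecTO", "host QoS", "RSC", "RSS", "SR-IOV",
                    "TCP Chimney", "VM-QoS"}


def classify_features(planned: set[str]) -> dict[str, set[str]]:
    """Split planned features into allowed, blocked, and not on either list."""
    return {
        "allowed": planned & SET_COMPATIBLE,
        "blocked": planned & SET_INCOMPATIBLE,
        "unknown": planned - SET_COMPATIBLE - SET_INCOMPATIBLE,
    }


result = classify_features({"RDMA", "vRSS", "SR-IOV", "Jumbo frames"})
print(sorted(result["blocked"]))  # ['SR-IOV']
print(sorted(result["unknown"]))  # ['Jumbo frames']
```

Anything that lands in "unknown" is a prompt to go and check the official documentation rather than a green light.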

Configuring SET

There is no concept of a team name in SET; there is just the virtual switch, which has uplinks. There is no standby pNIC; all pNICs are active. SET only operates in Switch Independent mode, which is nice and simple: the physical switch is completely unaware of the SET team, so there’s no switch configuration to do (no Googling for me).

All that you require is:

- Member adapters: pick the pNICs on the host. Because SET is switch independent, inbound traffic paths are predictable, which benefits VMQ: a queue can be placed on the pNIC where traffic is expected to arrive.

- Load balancing mode: Hyper-V Port or Dynamic. In Dynamic mode, outbound traffic is hashed and balanced across the uplinks, while inbound traffic is distributed the same way as in Hyper-V Port mode.

As with WS2012 R2, I expect Dynamic will normally be the recommended option.
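A rough Python sketch of how the two modes might pick an uplink for outbound traffic, assuming a simple modulo over the list of active uplinks. The hash (`zlib.crc32` over the flow's 5-tuple) is a stand-in chosen for illustration; Microsoft's actual Dynamic algorithm is not reproduced here. Because every member is active, failover amounts to recomputing the choice over the remaining uplinks.

```python
import zlib


def hyperv_port_uplink(vport_id: int, uplinks: list[str]) -> str:
    """Hyper-V Port (sketch): all outbound traffic from one switch port uses one uplink."""
    if not uplinks:
        raise ValueError("no active uplinks")
    return uplinks[vport_id % len(uplinks)]


def dynamic_uplink(flow: tuple[str, str, int, int, str], uplinks: list[str]) -> str:
    """Dynamic (sketch): hash each outbound flow's 5-tuple to spread load across uplinks."""
    if not uplinks:
        raise ValueError("no active uplinks")
    key = "|".join(map(str, flow)).encode()
    return uplinks[zlib.crc32(key) % len(uplinks)]


active = ["pNIC1", "pNIC2"]
flow = ("10.0.0.5", "10.0.0.9", 49152, 445, "TCP")
print(hyperv_port_uplink(3, active))     # pNIC2
print(dynamic_uplink(flow, active))      # same flow always maps to the same uplink
print(hyperv_port_uplink(3, ["pNIC1"]))  # pNIC2 failed: port 3 moves to pNIC1
```

The trade-off shows up clearly here: Hyper-V Port pins a busy VM to one pNIC, while Dynamic lets different flows from the same VM use different uplinks.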

VMQ

SET was designed to work well with VMQ. We’ll see how well NIC drivers and firmware behave with SET. As we’ve seen in the past, some manufacturers take up to a year (Emulex on blade servers) to fix issues. Test, test, test, and disable VMQ if you see Hyper-V network outages with SET deployed.

In terms of tuning, Microsoft says:

- Ideally each NIC should have the *RssBaseProcNumber set to an even number greater than or equal to two (2). This is because the first physical processor, Core 0 (logical processors 0 and 1), typically does most of the system processing so the network processing should be steered away from this physical processor. (Some machine architectures don’t have two logical processors per physical processor so for such machines the base processor should be greater than or equal to 1. If in doubt assume your host is using a 2 logical processor per physical processor architecture.)

- The team members’ processors should be, to the extent practical, non-overlapping. For example, in a 4-core host (8 logical processors) with a team of 2 10Gbps NICs, you could set the first one to use base processor of 2 and to use 4 cores; the second would be set to use base processor 6 and use 2 cores.
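The tuning guidance boils down to arithmetic: keep core 0 free, start each NIC on an even logical processor (or on 1 or higher without hyperthreading), and don't let NICs share cores. Here is one way to sketch that in Python, assuming a strict split of the remaining cores with no sharing. `plan_vmq_processors` is a hypothetical helper, not a Windows tool. On the 8-logical-processor host in the example it returns base 2 with 2 cores and base 6 with 1 core, which is tighter than the allocation Microsoft quotes, so treat the output as a starting point.

```python
def plan_vmq_processors(logical_processors: int, nic_count: int,
                        hyperthreading: bool = True) -> list[tuple[int, int]]:
    """Return (base logical processor, core count) per NIC, with no shared cores."""
    lps_per_core = 2 if hyperthreading else 1
    total_cores = logical_processors // lps_per_core
    usable_cores = total_cores - 1  # core 0 is left for system processing
    if nic_count < 1 or usable_cores < nic_count:
        raise ValueError("need at least one free core per NIC after reserving core 0")
    plan = []
    next_core = 1
    for i in range(nic_count):
        # Spread leftover cores over the first NICs so counts differ by at most one
        cores = usable_cores // nic_count + (1 if i < usable_cores % nic_count else 0)
        plan.append((next_core * lps_per_core, cores))
        next_core += cores
    return plan


print(plan_vmq_processors(8, 2))                        # [(2, 2), (6, 1)]
print(plan_vmq_processors(8, 2, hyperthreading=False))  # [(1, 4), (5, 3)]
```

The resulting numbers could then be applied per NIC with `Set-NetAdapterVmq` (`-BaseProcessorNumber`, `-MaxProcessors`); check how your NIC driver counts processors on hyperthreaded hosts before relying on the core counts.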

Creation and Management

You’ll hear all the usual guff about System Center and VMM. The 8% that can afford System Center can do that, if they can figure out the UI. PowerShell can be used to easily create and manage a SET virtual switch.
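A deployment script is mostly string assembly plus sanity checks, so here is a Python sketch that generates (but does not run) the PowerShell for a SET switch with RDMA-enabled management OS vNICs. The cmdlets and parameters used (`New-VMSwitch -EnableEmbeddedTeaming`, `Set-VMSwitchTeam -LoadBalancingAlgorithm`, `Add-VMNetworkAdapter -ManagementOS`, `Enable-NetAdapterRDMA`) are those in the released Windows Server 2016 modules and may differ in TPv3. The switch, pNIC and vNIC names are hypothetical, and DCB configuration is left out.

```python
def set_switch_commands(switch: str, nics: list[str], algorithm: str = "Dynamic",
                        rdma_vnics: tuple[str, ...] = ()) -> list[str]:
    """Build PowerShell commands for a SET switch; the names passed in are placeholders."""
    if not 1 <= len(nics) <= 8:
        raise ValueError("a SET team needs 1 to 8 physical NICs")
    if algorithm not in ("HyperVPort", "Dynamic"):
        raise ValueError("load balancing must be HyperVPort or Dynamic")
    nic_list = ",".join(f'"{n}"' for n in nics)
    commands = [
        # Create the switch with embedded teaming; management vNICs are added explicitly below
        f'New-VMSwitch -Name "{switch}" -NetAdapterName {nic_list} '
        '-EnableEmbeddedTeaming $true -AllowManagementOS $false',
        f'Set-VMSwitchTeam -Name "{switch}" -LoadBalancingAlgorithm {algorithm}',
    ]
    for vnic in rdma_vnics:
        # One management OS vNIC per SMB Direct path, with RDMA enabled on it
        commands.append(f'Add-VMNetworkAdapter -ManagementOS -Name "{vnic}" -SwitchName "{switch}"')
        commands.append(f'Enable-NetAdapterRDMA -Name "vEthernet ({vnic})"')
    return commands


for line in set_switch_commands("SETswitch", ["pNIC1", "pNIC2"], rdma_vnics=("SMB1", "SMB2")):
    print(line)
```

Generating the commands first makes it easy to review them, or paste them into a change request, before anything touches a production host.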

Summary

SET is a great first step (or second, after vRSS in WS2012 R2):

- Networking is simplified

- RDMA can be converged

- We get vRDMA to the host

We just need Live Migration support and stable physical NIC drivers and firmware.

Are we suggesting that all Hyper-V host traffic should go over 2 NICs? Would we also need to consider QoS under this approach? Would the complications of bandwidth management outweigh the simplified network? As all hosts would have at least 4 NICs, since you need 2 cards for redundancy, I don’t fully understand the real benefit of the TP3 architecture illustrated above.

The host would have 2 NICs. The benefit is simple: you have 2 NICs, not 4, not 8, not 12. Windows QoS in conjunction with DCB will guarantee the flow and bandwidth of traffic. And the bigger benefit: network deployment is a PowerShell or SysCtr deployment, abstracting it from hardware specifics and making it very quick and simple to deploy. Example: I’ll be doing something similar for a customer in 2 days with servers on the other side of the planet. One small script that I wrote 2 years ago will do the work in seconds, and they’ll get something that previously only advanced networking gear ($36,000+ for 16 blades) could have done.
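The arithmetic behind "QoS in conjunction with DCB will guarantee bandwidth" is simple. This Python sketch assumes ETS-style minimum percentages that apply on each physical link, and that each class's traffic is spread over all healthy links (for SMB Direct, e.g. via SMB Multichannel over several vNICs). The traffic classes and percentages are hypothetical. It shows the guaranteed bandwidth with both NICs up and with one failed.

```python
def guaranteed_gbps(link_gbps: float, healthy_links: int,
                    reservations: dict[str, int]) -> dict[str, float]:
    """Minimum bandwidth per traffic class across the healthy links of a team."""
    if sum(reservations.values()) > 100:
        raise ValueError("reservations on a link cannot exceed 100%")
    return {cls: link_gbps * pct / 100 * healthy_links
            for cls, pct in reservations.items()}


plan = {"SMB": 50, "Cluster": 5}
print(guaranteed_gbps(10, 2, plan))  # {'SMB': 10.0, 'Cluster': 1.0}
print(guaranteed_gbps(10, 1, plan))  # {'SMB': 5.0, 'Cluster': 0.5}
```

Sizing the reservations against the one-link-down case is what keeps bandwidth management from becoming a complication.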

Dear AFinn

What is the latest update in Windows Server 2016? Do we have to configure SET manually?

Follow the link above for the official docs.