Storage is the bedrock of all virtualisation. If you get the storage wrong, then you haven’t a hope. And unfortunately I have seen too many installs where the customer/consultant has focused on capacity, and the performance has been dismal; so bad, in fact, that IT are scared to do otherwise normal operations that will impact production systems because the storage system cannot handle the load.

Introducing AutoCache

I was approached at TechEd NA 2014 by some folks from a company called Proximal Data. Their product, AutoCache, which works with Hyper-V and vSphere, is designed to improve the read performance of storage systems.

A read cache is created on the hosts. This cache might be an SSD that is plugged into each host. Data is read from the storage. Data deemed hot is cached on the SSD. The next time that data is required, it is read from the SSD, thus getting some serious speed potential. Cooler data is read from the storage, and writes go direct to the storage.
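To make that read path concrete, here is a minimal Python sketch of a host-side read cache. It is a toy model, not how AutoCache is actually implemented: the `ReadCache` class, the "hot after two reads" rule, the least-recently-used eviction, and the invalidate-on-write behaviour are all assumptions made for illustration.

```python
from collections import OrderedDict


class ReadCache:
    """Toy host-side read cache: hot blocks are served locally, writes go to storage."""

    def __init__(self, backing, capacity, hot_after=2):
        self.backing = backing        # dict standing in for the shared storage
        self.capacity = capacity      # number of blocks the local SSD can hold
        self.hot_after = hot_after    # reads before a block counts as hot (assumed rule)
        self.cache = OrderedDict()    # block -> data, ordered from oldest to newest use
        self.read_counts = {}         # block -> number of reads that went to storage
        self.hits = 0
        self.misses = 0

    def read(self, block):
        if block in self.cache:
            # Hot data: served from the local SSD.
            self.hits += 1
            self.cache.move_to_end(block)
            return self.cache[block]
        # Cooler data: read from the storage system.
        self.misses += 1
        data = self.backing[block]
        count = self.read_counts.get(block, 0) + 1
        self.read_counts[block] = count
        if count >= self.hot_after:
            self.cache[block] = data
            if len(self.cache) > self.capacity:
                self.cache.popitem(last=False)  # evict the least recently used block
        return data

    def write(self, block, data):
        # Writes go direct to the storage; any cached copy is dropped so it cannot go stale.
        self.backing[block] = data
        self.cache.pop(block, None)


storage = {n: f"data-{n}" for n in range(8)}
cache = ReadCache(storage, capacity=2)
for block in [1, 1, 1, 5, 1]:
    cache.read(block)
print(cache.hits, cache.misses)  # 2 3
```

Block 1 is read from storage twice before it is treated as hot, then served from the cache twice; block 5 is only read once, so it never enters the cache.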

Installation and management are easy. There’s a tiny agent for each host. In the Hyper-V world, you license AutoCache, configure the cache volume, and monitor performance using System Center Virtual Machine Manager (SCVMM). And that’s it. AutoCache does the rest for you.

So how does it perform?

The Test Lab

I used the test lab at work to see how AutoCache performed. My plan was simple: I created a single generation 1 virtual machine with a 10 GB fixed VHDX D: drive on the SCSI controller. I installed SQLIO in the virtual machine. I created a simple script to run SQLIO 10 times, one after the other. Each job would perform 120 seconds of random 4K reads. That’s 20 minutes of thumping the storage system per benchmark test.
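The original script is not reproduced here, but a rough Python equivalent of the loop might look like the sketch below. The test file path, thread count, queue depth, and output file names are hypothetical, and the SQLIO switches are written from memory of the tool's documentation, so check them against the SQLIO readme before relying on them.

```python
import subprocess

RUNS = 10              # 10 back-to-back SQLIO jobs per benchmark test
SECONDS_PER_RUN = 120  # each job reads for 120 seconds


def sqlio_command(target=r"D:\testfile.dat", seconds=SECONDS_PER_RUN,
                  threads=4, outstanding=8):
    """Build one SQLIO command line for random 4K reads.

    target, threads and outstanding are assumed values, not the ones used in the lab.
    """
    return [
        "sqlio.exe",
        "-kR",               # read test
        f"-s{seconds}",      # duration in seconds
        "-frandom",          # random access pattern
        "-b4",               # 4 KB IO size
        f"-t{threads}",      # worker threads (assumed)
        f"-o{outstanding}",  # outstanding IOs per thread (assumed)
        "-LS",               # report latency statistics
        "-BN",               # no buffering
        target,
    ]


def run_benchmark(runs=RUNS):
    """Run the jobs one after the other and keep each job's output in its own file."""
    for i in range(1, runs + 1):
        result = subprocess.run(sqlio_command(), capture_output=True,
                                text=True, check=True)
        with open(f"sqlio_run_{i:02d}.txt", "w") as handle:
            handle.write(result.stdout)


total_minutes = RUNS * SECONDS_PER_RUN / 60
print(total_minutes)  # 20.0
```

Calling `run_benchmark()` inside the virtual machine would start the 20 minute test; it is deliberately not called here.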

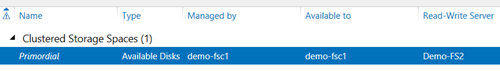

I have two hosts: Dell R420s, each connected to the storage system via dual iWARP (10 GbE RDMA) SFP+ NICs. Each host is running a fully patched WS2012 R2 Hyper-V. The hosts are clustered.

One host, Demo-Host1, had AutoCache installed. I also installed a Toshiba Q Series Pro SATA SSD (554 MB/s read and 512 MB/s write) into this host. I licensed AutoCache in SCVMM, and configured a cache drive on the SSD. Note that for each test involving this host, I deleted and recreated the cache to start with a blank slate.

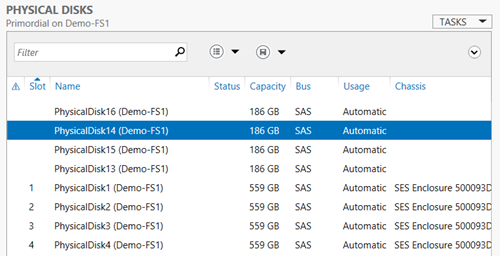

The storage was a Scale-Out File Server (SOFS). Two HP DL360 G7 servers are the nodes, each allowing hosts to connect via dual iWARP NICs. The HP servers are connected to a single DataOn DNS-1640 JBOD. The JBOD contains:

- 8 x Seagate Savvio® 10K.5 600GB HDDs

- 4 x SanDisk SDLKAE6M200G5CA1 200 GB SSDs

- 2 x STEC S842E400M2 SSDs

There is a single storage pool. A 3 column tiered 2-way mirrored virtual disk (50 SSD + 550 HDD) was used in the test. To get clean results, I pinned the virtual machine files either to the SSD tier or to the HDD tier; this allowed me to see the clear impact of AutoCache using a local SSD drive as a read cache.

Tests were run on Demo-Host1, with AutoCache and a cache SSD, and then the virtual machine was live migrated to Demo-Host2, which does not have AutoCache or a cache SSD.

To be clear: I do not have a production workload. I create VMs for labs and tests, that’s it. Yes, the test is unrealistic. I am using a relatively large cache compared to my production storage and storage requirements. But it’s what I have and the results do show what the product can offer. In the end, you should test for your storage system, servers, network, workloads, and work habits.

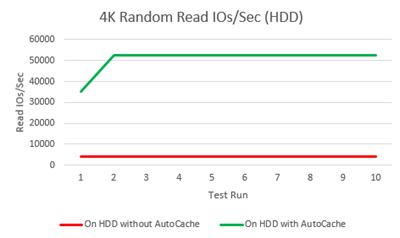

The Results – Using HDD Storage

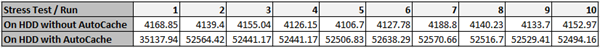

My first series of tests on Demo-Host1 and Demo-Host2 were set up with the virtual machine pinned to the HDD tier. This would show the total impact of AutoCache using a single SSD as a cache drive on the host. First I ran the test on Demo-Host2 without AutoCache, and then I ran the test on Demo-Host1 with AutoCache. The results are displayed below:

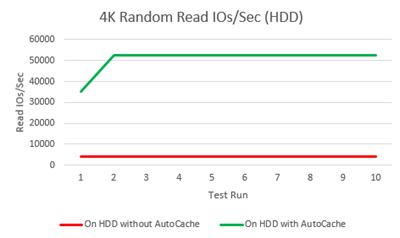

We can see that the non-enhanced host offered an average of 4,143 4K random reads per second. That varied very little. However, we can see that once the virtual machine was on a host with AutoCache, running the tests quickly populated the cache partition and led to increases in read IOPS, eventually averaging around 52,522 IOPS.

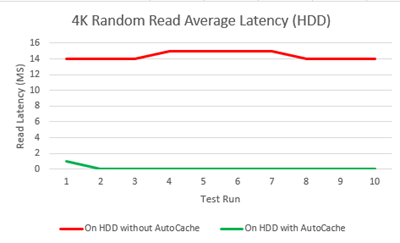

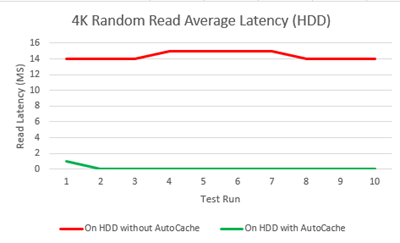

IOPS is interesting but I think the DBAs will like to see what happened to read latency:

Read latency averaged 14.4 milliseconds without AutoCache. Adding AutoCache to the game reduced latency almost immediately, eventually settling at a figure so small that SQLIO reported it as zero milliseconds!

So, what does this mean? AutoCache did an incredible job, boosting throughput to more than 12 times its original level using a single consumer-grade SSD as the local cache in my test. I think those writing time-sensitive SQL queries will love that latency will be near 0 for hot data.
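For anyone who wants to check the arithmetic, this short Python sketch works out the speed-up from the two IOPS averages in this section and converts them to bandwidth. It assumes that a "4K" read is 4,096 bytes; the function names are just for illustration.

```python
BLOCK_BYTES = 4 * 1024  # assumes "4K" means 4,096 bytes

baseline_iops = 4143    # Demo-Host2, no AutoCache, VM pinned to the HDD tier
cached_iops = 52522     # Demo-Host1, AutoCache, once the cache was populated


def speedup(before, after):
    """How many times faster the second figure is than the first."""
    return after / before


def throughput_mib_s(iops, block_bytes=BLOCK_BYTES):
    """Convert an IOPS figure into MiB per second for a fixed IO size."""
    return iops * block_bytes / (1024 * 1024)


print(round(speedup(baseline_iops, cached_iops), 1))  # 12.7
print(round(throughput_mib_s(baseline_iops), 1))      # 16.2
print(round(throughput_mib_s(cached_iops), 1))        # 205.2
```

At this small IO size the cached result works out to roughly 205 MiB/s, up from about 16 MiB/s on the uncached host.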

The Results – Using SSD Storage

I thought it might be interesting to see how AutoCache would perform if I pinned the virtual machine to the SSD tier. Here’s why: My SSD tier consists of 6 SSDs (3 columns). 6 SSDs is faster than 1! The raw data is presented below:

Now things get interesting. The SSD tier of my storage system offered up an average of 62,482 random 4K read operations without AutoCache. This contrasts with the AutoCache-enabled results where we got an average of 52,532 IOPS once the cache was populated. What happened? I already alluded to the cause: the SSD tier of my virtual disk offered up more IOPS potential than the single local SSD that AutoCache was using as a cache partition.

So it seems to me that if you have a suitably sized SSD tier in your Storage Spaces, then this will offer superior read performance to AutoCache, and the SSD tier will also give you write performance via a Write-Back Cache.

HOWEVER, I know that:

- Not everyone is buying SSD for Storage Spaces

- Not everyone is buying enough SSDs for their working set of data

So there is a market to use fewer SSDs in the hosts as read cache partitions via AutoCache.

What About Other Kinds Of Storage?

From what I can see, AutoCache doesn’t care what kind of storage you use for Hyper-V or vSphere. It operates in the host and works by splitting the IO stream. I decided to run some tests using a WS2012 R2 iSCSI target presented directly to my hosts as a CSV. I moved the VM onto that iSCSI target. Once again, I saw almost immediate boosts in performance. The difference was not so pronounced (a little over 4 times), because of the different nature of the physical storage that the iSCSI target VM was on (20 HDDs offering more IOPS than 8), but it was still impressive.

Would I Recommend AutoCache?

Right now, I’m saying you should really consider evaluating AutoCache on your systems to see what it can offer.