Some sales/marketing/channel type in Microsoft will get angry reading this. Good. I am an advocate of Microsoft tech, and I speak out when things are good, and I speak out when things are bad. Friends will criticise each other when one does something stupid. So don’t take criticism personally and get angry, sending off emails to moan about me. Trying to censor me won’t solve the problem. Hear the feedback. Fix the issue.

We’re still many months away from the release of Windows Server 2016 (my guess: September, the week of Ignite 2016) but Microsoft has released the details of how licensing of WS2016 will be changing. Yes; changing; a lot.

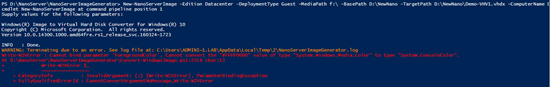

In 2011, I predicted that the growth of cores per processor would trigger Microsoft to switch from per-socket licensing of Windows Server to per-core. Well, I was right. Wes Miller (@getwired) tweeted a link to a licensing FAQ on WS2016 – this paper confirms that WS2016 and System Center 2016 will be out in Q3 2016.

There are two significant changes:

- Switch to per-core licensing

- Standard and Datacenter editions are not the same anymore

Per-Core Licensing

The days when processors got more powerful by becoming faster are over. We are in a virtualized multi-threaded world where capacity is more important than horsepower – plus the laws of physics kicked in. Processors now grow by adding cores.

The largest processor that I’ve heard of from Intel (not claiming that it’s the largest ever) has 60 (SIXTY!) cores!!! Imagine you deploy a host with 2 of those Xeon Phi processors … you can license a huge number of VMs with just 2 copies of WS2012 R2 Datacenter (no matter what virtualization you use). Microsoft is losing money in the upper end of the market because of the scale-out of core counts, so a change was needed.

I hoped that Microsoft would preserve the price for normal customers – it looks like they have, for many customers, but probably not all.

Note – this is per PHYSICAL CORE licensing, not virtual core, not logical processor, not hyperthread.

Yes, the language of this document is horrendous. The FAQ needs a FAQ.

It reads like you must purchase a minimum of 8 cores per physical proc, and then purchase incremental counts of 2 cores to meet your physical core count. The customer that is hurt most is the one with a small server, such as a micro-server – you must purchase a minimum of 16 cores.
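To make that reading concrete, here is a rough sketch of the arithmetic in Python. It encodes only my interpretation of the FAQ (8-core minimum per physical processor, 16-core minimum per server, licenses sold in increments of 2 cores); the function name and constants are made up for illustration and are not anything Microsoft publishes.

```python
import math

# Assumptions taken from my reading of the FAQ, not from official rules:
MIN_CORES_PER_PROC = 8     # minimum cores to license per physical processor
MIN_CORES_PER_SERVER = 16  # minimum cores to license per server
PACK_SIZE = 2              # licenses are bought in increments of 2 cores


def licensed_cores(processors: int, cores_per_processor: int) -> int:
    """Return how many physical cores you would have to license (hypothetical helper)."""
    # Apply the per-processor minimum first.
    per_proc = max(cores_per_processor, MIN_CORES_PER_PROC)
    # Round up to a whole number of 2-core increments.
    per_proc = math.ceil(per_proc / PACK_SIZE) * PACK_SIZE
    # Then apply the per-server minimum.
    return max(processors * per_proc, MIN_CORES_PER_SERVER)


if __name__ == "__main__":
    for procs, cores in [(1, 4), (2, 8), (2, 10), (2, 60)]:
        print(f"{procs} proc(s) x {cores} cores -> license {licensed_cores(procs, cores)} cores")
```

Under these assumptions a single-processor, 4-core micro-server still has to be licensed for 16 cores, which is exactly the case that hurts.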

One of the marketing lines on this is that on-premises licensing will align with cloud licensing – anyone deploying Windows Server in Azure or any other hosting company is used to the core model. A software assurance benefit was allegedly announced in October on the very noisy Azure blog (I can’t find it). You can move your Windows Server (with SA) license to the cloud, and deploy it with a blank VM minus the OS charge. I have no further details – it doesn’t appear on the benefits chart either. More details in Q1 2016.

CALs

The switch to core-focused licensing does not do away with CALs. You still need to buy CALs for privately owned licenses – we don’t need Windows Server CALs in hosting, e.g. Azure.

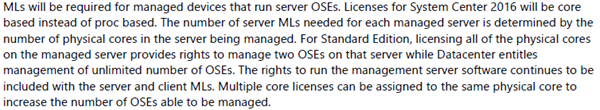

System Center

You’re switching to per-core licensing too.

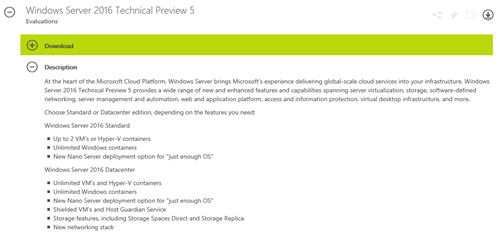

Nano?

This is just an installation type and is not affected by licensing or editions.

Editions?

We know about the “core” editions of WS2016: Standard and Datacenter – more later in this post.

As for Azure Stack, Essentials, Storage Server, etc, we’re told to wait until Q1 2016 when someone somewhere in Redmond is going to have to eat some wormy crow. Why? Keep reading.

Standard is not the same as Datacenter

I found out about the licensing announcement after getting an email from Windows Server User Voice to tell me that my following feedback was rejected:

I knew that some stuff was probably going to end up in Datacenter edition only. Many of us gave feedback: “your solutions for reducing infrastructure costs make no sense if they are in Datacenter only because then your solution will be more expensive than the more mature and market-accepted original solution”.

The following are Datacenter Edition only:

- Storage Spaces Direct

- Storage Replica

- Shielded Virtual Machines

- Host Guardian Service

- Network Fabric

I don’t mind the cloud stuff being Datacenter only – that’s all for densely populated virtualization hosts that Datacenter should be used on. But it’s freaking stupid to put the storage stuff only in this SKU. Let’s imagine a 12 node S2D cluster. Each node has:

- 2 * 800 GB flash

- 8 * 8 TB SATA

That’s roughly 65 TB of raw capacity per node. We have roughly 786 TB raw capacity in the cluster, and we’ll guesstimate 314 TB of usable capacity. If licensing each node costs $6155 then the licensing cost alone (forget RDMA network switches, NICs, servers, and flash/HDD) will be $73,860. Licensing for storage will be $73,860. Licensing. How much will that SAN cost you? Where was the cost benefit in going with commodity hardware there, may I ask?
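If you want to plug in your own node counts and prices, the back-of-envelope sums look something like the sketch below. The node spec and the $6155 per-node figure come from the example above; the 40% usable fraction is my own assumption, picked because it roughly reproduces the usable-capacity guesstimate, and the variable names are just illustrative. Because nothing is rounded along the way, the totals come out a hair higher than the rounded figures in the text.

```python
# Figures from the example cluster in this post.
NODES = 12
FLASH_TB_PER_NODE = 2 * 0.8    # 2 x 800 GB flash
HDD_TB_PER_NODE = 8 * 8        # 8 x 8 TB SATA
LICENSE_USD_PER_NODE = 6155    # licensing cost per node used in the example

# Assumption: about 40% of raw capacity ends up usable after resiliency overhead.
USABLE_FRACTION = 0.40

raw_tb_per_node = FLASH_TB_PER_NODE + HDD_TB_PER_NODE
raw_tb_cluster = NODES * raw_tb_per_node
usable_tb = raw_tb_cluster * USABLE_FRACTION
licensing_usd = NODES * LICENSE_USD_PER_NODE
usd_per_usable_tb = licensing_usd / usable_tb

print(f"Raw capacity per node:   {raw_tb_per_node:.1f} TB")
print(f"Raw capacity in cluster: {raw_tb_cluster:.1f} TB")
print(f"Usable capacity (est.):  {usable_tb:.1f} TB")
print(f"Licensing for cluster:   ${licensing_usd:,}")
print(f"Licensing per usable TB: ${usd_per_usable_tb:,.2f}")
```

The last line is the number to put next to a SAN quote: it is the licensing cost per usable TB before you have bought a single server, switch, or disk.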

This is almost as bad a cock-up as VMware charging for vRAM.

As for Storage Replica, I have a hard time believing that licensing 4 storage controllers for synch replication will cost more than licensing every host/application server for Storage Replica.

S2D is dead. Storage Replica is irrelevant. How are techs that are viewed with suspicion by customers going to gain any traction if they cannot compete with the incumbent? It’s a pity that some marketing bod can’t use Excel, because the storage team did what looks like an incredible engineering job.

If you agree that this decision was stupid then VOTE here.