Notes from TechEd North America 2012 session WSV430:

New in Windows Server 2012

- File services are supported on CSV for application workloads. This can leverage SMB 3.0 and be used for the transparent-failover Scale-Out File Server (SOFS).

- Improved backup/restore

- Improved performance with block level I/O redirection

- Direct I/O during backup

- CSV can be built on top of Storage Spaces

New Architecture

- Antivirus and backup filter drivers are now compatible with CSV. Many are already compatible.

- There is a new distributed application consistent backup infrastructure.

- ODX and spot fixing are supported

- BitLocker is supported on CSV

- AD is no longer a dependency (!?), for improved performance and resiliency.

Metadata Operations

Lightweight and rapid. Relatively infrequent with VM workloads. Require redirected I/O. Includes:

- VM creation/deletion

- VM power on/off

- VM mobility (live migration or storage live migration)

- Snapshot creation

- Extending a dynamic VHD

- Renaming a VHD

Parallel metadata operations are non-disruptive.

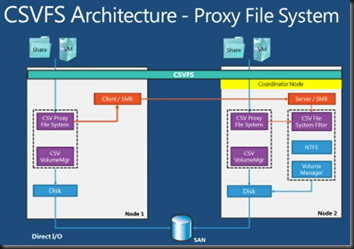

Flow of I/O

- For non-metadata IO: Data is sent to the CSV Proxy File System, which routes it to the disk through the CSV VolumeMgr as direct IO.

- For metadata IO (see above): Nodes that are not the orchestrator (that is, not the CSV coordinator/owner for the CSV in question) use SMB redirected IO. The CSV Proxy File System routes the data over the cluster communications network to the orchestrator, so the orchestrator can handle the activity.
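The routing decision described above can be sketched in a few lines of Python. This is only a toy model of the notes, not how CSVFS is implemented: the `IoPath` enum, the `route_io` function, and the node names are all hypothetical.

```python
from enum import Enum


class IoPath(Enum):
    DIRECT = "direct IO to the disk via the CSV VolumeMgr"
    SMB_REDIRECTED = "SMB redirected IO to the coordinator over the cluster network"
    LOCAL_ON_COORDINATOR = "handled locally by the coordinator"


def route_io(is_metadata: bool, node: str, coordinator: str) -> IoPath:
    """Pick the IO path for a request issued on `node` (hypothetical model)."""
    if not is_metadata:
        # Normal VM reads and writes go straight to the storage.
        return IoPath.DIRECT
    if node == coordinator:
        # The coordinator owns the volume, so nothing needs to be redirected.
        return IoPath.LOCAL_ON_COORDINATOR
    # Metadata changes from any other node are sent to the coordinator.
    return IoPath.SMB_REDIRECTED


if __name__ == "__main__":
    print(route_io(is_metadata=False, node="node2", coordinator="node1").value)
    print(route_io(is_metadata=True, node="node2", coordinator="node1").value)
```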

Interesting Note

You can actually rename C:\ClusterStorage\Volume1 to something like C:\ClusterStorage\CSV1. That’s supported by CSV. I wonder if things like System Center support this?

Mount Points

- W2008 R2 used custom reparse points. That meant backup software needed to understand them.

- Switched to standard Mount Points in WS2012.

Improved interoperability with:

- Performance counters

- OpsMgr (never had free space monitoring before)

- Free space monitoring (speak of the devil!)

- Backup software can understand mount points.

CSV Proxy File System

Appears as CSVFS instead of NTFS in Disk Management. It is still NTFS under the hood. This enables applications and admins to be CSV aware.

Setup

No opt-in any more. CSV is enabled by default. It appears in the normal storage node in FCM. Just right-click on available storage to convert it to CSV.

Resiliency

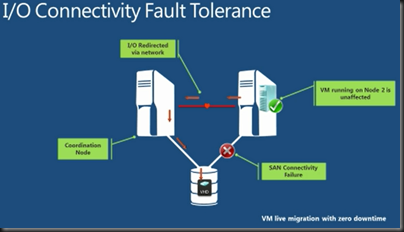

CSV enables fault-tolerant file handles, which provide storage path fault tolerance, e.g. against an HBA failure. When a VM opens a VHD, it gets a virtual file handle that is provided by CSVFS (a metadata operation). The real file handle is opened under the covers by CSV. If the HBA that the host is using to connect the VM to the VHD fails, then the real file handle needs to be recreated. This new handle is mapped to the existing virtual file handle, and therefore the application (the VM) is unaware of the outage. We get transparent storage path fault tolerance.

The SAN connectivity (remember that the direct connection via the HBA has failed, which would otherwise have failed the VM’s VHD connection) is re-routed by Redirected IO via the Orchestrator (CSV coordinator), which “proxies” the storage IO to the SAN.
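To make the virtual/real handle idea concrete, here is a minimal Python sketch. It is an illustration of the concept only: `VirtualHandle`, `FakeRealHandle`, the `"direct"`/`"redirected"` route names, and the shared `failed_routes` set are all made up for this example and do not correspond to any Windows API.

```python
class FakeRealHandle:
    """Stand-in for a real file handle bound to one storage route."""

    def __init__(self, route, failed_routes):
        self.route = route
        self._failed_routes = failed_routes  # shared set simulating broken paths

    def read(self, n):
        if self.route in self._failed_routes:
            raise OSError(f"{self.route} path failed")
        return b"x" * n


class VirtualHandle:
    """What the application (the VM) holds; it never changes."""

    def __init__(self, path, open_real):
        self.path = path
        self._open_real = open_real  # callable(path, route) -> real handle
        self.route = "direct"
        self._real = open_real(path, self.route)

    def read(self, n):
        try:
            return self._real.read(n)
        except OSError:
            # The direct path (e.g. the HBA) failed: recreate the real handle
            # on the redirected route and map it to this same virtual handle.
            self.route = "redirected"
            self._real = self._open_real(self.path, self.route)
            return self._real.read(n)


if __name__ == "__main__":
    failed = set()
    vh = VirtualHandle("vm1.vhd", lambda p, r: FakeRealHandle(r, failed))
    vh.read(4)            # served over the direct route
    failed.add("direct")  # simulate the HBA failing
    vh.read(4)            # still succeeds, now over the redirected route
    print(vh.route)
```

The caller keeps using the same `vh` object before and after the failure, which is the point of the design: only the hidden real handle is swapped.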

If the Coordinator node fails, IO is queued briefly and the orchestration role fails over to another node. No downtime in this brief window.

If the private cluster network fails, the next available network is used … remember you should have at least 2 private networks in a CSV cluster … the second private network would be used in this case.

Spot-Fix

- Scanning is separated from disk repair. Scanning is done online.

- Spot-fixing takes the volume offline only for the repair itself. The time needed is based on the number of errors to fix rather than the size of the volume … could be 3 seconds.

- This offline period does not cause the CSV to go “offline” for applications (VMs) using the CSV being repaired. CSV proxy file system virtual file handles appear to be maintained.

This should allow for much bigger CSVs without chkdsk concerns.

CSV Block Cache

This is a distributed write-through cache. It targets unbuffered IO, which the Windows Cache Manager does not handle (it caches buffered IO only). The CSV block cache is consistent across the cluster.

This has very high value for the pooled VDI VM scenario: a read-only (differencing) parent VHD or read-write differencing VHDs.

You configure the memory for the block cache on a cluster level. 512 MB per host appears to be the sweet spot. Then you enable CSV block cache on a per CSV basis … focus on the read-performance-important CSVs.
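As a rough illustration of what "write-through" means for a read cache like this, here is a small single-node Python sketch. It is not the CSV block cache: the class name, the block-number keys, and the LRU eviction policy are assumptions made for the example, and it does not model the cluster-wide consistency mentioned above.

```python
from collections import OrderedDict


class WriteThroughBlockCache:
    """Toy block cache: reads are cached, writes always go to the disk."""

    def __init__(self, disk, capacity_blocks):
        self.disk = disk                  # dict: block number -> bytes
        self.capacity = capacity_blocks   # assumed fixed cache size in blocks
        self.cache = OrderedDict()        # LRU order is an assumption
        self.hits = 0
        self.misses = 0

    def read(self, block):
        if block in self.cache:
            self.cache.move_to_end(block)
            self.hits += 1
            return self.cache[block]
        self.misses += 1
        data = self.disk[block]
        self.cache[block] = data
        # Evict the least recently used blocks once over capacity.
        while len(self.cache) > self.capacity:
            self.cache.popitem(last=False)
        return data

    def write(self, block, data):
        # Write-through: the disk is updated immediately, never deferred.
        self.disk[block] = data
        if block in self.cache:
            self.cache[block] = data      # keep the cached copy current


if __name__ == "__main__":
    disk = {0: b"parent-vhd-block", 1: b"another-block"}
    cache = WriteThroughBlockCache(disk, capacity_blocks=1)
    cache.read(0)
    cache.read(0)
    print(cache.hits, cache.misses)
```

Repeated reads of the same blocks, as with many pooled VDI VMs reading one parent VHD, are where a cache like this pays off.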

Less Redirected IO

- New algorithm for detecting type of redirected IO required

- Uses oplocks as a distributed locking mechanism to determine if IO can go via the direct path

Comparing speeds:

- Direct IO: Block level IO performance parity

- Redirected IO: Remote file system (SMB 3.0) performance parity … can leverage multichannel and RDMA

Block Level Redirection

This is new in WS2012 and provides a much faster redirected IO during storage path failure and redirection. It is still using SMB. Block level redirection goes directly to the storage subsystem and provides 2x disk performance. It bypasses the CSV subsystem on the coordinator node – SMB redirected IO (metadata) must go through this.

You can speed up redirected IO using SMB 3.0 features such as Multichannel (many NICs and RSS on single NICs) and RDMA. With all the things turned on, you should get 98% of the performance of direct IO via SMB 3.0 redirected IO – I guess he’s talking about Block Level Redirected IO.

VM Density per CSV

- Orchestration is done on a cluster node (parallelized) which is more scalable than file system orchestration.

- Therefore there are no limits placed on this by CSV, unlike in VMFS.

- How many IOPS can your storage handle, versus how many IOPS do your VMs need?

- Direct IO during backup also simplifies CSV design.

If your array can handle it, you could (and probably won’t) have 4,000 VMs on a 64 node cluster with a single CSV.
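Since the real limit is storage IOPS rather than CSV itself, the sizing question is simple arithmetic. The sketch below shows it in Python; the function names and the sample figures (100,000 array IOPS, 25 IOPS per VM) are invented for illustration and are not from the session.

```python
def max_vms_by_iops(array_iops: int, iops_per_vm: int) -> int:
    """How many VMs the storage can serve, ignoring every other limit."""
    if iops_per_vm <= 0:
        raise ValueError("iops_per_vm must be positive")
    return array_iops // iops_per_vm


def vms_per_node(total_vms: int, nodes: int) -> float:
    """Average VM count per host if VMs are spread evenly."""
    if nodes <= 0:
        raise ValueError("nodes must be positive")
    return total_vms / nodes


if __name__ == "__main__":
    # Hypothetical array and workload numbers.
    print(max_vms_by_iops(array_iops=100_000, iops_per_vm=25))
    # The 4,000 VM / 64 node example from the notes.
    print(vms_per_node(4000, 64))
```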

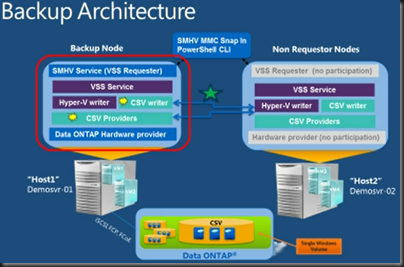

CSV Backup and Restore Enhancements

- Distributed snapshots: VSS based application consistency. Created across the cluster. Backup applications query the CSV to do an application consistent backup.

- Parallel backups can be done across a cluster: Can have one or more concurrent backups on a CSV. Can have one or more concurrent CSV backups on a single node.

- CSV ownership does not change. There is no longer a need for redirected IO during backup.

- Direct IO mode for software snapshots of the CSV – when there is no hardware VSS provider.

- Backup no longer needs to be CSV aware.

Summary: We get a single application consistent backup snapshot of multiple VMs across many hosts using a single VSS snapshot of the CSV. The VSS provider is called on the “backup node” … any node in the cluster. This is where the snapshot is created. Will result in less data being transmitted, fewer snapshots, quicker backups.

How a CSV Backup Works in WS2012

- Backup application talks to the VSS Service on the backup node

- The Hyper-V writer identifies the local VMs on the backup node

- The CSV writer on the backup node contacts the Hyper-V writer on the other hosts in the cluster to gather metadata of files being used by VMs on that CSV

- The CSV Provider on the backup node contacts the Hyper-V Writer to quiesce the VMs

- Hyper-V Writer on the backup node also quiesces its own VMs

- VSS snapshot of the entire CSV is created

- The backup tool can then back up the CSV via the VSS snapshot
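The sequence above can be walked through with a small Python simulation. This is purely a sketch of the ordering as I noted it: `csv_backup`, the node and VM names, and the step strings are hypothetical, and nothing here calls VSS.

```python
def csv_backup(cluster, backup_node):
    """Simulate the backup flow.

    `cluster` maps a node name to the VMs it runs on the CSV being backed up.
    Returns the single snapshot and the list of steps taken.
    """
    if backup_node not in cluster:
        raise KeyError(f"unknown backup node: {backup_node}")

    steps = [f"backup application calls the VSS service on {backup_node}"]

    local_vms = list(cluster[backup_node])
    steps.append(f"Hyper-V writer on {backup_node} identifies local VMs {local_vms}")

    # The CSV writer gathers VM file metadata from every other host.
    remote_vms = {}
    for node, vms in cluster.items():
        if node != backup_node:
            remote_vms[node] = list(vms)
            steps.append(f"CSV writer gathers metadata from Hyper-V writer on {node}")

    # Remote VMs are quiesced, then the backup node quiesces its own.
    quiesced = []
    for node, vms in remote_vms.items():
        quiesced.extend(vms)
        steps.append(f"CSV provider asks Hyper-V writer on {node} to quiesce {vms}")
    quiesced.extend(local_vms)
    steps.append(f"Hyper-V writer on {backup_node} quiesces {local_vms}")

    # One snapshot of the whole CSV covers every VM on every host.
    snapshot = {"created_on": backup_node, "vms": sorted(quiesced)}
    steps.append("one VSS snapshot of the entire CSV is created")
    return snapshot, steps


if __name__ == "__main__":
    cluster = {"node1": ["vm-a"], "node2": ["vm-b", "vm-c"]}
    snapshot, steps = csv_backup(cluster, "node1")
    for step in steps:
        print(step)
    print(snapshot)
```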