Seeing as a VMware marketing employee was so kind as to link to this post from his “independent” blog, I’ll gladly inform his followers of his employer’s desperation and that VMware storage is insecure. You’re welcome, Eric 🙂

We can run many live migrations at once between Windows Server 2012 (WS2012) Hyper-V hosts. By default, the limit is 2 of each type at a time: that’s 2 live migrations and 2 storage migrations.

A word of warning. Storage live migrations cause a spike in IOPS so you don’t want to do too many of those at once. Anyway, they are more of a planned move, e.g. relocation of workloads. The same applies to a shared-nothing live migration where the first 50% of the job is a storage migration.

In this post, I’m more interested in a traditional Live Migration where the storage isn’t moving. It’s either on a SAN or on an SMB 3.0 share. In Windows Server 2008 R2 (W2008 R2), we could do 1 of these at a time. In vSphere 5.0, you can do 4 of these at a time. Windows Server 2012 hasn’t applied a hard limit, just a default of 2.

So how many simultaneous live migrations is the right amount? How long is a piece of string? It really does depend. Remember that a VM live migration is a copy/synchronisation of the VM’s changing memory from one host to another over the network, followed by a pause on hostA and an un-pause on hostB. Harder working VMs = more memory churn (pages being changed during the copy) = longer process per VM. More bandwidth = the ability to run more migrations at once.
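To make the "harder working VMs take longer" point concrete, here is a minimal sketch of an iterative pre-copy model. It is a toy approximation, not Hyper-V's actual algorithm: the function name, the stop threshold, and the round limit are all assumptions made up for illustration.

```python
def precopy_seconds(memory_mb, dirty_mb_per_s, bandwidth_mb_per_s,
                    stop_threshold_mb=16.0, max_rounds=10):
    """Estimate the copy time for one VM under a simple pre-copy model.

    All parameters are illustrative assumptions, not Hyper-V settings.
    """
    if bandwidth_mb_per_s <= 0:
        raise ValueError("bandwidth must be positive")

    remaining = float(memory_mb)  # the first pass copies all of the VM's memory
    total = 0.0
    for _ in range(max_rounds):
        round_time = remaining / bandwidth_mb_per_s
        total += round_time
        # Memory changed while this pass was copying has to be sent again.
        remaining = min(float(memory_mb), dirty_mb_per_s * round_time)
        if remaining <= stop_threshold_mb:
            break
    # Final pass: the VM is paused while the last changed pages are copied.
    total += remaining / bandwidth_mb_per_s
    return total


if __name__ == "__main__":
    idle = precopy_seconds(512, dirty_mb_per_s=0, bandwidth_mb_per_s=100)
    busy = precopy_seconds(512, dirty_mb_per_s=50, bandwidth_mb_per_s=100)
    print(f"idle VM: {idle:.2f}s, busy VM: {busy:.2f}s")
```

In this model an idle VM needs a single pass, while a VM that keeps changing memory needs several passes before the remainder is small enough to copy during the pause. Giving each migration more bandwidth shortens every pass.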

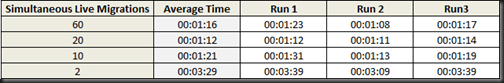

So I decided to run a quick test tonight. This afternoon I built a converged fabric cluster using WS2012 RC. It’s based on 4 * 10 GbE NICs in a team, with 9014-byte jumbo frames enabled, and I have a QoS policy to guarantee 10% of bandwidth to Live Migration. I deployed 60 * 512 MB RAM Ubuntu VMs. And then I ran 4 sets of tests, 3 in each set, where I changed the number of concurrent live migrations. I started with 60 simultaneous, dropped to 20, then to 10, and then to the default of 2.
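For a sense of scale, here is a back-of-envelope calculation using only the figures from that setup. It assumes the QoS guarantee is a floor rather than a cap, treats 1 MB as 10^6 bytes, and ignores protocol overhead and memory changes during the copy, so the numbers are idealised lower bounds and not a prediction of measured times. The function name is made up for illustration.

```python
def ideal_transfer_seconds(vm_count, vm_ram_mb, link_gbps):
    """Time to push all VM memory once over a link, with no overhead."""
    total_gbit = vm_count * vm_ram_mb * 8 / 1000  # MB -> gigabits (decimal units)
    return total_gbit / link_gbps


if __name__ == "__main__":
    team_gbps = 4 * 10                 # 4 * 10 GbE NICs in the team
    lm_floor_gbps = team_gbps * 0.10   # 10% guaranteed to Live Migration

    at_floor = ideal_transfer_seconds(60, 512, lm_floor_gbps)
    at_full = ideal_transfer_seconds(60, 512, team_gbps)
    print(f"guaranteed share only: {at_floor:.1f}s")
    print(f"whole team available:  {at_full:.1f}s")
```

The gap between the two figures shows how much the result depends on what else is using the converged fabric at the time.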

Here’s a summary on the findings:

- There is a sweet spot in my configuration: 20 VMs at once. With a bit more testing I might have found whether the sweet spot was closer to 15 or 25.

- Running 2 simultaneous live migrations was the slowest by far, over double the time required for 60 concurrent live migrations.

Clearly, in my configuration, lots of simultaneous live migrations speed up the evacuation of a host. But the sweet spot could change if:

- The memory churn of the VMs was higher, rather than the idle VMs that I had

- I was using a different network, such as a single 1 GbE or 10 GbE NIC, for Live Migration instead of a 40 GbE converged fabric

So how do you assess the right setting for your farm? That’s a tough one, especially at this pre-release stage. My thoughts are that, during the pilot, you test the installation just like I did. See what works best. Then tune it. If you’re on 1 GbE, then maybe try 10 and work your way down. If you’re on 10 GbE converged fabric then try something like what I did. Find the sweet spot and then stick with that. At least, that’s what I’m thinking at the moment.
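The "test, then tune" approach can be sketched as a small sweep harness. This is only an outline under assumptions: `measure_evacuation` is a hypothetical callable that you would write yourself to set the concurrent migration limit, evacuate a host, and return the elapsed seconds. Nothing here talks to Hyper-V.

```python
from statistics import median


def find_sweet_spot(measure_evacuation, candidates, runs=3):
    """Time each candidate limit several times and pick the fastest median.

    measure_evacuation(limit) is a hypothetical, user-supplied function that
    returns how many seconds a host evacuation took at that limit.
    """
    results = {}
    for limit in candidates:
        timings = [measure_evacuation(limit) for _ in range(runs)]
        results[limit] = median(timings)  # median damps a single odd run
    best = min(results, key=results.get)
    return best, results
```

You would call it with the limits you want to compare, for example `find_sweet_spot(my_timer, [60, 20, 10, 2])`, and then narrow the range around the winner on a second pass.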