See my more recent post, which talks in great detail about how Hyper-V Replica works and how to use it.

At WPC11, Microsoft introduced (at a very high level) a new feature of Windows Server 8 (2012?) called Hyper-V Replica. This came up in conversation in meetings yesterday, and I immediately thought that customers in the SMB space, and even those in the corporate branch/regional office, would want to jump all over this, and would need the upgrade rights to do so.

Let’s look at the DR options that you can use right now.

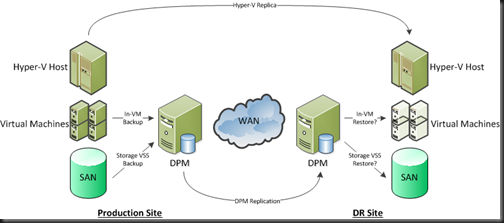

Backup Replication

One of the cheapest options around, and great for the SMB, is replication by System Center Data Protection Manager (DPM) 2010. With this solution you are leveraging the disk-to-disk functionality of your backup solution. The primary site DPM server backs up your virtual machines. The DR site DPM server replicates the backed-up data and its metadata from the primary DPM server. When you invoke the DR plan, virtual machines can be restored to an alternative (and completely different) Hyper-V host or cluster.

Using DPM is cost-effective and, thanks to throttling, light on bandwidth, and it has none of the latency (distance) concerns of higher-end replication solutions. The trade-off is that invocation is a bit more time-consuming.

This is a nice, economical way for an SMB or a branch/regional office to do DR. It does require some work during invocation: that’s the price you pay for a budget-friendly solution that kills two marketing people with one stone. Hey, I like birds but I don’t like marke… Moving on…

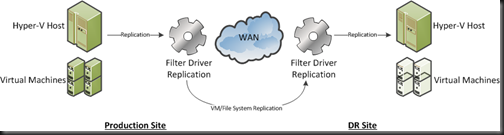

Third-Party Software Based Replication

The next solution up the ladder is a third-party software replication solution. At a high level there are three types:

- Host-based solution: One host replicates to another host, and these are often non-clustered hosts. This works out quite expensive.

- Simulated cluster solution: This is where one host replicates to another. It can integrate with Windows Failover Clustering, or it may use its own high availability solution. Again, this can be expensive, and solutions that feature their own high availability solution can be flaky, possibly even suffering split-brain (active-active) failures when the WAN link fails.

- Software-based iSCSI storage: Some companies produce an iSCSI storage solution that you can install on a storage server, giving you a budget SAN for clustering. Some of these solutions include synchronous or asynchronous replication to a DR site, and they can be much cheaper than a (hardware) SAN with the same features. Beware of using storage-level backup with these… you need to know if VSS will create the volume snapshot within the volume that’s being replicated. If it does, your WAN link will be flooded with unnecessary snapshot replication to the DR site every time you run that backup job.

This solution gives you live replication from the production site to the DR site. In theory, all you need to do to recover from a site failure is power up the VMs in the DR site. Some solutions may do this automatically (beware of split-brain active-active if the WAN link and heartbeat fail). You only need to touch backup during this invocation if the disaster introduced some corruption.
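Why is automatic failover over a WAN so prone to split brain? Here is a toy Python model, not any vendor’s logic: the `Failure` class and both functions are hypothetical. It assumes a naive rule where the DR site powers up the VMs as soon as the primary’s heartbeat stops arriving, and shows that the DR site sees exactly the same thing whether the primary site was lost or only the WAN link was.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Failure:
    """Hypothetical failure scenario between a primary site and a DR site."""
    primary_up: bool
    dr_up: bool
    wan_up: bool


def dr_sees_heartbeat(f: Failure) -> bool:
    # The DR site only receives the heartbeat if the primary AND the WAN link are up.
    return f.primary_up and f.wan_up


def naive_auto_failover(f: Failure) -> set:
    """Sites running the VMs under a heartbeat-only rule (an assumption for illustration)."""
    active = set()
    if f.primary_up:
        active.add("primary")  # the primary has no reason to stop its VMs
    if f.dr_up and not dr_sees_heartbeat(f):
        active.add("dr")       # DR can't tell a dead primary from a dead WAN link
    return active


site_lost = Failure(primary_up=False, dr_up=True, wan_up=True)
wan_lost = Failure(primary_up=True, dr_up=True, wan_up=False)

print(dr_sees_heartbeat(site_lost) == dr_sees_heartbeat(wan_lost))  # True: same observation
print(sorted(naive_auto_failover(site_lost)))  # ['dr']
print(sorted(naive_auto_failover(wan_lost)))   # ['dr', 'primary']: split brain
```

The heartbeat alone gives the DR site no way to tell the two cases apart, so ask the vendor exactly how their automatic failover decides which site is allowed to run the VMs.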

Your WAN requirements can also be quite flexible with these solutions:

- Bandwidth: You will need at least 1 Gbps for Live Migration between sites, while 100 Mbps will suffice for Quick Migration (it still has a use!). Beyond that, you need enough bandwidth to carry the replication traffic, and that depends on the rate of change in your VMs/replicated storage. Your backup logs may help with that analysis.

- Latency: Synchronous replication will require very low latency, e.g. <2 ms; check with the vendor. Asynchronous replication is much better at handling long-distance and high-latency connections. You may lose a few seconds of data during the disaster, but it’ll cost you a lot less to maintain.
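To turn the change figures from your backup logs into a bandwidth number, a back-of-the-envelope calculation is enough. The sketch below is a rough Python helper. The function name, the daily change figure, the replication window, and the 20% overhead factor are all assumptions to swap for your own numbers. It gives an average rate, not a peak, and it ignores Live/Quick Migration traffic.

```python
def required_mbps(changed_gb_per_day: float,
                  window_hours: float = 24.0,
                  overhead: float = 1.2) -> float:
    """Rough average WAN bandwidth (Mbps) needed to ship one day's changes within a window.

    overhead is an assumed fudge factor for protocol overhead and uneven change rates.
    """
    bits = changed_gb_per_day * 1e9 * 8   # decimal GB -> bits
    seconds = window_hours * 3600
    return bits * overhead / seconds / 1e6  # bits per second -> Mbps


# Hypothetical example: 50 GB of change per day.
print(round(required_mbps(50), 2))                  # 5.56 Mbps if spread over 24 hours
print(round(required_mbps(50, window_hours=8), 2))  # 16.67 Mbps if squeezed into 8 hours
```

Squeezing the same change into a shorter window triples the required rate. That is worth remembering if you want replication to run only outside core hours.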

I am not a fan of this type of solution. I’ve been burned by this type of software with file/SQL Server replication in the past. I’ve also seen it used with Hyper-V where compromises on backup had to be made.

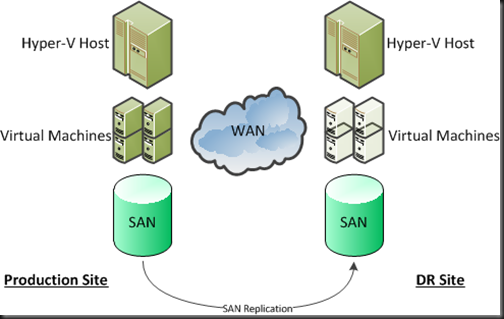

SAN Replication

This is the most expensive solution, and is where the SAN does the replication at the physical storage layer. It is probably the simplest to invoke in an emergency, and depending on the solution, can allow you to create multi-site clusters, sometimes with CSVs that span the sites (and you need to plan very carefully if doing that). For this type of solution you need:

- Quite an expensive SAN, and that expense varies wildly. Some SANs include replication, while some really high-end SANs require additional replication license(s) to be purchased.

- Lots of high-quality, and probably ultra-low-latency, WAN pipe. Synchronous replication will need a lot of bandwidth and very low latency connections. The benefit is (in theory) zero data loss during an invocation: when a write happens on the SAN in site A, it also happens in site B before the write is acknowledged. Check with the manufacturer and/or an expert in this technology (not honest Bob, the PC salesman, or even honest Janet, the person you buy your servers from).
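The synchronous/asynchronous difference can be made concrete with a toy Python model. This is not any SAN’s API; the class and method names are hypothetical. A synchronous write is only complete once both sites have it, which is why every write pays the WAN round trip and why latency matters so much. An asynchronous write is acknowledged straight away and queued, so whatever is still in the queue when site A is lost is the data you lose.

```python
from collections import deque


class ReplicatedVolume:
    """Toy model of a volume replicated from site A to site B (hypothetical)."""

    def __init__(self, synchronous: bool):
        self.synchronous = synchronous
        self.site_a = []
        self.site_b = []
        self.pending = deque()  # acknowledged writes not yet shipped (async only)

    def write(self, block: str) -> None:
        self.site_a.append(block)
        if self.synchronous:
            # Synchronous: only acknowledged once site B has the write too,
            # so each write waits for a round trip across the WAN.
            self.site_b.append(block)
        else:
            # Asynchronous: acknowledge immediately, ship later.
            self.pending.append(block)

    def ship(self, max_blocks: int) -> None:
        """Send up to max_blocks queued writes across the WAN, oldest first."""
        for _ in range(min(max_blocks, len(self.pending))):
            self.site_b.append(self.pending.popleft())

    def lost_if_site_a_fails(self) -> list:
        """Acknowledged writes that never reached site B."""
        return self.site_a[len(self.site_b):]


for mode in (True, False):
    vol = ReplicatedVolume(synchronous=mode)
    for i in range(5):
        vol.write(f"block{i}")
    vol.ship(3)  # the WAN only managed three blocks before the disaster
    print(mode, vol.lost_if_site_a_fails())
# True []
# False ['block3', 'block4']
```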

This is the Maybach of DR solutions for virtualisation, and it is priced as such, which puts it well outside the reach of the SMB. The latency limits of some solutions restrict how far apart your sites can be, which can eliminate some of the benefits. It also requires identical storage in both sites. That can be an issue with branch/regional office to head office replication strategies, or when using a hosting company’s rental solutions.

Now let’s consider what 2012 may bring us, based purely on the couple of minutes of Hyper-V Replica presentation that was shown at WPC11.

Hyper-V Replica Solution

I previously blogged about the little bit of technology that was on show at WPC 2011, with a couple of screenshots that revealed some of its functionality.

Hyper-V Replica appears (in the demonstrated pre-beta build; things are subject to change) to offer:

- Scheduled replication, which can be based on VSS to maintain application/database consistency (SQL Server, Exchange, etc.). You can schedule the replication for outside core hours, minimizing the impact on your Internet link during normal business operations.

- Asynchronous replication. This is perfect for the SMB or the distant/small regional/branch office because it allows the use of lower priced connections, and allows replication over longer distances, e.g. cross-continent.

- It appears that you can maintain several snapshots at the destination site. This could cover you in the corruption scenario, by letting you recover from a snapshot taken before the corruption.

- Authentication between replicating hosts appeared to offer a choice of Kerberos (within the same forest) or X.509 certificates. Maybe certificates would allow replication to a different forest, in other words to a service provider from whom you rent equipment or space?
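Keeping a handful of snapshots at the destination works like a rolling window of recovery points. The sketch below is a hypothetical Python model, not the Hyper-V Replica API. The hourly cycle, the retention of four points, and the dates are all assumptions. It shows how you would pick the newest point taken before the corruption, and what happens when the corruption is older than anything still retained.

```python
from collections import deque
from datetime import datetime, timedelta
from typing import Optional


class RecoveryPoints:
    """Keep only the newest `keep` recovery points (hypothetical model)."""

    def __init__(self, keep: int):
        self.points = deque(maxlen=keep)  # the oldest point drops off automatically

    def add(self, taken_at: datetime) -> None:
        self.points.append(taken_at)

    def latest_before(self, corrupted_at: datetime) -> Optional[datetime]:
        """Newest retained point taken before the corruption, or None if none is left."""
        candidates = [p for p in self.points if p < corrupted_at]
        return max(candidates) if candidates else None


start = datetime(2011, 7, 1)   # arbitrary example date
rp = RecoveryPoints(keep=4)
for hour in range(6):          # six assumed hourly replication cycles
    rp.add(start + timedelta(hours=hour))

print(rp.latest_before(start + timedelta(hours=4, minutes=30)))  # 2011-07-01 04:00:00
print(rp.latest_before(start + timedelta(hours=1, minutes=30)))  # None: 01:00 was pruned
```

The second lookup finds nothing because the last clean point has already aged out, so a short snapshot window on its own may not be enough.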

What Hyper-V Replica will give us is the ability to replicate VMs (and all their contents) from one site to another in a reliable and economic manner. It is asynchronous, and that won’t suit everyone… but the few who really need synchronous replication (NASDAQ and the like) don’t have an issue buying two or three Hitachi SANs, or similar, at a time.

I reckon DPM and DPM replication still have a role in the Hyper-V Replica (or any replication) scenario. If we do have the ability to keep snapshots, we’ll only have a few of them. What do you do if you invoke your DR plan after losing the primary site (flood, fire, etc.) and someone needs to restore a production database, or a file with important decision/contract data? Are you going to call in your tapes from last week? Hah! I bet that courier is getting themselves and their family to safety, stuck in traffic (see the post-9/11 bridge closures or the state of the roads during the New Orleans floods), busy handling lots of similar requests, or worse (it was a disaster). Replicating your backup to the secondary site will allow you to restore data (that is still on the disk store) where required without relying on external services.

Some people actually send their tapes to be stored at their DR site as their off-site archive. That would also help. However, remember you are invoking a DR plan because of an unexpected emergency or disaster. Things will not be going smoothly. Expect it to be the worst day of your career. I bet you’ve had a few bad ones where things don’t go well. Are you going to rely entirely on tape during this time frame? Your day will only get worse if you do: tapes are notoriously unreliable, especially when you need them most. Tapes are slow, and you may find a director impatiently mouth-breathing behind you as the tape catalogues on the backup server. And how often do you use that tape library in the DR site?

To me, it seems like the best backup solution, in addition to Hyper-V Replica (a standard feature of the new version of Hyper-V that I cannot wait to start selling), is to combine quick, reliable disk-to-disk-to-disk backup/replication for short-term backup with tape for archival.

That’s my thinking now, after seeing just a few minutes of a pre-beta demo on a webcast. As I said, it’s subject to change. We’ll learn more at/after Build in September and as we progress through beta, RC, and RTM. Until then, these are musings, and not something to start strategising on.