This article was written just after the beta of WS2012 was launched. We now know that the performance of SMB 3.0 is -really- good, e.g. 1 million IOPS from a VM good.

WS2012 is bringing a lot of changes in how we design storage for our Hyper-V hosts. There’s no one right way, just lots of options, which give you the ability to choose the right one for your business.

There were two basic deployments in Windows Server 2008 R2 Hyper-V, and they’re both still valid with Windows Server 2012 Hyper-V:

- Standalone: The host had internal disk or DAS and the VMs that ran on the host were stored on this disk.

- Clustered: You required a SAN that was either SAS, iSCSI, or Fibre Channel (FC) attached (as below).

And there’s the rub. Everyone wants VM mobility and fault tolerance. I’ve talked about some of this in recent posts. Windows Server 2012 Hyper-V has Live Migration that is independent of Failover Clustering. Guest clustering is limited to iSCSI in Windows Server 2008 R2 Hyper-V, but Windows Server 2012 Hyper-V is adding support for Virtual Fibre Channel.

Failover Clustering is still the ideal. Whereas Live Migration gives proactive migration (move workloads before a problem, e.g. to patch a host), Failover Clustering provides high availability via reactive migration (move workloads automatically in response to a problem, e.g. host failure). The problem here is that a cluster requires shared storage. And that has always been expensive iSCSI, SAS, or FC attached storage.

Expensive? To whom? Well, to everyone. For most SMEs that buy a cluster, the SAN is probably the biggest IT investment that that company will ever make. Wouldn’t it suck if they got it wrong, or if they had to upgrade/replace it in 3 years? What about the enterprise? They can afford a SAN. Sure, but their storage requirements keep growing and growing. Storage is not cheap (don’t dare talk to me about $100 1 TB drives). Enterprises are sick and tired of being held captive by the SAN companies for 100% of their storage needs.

We’re getting new alternatives from Microsoft in Windows Server 2012. This is all made possible by a new version of the SMB protocol.

SMB 3.0 (Formerly SMB 2.2)

Windows Server 2012 is bringing us a new version of the SMB protocol. With the additional ability to do multichannel, where file share data transfer automatically spans multiple NICs with fault tolerance, we are now getting support to store virtual machines on a file server, as long as both client (Hyper-V host) and server (file server) are running Windows Server 2012 or above.
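To make the multichannel idea a bit more concrete, here is a toy model in Python. It is only a sketch of the concept (spread a transfer over every healthy NIC, and carry on if one fails), not a description of how SMB 3.0 actually schedules I/O. The `Nic` class, the `split_transfer` function, and the NIC names are all made up for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Nic:
    """A hypothetical NIC dedicated to SMB 3.0 traffic."""
    name: str
    gbps: float
    up: bool = True


def split_transfer(total_mb: float, nics: list[Nic]) -> dict[str, float]:
    """Share a transfer across the healthy NICs in proportion to link speed.

    This is an illustrative assumption, not the real SMB Multichannel algorithm.
    """
    healthy = [n for n in nics if n.up]
    if not healthy:
        raise RuntimeError("no healthy NIC available for SMB traffic")
    total_speed = sum(n.gbps for n in healthy)
    return {n.name: total_mb * n.gbps / total_speed for n in healthy}


if __name__ == "__main__":
    nics = [Nic("SMB1", 1.0), Nic("SMB2", 1.0)]
    print(split_transfer(1000, nics))   # both NICs share the load

    nics[0].up = False                  # simulate a NIC or cable failure
    print(split_transfer(1000, nics))   # the surviving NIC carries everything
```

The point of the model is simply that the file share keeps working on whatever paths remain, with no teaming or MPIO configuration in the picture.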

If you’re thinking ahead then you’ve already started to wonder about how you will back up these virtual machines using an agent on the host. The host no longer has “direct” access to the VMs as it would with internal disk, DAS, or a SAN. Windows Server 2012 VSS appears to be quite clever, intercepting a backup agent’s request to VSS snapshot a file server stored VM, and redirecting that to VSS on the file server. We’re told that this should all be transparent to the backup agent.

Now we get some new storage and host design opportunities.

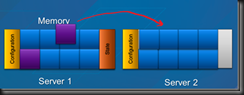

Shared File Server – No Hyper-V Clustering

In this example a single Windows Server 2012 file server is used to store the Hyper-V virtual machines. The Hyper-V hosts can use the same file server, and they are not clustered. With this architecture, you can do Live Migration between the two hosts, even without a cluster.

What about performance? SMB is going to suck, right? Not so fast, my friend! Even with a pair of basic 1 Gbps NICs for SMB 3.0 traffic (instead of a pair of NICs for iSCSI), I’ve been told that you can expect iSCSI-like speeds, and maybe even better. At 10 Gbps … well.

The end result is cheaper and easier to configure storage.

With the lack of fault tolerance, this deployment type is probably suitable only for small businesses and lab environments.

Scale Out File Server (SOFS) – No Hyper-V Clustering

Normally we want our storage to be fault tolerant. That’s because all of our VMs are probably on that single SAN (yes, some have the scale and budget for spanning SANs but that’s a whole different breed of organisation). Normally we would need a SAN made up of fault tolerant disk tray$, switche$, controller$, hot $pare disk$, and $o on. I think you get the point.

Thanks to the innovations of Windows Server 2012, we’re going to get a whole new type of fault tolerant storage called a SOFS.

What we have in a SOFS is an active/active file server cluster. The hosts that store VMs on the cluster use UNC paths instead of traditional local paths (even for CSV). The file servers in the SOFS cluster work as a team. A role in SMB 3.0 called the witness runs on the Hyper-V host (SMB witness client) and file server (SMB witness server). With some clever redirection the SOFS can handle:

- Failure of a file server with just a blip in VM I/O (no outage). The cluster will allow the new host of the VMs to access the files without a 60 second delay you might see in today’s technology.

- Live Migration of a VM from one host to another with a smooth transition of file handles/locks.

And VSS works through the above redirection process too.

One gotcha: you might look at this and think this is a great way to replace current file servers. The SOFS is intended only for large files with little metadata access (few permissions checks, etc). The currently envisioned scenarios are SQL Server file storage and Hyper-V VM file storage. End user file shares, on the other hand, feature many small files with lots of metadata access and are not suitable for SOFS.

Why is this? To make the file servers active/active with smooth VM file handle/lock transition, the storage that the file servers are using consists of 1 or more Cluster Shared Volumes (CSVs). This uses CSV v2.0, not the version we have in Windows Server 2008 R2. The big improvements in CSV 2.0 are:

- Direct I/O for VSS backup

- Concurrent backup across all nodes using the CSV

Some activity in a CSV does still cause redirected I/O, and an example of that is metadata lookup. Now you get why this isn’t good for end user data.

When I’ve talked about SOFS many have jumped immediately to think that it was only for small businesses. Oh you fools! Never assume! Yes, SOFS can be for the small business (more later). But where this really adds value is for the larger business that feels like it is held hostage by its SAN vendors. Organisations are facing a real storage challenge today. SANs are not getting cheaper, and the storage scale requirements are rocketing. SOFS offers a new alternative. For a company that requires certain hardware functions of a SAN (such as replication), SOFS offers an alternative tier of storage. For a hosting company where every penny spent is a penny that makes them more expensive in the eyes of their customers, SOFS is a fantastic way to provide economic, highly performing, scalable, fault tolerant storage for virtual machine hosting.

The SOFS cluster does require shared storage of some kind. It can be made up of the traditional SAN technologies such as SAS, iSCSI, or Fibre Channel with the usual RAID suspects. Another new technology, called PCI RAID, is on the way. It will allow you to use just a bunch of disks (JBOD) and you can have fault tolerance in the form of mirroring or parity (Windows Server 2012 Storage Spaces and Storage Pools). It should be noted that if you want to create a CSV on a Storage Space then it must use mirroring, and not parity.
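A quick back-of-the-envelope sketch of what the mirroring-only rule costs you in capacity. The capacity formulas are simplified textbook approximations that I am assuming for illustration (a two-way mirror stores everything twice, single parity gives up one disk's worth of space); real Storage Spaces numbers depend on layout and overhead. The function names are hypothetical. The only rule taken from above is that a CSV must sit on a mirrored space.

```python
def usable_tb(disks: int, disk_tb: float, resiliency: str) -> float:
    """Rough usable capacity of a JBOD pool, ignoring metadata and reserve overhead."""
    if resiliency == "mirror":
        # Assumption: two-way mirror, so every block is stored twice.
        if disks < 2:
            raise ValueError("a two-way mirror needs at least 2 disks")
        return disks * disk_tb / 2
    if resiliency == "parity":
        # Assumption: single parity, so one disk's worth of space holds parity.
        if disks < 3:
            raise ValueError("single parity needs at least 3 disks")
        return (disks - 1) * disk_tb
    raise ValueError(f"unknown resiliency type: {resiliency!r}")


def can_back_csv(resiliency: str) -> bool:
    """A CSV on a Storage Space must use mirroring, not parity."""
    return resiliency == "mirror"


if __name__ == "__main__":
    for kind in ("mirror", "parity"):
        print(kind, usable_tb(12, 2.0, kind), "TB usable, CSV allowed:", can_back_csv(kind))
```

So for the SOFS scenario you should plan on roughly half of the raw JBOD capacity being usable.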

Update: I had previously blogged in this article that I was worried that SOFS was suitable only for smaller deployments. I was seriously wrong.

Good news for those small deployments: Microsoft is working with hardware partners to create a cluster-in-a-box (CiB) architecture with 2 file servers, JBOD and PCI RAID. Hopefully it will be economic to acquire/deploy.

Update: And for the big biz that needs big IOPS for LOB apps, there are CiB solutions for you too, based on InfiniBand networking, RDMA (SMB Direct), and SSD, e.g. a 5U appliance having the same IOPS as 4 racks of Fibre Channel disk.

Back to the above architecture, I see this one being useful in a few ways:

- Hosting companies will like it because every penny of each Hyper-V host is utilised. Having N+1 or N+2 Hyper-V hosts means you have to add cost to your customer packages and this makes you less competitive.

- Larger enterprises will want to reduce the storage costs that come around every 5 years, and this offers them a different tier of storage for VMs that don’t require those expensive SAN features such as LUN replication.
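The N+1/N+2 point in the first bullet is just arithmetic, so here is a tiny sketch of it. The function name and the host counts are made up for illustration; the idea is only that spare hosts are capacity you pay for but cannot sell.

```python
def reserve_fraction(active_hosts: int, spare_hosts: int) -> float:
    """Fraction of purchased host capacity held back for failover."""
    if active_hosts < 1 or spare_hosts < 0:
        raise ValueError("need at least one active host and no negative spares")
    return spare_hosts / (active_hosts + spare_hosts)


if __name__ == "__main__":
    # Hypothetical examples: non-clustered hosts, then N+1 and N+2 with 4 active hosts.
    for spares in (0, 1, 2):
        share = reserve_fraction(4, spares)
        print(f"4 active + {spares} spare: {share:.0%} of host capacity earns nothing")
```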

SOFS – Hyper-V Cluster

This is the next step up from the previous solution. It is a fully redundant virtualisation and storage infrastructure without the installation of a SAN. A SOFS (active-active file server cluster) provides the storage. A Hyper-V cluster provides the virtualisation for HA VMs.

The Hyper-V hosts are clustered. If they were direct attached to a SAN then they would place their VMs directly on CSVs. But in this case they store their VMs on a UNC path, just as with the previous SMB 3.0 examples. VMs are mobile thanks to Live Migration (as before without Hyper-V clusters) and thanks to Failover. Windows Server 2012 Clustering has had a lot of work done to it; my favourite change being Cluster Aware Updating (easy automated patching of a cluster via Automatic Updates).
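Since the difference between a local CSV path and a UNC path comes up in every SMB 3.0 design above, here is a small sketch that tells them apart using Python's standard `pathlib`. The server name `sofs1`, the share name `vms`, and the helper names are hypothetical, purely for illustration.

```python
from __future__ import annotations

from pathlib import PureWindowsPath


def is_unc(path: str) -> bool:
    """True if the path is of the form \\\\server\\share\\... rather than a local path."""
    return PureWindowsPath(path).drive.startswith("\\\\")


def server_and_share(path: str) -> tuple[str, str]:
    """Split a UNC path into (file server, share)."""
    if not is_unc(path):
        raise ValueError(f"not a UNC path: {path}")
    parts = PureWindowsPath(path).drive.lstrip("\\").split("\\", 1)
    if len(parts) != 2:
        raise ValueError(f"UNC path has no share name: {path}")
    return parts[0], parts[1]


if __name__ == "__main__":
    smb_vm = r"\\sofs1\vms\vm01\vm01.vhdx"                 # VM stored on a file share
    csv_vm = r"C:\ClusterStorage\Volume1\vm01\vm01.vhdx"   # VM stored directly on a CSV
    print(is_unc(smb_vm), server_and_share(smb_vm))
    print(is_unc(csv_vm))
```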

The next architectures “up” from this one are Hyper-V clusters that use SAS, iSCSI, or FC. Certainly SOFS is going to be more scalable than a SAS cluster. I’d also argue that it could be more scalable than iSCSI or FC purely based on cost. Quality iSCSI or FC SANs can do things at the hardware layer that a file server cluster cannot, but you can get way more fault tolerant storage per Euro/Dollar/Pound/etc with SOFS.

So those are your options … in a single site.

What About Hyper-V Cluster Networking? Has It Changed?

In a word: no.

The basic essentials of what you need are still the same:

- Parent/management networking

- VM connectivity

- Live Migration network (this should usually be your first 10 GbE network)

- Cluster communications network (heartbeat and redirected IO which does still have a place, even if not for backup)

- Storage 1 (iSCSI or SMB 3.0)

- Storage 2 (iSCSI or SMB 3.0)

Update: We now have two types of redirected IO that both support SMB Multichannel and SMB Direct. SMB redirection (high level) is for those short metadata operations, and block level redirection (2x faster) is for sustained redirected IO operations such as a storage path failure.

Maybe you add a dedicated backup network, and maybe you add a 2nd Live Migration network.
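If you like to sanity check a host design on paper, the list above boils down to a simple checklist. The sketch below is only that: the role names are labels I have invented for this example, not anything Windows or Failover Clustering defines.

```python
from __future__ import annotations

# The six basic communication paths listed above (hypothetical labels).
REQUIRED = {
    "management",
    "vm_connectivity",
    "live_migration",
    "cluster_comms",
    "storage_1",
    "storage_2",
}

# The "maybe" additions.
OPTIONAL = {"backup", "live_migration_2"}


def missing_networks(planned: set[str]) -> set[str]:
    """Return the required roles that a planned host design does not cover."""
    unknown = planned - REQUIRED - OPTIONAL
    if unknown:
        raise ValueError(f"unrecognised network roles: {sorted(unknown)}")
    return REQUIRED - planned


if __name__ == "__main__":
    design = {"management", "vm_connectivity", "live_migration", "storage_1", "backup"}
    print(sorted(missing_networks(design)))
```

Whether each role gets its own physical NIC or a slice of a shared one is a separate question from whether the role is covered at all.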

How you get these connections is another story. Thanks to native NIC teaming, DCB, QoS, and a lot of other networking changes/additions, there are lots of ways to get these 6+ communication paths in Windows Server 2012. For that, you need to read about converged fabrics.