Speakers: CJ Williams and Gabriel Silva

What was done in Windows Server 2012:

Learnings from data centres

MSFT has some massive scale data centres:

- Cutting costs: maximal utilization of existing resources, no specialized equipment

- Choice and flexibility: no vendor lock-in, any tenant VM deployed in the cloud

- Agility and automation are key: automation for the hoster and tenant networks, including core infrastructure services

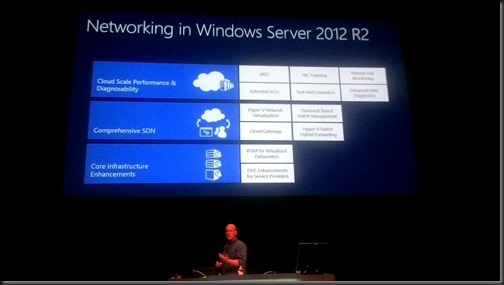

3 areas of focus

Virtual RSS (vRSS)

RSS = Receive Side Scaling. VMs restricted to 1 CPU for network traffic processing in WS2012.

- WS2012 R2 takes RSS and enables it in the VM. vRSS maximises resource utilization by spreading network traffic among multiple VM processors.

- Now possible to virtualize traditionally network intensive physical workloads.

- Requires no hardware upgrade and works with any NICs that support VMQ.

Example usage: network intensive guest apps that need to scale out from just a single vCPU processing interrupts.

DVMQ on the host NICs (for the virtual switch) allows us to use vRSS.
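A minimal sketch of how this might be checked and enabled, not something shown in the session. The adapter names `Ethernet 1` (host) and `Ethernet` (guest) are placeholders I have made up. The first two commands run on the Hyper-V host, the last two inside the VM.

```powershell
# On the Hyper-V host: confirm VMQ is available and enabled on the physical NIC.
# "Ethernet 1" is a placeholder adapter name.
Get-NetAdapterVmq -Name "Ethernet 1"
Enable-NetAdapterVmq -Name "Ethernet 1"

# Inside the guest OS: enable RSS on the virtual NIC so that receive
# processing can be spread across the VM's vCPUs.
# "Ethernet" is a placeholder adapter name.
Enable-NetAdapterRss -Name "Ethernet"
Get-NetAdapterRss -Name "Ethernet"
```

The VM needs more than one vCPU for this to make any difference.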

NIC Teaming

There is a new Dynamic Mode in WS2012 R2. This balances based on flowlets. Optimized utilisation of a team on existing hardware.

You can spread your traffic inbound and outbound. In WS2012, you could only balance outbound traffic; e.g., one VM would be pinned to one pNIC. Now “flowlets” give the OS much finer-grained load balancing across all the NICs, regardless of what workload you are running.
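As a rough sketch (not from the session), Dynamic mode can be selected when a team is created or set on an existing team. The team name `Team1` and the NIC names `NIC1` and `NIC2` are placeholders, and the switch-independent teaming mode is just my assumption for the example.

```powershell
# Create a switch-independent team that uses the Dynamic algorithm.
# Team and NIC names are placeholders.
New-NetLbfoTeam -Name "Team1" -TeamMembers "NIC1", "NIC2" `
    -TeamingMode SwitchIndependent -LoadBalancingAlgorithm Dynamic

# Alternatively, switch an existing team over to Dynamic.
Set-NetLbfoTeam -Name "Team1" -LoadBalancingAlgorithm Dynamic

# Confirm the team's settings.
Get-NetLbfoTeam -Name "Team1" |
    Select-Object Name, TeamingMode, LoadBalancingAlgorithm
```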

Extended ACLs

In WS2012 you can block/allow/measure based on source and destination address (IP or MAC).

In WS2012 R2, you can allow or block for specific workloads:

- Network address

- Application port

- Protocol type

There is now stateful packet inspection, understanding a transaction.
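A hedged sketch of what such rules might look like with the extended ACL cmdlets. The VM name `WebVM`, the port, and the weights are made up for illustration. As I understand it, the rule with the higher weight wins when more than one rule matches, so check that against the cmdlet help before relying on it.

```powershell
# Allow inbound TCP 80 to the VM and track the connection statefully.
# "WebVM" is a placeholder VM name.
Add-VMNetworkAdapterExtendedAcl -VMName "WebVM" -Action Allow -Direction Inbound `
    -LocalPort "80" -Protocol "TCP" -Weight 10 -Stateful $true

# Lower-weight catch-all rule: deny any other inbound traffic.
Add-VMNetworkAdapterExtendedAcl -VMName "WebVM" -Action Deny -Direction Inbound -Weight 1

# List the rules now applied to the VM's network adapters.
Get-VMNetworkAdapterExtendedAcl -VMName "WebVM"
```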

Remote Live Monitoring

Remote monitoring of WS2012 traffic can be done, but it is difficult. In WS2012 R2, you can mirror and capture traffic for remote and local viewing. GUI experience with Message Analyzer (the new NetMon). Supports remote offline traffic captures. Filtering based on IP addresses and VMs.

Configured using WMI; truncated network traffic is redirected as ETW events.

Gabe comes up to demo.

Demo

Dynamic Mode LBFO will be first. We see traditional WS2012 NIC teaming. Dynamic is enabled, and we see all NICs being roughly balanced in PerfMon.

Enabling vRSS in the demo sees throughput go up for the VM. Yes, CPU utilisation goes up in the VM, but that’s why the VM was given more vCPUs: to allow more networking resources. Otherwise the traffic is limited by being pinned to a single vCPU.

Test-NetConnection

The goal was to make Ping better. It’s a new PowerShell cmdlet. It pings, but it returns a lot more information: source IP, remote IP, latency, the ability to test a port, more detailed info, route information, etc.

IMO, it’s about damned time.

This is a very nice tool, and a nice hook to get people into looking at some basic PowerShell scripting, to extend what the cmdlet can already do by itself.
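A few examples of what that might look like in practice. The host names are placeholders, and the loop at the end is just one idea for the kind of basic scripting I mean, not something from the session.

```powershell
# Plain ping-style test.
Test-NetConnection -ComputerName "www.example.com"

# Test a specific TCP port and ask for more detail.
Test-NetConnection -ComputerName "www.example.com" -Port 443 -InformationLevel Detailed

# Trace the route to the target.
Test-NetConnection -ComputerName "www.example.com" -TraceRoute

# Scripting hook: check one port across several hosts.
$servers = "server1", "server2"   # placeholder host names
foreach ($server in $servers) {
    $result = Test-NetConnection -ComputerName $server -Port 3389
    "{0}: RDP reachable = {1}" -f $server, $result.TcpTestSucceeded
}
```

Because the cmdlet returns an object rather than text, properties such as `TcpTestSucceeded` can be used directly in a script.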

Software Defined Networking (Hyper-V Network Virtualization)

3 promises that the network should provide:

- Flexibility: HNV and Virtual Switch

- Automation: VMM – SMI-S, OMI (network devices) and Datacenter Abstraction Layer (putting it all together in VMM)

- Control: Partner extensions, e.g. Cisco Nexus 1000V

SDN should be

- Open (DMTF standard for appliance deployment and configuration – OMI), extensible (virtual switch), and standards based (NVGRE industry standard to encapsulate virtualisation traffic).

- Built in and production ready

- Innovation in software and hardware (pSwitches for example).

HNV uses a 24-bit identifier (over 16 million possible values), which makes it extremely scalable compared to the very limited 4096 possible VLANs.
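The arithmetic behind that comparison, as a quick sketch. The 12-bit figure is the width of the VLAN ID field in 802.1Q, which is where the 4096 comes from. The variable names are mine.

```powershell
# Number of distinct IDs available with each field width.
$vlanIds = [math]::Pow(2, 12)   # 12-bit VLAN ID field: 4096
$hnvIds  = [math]::Pow(2, 24)   # 24-bit HNV identifier: 16777216

"VLAN IDs: $vlanIds"
"HNV IDs:  $hnvIds"
"Ratio:    $($hnvIds / $vlanIds)"   # 4096 times as many IDs
```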

Dynamic Learning of Customer Addresses

HNV can dynamically learn the Customer Addresses being used in the VM Network. This allows guest DHCP and guest clusters to be used in HNV VM Networks.

Performance

NIC teaming is supported on the host. NVGRE Task Offload enabled NICs will be able to offload the processing associated with NVGRE. Emulex and Mellanox are early suppliers.

Enhanced diagnostics

A host admin/operator can use a PoSH cmdlet to test connectivity to a VM, and validate that the VMs can communicate without having access to the VM (network-wise).

Hyper-V Extensible Switch

One layer is the forwarding switch. The Cisco Nexus 1000V is out. NEC has an OpenFlow extension. In WS2012 R2, the HNV filter is moved into the virtual switch. 3rd party extensions can now work on the Customer Address and the Provider Address (both VM and physical addresses).

Example, a virtual firewall extension might want to filter based on CA and/or PA.

An effect of this is that 3rd parties can bring their own network virtualization and implement it in Hyper-V. Examples: Cisco VXLAN or OpenFlow network virtualization.

Standards Based Switch Management

Using PowerShell, you can manage physical switches. Done via Open Management Infrastructure (OMI). VMM provides automation for this. Common management infrastructure across vendors. Automate common network tasks. Logo program to make switches “just work”.

Built-In Software Gateways

A WS2012 R2 gateway has 3 features:

- Site to site multi-tenant aware VPN gateway

- Multi-tenant aware NAT for Internet access

- Forwarding gateway for in-datacentre physical machine access

Demo with Gabe

Site-to-site gateway.

2 clients (Red and Blue) in HNV, using different VPN protocols: SSTP and IKEv2. There is no access without the VPN tunnels. Gabe connects Red’s VPN. Now Red can connect to Red VMs, and Blue cannot connect to anything. He connects Blue’s VPN, and Blue can now connect to Blue VMs.

IP Address Management (IPAM)

Added in WS2012, primarily for auditing IP usage and planning.

In WS2012 R2, you can manage IPs in the physical and virtual spaces. It integrates with SCVMM 2012 R2, and allows you to deploy IP pools, etc.

Improvements Summary

In my words, WS2012 innovated, and WS2012 R2 has smoothed the corners, making the huge strides in 2012 more achievable and easier to manage. And a bunch of new features too.

I hope there is an easy upgrade path for people on 2012 right now. The migration I went through from 2008 R2 to 2012 was not simple and was very time consuming (building new clusters and migrating everything by rolling 1 node over at a time).

Can you run a cluster with 2012 and 2012 R2? It would be great if you can, so you can put hosts in maintenance mode and upgrade them.

This is one area where VMware is 1000x easier. Running mixed mode VMware clusters was supported, and upgrading to new versions was very simple.

In-place upgrade of clusters is not possible. Use cross-version Live Migration. See my update post.