This post will explain how to override false positives in the (network) Azure Web Application Firewall (WAF), without compromising security, using one of four methods in combination with a tiered WAF Policy architecture:

- Managed Rulesets

- Custom Rules

- Exclusions

- Disabled rules

False Positives

A WAF is a rather simple solution, attempting to inspect L7 (application layer) traffic and intercept attacks such as protocol misuse, SQL injection, or cross-site scripting. Unfortunately, false positives can occur.

For example, let’s assume that an API app is securely shared using a WAF. Messages sent to the API might be formatted in JSON, which uses lots of special characters to structure the message. SQL injection defenses count special characters, looking for an attacker who is trying to escape out of a web request to create a database command that will execute. If the defense counts too many special characters (it will!) then an alert will be created, and the message will be blocked if Prevention mode is enabled.

One must allow that traffic through because it is expected traffic that the application (and the business) requires. But one must do this without opening up so many holes in the WAF that it becomes a costly, pointless exercise.
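To make the JSON problem concrete, here is a minimal sketch of a special-character counter. It is not the real OWASP rule: the character list and the threshold of 12 are assumptions chosen only for illustration, and `count_special` and `would_flag` are hypothetical names.

```python
import json

# Characters treated as "special" in this sketch; the real rule's list differs.
SPECIAL_CHARS = set("{}[]\"':;,=()<>!&|*~`#-")

# Hypothetical threshold, chosen only for illustration.
THRESHOLD = 12


def count_special(value: str) -> int:
    """Count the characters in value that this sketch treats as special."""
    return sum(1 for ch in value if ch in SPECIAL_CHARS)


def would_flag(value: str, threshold: int = THRESHOLD) -> bool:
    """Return True when the special-character count exceeds the threshold."""
    return count_special(value) > threshold


# A perfectly legitimate JSON document sent as a single value.
payload = json.dumps({"order": {"id": 42, "items": ["a", "b"], "note": "rush"}})

print(count_special(payload), would_flag(payload))
```

Even this tiny, harmless order message goes over the assumed threshold, purely because of the braces, quotes, and colons that JSON needs.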

Log Analytics Ingestion Charge

There is a side effect to false positives. False positives will vastly outnumber actual attack/probing attempts. Busy workloads can generate huge amounts of logs for false positives. If you use Log Analytics, that data has a cost:

- Storage: Not too bad

- Ingestion: This one is painful

The way to reduce the cost is to reduce the noise by overriding the detections that create false positives. Organizations that have a lot of web traffic could save a significant amount of money here.
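A rough back-of-the-envelope calculation shows why the noise matters. Every number below is a made-up placeholder (event volume, event size, and price per GB), so substitute your own figures and the current price for your region.

```python
def monthly_ingestion_cost(events_per_day: int, bytes_per_event: int,
                           price_per_gb: float, days: int = 30) -> float:
    """Estimate a monthly ingestion charge from event volume and size."""
    gigabytes = events_per_day * bytes_per_event * days / 1_000_000_000
    return gigabytes * price_per_gb


# Hypothetical workload: 5 million false-positive log entries per day,
# about 2 KB each, at a placeholder price of 2.50 per GB ingested.
before = monthly_ingestion_cost(5_000_000, 2_000, 2.50)

# The same workload after overrides remove 90% of the noise.
after = monthly_ingestion_cost(500_000, 2_000, 2.50)

print(f"before: {before:.2f}, after: {after:.2f}, saved: {before - after:.2f}")
```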

WAF Policies

The WAF functionality of the Azure Application Gateway (AppGw) is managed by a resource called an Application Gateway WAF Policy (WAF Policy). The typical approach is to associate one WAF Policy with a WAF resource and to make every customization in that single policy. For reasons that should become apparent later, I am going to urge you to take a slightly more granular approach to managing your WAF if it is used to securely share more than one workload or listener:

- WAF parent policy: A WAF policy will be associated with the WAF. This policy will apply to the WAF and all listeners unless another WAF Policy overrides specific settings.

- Per-Listener/Per-Workload policy: This is a policy that is created specifically for a listener or a workload (a set of listeners). Any customisations that apply only to a listener or a workload will be applied here, without affecting any other listener or workload.
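The two tiers can be pictured with a small data model. This is only a sketch: the class and field names are hypothetical, the rule ID is just an example, and it assumes that the most specific policy associated with a listener is the one that takes effect. Check the Azure documentation for the exact precedence behaviour.

```python
from dataclasses import dataclass, field
from typing import Dict, Set


@dataclass
class WafPolicy:
    """A simplified stand-in for a WAF Policy resource."""
    name: str
    mode: str = "Detection"
    disabled_rules: Set[str] = field(default_factory=set)


@dataclass
class Gateway:
    """A WAF with one parent policy and optional per-listener policies."""
    parent_policy: WafPolicy
    listener_policies: Dict[str, WafPolicy] = field(default_factory=dict)

    def effective_policy(self, listener: str) -> WafPolicy:
        # Assumption: a listener-level policy, when present, wins outright.
        return self.listener_policies.get(listener, self.parent_policy)


gateway = Gateway(parent_policy=WafPolicy("waf-parent"))
gateway.listener_policies["api-listener"] = WafPolicy(
    "waf-api", disabled_rules={"942430"}  # example rule ID
)

print(gateway.effective_policy("api-listener").name)
print(gateway.effective_policy("web-listener").name)
```

The point of the design is that the override on the API listener never touches the web listener, which still gets the untouched parent policy.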

Methodology

You cannot know in advance which false positives you will encounter. If your WAF goes straight into Prevention mode then you will create a world of pain and be the recipient of a lot of hate-messages/emails.

Here’s the approach that I recommend:

- Protect your WAF with an NSG that has Traffic Analytics enabled. The NSG should only allow the necessary HTTP, HTTPS, WAF monitoring (from Azure), and load balancing traffic. Use a custom deny-all rule to block everything else.

- Enable monitoring for the Application Gateway, sending all logs to a queryable destination such as Log Analytics.

- Monitor traffic for a period of time – enough to allow expected normal usage of the full systems. Your monitoring should detect the false positives.

- Verify that Traffic Analytics did not record malicious IP addresses hitting your WAF.

- Query your monitoring data to find the false positives for each listener. Identify the hostname, request URI, ruleset, rule group, and rule ID that is causing the issue on a per-listener/workload basis.

- Ideally, developers fix any issues that create false positives but this is unlikely – so we’ll move on.

- Determine your override strategy (see below).

- Deploy your overrides with the policies still in Detection mode.

- Monitor traffic for another period of time to ensure that there are no more false positives.

- Switch the parent policy to Prevention Mode.

- Switch each per-listener/per-workload policy to Prevention Mode.

- Monitor
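The "find the false positives" step is mostly a grouping exercise. In practice you would query your log destination directly, but the logic can be sketched in a few lines of Python. The records and field names here are hypothetical and simplified; real firewall log entries carry more fields and different names.

```python
from collections import Counter
from typing import Dict, List, Tuple

# Hypothetical, simplified firewall log records with example rule IDs.
SAMPLE_LOGS: List[Dict[str, str]] = [
    {"hostname": "api.example.com", "request_uri": "/orders", "rule_id": "942430"},
    {"hostname": "api.example.com", "request_uri": "/orders", "rule_id": "942430"},
    {"hostname": "api.example.com", "request_uri": "/orders", "rule_id": "942430"},
    {"hostname": "www.example.com", "request_uri": "/health", "rule_id": "920320"},
    {"hostname": "www.example.com", "request_uri": "/health", "rule_id": "920320"},
    {"hostname": "www.example.com", "request_uri": "/login", "rule_id": "942430"},
]

Key = Tuple[str, str, str]


def summarize(records: List[Dict[str, str]], limit: int = 10) -> List[Tuple[Key, int]]:
    """Count hits per (hostname, request URI, rule ID), busiest first."""
    counts = Counter(
        (r["hostname"], r["request_uri"], r["rule_id"]) for r in records
    )
    return counts.most_common(limit)


for (host, uri, rule), hits in summarize(SAMPLE_LOGS):
    print(f"{hits:>4}  {host}  {uri}  rule {rule}")
```

Grouping by hostname and URI as well as rule ID is what lets you scope each override to a single listener or workload instead of the whole WAF.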

Managed Rule Sets

The WAF today has two rulesets that you can use:

- OWASP: Used to detect attacks such as SQL Injection, Cross-site scripting, and so on.

- Microsoft Bot Manager Rule Set: Used to prevent malicious bots from browsing/attacking your workloads.

You need the OWASP ruleset – but we will need to manage it (later). The bot ruleset, in my experience, creates a huge amount of noise with no way of creating granular overrides. One can override the bot ruleset using custom rules, but as you’ll see later, that’s a big stick that is not granular at all!

My approach to this is to disable the Microsoft Bot Manager Rule Set (or leave it disabled) in the parent and child policies. If I have a need to enable it somewhere, I can do it in a per-listener or per-workload policy.

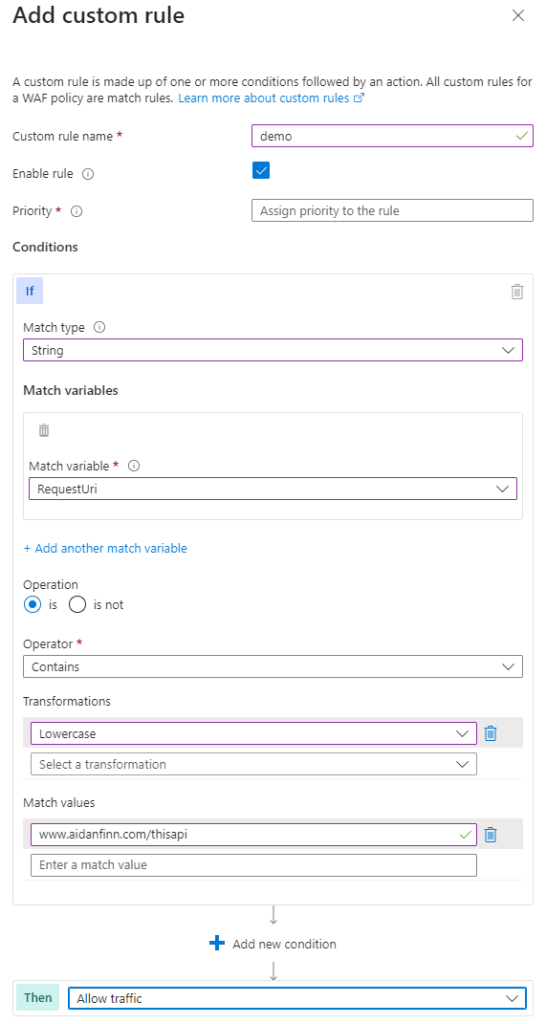

Custom Rules

A custom rule is created in a WAF Policy to force traffic that matches certain criteria to be:

- Always allowed

- Always denied

- Logged only without denying it

You can create a sequence of filters based on:

- IP Address

- Number

- String

- Geo Location

If the set of filters matches a request then your desired action will apply. For example, if I want to force traffic to be allowed to my API, I can enter the API URI as one of the filters (as above) and all traffic will be allowed.

Yes, all traffic will be allowed, including traffic that is not a false positive. Even if only a few OWASP rules were blocking the traffic, the custom rule would effectively disable every OWASP rule for any request that matches it.

If you must use this approach, then implement it in the child policy so it is limited to the associated listener/workload.
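The "big stick" effect is easier to see in code. The sketch below is a simplified model, not the WAF engine: the class names are hypothetical, only two filter types are modelled, and it assumes that a matching Allow or Block rule ends evaluation before the managed rules are reached.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network
from typing import List, Optional


@dataclass
class Request:
    client_ip: str
    uri: str


@dataclass
class CustomRule:
    name: str
    action: str  # "Allow", "Block", or "Log"
    uri_prefix: Optional[str] = None
    source_cidr: Optional[str] = None

    def matches(self, req: Request) -> bool:
        """Every filter that is set must match (filters are AND-ed)."""
        if self.uri_prefix is not None and not req.uri.startswith(self.uri_prefix):
            return False
        if self.source_cidr is not None:
            if ip_address(req.client_ip) not in ip_network(self.source_cidr):
                return False
        return True


def evaluate(req: Request, rules: List[CustomRule]) -> str:
    """Return which stage decides the fate of the request."""
    for rule in rules:
        if rule.matches(req) and rule.action in ("Allow", "Block"):
            # Assumption: the managed (OWASP) rules never see this request.
            return f"{rule.action.lower()}ed by custom rule"
        # A matching "Log" rule only records the hit; evaluation continues.
    return "inspected by managed rules"


rules = [CustomRule(name="AllowApi", action="Allow", uri_prefix="/api/")]

print(evaluate(Request("198.51.100.7", "/api/orders"), rules))
print(evaluate(Request("198.51.100.7", "/index.html"), rules))
```

Anything under `/api/` skips inspection entirely here, attack or not. Adding a `source_cidr` filter to the same rule narrows the hole to a known sender.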

Exclusions

This is the newest of the override types in WAF Policy – and I’ve found it to be the least useful.

The theory is that you can create an exclusion for one or more OWASP rules based on the values of request headers. For example, if a header called RequestHeaderKeys contains a value of X-Scanner you can instruct the affected OWASP rules to be disabled. This sounds really powerful and quite granular. But this starts to fall apart with other scenarios, such as the aforementioned SQL Injection.

Another common rule that alerts on or blocks traffic is Missing User Agent Header. Exclusions work on the value of a header, so if the header is missing, Exclusions cannot evaluate it.

Another gotcha is that you cannot combine header filters to create an exclusion. The Azure Portal experience for creating an Exclusion makes it look like you can. However, the result is two or more Exclusions that work independently.

If Exclusions will work for you, implement them in the per-listener/per-workload policy and specify only the rules that must be overridden. This approach will limit the effect of the exclusion:

- The scope is just the listener/workload that is associated with the WAF Policy.

- The scope is further limited to just requests where the header matches, allowing all other requests and all OWASP rules to be applied.
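The two gotchas above (a missing header cannot match, and multiple Exclusions act independently) can be illustrated with a toy model. This follows the behaviour as I have described it in this post rather than the internals of the WAF engine, and the class, function, header names, and rule IDs are all hypothetical examples.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet, List, Set


@dataclass(frozen=True)
class Exclusion:
    """One header-based exclusion scoped to specific rule IDs."""
    header_name: str
    rule_ids: FrozenSet[str]


def excluded_rules(headers: Dict[str, str], exclusions: List[Exclusion]) -> Set[str]:
    """Return the rule IDs that are skipped for a request with these headers."""
    present = {name.lower() for name in headers}
    skipped: Set[str] = set()
    for exclusion in exclusions:
        # Each exclusion is checked on its own: the effect is OR, not AND.
        if exclusion.header_name.lower() in present:
            skipped |= exclusion.rule_ids
    return skipped


exclusions = [
    Exclusion("X-Scanner", frozenset({"942430"})),
    Exclusion("X-Tenant", frozenset({"942440"})),
]

# Only one of the two headers is present, yet its exclusion still applies.
print(excluded_rules({"X-Scanner": "nightly"}, exclusions))

# No matching header at all: nothing is excluded.
print(excluded_rules({"Accept": "*/*"}, exclusions))
```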

Disabled Rules

The final approach that you can use is to disable rules that are creating false positive alerts. A simple workload might only require one or two rules to be disabled. An older & larger workload might require many OWASP rules to be disabled!

If you are going to disable OWASP rules, then do it in the per-listener/per-workload policy. This will limit the effect of the changes to that listener/workload.

This is a fairly easy approach and it is pretty granular, though not as granular as Exclusions. The downside is that you are completely disabling certain protections for an entire listener/workload, leaving the workload vulnerable to attacks of those previously protected types.

Combinations

If you have the time and the data, you can combine different approaches. For example:

- A webhook that comes from the same IP address all of the time can be allowed via a Custom Rule based on an IP Address filter. Any other traffic will be subject to the full defenses of the WAF.

- If you have certain headers that must be allowed and you want to enable all other protections for all other traffic then use Exclusions.

- If traffic can come from anywhere and you need to override OWASP rules, then disable those rules.
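The three bullets above amount to a small decision tree. Here is one way to write it down. The function name and its two inputs are hypothetical simplifications, and real decisions will usually weigh more factors than these.

```python
def choose_override(fixed_source_ip: bool, identifiable_header: bool) -> str:
    """Pick the narrowest override that fits a given false positive."""
    if fixed_source_ip:
        # Known sender: allow just that address, keep everything else protected.
        return "custom rule with an IP address filter"
    if identifiable_header:
        # Known header: exclude only the affected rules for matching requests.
        return "exclusion on the header"
    # Traffic from anywhere with nothing to match on: last resort.
    return "disable the specific rules"


print(choose_override(fixed_source_ip=True, identifiable_header=False))
print(choose_override(fixed_source_ip=False, identifiable_header=True))
print(choose_override(fixed_source_ip=False, identifiable_header=False))
```

Whatever the function returns, the override still belongs in the per-listener/per-workload policy so that it affects only the workload that needs it.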

No Great Solution

In summary, there is no perfect solution. The best you can do is find the correct override solution for the specific false positive and deploy it to a specific listener or workload. This will limit the holes that you create in the WAF to the absolute minimum while enabling your workloads to function.