In this post, I want to share the most important thing that you should know when you are designing connectivity and security solutions in Microsoft Azure: Azure virtual networks do not exist.

A Fiction Of Your Own Mind

I understand why Microsoft has chosen to use familiar terms and concepts with Azure networking. It’s hard enough for folks who have worked exclusively with on-premises technologies to get to grips with all of the (ongoing) change in The Cloud. Imagine how bad it would be if we ripped out everything they knew about networking and replaced it with something else.

In a way, that’s exactly what happens when you use Azure’s networking. It is most likely very different to what you have previously used. Azure is a multi-tenant cloud. Countless thousands of tenants are signed up and using a single global physical network. If we want to avoid all the pains of traditional hosting and enable self-service, then something different has to be done to abstract the underlying physical network. Microsoft has used VXLAN to create software-defined networking; this means that an Azure customer can create their own networks with address spaces that have nothing to do with the underlying physical network. The Azure fabric tracks what is running where and which NICs can talk to each other, and it forwards packets as required.

In Azure, everything is either a physical (rare) or a virtual (most common) machine. This includes all the PaaS resources and even those so-called serverless resources. When you drill down far enough in the platform, you will find a machine with an operating system and a NIC. That NIC is connected to a network of some kind, either an Azure-hosted one (in the platform) or a virtual network that you created.

The NIC Is The Router

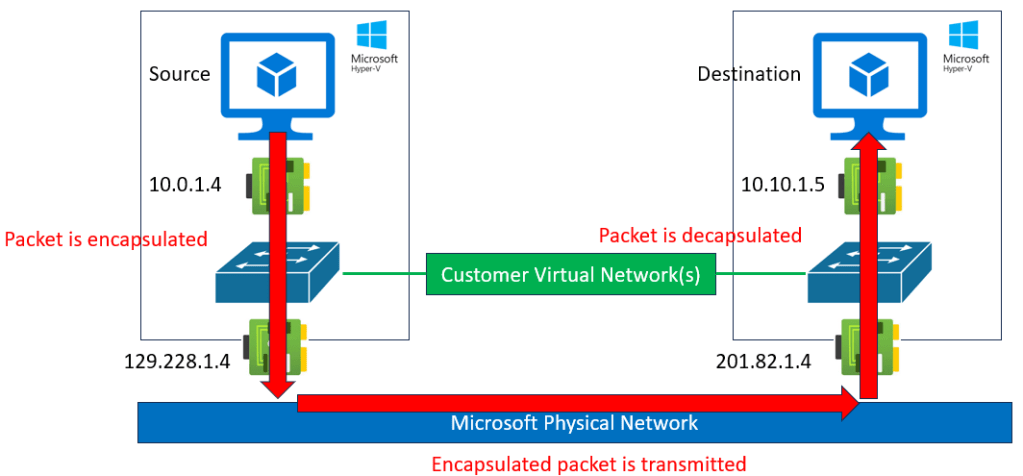

The above image is from a slide I use quite often in my Azure networking presentations. I use it to get a concept across to the audience.

Every virtual machine (except for Azure VMware Solution) is hosted on a Hyper-V host, and remember that most PaaS services are hosted in virtual machines. In the image, there are two virtual machines that want to talk to each other. They are connected to a common virtual network that uses a customer-defined prefix of 10.0.0.0/8.

The source VM sends a packet to 10.10.1.5. The packet exits the VM’s guest OS and hits the Azure NIC. The NIC is connected to a virtual switch in the host. (Did you know that in Hyper-V, the switch port is a part of the NIC to enable consistent processing no matter what host the VM is moved to?) The virtual switch encapsulates the packet to enable transmission across the physical network, which has no idea about the customer’s prefix of 10.0.0.0/8. How could it? I’d guess that 80% of customers use all or some of that prefix. Encapsulation allows the packet to hide the customer-defined source and destination addresses. The Azure Fabric knows where the customer’s destination (10.10.1.5) is running, so it uses the physical destination host’s address in the encapsulated packet.

Now the packet is free to travel across the physical Azure network (across the rack, data centre, region or even the global network) to reach the destination host. There, the packet moves up the stack, is decapsulated and dropped into the NIC of the destination VM, where things like NSG rules (how the NSG is associated doesn’t matter) are processed.
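To make the wrapping and unwrapping concrete, here is a minimal Python sketch of how I picture it. It is not how the Azure fabric is actually implemented. The mapping table, the host names, the network ID `vnet-a` and the source address 10.10.1.4 are all assumptions made up for illustration; only the destination 10.10.1.5 comes from the example above.

```python
from dataclasses import dataclass

# Hypothetical fabric mapping: (virtual network ID, customer IP) -> physical host.
# Every name and address in this table is illustrative, not a real Azure value.
FABRIC_MAP = {
    ("vnet-a", "10.10.1.4"): "host-17",
    ("vnet-a", "10.10.1.5"): "host-42",
}


@dataclass(frozen=True)
class Packet:
    src: str        # customer-defined source address
    dst: str        # customer-defined destination address
    payload: bytes


@dataclass(frozen=True)
class Encapsulated:
    outer_src: str  # physical source host
    outer_dst: str  # physical destination host
    vnet_id: str    # identifies the customer network inside the outer header
    inner: Packet   # the customer packet, carried untouched


def encapsulate(vnet_id: str, packet: Packet) -> Encapsulated:
    """Wrap a customer packet so the physical network only sees host addresses."""
    return Encapsulated(
        outer_src=FABRIC_MAP[(vnet_id, packet.src)],
        outer_dst=FABRIC_MAP[(vnet_id, packet.dst)],
        vnet_id=vnet_id,
        inner=packet,
    )


def decapsulate(frame: Encapsulated) -> Packet:
    """Unwrap at the destination host and hand the original packet to the NIC."""
    return frame.inner


original = Packet("10.10.1.4", "10.10.1.5", b"hello")
frame = encapsulate("vnet-a", original)
delivered = decapsulate(frame)
```

The physical network only ever looks at `outer_src` and `outer_dst`. The customer addresses ride along inside `inner`, which is why thousands of tenants can all use 10.0.0.0/8 without clashing.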

Here’s what you need to learn here:

- The packet went directly from source to destination at the customer level. Sure, it travelled along a Microsoft physical network, but we don’t see that. We see that the packet left the source NIC and arrived directly at the destination NIC.

- Each NIC is effectively its own router.

- Each NIC is where NSG rules are processed: source NIC for outbound rules and destination NIC for inbound rules.
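The last point can be sketched in a few lines of Python. This is a simplified model under stated assumptions, not the platform's rule engine: the `Rule` shape, the example rules and the source address 10.10.1.4 are hypothetical, and the "deny when nothing matches" fallback stands in for the default rules that every real NSG carries. What it does preserve is that rules are evaluated in priority order (lowest number first, first match wins), that outbound rules run at the source NIC, and that inbound rules run at the destination NIC.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network


@dataclass(frozen=True)
class Rule:
    priority: int       # lower number is evaluated first
    direction: str      # "Inbound" or "Outbound"
    remote_prefix: str  # address range of the other end of the flow
    port: int
    allow: bool


def evaluate(rules, direction, remote_ip, port):
    """Return the decision of the first matching rule, in priority order."""
    for rule in sorted(rules, key=lambda r: r.priority):
        if (rule.direction == direction
                and ip_address(remote_ip) in ip_network(rule.remote_prefix)
                and rule.port == port):
            return rule.allow
    return False  # simplification: real NSGs fall through to default rules


def deliver(src_ip, src_rules, dst_ip, dst_rules, port):
    """Outbound rules run at the source NIC, inbound rules at the destination NIC."""
    if not evaluate(src_rules, "Outbound", dst_ip, port):
        return False  # dropped before it ever leaves the source NIC
    return evaluate(dst_rules, "Inbound", src_ip, port)


# Hypothetical rules for the two NICs in the example.
src_rules = [Rule(100, "Outbound", "10.0.0.0/8", 443, True)]
dst_rules = [
    Rule(100, "Inbound", "10.10.1.4/32", 443, True),
    Rule(200, "Inbound", "10.0.0.0/8", 443, False),
]

allowed = deliver("10.10.1.4", src_rules, "10.10.1.5", dst_rules, 443)
blocked = deliver("10.10.1.6", src_rules, "10.10.1.5", dst_rules, 443)
```

Here `allowed` is `True` and `blocked` is `False`, even though both senders sit in the same address space as the destination. Nothing in the middle made that decision; the two NICs did.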

The Virtual Network Does Not Exist

Have you ever noticed that every Azure subnet has a default gateway that you cannot ping?

In the above example, no packets travelled across a virtual network. There were no magical wires. Packets didn’t go to a default gateway of the source subnet, get routed to a default gateway of a destination subnet and then to the destination NIC. You might have noticed in the diagram that the source and destination were on different peered virtual networks. When you peer a virtual network, an operator is not sent sprinting into the Azure data centres to install patch cables. There is no mysterious peering connection.

This is the beauty and simplicity of Azure networking in action. When you create a virtual network, you are simply stating:

Everything connected to this network can communicate with everything else connected to it.

Why do we create subnets? In the past, subnets were for broadcast control. We used them for network isolation. In Azure:

- We can isolate items from each other in the same subnet using NSG rules.

- We don’t have broadcasts – they aren’t possible.

Our reasons for creating subnets are greatly reduced, and so are our subnet counts. We create subnets when there is a technical requirement – for example, an Azure Bastion requires a dedicated subnet. We should end up with much simpler, smaller virtual networks.

How To Think of Azure Networks

I cannot say that I know how the underlying Azure fabric works. But I can imagine it pretty well. I think of it simply as a mapping system. And I explain it using Venn diagrams.

Here’s an example of a single virtual network with some connected Azure resources.

Connecting these resources to the same virtual network is an instruction to the fabric to say: “Let these things be able to route to each other”. When the app service (with VNet Integration) wants to send a packet to the virtual machine, the NIC of the machine hosting the app service will send the packets directly to the NIC of the destination VM.

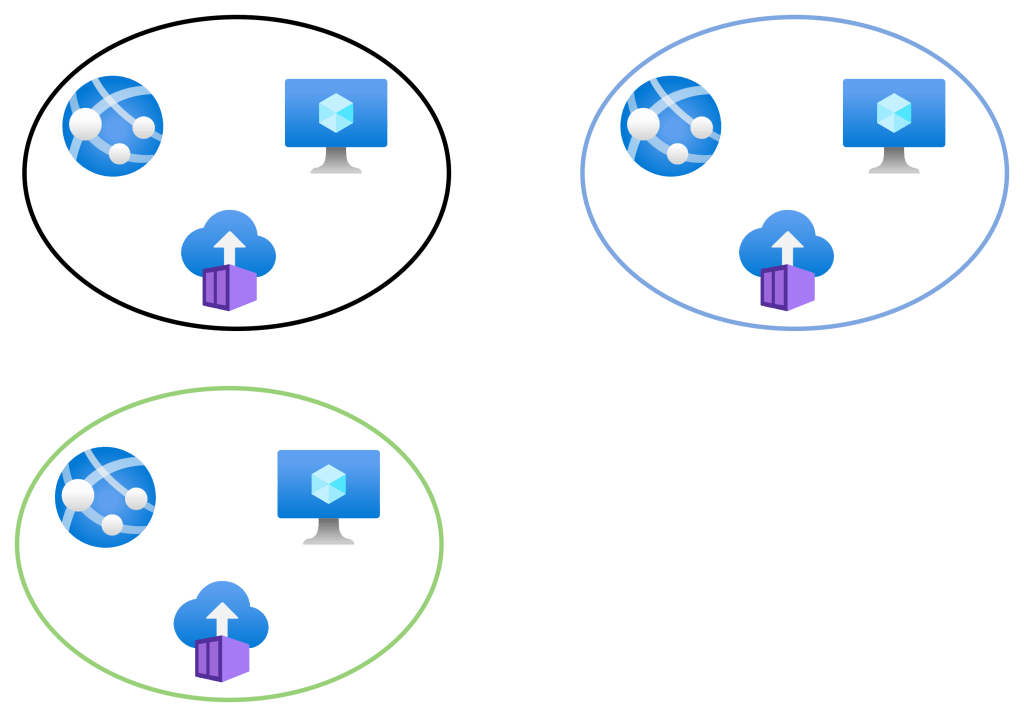

Two more virtual networks, blue and green, are created. Note that none of the virtual networks are connected/peered. Resources in the black network can talk only to each other. Resources in the blue network can talk only to each other. Resources in the green network can talk only to each other.

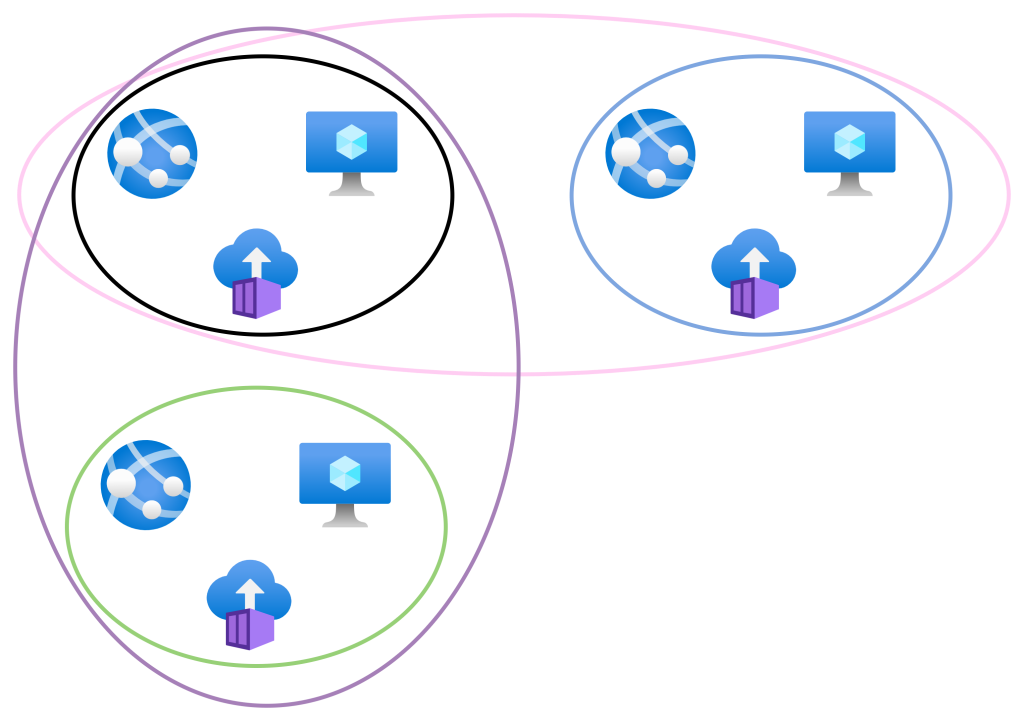

Now we will introduce some VNet peering:

- Black <> Blue

- Black <> Green

As I stated earlier, no virtual cables are created. Instead, the fabric has created new mappings. These new mappings enable new connectivity:

- Black resources can talk with blue resources

- Black resources can talk with green resources.

However, green resources cannot talk directly to blue resources – this would require routing to be enabled via the black network with the current peering configuration.
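If you prefer code to Venn diagrams, the same mapping idea fits in a few lines of Python. This is only my mental model written down, under the assumption that a peering is nothing more than an entry in a lookup; the `PEERINGS` set and the function name are hypothetical.

```python
# Each peering is an unordered pair of virtual networks (colours as in the diagrams).
PEERINGS = {
    frozenset({"black", "blue"}),
    frozenset({"black", "green"}),
}


def can_route_directly(src_vnet: str, dst_vnet: str) -> bool:
    """True when the two networks are the same or share a peering mapping."""
    if src_vnet == dst_vnet:
        return True
    # Only a direct mapping counts: peering is not transitive.
    return frozenset({src_vnet, dst_vnet}) in PEERINGS


black_to_blue = can_route_directly("black", "blue")    # True
green_to_black = can_route_directly("green", "black")  # True
green_to_blue = can_route_directly("green", "blue")    # False
```

The function never walks from green to black to blue. It only checks whether a direct mapping exists, which is exactly why green and blue cannot reach each other without extra routing.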

I can implement isolation within the VNets using NSG rules. If I want further inspection and filtering from a firewall appliance then I can deploy one and force traffic to route via it using BGP or User-Defined Routing.
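As a rough illustration of forcing traffic through a firewall, the sketch below models route selection for a NIC in the green network. The prefixes, the firewall address 10.1.0.4 and the route table are all invented for the example. The selection logic assumes the documented Azure behaviour: the longest matching prefix wins, and when prefixes tie, a user-defined route beats a BGP route, which beats a system route.

```python
from ipaddress import ip_address, ip_network

# Tie-breaker when two routes have the same prefix length.
SOURCE_RANK = {"User": 0, "BGP": 1, "System": 2}

# Hypothetical effective routes for a NIC in the green network (10.2.0.0/16).
# Black (10.1.0.0/16) hosts the firewall; blue (10.3.0.0/16) is not peered with green.
ROUTES = [
    ("10.2.0.0/16", "System", "VnetLocal"),
    ("10.1.0.0/16", "System", "VNetPeering"),
    ("10.3.0.0/16", "User", "VirtualAppliance 10.1.0.4"),
    ("0.0.0.0/0", "System", "Internet"),
]


def next_hop(dest_ip: str, routes=ROUTES) -> str:
    """Pick the next hop: longest prefix first, then user-defined over BGP over system."""
    dest = ip_address(dest_ip)
    matches = [
        (ip_network(prefix), source, hop)
        for prefix, source, hop in routes
        if dest in ip_network(prefix)
    ]
    best = min(matches, key=lambda r: (-r[0].prefixlen, SOURCE_RANK[r[1]]))
    return best[2]


to_blue = next_hop("10.3.0.10")   # "VirtualAppliance 10.1.0.4"
to_local = next_hop("10.2.0.5")   # "VnetLocal"
to_web = next_hop("8.8.8.8")      # "Internet"
```

Note where this decision happens in the model: at the source NIC. The route table does not describe a router sitting in the network; it tells each NIC where to send the packet next.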

Wrapping Up

I think the simple concept above is the biggest barrier that many people face when it comes to good Azure network design. If you can grasp that virtual networks do not exist and that packets route directly from source to destination, and you can work through the consequences of those two facts, then you are well on your way to designing well-connected, secure networks and to being able to troubleshoot them.

If You Liked This Article

If you liked this article, then why don’t you check out my custom Azure training with my company, Cloud Mechanix? My next course is Azure Firewall Deep Dive, a two-day virtual course where I go through how to design and implement Azure Firewall, including every feature. It runs on February 12/13, timed for (but not limited to) European attendees.