If you asked me to pick the killer feature of WS2012 Hyper-V, then Replica would be high if not at the top of my list (64 TB VHDX is right up there in the competition). In Ireland, and we’re probably not all that different from everywhere else, the majority of companies are in the small/medium enterprise (SME) space and the vast majority of my customers work exclusively in this space. I’ve seen how DR is a challenge to enterprises and to the SMEs alike. It is expensive and it is difficult. Those are challenges an enterprise can overcome by spending, but that’s not the case for the SME.

Virtualisation should help. Hardware consolidation reduces the cost, but the cost of replication is still there. SANs often need licenses to replicate. SANs are normally outside of the reach of the SME and even the corporate regional/branch office. Software replication which is aimed at this space is not cheap either, and to be honest, some of these products are more risky than the threat of disaster. And let’s not forget the bandwidth that these two types of solution can require.

Isn’t DR Just An Enterprise Thing?

So if virtualisation mobility and the encapsulation of a machine as a bunch of files can help, what can be done to make DR replication a possibility for the SME?

Enter Replica (Hyper-V Replica), a built-in software based asynchronous replication mechanism that has been designed to solve these problems. This is what Microsoft envisioned for Replica:

- If you need to replicate dozens or hundreds of VMs then you should be using a SAN and SAN replication. Replica is not for the medium/enterprise sites.

- Smaller branch offices or regional offices that need to replicate to local or central (head office or HQ data centre) DR sites.

- SMEs who want to replicate to another office.

- Microsoft partners or hosting companies that want to offer a service where SMEs could configure important Windows Server 2012 Hyper-V host VMs to replicate to their data centre – basically a hosted DR service for SMEs. The requirements for this are that it must have Internet-friendly authentication (not Kerberos) and it must be hardware independent, i.e. the production site storage can be nothing like the replica storage.

- Most crucially of all: limited bandwidth. Replica is designed to be used on commercially available broadband without impacting normal email or browsing activity – Microsoft does also want to sell them Office 365, after all.

How much bandwidth will you need? How long is a piece of string? Your best bet is to measure how much change there is to your customers’ VMs every 5 minutes and that’ll give you an idea of what bandwidth you’ll need.
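As a rough illustration of that measurement, here is a minimal Python sketch that turns "MB changed per 5 minutes" into an uplink figure. The function name and the 30 MB sample value are my own assumptions for the example, not numbers from Microsoft, and it ignores protocol overhead and compression.

```python
def required_uplink_mbps(changed_mb_per_interval: float,
                         interval_seconds: int = 300) -> float:
    """Megabits per second needed to ship one interval's changes within one interval."""
    megabits = changed_mb_per_interval * 8  # megabytes -> megabits
    return megabits / interval_seconds


# Hypothetical measurement: the busiest 5 minute window changed 30 MB across all VMs.
peak_change_mb = 30.0
print(f"{required_uplink_mbps(peak_change_mb):.2f} Mbps")  # prints "0.80 Mbps"
```

Size against the busiest window you measure rather than the average, otherwise the replica will fall behind at exactly the wrong time.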

In short, Replica is designed and aimed at the ordinary business that makes up 95% of the market, and it’s designed to be easy to set up and invoke.

What Hyper-V Replica Is Not Intended To Do

I know some people are thinking of this next scenario, and the Hyper-V product group anticipated this too. Some people will look at Hyper-V Replica and see it as a way to provide an alternative to clustered Hyper-V hosts in a single site. Although Hyper-V Replica could do this, it is not intended for this purpose.

The replication is designed for low bandwidth, high latency networks that the SME is likely to use in inter-site replication. As you’ll see later, there will be a delay between data being written on host/cluster A and being replicated to host/cluster B.

You can use Hyper-V Replica within a site for DR, but that’s all it is: DR. It is not a cluster where you fail stuff back and forth for maintenance windows – although you probably could shut down VMs for an hour before flipping over – maybe – but then it would be quicker to put them in a saved state on the original host, do the work, and reboot without failing over to the replica.

How It Works

I describe Hyper-V Replica as being a storage log based asynchronous disaster recovery replication mechanism. That’s all you need to know …

But let’s get deeper

How Replication Works

Once Replica is enabled, the source host starts to maintain an HRL (Hyper-V Replica Log file) for the VHDs. Every 1 write by the VM = 1 write to VHD and 1 write to the HRL. Ideally, and this depends on bandwidth availability, this log file is replayed to the replica VHD on the replica host every 5 minutes. This is not configurable. Some people are going to see the VSS snapshot (more later) timings and get confused by this, but the HRL replay should happen every 5 minutes, no matter what.

The HRL replay mechanism is actually quite clever; it replays the log file in reverse order, and this allows it only to store the latest writes. In other words, it is asynchronous (able to deal with long distances and high latency by write in site A and later write in site B) and it replicates just the changes.
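To show why replaying in reverse keeps only the latest writes, here is a toy Python model. It is not the real HRL format, which I have not seen documented; the `(block, data)` record layout and the function name are assumptions made purely for illustration.

```python
from typing import Dict, List, Tuple

# Hypothetical log record: (block number, data written). Not the real HRL layout.
LogEntry = Tuple[int, bytes]


def coalesce_latest_writes(log: List[LogEntry]) -> Dict[int, bytes]:
    """Walk the log from newest to oldest, keeping the first write seen per block."""
    latest: Dict[int, bytes] = {}
    for block, data in reversed(log):
        if block not in latest:  # older writes to the same block are superseded
            latest[block] = data
    return latest


# Five writes in the window, but only two distinct blocks were touched.
log = [(7, b"A"), (3, b"B"), (7, b"C"), (7, b"D"), (3, b"E")]
print(coalesce_latest_writes(log))  # prints {3: b'E', 7: b'D'}
```

In this model, five writes on the source become two blocks on the wire, which is the whole point on a slow uplink.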

Note: I love stuff like this. Simple, but clever, techniques that simplify and improve otherwise complex tasks. I guess that’s why Microsoft allegedly ask job candidates why manhole covers are circular

As I said, replication or replay of the HRL will normally take place every 5 minutes. That means if a source site goes offline then you’ll lose anywhere from 1 second to nearly 10 minutes of data.

I did say “normally take place every 5 minutes”. Sometimes the bandwidth won’t be there. Hyper-V Replica can tolerate this. After 5 minutes, if the replay hasn’t happened then you get an alert. The HRL replay will have another 25 minutes (up to 30 completely including the 5) to complete before going into a failed state where human intervention will be required. This now means that with replication working, a business could lose between 1 second and nearly 1 hour of data.
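The timings above can be written down as a tiny Python sketch. The state names and the choice to measure from the last successful replay are my own reading of the behaviour described here, not anything taken from the product.

```python
ALERT_AFTER_MINUTES = 5    # replay is overdue: an alert is raised
FAILED_AFTER_MINUTES = 30  # 5 + 25 minutes of grace: failed, a human must step in


def replication_state(minutes_since_last_replay: float) -> str:
    """Classify replication health from the age of the last successful replay."""
    if minutes_since_last_replay >= FAILED_AFTER_MINUTES:
        return "failed"
    if minutes_since_last_replay > ALERT_AFTER_MINUTES:
        return "alert"
    return "normal"


for minutes in (3, 12, 45):
    print(minutes, replication_state(minutes))
```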

Most organisations would actually be very happy with this. Novices to DR will proclaim that they want 0 data loss. OK; that is achievable with EUR100,000 SANs and dark fibre networks over short distances. Once the budget face smack has been dealt, Hyper-V Replica becomes very, very attractive.

That’s the Recovery Point Objective (RPO – amount of time/data lost) dealt with. What about the Recovery Time Objective (RTO – how long it takes to recover)? Hyper-V Replica does not have a heartbeat. There is no automatic failover. There’s a good reason for this. Replica is designed for commercially available broadband that is used by SMEs. This is often phone network based and these networks have brief outages. The last thing an SME needs is for their VMs to automatically come online in the DR site during one of these 10 minute outages. Enterprises avoid this split brain by using witness sites and an independent triangle of WAN connections. Fantastic, but well out of the reach of the SME. Therefore, Replica will require manual failover of VMs in the DR site, either by the SME’s employees or by a NOC engineer in the hosting company. You could simplify/orchestrate this using PowerShell or System Center Orchestrator. The RTO will be short but have implementation specific variables: how long does it take to start up your VMs and for their guest operating systems/applications to start? How long will it take for you to get your VDI/RDS session hosts (for remote access to applications) up, running and accepting user connections? I’d reckon this should be very quick, and much better than the 4-24 hours that many enterprises aim for. I’m chuckling as I type this; the Hyper-V group is giving SMEs a better DR solution than most of the Fortune 1000 can realistically achieve with oodles of money to spend on networks and storage replication, regardless of virtualisation products.

To answer a question I expect to be common: there is no Hyper-V integration component for Replica. This mechanism works at the storage level, where Hyper-V is intercepting and logging storage activity.

Replica and Hyper-V Clusters

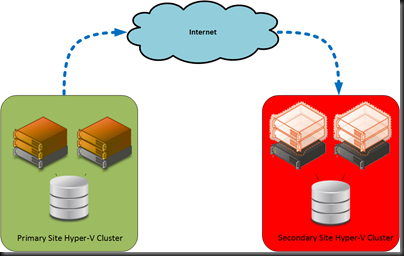

Hyper-V Replica works with clusters. In fact you can do the following replications:

- Standalone host to cluster

- Cluster to cluster

- Cluster to standalone host

The tricky thing is the configuration replication and smooth delegation of replication (even with Live Migration and failover) of HA VMs on a cluster. How can this be done? You can enable an HA role called a Hyper-V Replica Broker on a cluster (once only). This is where you can configure replication, authentication, etc, and the Broker replicates this data out to cluster nodes. Replica settings for VMs will travel with them, and the broker ensures smooth replication from that point on.

Configuring Hyper-V Replica

I don’t have my lab up and running yet, but there are already many step-by-step posts out there. I wanted to focus on how it works and why to use it. But here are the fundamentals:

On the replica host/cluster, you need to enable Hyper-V Replica. Here you can control what hosts (or all) can replicate to this host/cluster. You can do things like have one storage path for all replicas, or create individual policies based on source FQDN, such as storage paths or enabling/pausing/disabling replication.

You do not need to enable Hyper-V Replica on the source host. Instead, you configure replication for each required VM. This includes things like:

- Authentication: HTTP (Kerberos) within the AD forest, or HTTPS (destination provided SSL certificate) for inter-forest (or hosted) replication.

- Select VHDs to replicate

- Destination

- Compressing data transfer: with a CPU cost for the source host.

- Enable VSS once per hour: for apps requiring consistency – not normally required because of the logging nature of Replica and it does cause additional load on the source host

- Configure the number of replicas to retain on the destination host/cluster: Hyper-V Replica will automatically retain X historical copies of a VM on the destination site. These are actually Hyper-V snapshots on the destination copy of the VM that are automatically created/merged (remember we have hot-merge of the AVHD in Windows 8) with the obvious cost of storage. There is some question here regarding application support of Hyper-V snapshots and this feature.

Initial Replication Method

I’ve worked in the online backup business before and know how difficult the first copy over the wire is. The SME may have small changes to replicate but might have TBs of data to copy on the first synchronisation. How do you get that data over the wire?

- Over-the-wire copy: fine for a LAN, if you have lots of bandwidth to burn, or if you like being screamed at by the boss/customer. You can schedule this to start at a certain time.

- Offline media: You can copy the source VMs to some offline media, and import it to the replica site. Please remember to encrypt this media in case it is stolen/lost (BitLocker-To-Go), and then erase (not format) it afterwards (DBAN). There might be scope for an R2/Windows 9 release to include this as part of a process wizard. I see this being the primary method that will be used. Be careful: there is no time out for this option. The HRL on the source site will grow and grow until the process is completed (at the destination site by importing the offline copy). You can delete the HRLs without losing data – it is not like a Hyper-V snapshot (checkpoint) AVHD.

- Use a seed VM on the destination site: Be very very careful with this option. I really see it as being a great one for causing calls to MSFT product support. This is intended for when you can restore a copy of the VM in the DR site, and it will be used in a differencing mechanism where the differences will be merged to create the synch. This is not to be used with a template or similar VMs. It is meant to be used with a restored copy of the same VM with the same VM ID. You have been warned.
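To put the over-the-wire option in perspective, here is a back-of-the-envelope Python sketch. The function name, the 1 TB data size and the 300 kbps uplink are assumptions for illustration; it uses decimal units and ignores compression and protocol overhead.

```python
def initial_copy_days(data_gb: float, uplink_mbps: float,
                      usable_fraction: float = 1.0) -> float:
    """Days needed to push data_gb over an uplink of uplink_mbps (decimal units)."""
    megabits = data_gb * 1000 * 8  # GB -> MB -> megabits
    seconds = megabits / (uplink_mbps * usable_fraction)
    return seconds / 86400  # seconds in a day


# Hypothetical SME: 1 TB of VHDs and an ADSL uplink of 0.3 Mbps (300 kbps).
print(f"{initial_copy_days(1000, 0.3):.0f} days")  # prints "309 days"
```

Numbers like that are why I expect offline media to be the primary method for the first copy.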

And that’s it. Check out the social media and you’ll see how easy people are saying Hyper-V Replica is to set up and use. All you need to do now is check out the status of Hyper-V Replica in the Hyper-V Management Console, Event Viewer (Hyper-V Replica log data using the Microsoft-Windows-Hyper-V-VMMSAdmin log), and maybe even monitor it when there’s an updated management pack for System Center Operations Manager.

Failover

I said earlier that failover is manual. There are two scenarios:

Planned: You are either testing the invocation process or the original site is running but unavailable. In this case, the VMs start in the DR site, there is guaranteed zero data loss, and the replication policy is reversed so that changes in the DR site are replicated to the now offline VMs in the primary site.

Unplanned: The primary site is assumed offline. The VMs start in the DR site and replication is not reversed. In fact, the policy is broken. To get back to the primary site, you will have to reconfigure replication.

Can I Dispense With Backup?

No, and I’m not saying that as the employee of a distributor that sells two competing backup products for this market. Replication is just that, replication. Even with the historical copies (Hyper-V snapshots) that can be retained on the destination site, we do not have a backup with any replication mechanism. You must still do a backup, as I previously blogged, and you should have offsite storage of the backup.

Many will continue to do off-site storage of tapes or USB disks. If your disaster affects the area, e.g. a flood, then how exactly will that tape or USB disk get to your DR site if you need to restore data? I’d suggest you look at backup replication, such as what you can get from DPM.

The Big Question: How Much Bandwidth Do I Need?

Ah, if I knew the answer to that question for every implementation then I’d know many answers to many such questions and be a very rich man, travelling the world in First Class. But I am not.

There’s a sizing process that you will have to do. Remember that once the initial synchronisation is done, only changes are replayed across the wire. In fact, it’s only the final resultant changes of the last 5 minutes that are replayed. We can guestimate what this amount will be using approaches such as these:

- Set up a proof of concept with a temporary Hyper-V host in the client site and monitor the link between the source and replica: There’s some cost to this but it will be very accurate if monitored over a typical week.

- Do some work with incremental backups: Incremental backups, taken over a day, show how much change is done to a VM in a day.

- Maybe use some differencing tool: but this could have negative impacts.

Some traps to watch out for on the bandwidth side:

- Asymmetric broadband (ADSL): The customer claims to have an 8 Mbps line but in reality it is 7 Mbps down and 300 kbps up. It’s the uplink that is the bottleneck because you are sending data up the wire. Most SMEs aren’t going to need all that much. My experience with online backup verifies that, especially if compression is turned on (will consume source host CPU).

- How much bandwidth is actually available: monitor the customer’s line to tell how much of the bandwidth is being consumed or not by existing services. Just because they have a functional 500 kbps upload, it doesn’t mean that they aren’t already using it.
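Both traps can be folded into one quick check. This is a minimal Python sketch, assuming you have already measured the line’s real uplink, what existing services consume, and the change per 5 minutes; the function name and the 2:1 compression ratio are hypothetical, and your own data may compress very differently.

```python
def uplink_headroom_kbps(line_uplink_kbps: float,
                         existing_usage_kbps: float,
                         changed_mb_per_interval: float,
                         interval_seconds: int = 300,
                         compression_ratio: float = 1.0) -> float:
    """Spare uplink (kbps) left after existing traffic and replication.

    A negative result means replication will not keep up on this line.
    """
    available = line_uplink_kbps - existing_usage_kbps
    # MB changed -> MB on the wire after compression -> kilobits -> kbps
    needed = (changed_mb_per_interval / compression_ratio) * 8 * 1000 / interval_seconds
    return available - needed


# Hypothetical site: 300 kbps real uplink, 100 kbps already in use,
# 6 MB of change per 5 minutes, and an assumed 2:1 compression ratio.
print(uplink_headroom_kbps(300, 100, 6, compression_ratio=2.0))  # prints 120.0
```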

Very Useful Suggestion

Think about your servers for a moment. What’s the one file that has the most write activity? It is probably the paging file. Do you really want to replicate it from site A to site B, needlessly hammering the wire?

Hyper-V Replica works by intercepting writes to VHDs. It has no idea of what’s inside the files. You can’t just filter out the paging file. So the excellent suggestion from the Hyper-V product group is to place the paging file of each VM onto a different VHD, e.g. a SCSI attached D drive. Do not select this drive for replication. When the VMs are failed over, they’ll still function without the paging file, just not as well. You can always add one after if the disaster is sustained. The benefit is that you won’t needlessly replicate paging file changes from the primary site to the DR.

Summary

I love this feature because it solves a real problem that the majority of businesses face. It is further proof that Hyper-V is the best value virtualisation solution out there. I really do think it could give many Microsoft Partners a way to offer a new multi-tenant business offering to further reduce the costs of DR.

EDIT:

I have since posted a demo video of Hyper-V Replica in action, and I have written a guest post on Mary Jo Foley’s blog.

EDIT2:

I have written around 45 pages of text (in Word format) on the subject of Hyper-V Replica for a chapter in the Windows Server 2012 Hyper-V Installation and Configuration Guide book. It goes into great depth and has lots of examples. The book should be out Feb/March of 2013 and you can pre-order it now: