This session (original here) from Microsoft Ignite 2016 is looking at new networking features in Azure such as Web Application Firewall, IPv6, DNS, accelerated networking, VNet Peering and more. This post is my collection of notes from the recording of this session.

The speakers are:

- Yousef Khalidi, Corporate Vice President, Microsoft

- Jason Carson, Enterprise Architect, Manulife

- Art Chenobrov, Manager Identity, Access and Messaging, Hyatt Hotels

- Gabriel Silva, Program Manager, Microsoft

A mix of Microsoft and non-Microsoft speakers. There will be a breadth overview and some customer testimonials. A chunk of marketing consumes the first 7 minutes. Then on to the good stuff.

High Performance Networking

A number of improvements have been made at no cost to the customer. Honestly, I’ve seen some by accident, and they ruined (in a good way) some of my demos

- Improved performance of all VMs, seeing VNet performance improve by 33% to 50%

- More IOPS to storage – I saw IOPS increase in some demo/tests

- For Linux and Windows VMs

- The global deployment will be completed in 2016 – phased deployments across the Azure regions.

You have to do nothing to get these benefits. I’m sure that Yousef said that’ll we’ll be able to get up to 21 Gbps down depending on the VM SKU/size. Some of this is made possible thanks to making better utilization of NIC capacity.

Accelerated Networking

Azure now has SR-IOV (single-root IO virtualization), where a VM can connect directly to a physical NIC without routing traffic via the virtual switch in the host partition. The results are:

- 10 x latency improvement

- Increased packets per second (PPS)

- Reduced jitter – great for media/voice

Now Azure has the highest bandwidth VMs in the cloud: DS15v2 and D15v2 can hit 25 Gbps (in preview). The competition can get up to 20 Gbps “on a good day”.

Performance sensitive applications will benefit. There is a 1.5x improvement for Azure SQL DB in memory OLTP transactions.

Microsoft are rolling this out across Azure over this and the next calendar years. Gabe (Gabriel) does a demo, doing VM to VM latency and bandwidth test. You can enable SR-IOV in the Portal (Accelerated Network setting). The demo is done in West Central US region. You can verify that SR-IOV is enabled for the vNIC in the guest OS – Windows, look for a virtual function (VF) network adapter in Devices. Interestingly, in the demo, we can tell that the host uses Mellanox ConnectX-3 RDMA NICs. The first demo does 100,000 pings on VMs, and this is 10 times lower than current numbers. They run a network stress test between two VMs.

The get 25 Gbps of connectivity between the 2 VMs:

This functionality will be coming “soon” to us.

Next there’s a demo with connection latency tests to a database, from a VM with SRIOV and one without. We see that latency is significantly lower on the accelerated VM. They re-run the test to make the results more tangible. The un-accelerated machine can query 270 rows per second while the accelerated one is hitting 664. Same VMs – just SRIOV is enabled on one of them.

The subscription must be enabled for this feature first (still rolling it out) and then all of your VMs can leverage the feature. There is no cost to turning it on and using the feature.

Back to Yousef.

The Network Big Picture

The following is an old slide full of old features:

On to the new stuff.

VNet Peering (GA)

A customer can have lots of isolated features with duplicated effort.

Customers want to consolidate some of this. For example, can we:

- Have one VNet that has load balancing and virtual appliance firewalls/proxies

- Connect other VNets to this?

The answer is yes, you can now using VNet peering (limited to connections in a single region) which just went GA.

Note that VM connections across a VNet run at the speed of the VMs’ NICs.

Azure DNS (GA)

You can host your records in Azure DNS or elsewhere. The benefit of Azure is that it is global and fast.

IPv6 for Azure VMs

We can create IPv6 IP addresses on the load balancer, and use AAAA DNS records (which you can host in Azure DNS if you want) to access VM services in Azure. This is supported for Linux and Windows. This is a big deal for IoT devices.

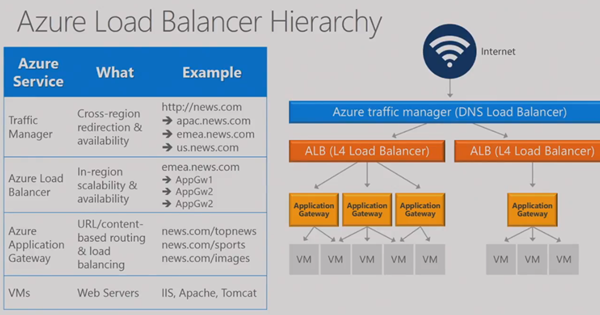

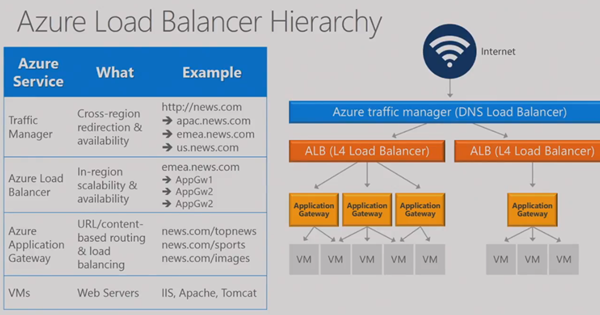

Load Balancing (Review)

Yousef reviews how load balancing can be done toady in Azure. A traffic manager profile (based on DNS records and abstraction) does load balancing/fail over between 2+ Azure deployments (across 1+ regions). A single deployment has an Azure Load Balancer, which uses Layer 4 LB rules to pass traffic through to the VNet. Within the VNet, Azure application gateways (can) the proxy/direct/load balance Layer 7 traffic to web servers (VMs) on the VNet.

Web Application Firewall

The web application gateway is still relatively unknown, in my experience, even though it’s been around for 1 year. This is layer 7 handling of traffic to web farms/servers.

A preview for web application firewall (WAF) has been announced – an extension of the web application gateway.

WAF adds security to the WAG. In current preview, it uses a hard set of rules, but custom rules will be coming soon. MSFT hopes to GA it soon (must be ready first).

WAF is an add-on SKU to the gateway. It can run in detection mode (great to watch traffic without intervening – try it out). When you are happy, you switch over to prevention mode so it can intervene.

Multiple VIPS for Load Balancer

This is a cost reduction improvement. For example, you needed to run multiple databases behind internal load balancers, with each DB pair requiring a unique VIP. Now we can assign multiple VIPs to a LB, and consolidate the databases to a pair of VMs instead of multiple pairs of VMs.

Back end ports can also be reused to facilitate the above.

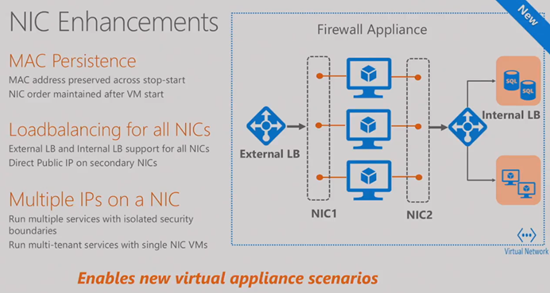

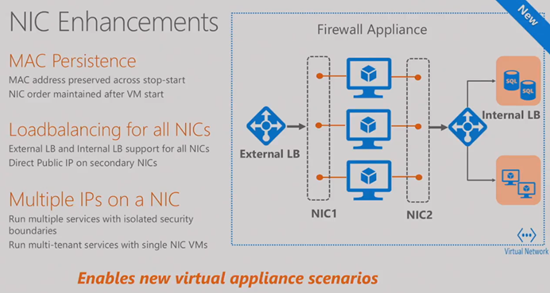

NIC Enhancements

These improvements didn’t get mentioned in any posts I read or announcements I heard. MAC addresses were not persistent. They have been for a few months now. Also, VM ordering in a VM is retained after VM start (important for NVAs) – there was a bug were the NICs weren’t in persistent order.

New virtual appliance scenarios are supported by adding functionality to additional NICs in a VM:

- Load balancing

- Direct public IP assignment

- Multiple IPs on a single NIC

A marketing-heavy video is played to discuss how Hyatt Hotels are using Azure networking. I think that the jist of the story is that Hyatt went from a single data center in the USA, to having multiple PoPs around the world thanks to Azure networking (probably ExpressRoute). The speaker from Hyatt comes on stage.

Yousef is back on stage to talk about connecting to Azure. I was ready to skip this piece of the video but Yousef did present some interesting stuff. The first is using the Azure backbone to connect disparate offices. Each office connects over “the last mile” to Azure using secure VPN. Then Azure VNet-VNet VPNs provide the WAN. I’d never thought of this architecture – it’s actually pretty simple to set up with the new VPN UI in the Azure Portal. Azure provides low latency and high bandwidth connections – this is a very cheap way to network sites together with lots of speed and low latency.

Highly Available Connections to Azure

We can create more than 1 connection to Azure VPN gateways, solving a concern that people have over reliance on a single link/ISP.

Most people don’t know it, but the Azure gateway was an active/passive VM cluster behind the curtain. You can now run the gateway in an active/active configuration, giving you greater HA for your site-to-Azure connections. And additionally, you can aggregate the bandwidth of both VPN tunnels/links/ISPs.

If you are interested in the expensive ExpressRoute WAN option, then the PoP locations have increased to 35 around the world – more than any other cloud, with lots of partners offering WAN and connection relay options.

ExpressRoute has a new UltraPerfromance gateway option: 5x improvement over the 2 Gbps HighPerformance gateway– up to 10 Gbps through to VNets

The ExpressRoute gateway SLA is increased to 99.95%.

More insights into ExpressRoute are being added: troubleshooting, BGP/traffic/routing statistics, diagnostics, alerting, monitoring, etc.

There’s a stint by the Manulife speaker to talk about their usage of Azure, which I skipped.

Monitoring And Diagnostics

Customers want visibility into the virtual networks that they are using for production and mission critical applications/services. So Microsoft has given us this in Azure:

More stuff will appear in PoSH, log extractions (for 3rd parties), and in the Portal in the future. And the session moved on to a summary.