These are my notes from the recording of this Ignite 2017 session, BRK2293.

Speaker: Nishant Thacker, Technical Product Manager – Big Data

This Level-200 overview session is a tour of big data in Azure; it explains why the services were created and what their purpose is. It is a foundation for the rest of the related sessions at Ignite. Interestingly, only about 30% of the audience had done any big data work in the past – I fall into the other 70%.

What is Big Data?

- Definition: A term for data sets that are so large or complex that traditional data processing application software is inadequate to deal with them. Nishant stresses “complex”.

- Challenges: Capturing data (velocity), data storage, data analysis, search, sharing, visualization, querying, updating, and information privacy. So … there are a few challenges.

The Azure Data Landscape

This slide is referred to for quite a while:

Data Ingestion

He starts with the left-top corner:

The first problem we have is data ingestion into the cloud or any system. How do you manage that? Azure can manage ingestion of data.

Azure Data Factory is a scheduling, orchestration, and ingestion service. It allows us to create sophisticated data pipelines from the ingestion of the data through to processing, through to storing, through to making it available to end users to access. It does not have compute power of its own; it taps into other Azure services to deliver any required compute.

The Azure Import/Export service can help bring incremental data on board. You can also use it to bulk load into Azure. If you have terabytes of data to upload, your bandwidth might not be enough. Instead, you can securely courier the data on disk to an Azure region.
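To get a feel for why couriering disks can beat the network, here is a rough back-of-the-envelope sketch. The data sizes, the 100 Mbps link, and the 80% utilization factor are my own illustrative assumptions, not figures from the session.

```python
def transfer_days(size_tb: float, link_mbps: float, utilization: float = 0.8) -> float:
    """Estimate the days needed to upload size_tb terabytes over a link_mbps link.

    utilization is an assumed fraction of the link that the upload really gets.
    """
    size_bits = size_tb * 1e12 * 8                 # decimal terabytes -> bits
    effective_bps = link_mbps * 1e6 * utilization  # usable bits per second
    seconds = size_bits / effective_bps
    return seconds / 86_400                        # seconds -> days


for tb in (1, 10, 100):
    print(f"{tb:>4} TB over 100 Mbps: {transfer_days(tb, 100):6.1f} days")
```

On these assumptions, 1 TB takes a little over a day, while 100 TB takes well over 100 days, which is where shipping disks starts to look attractive.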

The Azure CLI is designed so that bulk uploads happen in parallel. The SDKs can be built into your code, so your application can generate the data in the cloud instead of uploading it to the cloud.
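The idea of parallel bulk uploads can be sketched with nothing but the Python standard library. This is not the Azure CLI or an Azure SDK call: `upload_one` is a hypothetical stand-in that you would swap for a real upload function, and the file names are made up.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed
from typing import Callable, Dict, Iterable


def upload_one(path: str) -> int:
    """Hypothetical stand-in for a real upload call; returns a fake byte count."""
    return len(path.encode("utf-8"))  # placeholder work so the sketch runs offline


def upload_in_parallel(
    paths: Iterable[str],
    uploader: Callable[[str], int] = upload_one,
    max_workers: int = 8,
) -> Dict[str, int]:
    """Run uploader over every path concurrently and collect per-file results."""
    results: Dict[str, int] = {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {pool.submit(uploader, p): p for p in paths}
        for future in as_completed(futures):
            # result() re-raises any exception thrown inside the uploader
            results[futures[future]] = future.result()
    return results


files = [f"logs/day-{n:02d}.csv" for n in range(1, 6)]
print(upload_in_parallel(files))
```

Threads suit this kind of work because uploads spend most of their time waiting on the network rather than using the CPU.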

Operational Database Services

- Azure SQL DB

- Azure Cosmos DB

The SQL database offers SQL Server, MySQL, and PostgreSQL.

Cosmos DB is the more interesting one – it’s NoSQL and offers global storage. It also supports 4 programming models: Mongo, Gremlin/Graph, SQL (DocumentDB), and Table. You have flexibility to bring in data in its native form, and data can be accessed in an operational environment. Cosmos DB also plugs into other parts of Azure, such as Azure Functions or Spark (HDInsight), which makes it more than just an operational database.

Analytical Data Warehouse

When you want to do reporting and dashboards from data in operational databases then you will need an analytical data warehouse that aggregates data from many sources.
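As a toy illustration of that aggregation step, the sketch below uses in-memory SQLite databases as stand-ins for two operational systems and for the warehouse. None of this is Azure SQL Data Warehouse syntax; the table names and figures are invented for the example.

```python
import sqlite3

# Two in-memory SQLite databases stand in for separate operational systems.
orders_db = sqlite3.connect(":memory:")
orders_db.execute("CREATE TABLE orders (region TEXT, amount REAL)")
orders_db.executemany("INSERT INTO orders VALUES (?, ?)",
                      [("EU", 120.0), ("EU", 80.0), ("US", 200.0)])

returns_db = sqlite3.connect(":memory:")
returns_db.execute("CREATE TABLE returns (region TEXT, amount REAL)")
returns_db.executemany("INSERT INTO returns VALUES (?, ?)", [("EU", 20.0)])

# A third database plays the warehouse: it holds one combined fact table.
warehouse = sqlite3.connect(":memory:")
warehouse.execute("CREATE TABLE sales_facts (region TEXT, kind TEXT, amount REAL)")
for kind, source, table in (("order", orders_db, "orders"),
                            ("return", returns_db, "returns")):
    rows = source.execute(f"SELECT region, amount FROM {table}").fetchall()
    warehouse.executemany("INSERT INTO sales_facts VALUES (?, ?, ?)",
                          [(region, kind, amount) for region, amount in rows])


def net_sales_by_region(conn: sqlite3.Connection) -> dict:
    """Reporting query: orders minus returns, per region."""
    query = """
        SELECT region,
               SUM(CASE WHEN kind = 'order' THEN amount ELSE -amount END)
        FROM sales_facts
        GROUP BY region
        ORDER BY region
    """
    return dict(conn.execute(query).fetchall())


print(net_sales_by_region(warehouse))
```

The point is only the shape: the reporting query runs against one combined store, not against each operational database in turn.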

Traits of Azure SQL Data Warehouse:

- Can grow, shrink, and pause in seconds – up to 1 Petabyte

- Full enterprise-class SQL Server – means you can migrate databases and bring your scripts with you. Independent scale of compute and storage in seconds.

- Seamless integration with Power BI, Azure Machine Learning, HDInsight, and Azure Data Factory

NoSQL Data

- Azure Blob storage

- Azure Data Lake Store

When your data doesn’t fit into the rows-and-columns structure of a traditional database, you need specialized big data storage – capacity, unstructured sorting/reading.

Unstructured Data Compute Engines

- Azure Data Lake Analytics

- Azure HDInsight (Spark / Hadoop): managed clusters of Hadoop and Spark with enterprise-level SLAs with lower TCO than on-premises deployment.

When you get data into big unstructured stores such as Blob or Data Lake, you need specialized compute engines for the complexity and volume of the data. This compute must be capable of scaling out because you cannot wait hours/days/months to analyse the data.

Ingest Streaming Data

- Azure IoT Hub

- Azure Event Hubs

- Kafka on Azure HDInsight

How do you ingest this real-time data as it is generated? You can tap into event generators (e.g. devices) and buffer up data for your processing engines.
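The buffering idea can be sketched in a few lines. This is a toy in-memory buffer, not the Event Hubs, IoT Hub, or Kafka API; the class name, method names, and the drop-oldest-when-full policy are all my own assumptions for illustration.

```python
from collections import deque
from typing import Deque, List


class EventBuffer:
    """Toy buffer sitting between event producers and a slower consumer."""

    def __init__(self, capacity: int = 1000) -> None:
        # Assumed policy: when the buffer is full, the oldest event is dropped.
        self._events: Deque[dict] = deque(maxlen=capacity)

    def publish(self, event: dict) -> None:
        """Called by a producer (e.g. a device) whenever it emits an event."""
        self._events.append(event)

    def read_batch(self, max_events: int) -> List[dict]:
        """Called by the processing engine; returns up to max_events, oldest first."""
        batch: List[dict] = []
        while self._events and len(batch) < max_events:
            batch.append(self._events.popleft())
        return batch


buffer = EventBuffer(capacity=3)
for reading in range(5):
    buffer.publish({"device": "sensor-1", "reading": reading})
print(buffer.read_batch(2))
```

The producers and the processing engine never talk to each other directly, so a burst of events does not overwhelm the consumer.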

Stream Processing Engines

- Azure Stream Analytics

- Storm and Spark streaming on Azure HDInsight

These systems allow you to process streaming data on the fly. You have a choice of “easy” or “open source extensibility” with either of these solutions.
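A typical on-the-fly operation is averaging readings over fixed time windows. The sketch below shows that tumbling-window idea in plain Python; it is not Stream Analytics, Storm, or Spark code, and the event shape and 60-second window are assumptions of mine.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

Event = Tuple[float, str, float]  # (timestamp in seconds, device id, reading)


def tumbling_window_average(
    events: Iterable[Event], window_seconds: int = 60
) -> Dict[Tuple[int, str], float]:
    """Average readings per device within fixed, non-overlapping time windows."""
    sums: Dict[Tuple[int, str], float] = defaultdict(float)
    counts: Dict[Tuple[int, str], int] = defaultdict(int)
    for timestamp, device, reading in events:
        # Every event belongs to exactly one window, identified by its start time.
        window_start = int(timestamp // window_seconds) * window_seconds
        key = (window_start, device)
        sums[key] += reading
        counts[key] += 1
    return {key: sums[key] / counts[key] for key in sums}


sample = [(0.0, "a", 10.0), (30.0, "a", 20.0), (61.0, "a", 40.0), (10.0, "b", 5.0)]
print(tumbling_window_average(sample))
```

This version works through a finite list and reports at the end; a real streaming engine would emit each window's result as soon as the window closes.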

Reporting and Modelling

- Azure Analysis Services

- Power BI

You have cleansed and curated the data, but what do you do with it? Now you want some insights from it. Reporting & modelling is the first level of these insights.

Advanced Analytics

- Azure Machine Learning

- ML Server (R)

The basics of reporting and modelling are not new. Now we are getting into advanced analytics. Using data from these AI systems we can predict outcomes or prescribe recommended actions.

Deep Learning

- Cognitive Services

- Bot Service

Taking advanced analytics to a further level by using these toolkits.

Tracking Data

- Azure Search

- Azure Data Catalog

When you have such a large data estate you need ways to track what you have, and to be able to search it.

The Azure Platform

- ExpressRoute

- Azure AD

- Network Security Groups

- Azure Key Management Service

- Operations Management Suite

- Azure Functions (serverless compute)

- Visual Studio

You need a platform that delivers enterprise capabilities in the best way possible and in a compliant manner.

Big Data Services

Nishant says that the darker shaded services are the ones usually being talked about when people talk about Big Data:

To understand what all these services are doing as a whole, and why Microsoft has gotten into Big Data, we have to step all the way back. There are 3 high-level trends that amount to a kind of industrial revolution, making data a commodity:

We are on the cusp of an era where every action produces data.

The Modern Data Estate

There are 2 principles:

- Data on-premises and

- Data in the cloud

Few organizations are just 1 or the other; most span both locations. Data warehouses aggregate operational databases. Data Lakes store the data used for AI, and will be used to answer the questions that we don’t even know of today.

We need three capabilities for this AI functionality:

- The ability to reason over this data from anywhere

- You have the flexibility to choose – MS (simplicity & ease of use), open source (wider choice), programming models, etc.

- Security & privacy, e.g. GDPR

Microsoft has offerings for both on-premises and in Azure, spanning MS code and open source, with AI built-in as a feature.

Evolution of the Data Warehouse

There are 3 core scenarios that use Big Data:

- Modern DW: Modernizing the old concept of a DW to consume data from lots of sources, including complexity (big data)

- Advanced Analytics: Make predictions from data using Deep Learning (AI)

- IoT: Get real time insights from data produced by devices

Implementing Big Data & Data Warehousing in Azure

Here is a traditional DW

Data from operational databases are fed into a single DW. Some analysis is done and information is reported/visualized for users.

SQL Server Integration Services, a part of Azure Data Factory, allows you to consume data from your multiple operational assets and aggregate them as a DW.

Azure Analysis Services allows you to build tabular models for your BI needs, and Power BI can be used to report and visualize those models.

If you have existing huge repositories of data that you want to bring into a DW then you can use:

- Azure CLI

- Azure Data Factory

- BCP Command Line Utility

- SQL Server Integration Services

This traditional model breaks when some of your data is unstructured. For example:

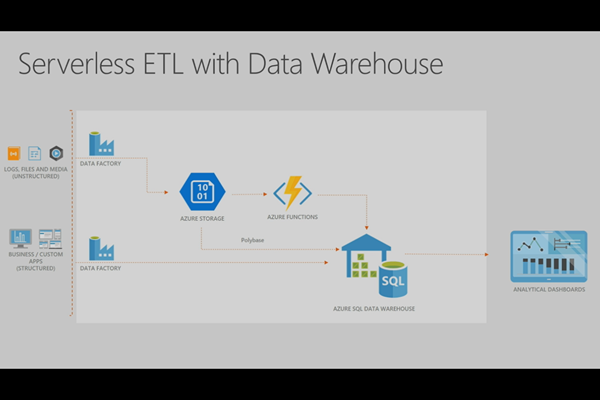

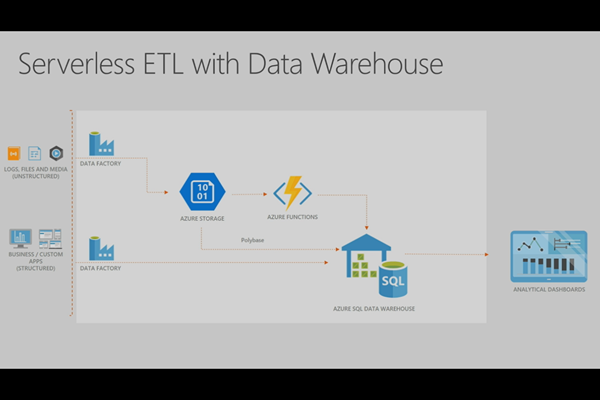

Structured operational data is coming in from Azure SQL DB as before.

Log files and media files are coming into blob storage as unstructured data – the structure of queries is unknown and the capacity is enormous. That unstructured data breaks your old system but you still need to ingest it because you know that there are insights in it.

Today, you might only know some questions that you’d like to ask of the unstructured data. But later on, you might have more queries that you’d like to create. The vast economy of scale of Azure storage makes this feasible.

ExpressRoute will be used to ingest data from an enterprise if:

Back to the previous unstructured data scenario. If you are curating the data so it is filtered/clean/useful, then you can use Polybase to ingest it into the DW. Normally, that task of cleaning/filtering/curating is too huge for you to do on the fly.

HDInsight can tap into the unstructured blob storage to clean/curate/process it before it is ingested into the DW.

What does HDInsight allow you to do? You forget that the data was structured/unstructured/semi-structured. You forget the complexity of the analytical queries that you want to write. You forget the kinds of questions you would like to ask of the data. HDInsight allows you to add structure to the data using some of its tools. Once the data is structured, you can import it into the DW using Polybase.
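To make "adding structure" concrete, here is a small single-machine sketch that turns raw log lines into rows and then into CSV. It is not HDInsight or Polybase code: the log layout, field names, and sample lines are assumptions of mine, and a real cluster would do the same kind of work at a vastly larger scale.

```python
import csv
import io
import re
from typing import Dict, Iterable, List

# Assumed log layout for illustration: "<ISO timestamp> <LEVEL> <free text>"
LOG_PATTERN = re.compile(r"^(?P<timestamp>\S+)\s+(?P<level>[A-Z]+)\s+(?P<message>.*)$")


def structure_logs(lines: Iterable[str]) -> List[Dict[str, str]]:
    """Turn raw log lines into rows; lines that do not match are skipped."""
    rows: List[Dict[str, str]] = []
    for line in lines:
        match = LOG_PATTERN.match(line.strip())
        if match:
            rows.append(match.groupdict())
    return rows


def to_csv(rows: List[Dict[str, str]]) -> str:
    """Serialise the rows as CSV, a shape that a warehouse loader can ingest."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["timestamp", "level", "message"])
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


raw = [
    "2017-09-25T10:00:01Z INFO user 42 viewed product 7",
    "corrupted ### line",
    "2017-09-25T10:00:05Z ERROR payment gateway timeout",
]
print(to_csv(structure_logs(raw)))
```

The malformed line is silently dropped here; in practice you would probably want to count or quarantine rejects so that you know how much data failed to parse.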

Another option is to use Azure Functions instead of HDInsight:

This serverless option can be suitable if the required manipulation of the unstructured data is very simple. It cannot be sophisticated – why re-invent the wheel of HDInsight?

Back to HDInsight:

Analytical dashboards can tap into some of the compute engines directly, e.g. tap into raw data to identify a trend or do ad-hoc analytics using queries/dashboards.

Facilitating Advanced Analytics

So you’ve got a modern DW that aggregates structured and unstructured data. You can write queries to look for information – but we want deeper insights.

The compute engines (HDInsight) enable you to use advanced analytics. Machine Learning can only be as good as the quality and quantity of data that you provide to it – preparing that data is the compute engine’s job. The more data machine learning has to learn from, the more accurate the analysis will be. If the data is clean, then garbage results won’t be produced. To do this with TBs or PBs of data, you will need the scale-out compute engine (HDInsight) – a VM just cannot do this.

Some organizations are so large or so specialized that they need even better engines to work with:

Azure Data Lake Store replaces blob storage for greater scale. Azure Data Lake Analytics replaces HDInsight and offers a developer-friendly T-SQL-like & C# environment. You can also write Python and R models. Azure Data Lake Analytics is serverless – there are no clusters as there are in HDInsight. You can focus on your service instead of being distracted by monitoring.

Note that HDInsight works with interactive queries against streaming data. Azure Data Lake is based on batch jobs.

You have the flexibility of choice for your big data compute engines:

Returning to the HDInsight scenario:

HDInsight, via Spark, can integrate with Cosmos DB. Data can be stored in Cosmos DB for users to consume. Also, data that users are generating and storing in Cosmos DB can be consumed by HDInsight for processing by advanced analytics, with learnings being stored back in Cosmos DB.

Demo

He opens an app on an iPhone. It’s a shoe sales app. The service is (in theory) using social media, fashion trends, weather, customer location, and more to make a prediction about what shoes the customer wants. Those shoes are presented to the customer, with the hope that this will simplify the shopping experience and lead to a sale on this app. When you pick a shoe style, the app predicts your favourite colour. If you view a shoe but don’t buy it, the app can automatically entice you with promotional offers. Stock levels can be queried to see what kind of promotion is suitable, e.g. try to shift less popular stock by giving you a discount to do an in-store pickup where stock levels are too high and it would cost the company money to ship stock back to the warehouse. The customer might also be tempted to buy some more stuff when in the shop.

He then switches to the dashboard that the marketing manager of the shoe sales company would use. There’s lots of data visualization from the Modern DW, combining structured and unstructured data – the latter can come from social media sentiment, geo locations, etc. This sentiment can be tied to product category sales/profits. Machine learning can use the data to recommend promotional campaigns. In this demo, choosing one of these campaigns triggers a workflow in Dynamics to launch the campaign.

Here’s the solution architecture:

There are 3 data sources:

- Unstructured data from monitoring the social and app environments – Azure Data Factory

- Structured data from CRM (I think) – Azure Data Factory

- Product & customer profile data from Cosmos DB (Service Fabric in front of it servicing the mobile apps).

HDInsight is consuming that data and applying machine learning using R Server. Data is being written back out to:

The DW consumes two data sources:

Azure Analysis Services then provides the ability to consume the information in the DW for the Marketing Manager.

Enabling Real-Time Processing

This is when we start getting in IoT data, e.g. sensors – another source of unstructured data that can come in big and fast. We need to capture the data, analyse it, derive insights, and potentially do machine learning analysis to take actions on those insights.

Event Hubs can ingest this data and forward it to HDInsight – stream analysis can be done using Spark Streaming or Storm. Data can be analysed by Machine Learning and reported in real-time to users.

So the IoT data is:

- Fed into HDInsight for structuring

- Fed into Machine Learning for live reporting

- Stored in Blob Storage

- Consumed by the DW using Polybase for BI

There are alternatives to this IoT design.

You should use Azure IoT Hub if you want:

If you have some custom operations to perform, Azure HDInsight (Kafka) can scale up from millions of events per second. It can apply some custom logic that cannot be done by Event Hub or IoT Hub.

We also have flexibility of choice when it comes to processing.

Azure Stream Analytics gives you ease-of-use versus HDInsight. Instead of monitoring the health & performance of compute clusters, you can use Stream Analytics.

The Azure Platform

The platform of Azure wraps this package up:

- ExpressRoute: Private SLA networking

- Azure Data Factory: Orchestration of the data processing, not just ingestion.

- Azure Key Vault: Securely storing secrets

- Operations Management Suite: Monitoring & alerting

And now that your mind is warped, I’ll leave it there. I thought it was an excellent overview session.