Note: This post was originally written using the Windows Server “8” (aka 2012) Beta. The PowerShell cmdlets have changed in the Release Candidate and this code has been corrected to suit it.

After the posts of the last few weeks, I thought I'd share a script that I am using to build converged fabric hosts in the lab. Some notes:

- You have installed Windows Server 2012 on the machine.

- You are either on the console or using something like iLO/DRAC to get KVM access.

- All NICs on the host will be used for the converged fabric. You can tweak this.

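- The virtual switch is created with -AllowManagementOS 0, so it will not create its own default virtual NIC in the management OS (parent partition or host OS). The script adds dedicated management OS virtual NICs instead.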

- You will make a different copy of the script for each host in the cluster to change the IPs.

- You could strip out all but the Host-Parent NIC to create a converged fabric for a standalone host with 2 or 4 * 1 GbE NICs.
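Instead of keeping a separate copy of the script per host, one copy could work out a host's addresses from a host number. Here is a minimal sketch. Get-HostIPPlan and the numbering scheme are hypothetical. The offsets simply reproduce the addresses the script below gives the first host. iSCSI1 steps by two so that a second iSCSI address per host would not collide with the next host.

```powershell
# Hypothetical helper: derive one host's management OS vNIC addresses from its
# host number (1, 2, 3, ...). Host 1 gets the addresses used in the script:
# 192.168.1.51, 172.16.1.1, 172.16.2.1 and 10.0.1.55.
function Get-HostIPPlan {
    param(
        [Parameter(Mandatory)]
        [ValidateRange(1, 100)]   # keeps every last octet within 1..254
        [int] $HostNumber
    )
    [ordered]@{
        'vEthernet (Host-Parent)'        = "192.168.1.$(50 + $HostNumber)"
        'vEthernet (Host-Cluster)'       = "172.16.1.$HostNumber"
        'vEthernet (Host-LiveMigration)' = "172.16.2.$HostNumber"
        # Step by two: leaves the next address free for a second iSCSI vNIC
        'vEthernet (Host-iSCSI1)'        = "10.0.1.$(53 + 2 * $HostNumber)"
    }
}
```

Each entry could then be passed to New-NetIPAddress as -InterfaceAlias (the key) and -IPAddress (the value) with -PrefixLength 24. The -DefaultGateway and DNS settings would stay on the Host-Parent NIC only, as in the script.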

And finally… MSFT has not published best practices yet, and this is still a pre-release build. Please verify that you are following best practices before you use this script.
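The script assumes that a NIC team called ConvergedNetTeam already exists, because that is the adapter New-VMSwitch binds to. To build that team from every physical NIC, as the notes describe, a minimal sketch might look like this. New-ConvergedNetTeam is a hypothetical helper. The SwitchIndependent teaming mode and HyperVPort load-balancing algorithm are assumptions, so check them against your physical switches.

```powershell
# Hypothetical helper: team NICs into "ConvergedNetTeam", the interface that
# New-VMSwitch binds to. Teaming mode and load balancing are assumptions.
function New-ConvergedNetTeam {
    param(
        [string]   $TeamName    = 'ConvergedNetTeam',
        # Defaults to every physical NIC on the host; pass a subset to leave some out
        [string[]] $MemberNames = (Get-NetAdapter -Physical).Name
    )
    $teamParams = @{
        Name                   = $TeamName
        TeamMembers            = $MemberNames
        TeamingMode            = 'SwitchIndependent'
        LoadBalancingAlgorithm = 'HyperVPort'
        Confirm                = $false   # don't prompt when run unattended
    }
    New-NetLbfoTeam @teamParams
}
```

You would run New-ConvergedNetTeam before the New-VMSwitch line. Passing -MemberNames with a list of adapter names is one way to "tweak" which NICs join the fabric.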

OK… here we go. Watch out for line breaks if you copy and paste:

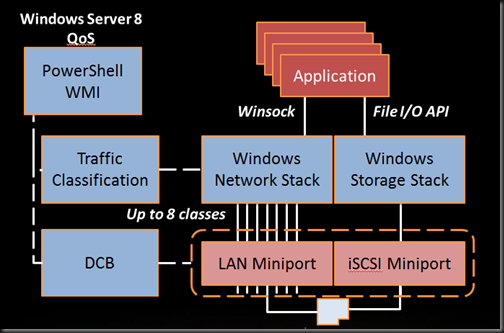

```powershell
# Bind a new switch to the existing team interface "ConvergedNetTeam" with
# weight-based minimum bandwidth QoS. -AllowManagementOS $false stops the switch
# creating its own default management OS vNIC; dedicated ones are added below.
Write-Host "Creating virtual switch with QoS enabled"
New-VMSwitch "ConvergedNetSwitch" -MinimumBandwidthMode Weight -NetAdapterName "ConvergedNetTeam" -AllowManagementOS $false

# Relative weight for traffic from vNICs that have no weight of their own
Write-Host "Setting default QoS policy"
Set-VMSwitch "ConvergedNetSwitch" -DefaultFlowMinimumBandwidthWeight 10

# One management OS vNIC per traffic type, each with its own relative QoS weight
Write-Host "Creating virtual NICs for the management OS"
Add-VMNetworkAdapter -ManagementOS -Name "Host-Parent" -SwitchName "ConvergedNetSwitch"
Set-VMNetworkAdapter -ManagementOS -Name "Host-Parent" -MinimumBandwidthWeight 10
Add-VMNetworkAdapter -ManagementOS -Name "Host-Cluster" -SwitchName "ConvergedNetSwitch"
Set-VMNetworkAdapter -ManagementOS -Name "Host-Cluster" -MinimumBandwidthWeight 10
Add-VMNetworkAdapter -ManagementOS -Name "Host-LiveMigration" -SwitchName "ConvergedNetSwitch"
Set-VMNetworkAdapter -ManagementOS -Name "Host-LiveMigration" -MinimumBandwidthWeight 10
Add-VMNetworkAdapter -ManagementOS -Name "Host-iSCSI1" -SwitchName "ConvergedNetSwitch"
Set-VMNetworkAdapter -ManagementOS -Name "Host-iSCSI1" -MinimumBandwidthWeight 10
#Add-VMNetworkAdapter -ManagementOS -Name "Host-iSCSI2" -SwitchName "ConvergedNetSwitch"
#Set-VMNetworkAdapter -ManagementOS -Name "Host-iSCSI2" -MinimumBandwidthWeight 15

# Give the new vNICs time to appear as "vEthernet (<name>)" interfaces
Write-Host "Waiting 30 seconds for virtual devices to initialise"
Start-Sleep -Seconds 30

# Static IPv4 addressing; change these addresses for each host in the cluster
Write-Host "Configuring IPv4 addresses for the management OS virtual NICs"
New-NetIPAddress -InterfaceAlias "vEthernet (Host-Parent)" -IPAddress 192.168.1.51 -PrefixLength 24 -DefaultGateway 192.168.1.1
Set-DnsClientServerAddress -InterfaceAlias "vEthernet (Host-Parent)" -ServerAddresses "192.168.1.40"
New-NetIPAddress -InterfaceAlias "vEthernet (Host-Cluster)" -IPAddress 172.16.1.1 -PrefixLength 24
New-NetIPAddress -InterfaceAlias "vEthernet (Host-LiveMigration)" -IPAddress 172.16.2.1 -PrefixLength 24
New-NetIPAddress -InterfaceAlias "vEthernet (Host-iSCSI1)" -IPAddress 10.0.1.55 -PrefixLength 24
#New-NetIPAddress -InterfaceAlias "vEthernet (Host-iSCSI2)" -IPAddress 10.0.1.56 -PrefixLength 24
```
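The script above repeats an Add-/Set-VMNetworkAdapter pair for each vNIC and then waits a fixed 30 seconds. An alternative is to drive the vNICs from a table and then poll until each one appears, rather than guessing a delay. Here is a minimal, untested sketch. New-HostVNic and Wait-HostVNic are hypothetical helpers, not built-in cmdlets. The sketch assumes that Get-NetAdapter reports each vNIC under the same "vEthernet (<name>)" alias that the New-NetIPAddress lines rely on.

```powershell
# vNIC names and relative QoS weights, mirroring the script above
$HostVNics = [ordered]@{
    'Host-Parent'        = 10
    'Host-Cluster'       = 10
    'Host-LiveMigration' = 10
    'Host-iSCSI1'        = 10
}

# Hypothetical helper: create each management OS vNIC and set its weight
function New-HostVNic {
    param(
        [Parameter(Mandatory)] [string] $SwitchName,
        [Parameter(Mandatory)] [System.Collections.IDictionary] $VNics
    )
    foreach ($name in $VNics.Keys) {
        Add-VMNetworkAdapter -ManagementOS -Name $name -SwitchName $SwitchName
        Set-VMNetworkAdapter -ManagementOS -Name $name -MinimumBandwidthWeight $VNics[$name]
    }
}

# Hypothetical helper: poll until every vNIC shows up as a "vEthernet (<name>)"
# adapter, giving up once the overall timeout has passed
function Wait-HostVNic {
    param(
        [Parameter(Mandatory)] [string[]] $Name,
        [int] $TimeoutSeconds = 60
    )
    $deadline = (Get-Date).AddSeconds($TimeoutSeconds)
    foreach ($n in $Name) {
        $alias = "vEthernet ($n)"
        while (-not (Get-NetAdapter -Name $alias -ErrorAction SilentlyContinue)) {
            if ((Get-Date) -gt $deadline) { throw "Timed out waiting for '$alias'" }
            Start-Sleep -Seconds 2
        }
    }
}
```

In the script, New-HostVNic -SwitchName "ConvergedNetSwitch" -VNics $HostVNics would replace the Add-/Set-VMNetworkAdapter lines. Wait-HostVNic -Name @($HostVNics.Keys) would replace the Start-Sleep line.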

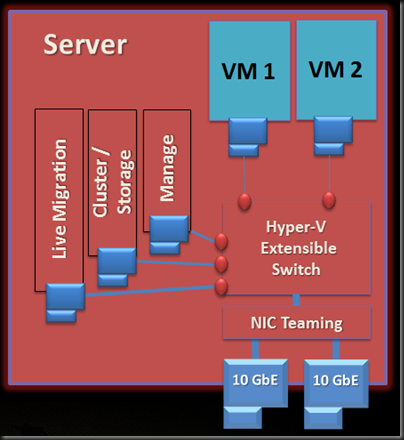

That will set up the following architecture:

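The intended QoS minimums are as follows. Note that -MinimumBandwidthWeight and -DefaultFlowMinimumBandwidthWeight take relative weights, not percentages. The weights only equal percentages when they add up to 100 across the switch, so adjust the values in the script to get the split you want:

- The default flow (traffic from virtual NICs without a weight of their own): 10% minimum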

- Parent: 10%

- Cluster: 10%

- Live Migration: 20%
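Because the weights are relative, it can help to turn a set of weights into the percentages they actually guarantee. The sketch below assumes a simplified rule: each flow's guaranteed minimum is its weight divided by the total of all weights on the switch, with the default flow counted once. Get-MinimumBandwidthShare is a hypothetical helper.

```powershell
# Simplified model (an assumption): guaranteed minimum % = weight / total weight.
function Get-MinimumBandwidthShare {
    param([Parameter(Mandatory)] [System.Collections.IDictionary] $Weights)
    $total = ($Weights.Values | Measure-Object -Sum).Sum
    $shares = [ordered]@{}
    foreach ($name in $Weights.Keys) {
        # Percentage of the team's bandwidth guaranteed to this flow under contention
        $shares[$name] = [math]::Round(100 * $Weights[$name] / $total, 1)
    }
    $shares
}

# The weights exactly as the script sets them (iSCSI2 is commented out there)
$scriptedWeights = [ordered]@{
    'Default flow'       = 10
    'Host-Parent'        = 10
    'Host-Cluster'       = 10
    'Host-LiveMigration' = 10
    'Host-iSCSI1'        = 10
}
$scriptedShares = Get-MinimumBandwidthShare -Weights $scriptedWeights
$scriptedShares
```

With the weights exactly as the script sets them, each of the five flows works out to a 20% minimum under this model, not the split listed above. That is why the weights need adjusting to match the split you want.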

My lab has a single VLAN network. In production, you should use VLANs and trunk the physical switch ports. Then (I believe) you'll need to add a line for each virtual NIC in the management OS (host) to place it in the right VLAN. I've not tested this line yet on the RC release of WS2012. Watch out for the -VMNetworkAdapterName parameter: it takes the virtual NIC's name (Host-Parent), not its "vEthernet (Host-Parent)" interface alias. The trunking happens on the physical switch ports, while the host vNIC itself is set to access mode with its VLAN ID:

```powershell
Set-VMNetworkAdapterVlan -ManagementOS -VMNetworkAdapterName "Host-Parent" -Access -VlanId 101
```
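Since every management OS vNIC needs the same treatment, the VLAN line can be driven from a table. Here is a minimal sketch. Only VLAN 101 for Host-Parent comes from the text above; the other VLAN IDs are placeholders for your own network design. Set-HostVNicVlan is a hypothetical helper.

```powershell
# Hypothetical VLAN plan: 101 comes from the text; 102-104 are placeholders
$HostVNicVlans = [ordered]@{
    'Host-Parent'        = 101
    'Host-Cluster'       = 102
    'Host-LiveMigration' = 103
    'Host-iSCSI1'        = 104
}

# Hypothetical helper: put each management OS vNIC in access mode on its VLAN
function Set-HostVNicVlan {
    param([Parameter(Mandatory)] [System.Collections.IDictionary] $VlanMap)
    foreach ($name in $VlanMap.Keys) {
        # -VMNetworkAdapterName takes the vNIC name, not the "vEthernet (...)" alias
        Set-VMNetworkAdapterVlan -ManagementOS -VMNetworkAdapterName $name -Access -VlanId $VlanMap[$name]
    }
}
```

After running Set-HostVNicVlan -VlanMap $HostVNicVlans, Get-VMNetworkAdapterVlan -ManagementOS lists the resulting VLAN settings so you can check them.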

Now you have all the cluster connections you need, with NIC teaming, using maybe 2 * 10 GbE, 4 * 1 GbE, or maybe even 4 * 10 GbE if you’re lucky.