Many people in Europe take July off for vacation, so they will have missed an unusually busy few weeks of announcements from Microsoft. This post summarises the infrastructure announcements from Microsoft Azure during July 2023.

Update: 01/09/2023. I’m not sure how this happened but I missed a bunch of interesting items from the second half of July. I guess that I got distracted while putting this list together (there’s a lot of task hopping during the day job) and thought that I’d completed the list. I have added some items today.

Networking

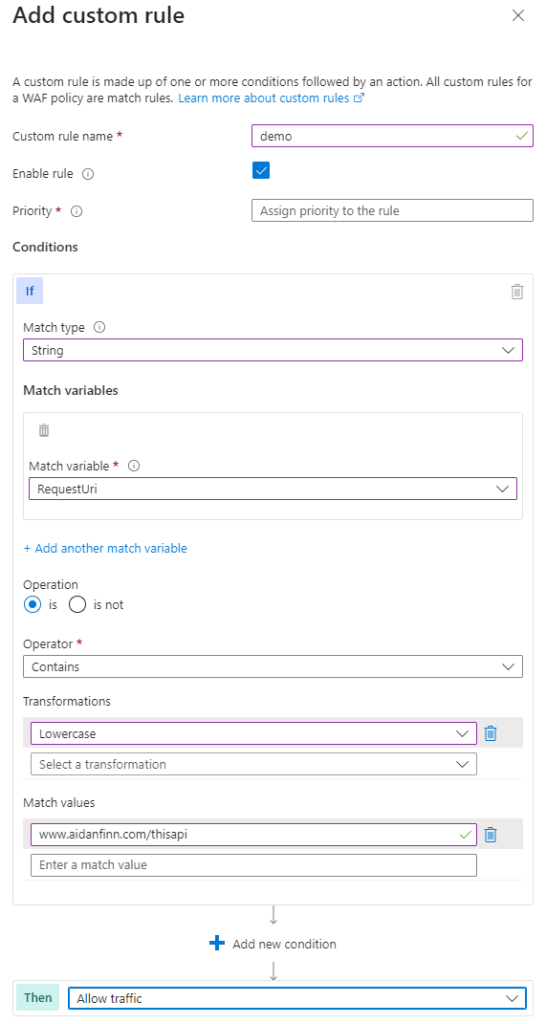

DRS 2.1 is baselined off the Open Web Application Security Project (OWASP) Core Rule Set (CRS) 3.3.2 and extended to include additional proprietary protection rules developed by Microsoft.

All improvements to the CRS have claimed to reduce false positives, but I never saw that in reality. I’m going to be skeptical about this one – a simple rules-based system will still detect the same false positives that I continue to see daily.

With Virtual Network encryption, customers can enable encryption of traffic between Virtual Machines and Virtual Machines Scale Sets within the same virtual network and between regionally and globally peered virtual networks.

This will be useful in limited scenarios going forward for customers. Too many networking features are limited to VMs. Legacy systems that are migrated to Azure or niche solutions that are best on VMs are fewer in number every day – customers that are already in the cloud normally choose PaaS first.

ExpressRoute private peering now supports the use of custom Border Gateway Protocol (BGP) communities with virtual networks connected to your ExpressRoute circuits. Once you configure a custom BGP community for your virtual network, you can view the regional and custom community values on outbound traffic sent over ExpressRoute when originating from that virtual network.

This one could be useful for customers where they have multiple ExpressRoute circuits with 1:M or N:M site:gateway scenarios.

Always Serve for Azure Traffic Manager (ATM) is now generally available. You can disable endpoint health checks from an ATM profile and always serve traffic to that given endpoint. You can also now choose to use 3rd party health check tools to determine endpoint health, and ATM native health checks can be disabled, allowing flexible health check setups.

Not much to say here 🙂

When branch-to-branch is enabled and Route Server learns multiple routes across site-to-site (S2S) VPN, ExpressRoute, and SD-WAN NVAs, for the same on-premises destination route prefix, users can now configure connection preferences to influence Route Server route selection.

Azure Route Server is a great resource. It’s so simple to configure. I just wish there were native solutions where you could program routes into it when using only native Azure networking resources. Using BGP instead of UDRs in a hub & spoke would be so much more reliable and agile.
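To make the connection preference idea more concrete, here is a toy model in Python of how a preference setting could pick between routes learned for the same prefix. This is only a sketch under my own assumptions: the `Route` class, the `select_route` function, the preference labels and the tie-breaking on AS path length are all hypothetical names and rules chosen for illustration, not the actual Route Server algorithm or API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Route:
    """A learned route for an on-premises prefix (hypothetical model)."""
    prefix: str
    source: str       # illustrative labels: "ExpressRoute", "VPN" or "NVA"
    as_path_len: int  # number of AS hops advertised with the route


def select_route(routes, preference="ExpressRoute"):
    """Pick one route for a prefix based on a connection preference.

    Assumed rules (not taken from Azure documentation):
    - "ASPath": the shortest AS path wins, whatever the source.
    - "ExpressRoute" or "VPN": routes from that source win if any exist;
      otherwise fall back to the shortest AS path among all routes.
    """
    if not routes:
        raise ValueError("no routes to choose from")
    if preference == "ASPath":
        return min(routes, key=lambda r: r.as_path_len)
    preferred = [r for r in routes if r.source == preference]
    candidates = preferred or routes
    return min(candidates, key=lambda r: r.as_path_len)


# Three routes to the same on-premises prefix, learned from different sources.
routes = [
    Route("10.0.0.0/16", "VPN", 3),
    Route("10.0.0.0/16", "ExpressRoute", 4),
    Route("10.0.0.0/16", "NVA", 2),
]

for pref in ("ExpressRoute", "VPN", "ASPath"):
    print(pref, "->", select_route(routes, pref).source)
```

The point of the sketch is that the same set of learned routes can produce a different next hop depending on one setting, which is what makes the feature interesting in mixed ExpressRoute, VPN and SD-WAN designs.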

With cross-region Load Balancer, you can distribute traffic across multiple Azure regions with ultra-low latency and high performance.

This smells like one of those Azure resource types that was developed for other Azure or Microsoft cloud services (like telephony) and they released it to the public too.

We have updated the default TLS configuration for new deployments of the Application Gateway to Predefined AppGwSslPolicy20220101 policy to improve the default security. This recently introduced, generally available, predefined policy ensures better security with minimum TLS version 1.2 (up to TLS v1.3) and stronger cipher suites.

Those of you using older deployments or modular code for new deployments should consult your application owners and start a planning process to upgrade.
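As part of that planning, application owners may want to confirm that their clients can cope with a minimum of TLS 1.2. The following is a minimal client-side sketch using Python's standard `ssl` module; the function names are my own, and the host passed to `negotiated_tls_version` would be whatever endpoint sits behind your gateway. It does not configure the Application Gateway itself.

```python
import socket
import ssl


def make_tls12_plus_context() -> ssl.SSLContext:
    """Build a client context that refuses anything older than TLS 1.2."""
    ctx = ssl.create_default_context()
    # Mirror the minimum version described for the new default policy.
    # TLS 1.3 is still negotiated when both sides support it.
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx


def negotiated_tls_version(host: str, port: int = 443, timeout: float = 5.0) -> str:
    """Connect to host:port and return the negotiated protocol name."""
    ctx = make_tls12_plus_context()
    with socket.create_connection((host, port), timeout=timeout) as sock:
        with ctx.wrap_socket(sock, server_hostname=host) as tls:
            return tls.version()
```

Calling `negotiated_tls_version` against a test endpoint either returns the protocol name that was agreed or raises an `ssl.SSLError` if the two sides cannot agree on TLS 1.2 or later, which is a quick way to spot a client or server that will need attention.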

Cloud NGFW by Palo Alto Networks is the first ISV next-generation firewall service natively integrated in Azure. Developed through a collaboration between Microsoft and Palo Alto Networks, this service delivers the cutting-edge security features of Palo Alto Network’s NGFW technology while also offering the simplicity and convenience of cloud-native scaling and management.

If you really must stay with on-prem tech 😀

Azure Kubernetes Service

Application Gateway for Containers is the next evolution of Application Gateway + Application Gateway Ingress Controller (AGIC), providing application (layer 7) load balancing and dynamic traffic management capabilities for workloads running in a Kubernetes cluster.

It sounds good, but AKS folks that I respect seem to prefer NGINX. That said, I know SFA about K8s.

The new network observability add-on for AKS, now in public preview, provides complete observability into the network health and connectivity of your AKS cluster.

I’m surprised that something like this wasn’t already available. My current project might not include AKS, but monitoring network performance and health between services was critical. Doing the same between micro-services seems more important to me.

BYOK support provides you the option to use your own customer managed keys (CMK) to encrypt your ephemeral OS Disks, providing you increased control over your encryption keys.

This sounds like one of those “a really big customer wanted it” features and it won’t be of interest to too many others.

Azure Virtual Desktop

Personal Desktop Autoscale is Azure Virtual Desktop’s native scaling solution that automatically starts session host virtual machines according to schedule or using Start VM on Connect and then deallocates session host virtual machines based on the user session state (log off/disconnect).

This could be a real money saver for a very expensive solution – personal desktops in the cloud.

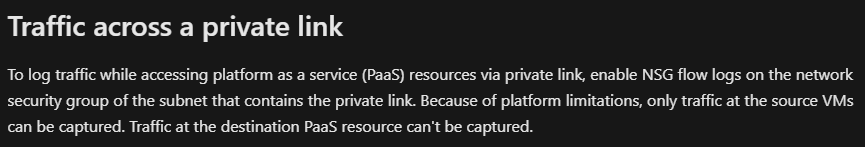

Private Link for Azure Virtual Desktop is now generally available! With this feature, users can securely access their session hosts and workspaces using a private endpoint within their virtual network. Private Link enhances the security of your data by ensuring it stays within a trusted and secure private network environment.

I have encountered a customer scenario where the connection had to go over a “leased line”. Even if “Windows Virtual Desktop” had been ready at the time, the use of a public endpoint would have forced us to use Citrix instead. The use of a Private Endpoint forces the client to connect over a private network.

We are announcing the general availability for Watermarking support on Azure Virtual Desktop, an optional protection feature to Screen Capture that acts as a deterrent for data leakage.

A QR code is watermarked onto the screen. The QR code can be scanned to obtain the connection ID of the session. Then admins can trace that session through Log Analytics. There are limitations.

Virtual Machines

Azure confidential VMs (CVMs) offer VM memory encryption with integrity protection, which strengthens guest protections to deny the hypervisor and other host management components code access to the VM memory and state.

This might sound like overkill to most of us, but I have encountered one virtual desktop scenario where the nature of the data and the legal requirements might mandate the use of this technology.

With Azure Dedicated Host’s new ‘resize’ feature, you can easily move your existing dedicated host to a new Azure Dedicated Host SKU (e.g., from Dsv3-Type1 to Dsv3-Type4). This new ‘resize’ feature minimizes the impact and effort involved in configuring VMs when you want to upgrade your underlying dedicated host system.

For you Hyper-V folks out there: yes, Live Migration will be used to keep the VMs running for all but a second or two (just like vMotion).

Dev Box combines developer-optimized capabilities with the enterprise-ready management of Windows 365 and Microsoft Intune.

Think of this as the cousin of Windows 365 which is aimed at developers. For me, this has two use cases:

- Supplying pay-as-you-go virtual machines to contract developers instead of purchasing hardware or trusting their hardware.

- Providing a full development experience that is in a secured network and can be trusted to connect to Azure services.

Hotpatch is now available for Windows Server Azure Edition VMs with Desktop Experience installation mode using the newly released image.

Hmm, did someone say that Server Core is not widely popular? It’s about time.

The new additions to the B family consist of 3 new VM series – Bsv2, Basv2, and Bpsv2, each based on the Intel® Xeon® Platinum 8370C, AMD EPYC™ 7763v, and Ampere® Altra® Arm-based processors respectively. These new burstable v2-series virtual machines offer up to 15% better price-performance, up to 5X higher network bandwidth with accelerated networking, and 10X higher remote storage throughput when compared to the original B series.

This is easily the most popular series of VMs for any customer that I have gone near. It makes sense that new hardware is being introduced to enable continued growth.

Azure Boost is a new system that offloads virtualization processes traditionally performed by the hypervisor and host OS onto purpose-built hardware and software … customers participating in the preview to achieve a 200 Gbps networking throughput and a leading remote storage throughput up to 10 GBps and 400K IOPS, enabling the fastest storage workloads available today.

Back when I was a Hyper-V MVP, this was the sort of feature that would have caught my attention and led to a bunch of really detailed blog posts. If you follow the links you can read:

“Azure Boost VMs in preview can achieve up to 200 Gbps networking throughput, marking a significant improvement with a doubling in performance over other existing Azure VMs … industry leading remote storage throughput and IOPS performance of 10 GBps and 400K IOPS with our memory optimized E112ibsv5 VM using NVMe-enabled Premium SSD v2 or Ultra Disk options.”

It doesn’t appear to be just the extreme spec VMs that get improved:

“Offloading storage data plane operations from the CPU to dedicated hardware results in accelerated and consistent storage performance, as customers are already experiencing on Ev5 and Dv5 VMs. This also enhances existing storage capabilities such as disk caching for Azure Premium SSDs.”

“Azure Boost’s isolated architecture inherently improves security by running storage and networking processes separately on Azure Boost’s purpose-built hardware instead of running on the host server.” This might only be a Linux feature based on Security Enhanced Linux (SELinux).

I wish that Ben Armstrong was still doing tech presentations for Microsoft. He did an amazing job at sharing how things worked in Hyper-V (what Azure is built upon).

The deadline to migrate your IaaS VMs from Azure Service Manager to Azure Resource Manager is now September 6*, 2023. To avoid service disruption, we recommend that you complete your migration as soon as possible. We will not provide any additional extensions after September 6, 2023.

There won’t be too many pre-ARM virtual machines out there. But those that are out there are probably old and mostly untouched in years. It’s already late to get planning … so get planning!

Azure Migrate

A few notes:

- Components in financial estimates through the “TCO/Business case” feature to allow you to analyze cost more comprehensively before moving to the cloud.

- Tanium’s (a partner) real-time operational data can be used by Azure Migrate for assessments and to generate a business case to move to Azure.

- Azure Migrate will now support in-place upgrade of end-of-support (EOS) Windows Server 2012 and later operating systems (OS) during the move to Azure.

I have never been able to use Azure Migrate in 4+ years of migrating customers to Azure, for various reasons, so I cannot comment on the above.