I thought I’d write a post on how traffic connects and flows in a Windows Server 2012 R2 implementation of Hyper-V with the storage being Hyper-V over SMB 3.0 on a WS2012 R2 Scale-Out File Server (SOFS). There are a number of pieces involved. Understanding what is going on will help you in your design, implementation, and potential troubleshooting.

The Architecture

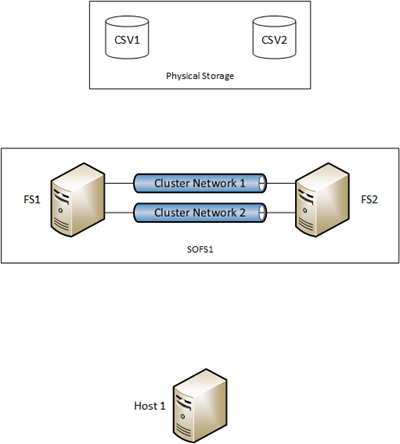

I’ve illustrated a high-level implementation in the diagram above. Mirrored Storage Spaces are being used as the back-end storage. Two LUNs are created on this storage. A cluster is built from FS1 and FS2, and connected to the shared storage and the 2 LUNs. Each LUN is added to the cluster and converted to a Cluster Shared Volume (CSV). Thanks to a new feature in WS2012 R2, CSV ownership (the CSV coordinator role, which is created and managed automatically) is load balanced across FS1 and FS2. Let’s assume, for simplicity, that CSV1 is owned by FS1 and CSV2 is owned by FS2.

The File Server for Application Data role (SOFS) is added to the cluster and named SOFS1. A share called CSV1-Share is added to CSV1, and a share called CSV2-Share is added to CSV2.

Any number of Hyper-V hosts/clusters can be permitted to use both or either share. For simplicity, I have illustrated just Host1.

Name Resolution

Host1 wants to start up a VM called VM1. The metadata of VM1 says that it is stored on \\SOFS1\CSV1-Share. Host1 will do a DNS lookup for SOFS1 when it performs an initial connection. This query will return all of the IP addresses of the nodes FS1 and FS2.
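To make the lookup step concrete, here is a minimal Python sketch of gathering every IPv4 address registered behind one name. It is only an illustration and not what the Windows SMB client runs. The function name `resolve_all_ipv4` and the injectable `resolver` parameter are my own assumptions, and port 445 is used simply because it is the SMB port.

```python
import socket

def resolve_all_ipv4(name, resolver=socket.getaddrinfo):
    """Return every distinct IPv4 address that the name resolves to."""
    # getaddrinfo returns (family, type, proto, canonname, sockaddr) tuples;
    # for AF_INET the sockaddr is an (ip, port) pair.
    infos = resolver(name, 445, socket.AF_INET, socket.SOCK_STREAM)
    addresses = []
    for _family, _type, _proto, _canon, sockaddr in infos:
        ip = sockaddr[0]
        if ip not in addresses:  # keep first-seen order, drop duplicates
            addresses.append(ip)
    return addresses
```

Calling `resolve_all_ipv4("SOFS1")` on a host that can resolve the SOFS name should list one address per client-enabled NIC on FS1 and FS2.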

Tip: Make sure that the storage/cluster networks of the SOFS nodes are enabled for client connectivity in Failover Cluster Manager. You’ll know that this is done because the NICs’ IP addresses will be registered in DNS with additional A records for the SOFS CAP/name.

Typically in this scenario, Host1 will have been given 4-6 addresses for the SOFS role. It will perform a kind of client-based round robin, randomly picking one of the IP addresses for the initial connection. If that fails, another one will be picked. This process continues until a connection is made or the process times out.
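The pick-and-retry behaviour can be sketched in a few lines of Python. This is a simplified model under my own assumptions: `connect_to_any` and `try_connect` are hypothetical names, the random order is produced by shuffling the list once, and the timeout is left out.

```python
import random

def connect_to_any(addresses, try_connect, rng=None):
    """Try the addresses in random order; return the first that connects, else None."""
    rng = rng or random.Random()
    candidates = list(addresses)
    rng.shuffle(candidates)  # random order stands in for the client's random pick
    for ip in candidates:
        if try_connect(ip):  # try_connect is a caller-supplied callable (hypothetical)
            return ip
    return None  # every address failed

# Example: only the addresses on FS2 answer, so the client lands on FS2.
fs1 = ["10.0.1.11", "10.0.2.11"]
fs2 = ["10.0.1.12", "10.0.2.12"]
chosen = connect_to_any(fs1 + fs2, lambda ip: ip in fs2)
```

The point of the model is that the client has no idea which node owns the CSV at this stage, so landing on a non-owner is perfectly normal.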

Now SMB 3.0 kicks in. The SMB client (host) and the SMB server (SOFS node) will negotiate capabilities such as SMB Multichannel and SMB Direct.

Tip: Configure SMB Multichannel Constraints to control which networks will be used for storage connectivity.

Initial Connection

There are two scenarios now. Host1 wants to use CSV1-Share so the best possible path is to connect to FS1, the owner of the CSV that the share is stored on. However, the random name resolution process could connect Host1 to FS2.

Let’s assume that Host1 connects to FS2. They negotiate SMB Direct and SMB Multichannel and Host1 connects to the storage of the VM and starts to work. The data flow will be as illustrated below.

Mirrored Storage Spaces offer the best performance. Parity Storage Spaces should not be used for Hyper-V. Repeat: Parity Storage Spaces SHOULD NOT BE USED for Hyper-V. However, Mirrored Storage Spaces in a cluster, such as a SOFS, are in permanent redirected IO mode.

What does this mean? Host1 has connected to SOFS1 to access CSV1-Share via FS2. CSV1-Share is on CSV1. CSV1 is owned by FS1. This means that Host1 will connect to FS2, and FS2 will redirect the IO destined to CSV1 (where the share lives) via FS1 (the owner of CSV1).

Don’t worry; this is just the initial connection to the share. This redirected IO will be dealt with in the next step. And it won’t happen again to Host1 for this share once the next step is done.

Note: if Host1 had randomly connected to FS1 then we would have direct IO and nothing more would need to be done.
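The two cases can be modelled as a tiny Python function that returns the hops an IO takes. This is a toy model of the description in this post, not of the CSV internals, and `io_path` is a name I made up for the sketch.

```python
def io_path(host, connected_node, csv, csv_owner):
    """List the hops an IO takes from the host to the CSV."""
    path = [host, connected_node]
    if connected_node != csv_owner:
        # Redirected IO: the non-owner forwards the IO to the owning node
        # across the cluster network.
        path.append(csv_owner)
    path.append(csv)
    return path

redirected = io_path("Host1", "FS2", "CSV1", csv_owner="FS1")
direct = io_path("Host1", "FS1", "CSV1", csv_owner="FS1")
```

Here `redirected` is `['Host1', 'FS2', 'FS1', 'CSV1']` and `direct` is `['Host1', 'FS1', 'CSV1']`. The extra FS2 to FS1 hop is the traffic that crosses the networks between the SOFS nodes.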

You can see why the cluster networks between the SOFS nodes need to be at least as fast as the storage networks that connect the hosts to the SOFS nodes. In reality, we’re probably using the same networks, converged to perform both roles, making the most of the investment in 10 GbE or faster, and possibly RDMA.

SMB Client Redirection

There is another WS2012 R2 feature that works alongside CSV balancing. The SMB server, running on each SOFS node, will redirect SMB client (Host1) connections to the owner of the CSV being accessed. This is only done if the SMB client has connected to a non-owner of a CSV.

After a few moments, the SMB server on FS2 will instruct Host1 that for all traffic to CSV1, Host1 should connect to FS1. Host1 seamlessly redirects and now the traffic will be direct, ending the redirected IO mode.
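As a rough sketch, the redirection decision boils down to a lookup: find the CSV behind the share, find its owner, and move the client if it is connected somewhere else. The Python below models only that decision. `redirect_target`, `SHARE_TO_CSV`, and `CSV_OWNER` are hypothetical names, and the real mechanism lives inside the SMB server and client, not in anything you would script.

```python
def redirect_target(connected_node, share, share_to_csv, csv_owner):
    """Return the node the client should move to, or None if it is already on the owner."""
    owner = csv_owner[share_to_csv[share]]
    return None if connected_node == owner else owner

# The layout used in this post.
SHARE_TO_CSV = {"CSV1-Share": "CSV1", "CSV2-Share": "CSV2"}
CSV_OWNER = {"CSV1": "FS1", "CSV2": "FS2"}

# Host1's connections are tracked per share; it starts on the non-owner FS2.
connections = {"CSV1-Share": "FS2"}
target = redirect_target(connections["CSV1-Share"], "CSV1-Share", SHARE_TO_CSV, CSV_OWNER)
if target is not None:
    connections["CSV1-Share"] = target  # Host1 now talks to FS1 directly
```

Because the connection is tracked per share, Host1 can end up talking to different SOFS nodes for different shares.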

TIP: Have 1 CSV per node in the SOFS.

What About CSV2-Share?

What if Host1 wants to start up VM2 stored on \\SOFS1\CSV2-Share? This share is stored on CSV2 and that CSV is owned by FS2. Host1 will again connect to the SOFS for this share, and will be redirected to FS2 for all traffic related to that share if the initial connection landed on FS1. Now Host1 is talking to FS1 for CSV1-Share and to FS2 for CSV2-Share.

TIP: Balance the placement of VMs across your CSVs in the SOFS. VMM should be doing this for you anyway if you use it. This will roughly balance connectivity across your SOFS nodes.
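If you are placing VMs by hand, the idea is simply to put each new VM on the CSV that currently holds the fewest. Here is a minimal Python sketch of that count-based approach. It is my own illustration and not how VMM does placement, and `place_vms` is a hypothetical name. It also ignores VM size and IO load, which matter in real life.

```python
def place_vms(vm_names, csv_names):
    """Assign each VM to the CSV that currently holds the fewest VMs."""
    counts = {csv: 0 for csv in csv_names}
    placement = {}
    for vm in vm_names:
        # min() returns the first CSV with the lowest count, so ties go to
        # the CSV listed first.
        target = min(csv_names, key=lambda csv: counts[csv])
        placement[vm] = target
        counts[target] += 1
    return placement

placement = place_vms(["VM1", "VM2", "VM3", "VM4"], ["CSV1", "CSV2"])
```

With one CSV per SOFS node, an even spread of VMs across the CSVs gives a roughly even spread of host connections across the nodes.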

And that is how SOFS, SMB 3.0, CSV balancing, and SMB redirection give you the best performance with clustered mirrored Storage Spaces.

Why do you recommend to never use parity spaces for Hyper-V? Is it due to overhead in spreading the data? We are looking to implement a SOFS/SAN with a 60 drive shelf (RAIDInc) and I am finding your blog very helpful. Thanks for writing. There is little else out there to reference other than Jose Barreto.

Parity spaces are only supported by Microsoft for archive workloads. If you have VMs on parity spaces and they misbehave, MSFT will tell you “tough titty”, but maybe with a more polite choice of words.

Question on the Cluster Network: Is it worth having a dedicated cluster (heartbeat) network (with no client communication enabled) over a 1G link? Is it even needed? We are using two 10G RDMA networks for our storage traffic, so of course those have cluster and client communication enabled. Are those two 10G networks sufficient (plus a 1G management network), or should I have that cluster ‘heartbeat’ network as well? Your recommendation that the cluster networks be as fast as the storage networks definitely makes sense after reading this post. I would hate for the redirected IO to happen over the 1G cluster network instead of the 10G RDMA storage network. Thank you!

The concept of a cluster comms network went out with the arrival of WS2012. Clustering can use any networks; you just need a minimum of two for best practice.

Brilliant, thank you! That definitely simplifies the cluster’s network.

Aidan, I’d like to hear your thoughts on how to scale this SMB3/SOFS solution. We run multiple Hyper-V clusters (with different types of workloads per cluster), all on top of a Hitachi VSP G1000. Since moving to 2012 R2, we’ve adopted the “new Microsoft way” with SMB3 via SOFS. This move has now started to raise some questions (we’re still fairly early in the move to SMB3).

1. How many VMs can we “safely” host on a single CSV (behind the SOFS share)? – I know it will ultimately depend on the I/O, but let’s say even 100 VMs/LUN wouldn’t be enough to saturate the IO capacity of a single LUN. The limit, I guess, would be the queue depth. We wouldn’t host the technical limit on a single LUN (I believe it’s around 2048 VMs/LUN that is technically possible), as that would surely yield some issues. But what would be a good number? 40? 50? 100? 200?

2. Should we run multiple CSVs behind a single SOFS share (possible?)

3. How many Hyper-V clusters should we allow on the same SOFS Cluster? 1? 2? 5? 10? 10+? – I know you’re gonna say: “it depends”. I need a rough rule of thumb 😉

4. Should we allow VMs from different Hyper-V Clusters on the same SOFS share? – If so, how many?

I’ve spent some time googling these questions, and it has become apparent to me that not many companies have adopted the Hyper-V/SMB3/SOFS solution yet, and thus it’s difficult finding people who have experience with whatever issues will inevitably arise.

Thanks for blogging about this stuff, it’s always very insightful and helpful 🙂

Hmmm, some of this will be opinions:

1) There is no guidance here. It does all come down to performance and risk versus greed 🙂 CSV itself does not have a limit because of how it orchestrates from the owner rather than the file system.

2) 1 share per CSV is best practice.

3) There is no guidance. The real vision is “stamps”. Each rack contains 1 SOFS cluster and 1 Hyper-V cluster, but there’s nothing to stop you going 1:N.

4) I wouldn’t do it. I don’t recommend treading on land that few others have walked … you never know when you’ll step on a landmine.

Thanks for the detailed explanation of IO redirection.

Is there any way we can track or look at which file server node the Hyper-V server connected to initially, and any way to know whether direct or redirected access happened?