Windows Server 2012 Hyper-V allows you to create guest clusters of up to 64 nodes. These need some kind of shared storage, which came in the form of:

- SMB 3.0 file shares

- iSCSI

- Fibre Channel

There are a few problems with this:

- It complicates the host architecture. Take a look at my converged network/fabric designs for iSCSI. Virtual Fibre Channel is amazing, but there’s plenty of work: virtual SANs, lots of WWNs to zone, and SAN vendor MPIO to install in the guest OS.

- It creates a tether between the VMs and the physical infrastructure that limits agility and flexibility.

- In the cloud, it makes self-service a near (if not total) impossibility.

- In a public cloud, the hoster is going to be unwilling to pierce the barrier between the infrastructure and untrusted tenants.

So in Windows Server 2012 R2 we get a new feature: Shared VHDX. You attach a VHDX to a SCSI controller in each of your VMs, edit the advanced settings of the VHDX, and enable sharing. This creates a persistent reservation passthrough to the VHDX, and the VMs now see this VHDX as a shared SAS disk. You can now build guest clusters with this shared VHDX as the cluster storage.

There are some requirements:

- Obviously, you have to cluster the VMs to use the storage.

- The hosts must be clustered. This is to get a special filter driver (svhdxflt.sys).

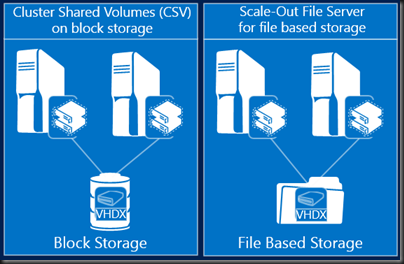

- The VHDX must be on shared storage, either CSV or SMB 3.0.

Yes, this rules out running a guest cluster with Shared VHDX on Windows 8.1 Client Hyper-V. However, you can do it on a single WS2012 R2 machine by creating a single-node host cluster. Note that this is a completely unsupported scenario and should be used for demo/evaluation labs only.
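The requirements above can be captured as a pre-flight checklist. This is a minimal Python sketch, assuming a hypothetical `SharedVhdxPlan` description of a deployment; the class, its fields, and `check_plan` are illustrative names, not part of any Hyper-V or failover clustering API. It only encodes the rules stated in this post: clustered guests, clustered hosts, CSV or SMB 3.0 storage, a virtual SCSI controller, and a single-node host cluster flagged as lab-only.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical description of a planned deployment. These fields are
# illustrative only; they do not correspond to any Hyper-V API.
@dataclass
class SharedVhdxPlan:
    guest_vms_clustered: bool  # will the VMs form a guest cluster?
    host_cluster_nodes: int    # number of host cluster nodes; 0 = hosts not clustered
    storage: str               # where the VHDX lives: "CSV", "SMB3", "local", ...
    controller: str            # virtual controller type: "SCSI" or "IDE"

SUPPORTED_STORAGE = {"CSV", "SMB3"}

def check_plan(plan: SharedVhdxPlan) -> List[str]:
    """Return a list of issues; an empty list means the plan meets the post's requirements."""
    issues = []
    if not plan.guest_vms_clustered:
        issues.append("the VMs must be clustered to use the shared disk")
    if plan.host_cluster_nodes < 1:
        issues.append("the hosts must be clustered to get the svhdxflt.sys filter driver")
    elif plan.host_cluster_nodes == 1:
        # Works for a lab, but is a completely unsupported scenario.
        issues.append("single-node host cluster: unsupported, demo/evaluation labs only")
    if plan.storage not in SUPPORTED_STORAGE:
        issues.append("the VHDX must be on shared storage: CSV or an SMB 3.0 share")
    if plan.controller != "SCSI":
        issues.append("the VHDX must be attached to a virtual SCSI controller")
    return issues
```

For example, `check_plan(SharedVhdxPlan(True, 2, "CSV", "SCSI"))` returns an empty list, while a single-node lab host cluster returns only the "unsupported" warning.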

A few more gotchas:

- You cannot do host-level backups of the guest cluster. This is the same as it always was. You will have to install backup agents in the guest cluster nodes and back them up as if they were physical machines.

- You cannot perform a hot-resize of the shared VHDX. But you can hot-add more shared VHDX files to the clustered VMs.

- You cannot Storage Live Migrate the shared VHDX file. You can move the other VM files and perform normal Live Migration.
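The gotchas can also be written down as a lookup table, which is handy when scripting change requests against guest clusters. A minimal sketch, assuming hypothetical operation labels (these are not cmdlet or API names); the supported/unsupported values and alternatives come straight from the list above and reflect WS2012 R2 as described in this post.

```python
from typing import Optional, Tuple

# Hypothetical operation labels mapped to (supported?, suggested alternative),
# based on the WS2012 R2 gotchas listed in the post.
SHARED_VHDX_OPERATIONS = {
    "host_level_backup": (False, "install backup agents in the guest cluster nodes"),
    "in_guest_backup": (True, None),
    "hot_resize": (False, "hot-add another shared VHDX to the clustered VMs"),
    "hot_add_shared_vhdx": (True, None),
    "storage_live_migration": (False, "move the other VM files and use normal Live Migration"),
    "live_migration": (True, None),
}

def check_operation(operation: str) -> Tuple[bool, Optional[str]]:
    """Return (supported, alternative) for a known operation label."""
    if operation not in SHARED_VHDX_OPERATIONS:
        raise KeyError(f"unknown operation: {operation}")
    return SHARED_VHDX_OPERATIONS[operation]
```

For example, `check_operation("hot_resize")` returns `(False, "hot-add another shared VHDX to the clustered VMs")`.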

Even with the gotchas, which I expect MSFT will sort out quickly, this is a superb feature. I would have loved Shared VHDX when I worked in the hosting business because it makes self-service and application HA a realistic possibility. And I will absolutely eat it up in my labs.

EDIT1:

I had a question from Steve Evans (MVP, ASP.NET/IIS) about using Shared VHDX: is Shared VHDX limited to a single cluster? On the physical storage side, direct-attached CSV is limited to a single cluster. However, SMB 3.0 file shares are on separate physical infrastructure and can be permissioned for more than one host or cluster. Therefore, in theory, a guest cluster could span more than one host cluster, with the Shared VHDX stored on a single SMB 3.0 file share (probably SOFS with all this HA flying about). Would this be supported? I checked with Jose Barreto: it’s a valid use case and should be supported. So now you have a solution that gives your application four layers of HA:

- Use highly available virtual machines

- Use more than one host cluster to host those HA VMs

- Create guest clusters from the HA VMs

- Store the shared VHDX on SOFS/SMB 3.0 file shares
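The reasoning in this edit comes down to one check: every host cluster that runs a guest cluster node must be able to reach the shared VHDX. A minimal Python sketch, assuming a hypothetical mapping of guest node names to host cluster names and a set of host clusters that can access the storage (all names here are illustrative): a CSV belongs to exactly one host cluster, while an SMB 3.0 share can be permissioned for several.

```python
from typing import Dict, Iterable

def storage_reachable(
    node_placement: Dict[str, str],
    storage: str,
    accessible_clusters: Iterable[str],
) -> bool:
    """Can every host cluster running a guest cluster node reach the shared VHDX?

    node_placement maps a (hypothetical) guest node name to its host cluster.
    accessible_clusters lists the host clusters that can access the storage.
    """
    clusters = set(node_placement.values())
    allowed = set(accessible_clusters)
    if not clusters:
        return False  # no guest nodes placed yet
    if storage == "CSV":
        # A CSV is only available within the one host cluster that owns it.
        if len(allowed) != 1:
            raise ValueError("a CSV is owned by exactly one host cluster")
    elif storage != "SMB3":
        # Anything else is not valid shared VHDX storage.
        return False
    # With SMB 3.0 (e.g. a SOFS), the share can be permissioned for several clusters.
    return clusters <= allowed
```

A guest cluster split across `HC1` and `HC2` fails the check on a CSV owned by `HC1`, but passes on an SMB 3.0 share permissioned for both host clusters.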

Hi Aidan

Shared VHDX is great stuff!

One question came to mind: would it be possible to replicate those shared VHDX files (and the guest cluster’s other virtual disks as well) with Hyper-V Replica, and in this way achieve DR?

Best

Goran

No.

Could you expand on “no”? Why is it not supported?

Because it does not work. Simple as.

I am seeing that with a shared VHDX file, some benefits of non-shared VHDX files also don’t work, such as SCSI retrim. My SAN is thin provisioned, but the shared VHDX file is taking up its entire file size (23TB) even though the guest OS file cluster has deduplication enabled and the used size should be closer to 9.5TB. Following the guidance for shrinking a regular VHDX file (Optimize-Volume -ReTrim and then reboot) doesn’t work with a shared VHDX file, even when rebooting both nodes.

Anyone else see anything similar?

Just found this blog again and thought I’d respond to my own post. I found the explanation on another blog: http://workinghardinit.wordpress.com/2014/05/19/hyper-v-unmap-does-work-with-san-snapshots-and-checkpoints-but-not-always-as-you-first-expect/

Not being able to dynamically expand a shared VHDX is not an insignificant limitation. Hot-adding a drive is useful, but if you are running out of space, that means spanning a volume (is that even possible, since you need to use basic disks?) or shuffling data around. The reason we have failover clusters is to avoid downtime when doing maintenance.

I’m testing SVHDX in my environment and I can’t get it working. We have set up a failover cluster and a CSV backed by a SAN over FC. I have put the SVHDX on the CSV and attached that disk to two VMs. Now the disk is inconsistent: when I save a few files on VM1, they are not visible on VM2, and CHKDSK is reporting errors. I think I’m missing something here. Did I get the point of SVHDX all wrong?

Are you using it as a clustered disk? That’s the point of shared VHDX.

We’ve built a few pre-production guest clusters based on shared VHDX, with large (1TB+) data sets. We’ve been testing backup/restore performance, and while I’m not 100% sure yet where the problem lies, we seem to be getting abysmally slow speeds when backing up the shared VHDXs compared with other VMs hosted on the same infrastructure, running the same build of 2012 R2 but with no shared VHDXs. Anyone seen anything similar? The performance penalty I was expecting was in the 10 to 20% region, not the 60 to 70% that seems to be the case.

Backing up VMs that include a shared VHDX from the host where the shared VHDX is used is not supported. If you’re using guest OS backup, then you have to consider how the shared disk is being backed up and how data is moving between the two virtual nodes.

Yep, it’s a “traditional” backup method, i.e. an agent in the guest. From what I’ve read elsewhere, it may be a problem with redirected I/O versus direct I/O coming into play when the guest cluster nodes are on different physical hosts: some sort of file-by-file brokering goes on between the nodes and has to cross an inter-node network connection. I’m still playing with it and running file copy tests. It’s hard to say for sure, but there does appear to be a serious drop in performance with shared VHDXs. I’ll probably log a support case. Thanks

You’re on the right track. I can’t remember the exact details offhand, but I think redirected IO might be in play here.

Just as an FYI: following a support case, the official line was that the feature is “fundamentally flawed and should not be used”. Hopefully 2016’s new shared disk format resolves this.

Hey Aidan, many thanks for the interesting article. I have a project where we need to decide whether to implement guest clustering using “classical” iSCSI (like your converged fabric design) or shared VHDXs. Network performance in the cluster networks shouldn’t be a problem, but I’m unsure whether Shared VHDXs are ready for use in production environments.

We’re using HP StoreVirtual on the storage side, so we don’t have to worry about not being able to use Hyper-V Replica with Shared VHDX.

What would you choose if you were building everything from scratch?

If you don’t have replication/backup concerns or limitations, then shared VHDX would be best long-term. Software-defined anything is the path forward because it is more flexible. Things will improve with future releases. It would also simplify your host/VM networking; iSCSI at the VM layer just complicates things.

Hi Aidan, I have a question. Is it supported to run a guest SQL Server 2012 failover cluster inside a Windows Server 2012 R2 Hyper-V cluster, with the shared VHDXs living on a CSV?

Thank you in advance

Yes.

I’m having an issue with a 2012 R2 Hyper-V cluster not expanding in DPM to allow VHD backups. This is a new cluster in an untrusted domain; the DPM agent is installed on both hosts and attached to the DPM server. Any ideas?

Sounds like a support call issue. Are backups working on the cluster?