Back in January I posted a possible design for implementing iSCSI connectivity for a host and virtual machines using converged networks.

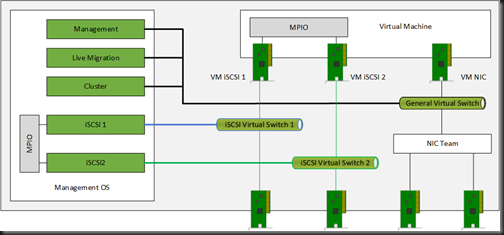

In that design (above), a pair of virtual NICs would be used for iSCSI, either in the VM or in the management OS of the host. MPIO would “team” the NICs. I was talking with fellow Hyper-V MVP Hans Vredevoort (@hvredevoort) about this scenario last week, and he brought up something that I should have considered.

Look at iSCSI1 and iSCSI2 in the Management OS. Both are virtual NICs, connecting to ports in the iSCSI virtual switch, just like any virtual NIC in a VM would. They pass into the virtual switch, then into the NIC team. As you should know by now, we’re going to be using a Hyper-V Port mode NIC team. That means all traffic from each virtual NIC passes in and out through a single team member (physical NIC in the team).

Here’s the problem: The allocation of virtual NIC to physical NIC for traffic flow is done by round robin. There is no way to say “Assign the virtual NIC iSCSI1 to physical NIC X”. That means that iSCSI1 and iSCSI2 could end up being on the same physical NIC in the team. That’s not a problem for network path failover, but it does not make the best use of available bandwidth.
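To make the collision concrete, here is a toy model of round-robin allocation. It is only an illustration of the behaviour described above, not a query against a real NIC team; the NIC names and the order in which ports connect are assumptions made for the example.

```powershell
# Toy model only: imitates round-robin allocation, does not touch a real team.
$teamMembers = 'pNIC1', 'pNIC2'      # hypothetical physical NICs in the team

# Hypothetical order in which virtual NICs connect to the virtual switch.
$virtualNics = 'iSCSI1', 'VM01-LAN', 'iSCSI2', 'VM02-LAN'

$assignment = [ordered]@{}
for ($i = 0; $i -lt $virtualNics.Count; $i++) {
    # Each newly connected port takes the next team member in turn.
    $assignment[$virtualNics[$i]] = $teamMembers[$i % $teamMembers.Count]
}

$assignment.GetEnumerator() | Format-Table Key, Value -AutoSize

if ($assignment['iSCSI1'] -eq $assignment['iSCSI2']) {
    "Both iSCSI vNICs share $($assignment['iSCSI1'])"
}
```

In this made-up ordering a VM's vNIC connects between the two iSCSI vNICs, so both iSCSI vNICs end up on the same team member and the second physical NIC carries none of the host's iSCSI traffic.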

Wouldn’t it be nice to guarantee that iSCSI NIC1 and iSCSI NIC2, both at host and VM layers, were communicating on different physical NICs? Yes it would, and here’s how I would do it:

The benefits of this design over the previous one are:

- You have total control over vNIC bindings.

- You can make much better use of available bandwidth (QoS is still used).

- You can (if required by the SAN vendor) guarantee that iSCSI1 and iSCSI2 are connecting to different physical switches.
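A minimal sketch of how this layout might be built, based on my reading of the benefits listed above: one external virtual switch per physical NIC, no team underneath, and one iSCSI vNIC per switch. The adapter names, switch names, VM name and bandwidth weights are all assumptions for illustration.

```powershell
# Sketch only: adapter names, switch names, VM name and weights are assumptions.
# One external virtual switch per physical NIC, with no NIC team underneath.
New-VMSwitch -Name 'iSCSI-Switch1' -NetAdapterName 'iSCSI-pNIC1' -AllowManagementOS $false -MinimumBandwidthMode Weight
New-VMSwitch -Name 'iSCSI-Switch2' -NetAdapterName 'iSCSI-pNIC2' -AllowManagementOS $false -MinimumBandwidthMode Weight

# One management OS vNIC per switch, so each iSCSI path is pinned to a known physical NIC.
Add-VMNetworkAdapter -ManagementOS -Name 'iSCSI1' -SwitchName 'iSCSI-Switch1'
Add-VMNetworkAdapter -ManagementOS -Name 'iSCSI2' -SwitchName 'iSCSI-Switch2'

# QoS is still used: give the host's iSCSI vNICs a minimum share of each switch.
Set-VMNetworkAdapter -ManagementOS -Name 'iSCSI1' -MinimumBandwidthWeight 40
Set-VMNetworkAdapter -ManagementOS -Name 'iSCSI2' -MinimumBandwidthWeight 40

# A VM that needs guest iSCSI gets one vNIC on each switch in the same way.
Add-VMNetworkAdapter -VMName 'VM01' -Name 'iSCSI1' -SwitchName 'iSCSI-Switch1'
Add-VMNetworkAdapter -VMName 'VM01' -Name 'iSCSI2' -SwitchName 'iSCSI-Switch2'
```

Because each switch is bound to exactly one physical NIC, cabling the two NICs to different physical switches gives each iSCSI vNIC its own path end to end.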

Don’t worry about the lack of a NIC team for failover of the iSCSI NICs at the physical layer. We don’t need it; we’re implementing MPIO in the guest OS of the virtual machines and in the management OS of the host.
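As a rough sketch of the MPIO side, the following could be run in the management OS of the host or inside a guest. It assumes the in-box Microsoft DSM is acceptable (many SAN vendors supply their own DSM and setup tool instead), that the SAN presents a single target, and the portal and initiator addresses are placeholders rather than values from this design.

```powershell
# Sketch only: all IP addresses are placeholders; a single iSCSI target is assumed.
# Install the Multipath I/O feature (a restart may be required).
Install-WindowsFeature -Name Multipath-IO

# Let the Microsoft DSM claim iSCSI-attached disks.
Enable-MSDSMAutomaticClaim -BusType iSCSI

# Register one SAN portal per path, each reached through a different iSCSI NIC.
New-IscsiTargetPortal -TargetPortalAddress '192.168.10.10' -InitiatorPortalAddress '192.168.10.66'
New-IscsiTargetPortal -TargetPortalAddress '192.168.11.10' -InitiatorPortalAddress '192.168.11.66'

# Log in once per path so that MPIO sees two independent sessions to the target.
$target = Get-IscsiTarget
Connect-IscsiTarget -NodeAddress $target.NodeAddress -IsMultipathEnabled $true -IsPersistent $true `
    -InitiatorPortalAddress '192.168.10.66' -TargetPortalAddress '192.168.10.10'
Connect-IscsiTarget -NodeAddress $target.NodeAddress -IsMultipathEnabled $true -IsPersistent $true `
    -InitiatorPortalAddress '192.168.11.66' -TargetPortalAddress '192.168.11.10'
```

If one physical NIC or switch fails, only the session on that path drops and MPIO continues on the other, which is why no NIC team is needed underneath.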

Confused? Got questions? You can learn about all this stuff by reading the networking chapter in Windows Server 2012 Hyper-V Installation And Configuration Guide.

This is the exact design we implemented on a recent Hyper-V project. It works great, and when we reviewed it with the storage vendor they were happy with it because we were maintaining a “fault domain” for each MPIO path.

Thanks Nick. I reckon the split domain feature for some MPIO implementations is the strength here. It’s completely controlled from SAN switch/controller, through to the host and VMs.

Aidan, so this is a supported/recommended scenario for Host+Guest iSCSI? I recall from the past (even your posts and some early WS2012 H-V designs) that sharing the same NICs for host and guest iSCSI is not recommended.

What about the performance penalty/CPU processing overhead of the vSwitch for host iSCSI (10GbE)? Is that negligible?

It should be. It’s a lot simpler than the other one, which was supported. This newer alternative doesn’t contravene anything either.

When we implemented this design we ran a whole boatload of throughput and redundancy tests. We tested network throughput from vNIC to vNIC on the iSCSI NICs using iPerf (which does a memory-to-memory transfer). Our throughput tests ran at 9.9 Gbps, which is the same speed we had on the physical NICs. Now, these particular servers were the latest and greatest blades from Dell, but it was still extremely impressive to get 9.9 Gbps.

Running like this from day one. Expect performance to be around 70% of physical…

This also has the advantage over the first design if you’re using multiple SANs from different vendors with different requirements, while maintaining maximum control.

Interesting. I’d love to have the h/w to test, but I suspect SMB 3.0 might easily beat iSCSI in this type of scenario.

It does, because of multichannel (let alone using RDMA, which I haven’t tested yet).

Hi Aidan, I recently deployed a new cluster using a converged network. The commands were as follows:

New-VMSwitch "ConvergedNetSwitch" -NetAdapterName "ConvergedNetTeam" -AllowManagementOS $false -MinimumBandwidthMode Weight

Set-VMSwitch "ConvergedNetSwitch" -DefaultFlowMinimumBandwidthWeight 50

Add-VMNetworkAdapter -ManagementOS -Name "Management" -SwitchName "ConvergedNetSwitch"

Set-VMNetworkAdapter -ManagementOS -Name "Management" -MinimumBandwidthWeight 10

Add-VMNetworkAdapter -ManagementOS -Name "LAN" -SwitchName "ConvergedNetSwitch"

Set-VMNetworkAdapter -ManagementOS -Name "LAN" -MinimumBandwidthWeight 10

Add-VMNetworkAdapter -ManagementOS -Name "Cluster" -SwitchName "ConvergedNetSwitch"

Set-VMNetworkAdapter -ManagementOS -Name "Cluster" -MinimumBandwidthWeight 10

Add-VMNetworkAdapter -ManagementOS -Name "Live Migration" -SwitchName "ConvergedNetSwitch"

Set-VMNetworkAdapter -ManagementOS -Name "Live Migration" -MinimumBandwidthWeight 40

Start-Sleep -Seconds 15

New-NetIPAddress -InterfaceAlias "vEthernet (Management)" -IPAddress 10.10.0.66 -PrefixLength 24 -DefaultGateway 10.10.0.1

Set-DnsClientServerAddress -InterfaceAlias "vEthernet (Management)" -ServerAddresses "10.10.0.47","10.10.0.53","192.168.2.51"

New-NetIPAddress -InterfaceAlias "vEthernet (LAN)" -IPAddress 10.10.1.66 -PrefixLength 24

New-NetIPAddress -InterfaceAlias "vEthernet (Cluster)" -IPAddress 192.168.4.66 -PrefixLength 24

New-NetIPAddress -InterfaceAlias "vEthernet (Live Migration)" -IPAddress 192.168.5.66 -PrefixLength 24

Set-VMNetworkAdapterVlan -ManagementOS -VMNetworkAdapterName "Management" -Access -VlanId 150

Set-VMNetworkAdapterVlan -ManagementOS -VMNetworkAdapterName "LAN" -Access -VlanId 100

Set-VMNetworkAdapterVlan -ManagementOS -VMNetworkAdapterName "Cluster" -Access -VlanId 400

Set-VMNetworkAdapterVlan -ManagementOS -VMNetworkAdapterName "Live Migration" -Access -VlanId 500

I haven’t created a special network for VMs. What is your suggestion regarding this? Is it required, and how come you don’t have it in most of your recent designs?

http://www.petri.co.il/hyper-v-host-networking-requirements.htm

http://www.petri.co.il/converged-networks-overview.htm

http://www.petri.co.il/converged-network-designs-hyper-v-hosts-example.htm

An additional question: do you recommend an additional virtual network for Hyper-V Replica, or should QoS (for the destination port or subnet) be enough?

You have a virtual switch, therefore you have a network for VMs. It’s up to you how you VLAN tag (assuming you use VLANs) the virtual NICs of those VMs.
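For example, a VM can be attached to the existing converged switch and tagged like this. It is only a sketch: the VM name and VLAN ID are placeholders, and the switch name is taken from the commands posted above.

```powershell
# Sketch only: 'VM01' and VLAN 200 are placeholder values.
# Connect the VM's vNIC to the existing converged virtual switch.
Connect-VMNetworkAdapter -VMName 'VM01' -SwitchName 'ConvergedNetSwitch'

# Tag the VM's traffic with the VLAN that the VM belongs to.
Set-VMNetworkAdapterVlan -VMName 'VM01' -Access -VlanId 200
```

The VM's traffic that has no weight of its own falls under the switch's default flow weight, which is what `-DefaultFlowMinimumBandwidthWeight 50` sets in the posted commands.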

Thank you, Aidan. I’m just curious why you don’t have a separate virtual NIC for VM traffic in your designs. Is it not a recommended deployment?

See converged networks/fabrics.