Ten days ago I highlighted a blog post by Microsoft’s Jose Barreto explaining that SMB Multichannel across multiple NICs in a clustered node requires both NICs to be in different subnets. That means:

- You have 2 NICs in each node in the Scale-Out File Server cluster

- Both NICs must be in different subnets

- You must enable both NICs for client access

- There will be 2 NICs in each of the hosts that are also on these subnets, probably dedicated to SMB 3.0 comms, depending on if/how you do converged fabrics
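
The subnet rule above is easy to sanity-check before you build the cluster. This is a minimal Python sketch, assuming you list each node’s client-facing NIC addresses in CIDR notation. The 192.168.x.x addresses are hypothetical lab values, not taken from this post:

```python
import ipaddress

def nics_in_distinct_subnets(interfaces: list[str]) -> bool:
    """Return True if every NIC address (CIDR notation) is on its own subnet."""
    networks = [ipaddress.ip_interface(addr).network for addr in interfaces]
    return len(set(networks)) == len(networks)

# Hypothetical addresses for one SOFS node with two client-facing NICs.
good_node = ["192.168.1.21/24", "192.168.2.21/24"]  # one NIC per subnet
bad_node = ["192.168.1.21/24", "192.168.1.22/24"]   # both NICs on one subnet

print(nics_in_distinct_subnets(good_node))  # True
print(nics_in_distinct_subnets(bad_node))   # False
```

The check compares network prefixes only. Overlapping ranges with different masks, such as a /16 and a /24, would slip through, so treat it as a quick sanity check rather than a full validation.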

You can figure out cabling and IP addressing for yourself – if not, you need to not be doing this work!

The question is, what else must you do? Well, SMB Multichannel doesn’t need any configuration to work. Pop the NICs into the Hyper-V hosts and away you go. On the SOFS cluster, there’s a little bit more work.

After you create the SOFS cluster, you need to make sure that client communication is enabled on both of the NICs, on subnet 1 and subnet 2 (as above). This allows the Hyper-V hosts to talk to the SOFS across both NICs (the green NICs in the diagram) in the SOFS cluster nodes. You can see this setting below. In my demo lab, my second subnet is not routed, and it wasn’t available to configure when I created the SOFS cluster.

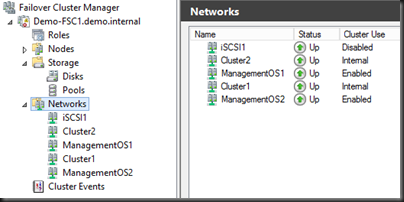

You’ll get a warning that you need to enable a Client Access Point (with an IP address) for the cluster to accept communications on this network. Damned if I’ve found a way to do that. I don’t think that additional step is necessary in the case of an SOFS, as you’ll see in a moment. I’ll try to confirm that with MSFT. Ignore the warning and continue. My cluster (which uses iSCSI because I don’t have a JBOD) looks like this:

You can see ManagementOS1 and ManagementOS2 (on different subnets) are Enabled, meaning that I’ve allowed clients to connect through both networks. ManagementOS1 has the default CAP (configured when the cluster was created).

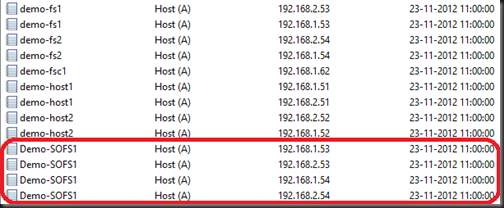
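
The check shown above, with both client-facing networks enabled for client access, can be expressed as a short Python sketch. It assumes you have transcribed each network’s Role value, for example from `Get-ClusterNetwork` output. Failover Clustering uses 0 for a network the cluster does not use, 1 for cluster-only, and 3 for cluster and client. The dictionary below is hypothetical and only borrows the network names from this lab:

```python
# Failover Clustering ClusterNetwork "Role" values:
# 0 = not used by the cluster, 1 = cluster only, 3 = cluster and client.
CLUSTER_AND_CLIENT = 3

def networks_without_client_access(roles: dict[str, int], required: list[str]) -> list[str]:
    """Return the required networks that are not enabled for client access."""
    return sorted(name for name in required if roles.get(name) != CLUSTER_AND_CLIENT)

# Hypothetical snapshot of role values, modeled on this lab's network names.
roles = {"ManagementOS1": 3, "ManagementOS2": 1, "iSCSI": 0}
print(networks_without_client_access(roles, ["ManagementOS1", "ManagementOS2"]))
# ['ManagementOS2']
```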

Next I created the file server for application data role (aka the SOFS). Over in AD we find a computer object for the SOFS, and we should see that 4 IP addresses have been registered in DNS. Note how the SOFS role uses the IP addresses of the SOFS cluster nodes (demo-fs1 and demo-fs2). You can also see the DNS records for my 2 hosts (on 2 subnets) here.

If you don’t see 2 IP addresses for each SOFS node registered with the SOFS name (as above: 2 addresses * 2 nodes = 4), then double-check that you have enabled client communications across both cluster networks for the NICs on the SOFS cluster nodes (as described earlier).
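
The “2 addresses * 2 nodes = 4” rule can be turned into a check that names the missing records. This is a minimal Python sketch. The `resolved` list stands in for whatever DNS returns for the SOFS name; in practice you might obtain it with `socket.gethostbyname_ex(name)[2]` or `nslookup`. The node addresses are hypothetical:

```python
import ipaddress

def missing_registrations(resolved: list[str], node_nics: dict[str, list[str]]) -> list[str]:
    """List client-access NIC addresses that DNS does not return for the SOFS name."""
    registered = {ipaddress.ip_address(a) for a in resolved}
    missing = []
    for node, nics in node_nics.items():
        for nic in nics:
            ip = ipaddress.ip_interface(nic).ip
            if ip not in registered:
                missing.append(f"{node} {ip}")
    return missing

# Hypothetical lab values: 2 nodes x 2 client-access NICs = 4 expected records.
node_nics = {
    "demo-fs1": ["192.168.1.21/24", "192.168.2.21/24"],
    "demo-fs2": ["192.168.1.22/24", "192.168.2.22/24"],
}
# Suppose DNS only returned the first-subnet addresses for the SOFS name.
resolved = ["192.168.1.21", "192.168.1.22"]
expected = sum(len(nics) for nics in node_nics.values())
print(expected, len(resolved))                    # 4 2
print(missing_registrations(resolved, node_nics))
# ['demo-fs1 192.168.2.21', 'demo-fs2 192.168.2.22']
```

If every missing address sits on the same subnet, that points to the cluster network for that subnet not being enabled for client access.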

Now we should be all ready to rock and roll.

In my newly modified demo lab, I run this with the hosts clustered (to show new cluster Live Migration features) and not clustered (to show Live Migration with SMB storage). The eagle-eyed will notice that my demo Hyper-V hosts don’t have dedicated NICs for SMB comms. In the real world, I’d probably have dedicated NICs for SMB 3.0 comms on the Hyper-V hosts. They’d be on the 2 subnets that have been referred to in this post.

I tried this setup with InfiniBand for RDMA and it does not work. Does the RDMA network need to be routable to my domain?

Have you configured DNS settings for the storage NICs?

Yes, I have, but MS tells me that my RDMA InfiniBand interfaces do not have to be routable to my domain.

And they would be right. Have a search for the step-by-step instructions for an InfiniBand SOFS by Jose Barreto. Mellanox didn’t see fit to give me gear for my lab, so I cannot advise on InfiniBand.

However, for a clustered SOFS the RDMA adapters need to be on separate IP segments, and each RDMA adapter needs to be able to route to the other and be registered in DNS.

No routing required AFAIK. And you only need to put in the DNS config on the storage NICs to force a registration (which goes via the management NICs).

BTW, Jose Barreto’s blog only shows a single node SOFS, not a cluster. I used this as a reference for my design.

I am having a similar issue.

Each of my SOFS nodes has a 1 Gb management NIC: the default CAP and the main link to AD/DNS.

Each of my SOFS nodes and Hyper-V hosts also has a dual-port Mellanox RDMA NIC for SMB communication. I set up a separate subnet on each of these RDMA NICs for SMB Multichannel. These subnets are non-routable to the rest of the network (AD and DNS), as I only want my Hyper-V hosts to talk to this separate storage network. I set up a CAP on the RDMA NICs. I assumed the servers would just work with SMB Multichannel. My performance is terrible.

Do all CAPs /RDMA nics need to register in DNS to function properly?

Yes. They don’t need network connectivity to the DNS server; the servers will register the rNICs via the routed NICs.

I have the same problem, and same setup.

Exactly how will they register the rNICs in DNS? How do you configure the routing?

I get great performance if I use \\fileserver\share, since the host will communicate over the rNICs, but if I try \\FileClusterName\Share it will query DNS and use the mgmt NIC.

Simply configure DNS on the rNICs. The registration goes via the management NIC.

Thanks! I could have sworn I read somewhere not to enable DNS registration on those rNICs. It works perfectly now. Do you recommend using ExcludeNetwork to remove the MGMT NICs from DNS? If not, what happens when a host hits the MGMT NIC during round robin?

No prob! SMB Multichannel will select the rNICs because RDMA trumps non-RDMA NICs 🙂 Do enable SMB Multichannel Constraints on all nodes (SOFS and hosts) to restrict it to the rNICs to enforce this.
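
The selection behaviour described above can be illustrated with a deliberately simplified Python model. It assumes the preference order Microsoft has described for SMB Multichannel: RDMA-capable NICs first, then RSS-capable NICs, then faster links. The real SMB client logic is more involved. The `constraint` argument is a hypothetical stand-in for an SMB Multichannel constraint that limits which interfaces may be used, and the NIC names are made up:

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Nic:
    name: str
    rdma: bool
    rss: bool
    speed_gbps: float

def multichannel_candidates(nics: list[Nic], constraint: Optional[set[str]] = None) -> list[str]:
    """Simplified model: keep only the NICs in the most preferred capability tier.

    Assumed preference order: RDMA, then RSS, then link speed.
    `constraint` mimics an SMB Multichannel constraint (allowed NIC names).
    """
    if constraint is not None:
        nics = [n for n in nics if n.name in constraint]
    if not nics:
        return []

    def rank(n: Nic) -> tuple:
        # Tuples compare left to right: RDMA beats RSS, which beats speed.
        return (n.rdma, n.rss, n.speed_gbps)

    best = max(rank(n) for n in nics)
    return [n.name for n in nics if rank(n) == best]

nics = [Nic("Mgmt", False, True, 1), Nic("rNIC1", True, True, 40), Nic("rNIC2", True, True, 40)]
print(multichannel_candidates(nics))            # ['rNIC1', 'rNIC2']
print(multichannel_candidates(nics, {"Mgmt"}))  # ['Mgmt']
```

The second call shows why a constraint should list the rNICs rather than the management NIC: a constraint overrides the capability preference.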

Okay, I just added the DNS server on the rNICs and now they’re registered in DNS.

However the problem persists:

\\FileClusterName\Share still uses the mgmt NIC.

Have you tested when SMB Multichannel has kicked in? And then read this: https://www.petri.com/controlling-smb-multichannel

On both Chelsio rNICs I have configured an IP address, Subnet and DNS. I have disabled “register in DNS” and NetBIOS over TCP/IP on the rNICs but still they do not register in DNS. The rNICs have the same DNS settings as the MGMT teamed NIC. I also have the rNICs set for client communications. I have the MGMT teamed NIC at the top of the binding order followed by the two rNICs. I bounced the SOFS role more times than I can count. Any thoughts?

You “disabled register in DNS” … that’s your issue.

You mentioned for real world use you would rather have dedicated SMB 3 NICs on your Hyper-V cluster nodes. With redundant switches and converged networking, what value is added if bandwidth wasn’t an issue?

Is it more of a safety net should the vSwitch have an issue?

Two reasons: RSS and RDMA cannot be used in virtual NICs in the management OS.

How about using the good old “hosts” file on the Hyper-V hosts, so they access the SOFS over the non-routable SMB VLAN while DNS keeps pointing (as the setup dictates) to the servers’ routable management VLAN?

This way, when you try to remote to the sofs1 name from the management network you get the active SOFS cluster member, yet the Hyper-V hosts will resolve to the SMB network.

But maybe round robin will be broken this way?

So perhaps do the opposite, and use a hosts file on my management station so I access the correct role holder?

Use DNS as prescribed. Those of you who venture out into unknown country end up being eaten by wolves.

Hi Finn, I have a 2-node SOFS cluster with a teamed NIC (without RDMA). Do I have to bind 3 networks to this NIC?

-smb1 ( smb1, live mig.)

-smb2 (smb2, CSV)

-mgmt (management)

regards

Mario

What speed and how many NICs do you have on the NIC team? How many NICs do the SOFS nodes have? The design I recommend is here: https://aidanfinn2.wpcomstaging.com/?p=14879

HI Aidan,

This article worked a treat for me. I disabled cluster communications on my out-of-band 1 Gb NIC. That left me with my fabric management NIC team and my two storage NICs.

I am using 10 Gb Mellanox ConnectX-3 for my management team and 40 Gb Mellanox ConnectX-3 Pro for the two storage NICs. These, however, are Ethernet-based, not InfiniBand, so I believe I will be using RoCE. From what I understand, that will require some configuration at both the switch and the Windows Server 2012 R2 level, rather than being plug and play.

Any advice, articles or blogs from people configuring RoCE with MLX Nics for SMB3.0 networking?

Regards

Buddy.

Learn about DCB (Data Center Bridging). It’s strongly recommended for iWARP, but essential for RoCE and InfiniBand. DCB relies on some PoSH configuration in hosts and SMB 3.0 servers, and on the switch fabric. Didier Van Hoye has done the most research and writing on this topic (https://blog.workinghardinit.work/wp-content/uploads/2015/06/SMBDirectSecretDecoderRingE2EVC2015.pdf) and can be seen presenting around Europe with his “secret decoder ring” session (recordings can be found online).
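
Because DCB spans both the hosts and the switch fabric, a common failure is the two ends disagreeing. The minimal Python sketch below flags a few such mismatches. The dictionary layout is a hypothetical schema, not an export format from any tool. Priority 3 for SMB Direct traffic is a common convention and is assumed here rather than taken from this thread:

```python
def dcb_problems(host: dict, switch: dict, smb_priority: int = 3) -> list[str]:
    """Flag common DCB mismatches for SMB Direct (hypothetical config schema)."""
    problems = []
    if smb_priority not in host["pfc_priorities"]:
        problems.append(f"host: PFC not enabled on SMB priority {smb_priority}")
    if set(host["pfc_priorities"]) != set(switch["pfc_priorities"]):
        problems.append("host and switch disagree on PFC-enabled priorities")
    total = sum(host["ets_percent"].values())
    if total > 100:
        problems.append(f"host: ETS bandwidth reservations total {total}%")
    return problems

# Hypothetical settings: the switch also runs PFC on priority 4, the host does not.
host = {"pfc_priorities": [3], "ets_percent": {"SMB": 50, "Default": 50}}
switch = {"pfc_priorities": [3, 4]}
print(dcb_problems(host, switch))
# ['host and switch disagree on PFC-enabled priorities']
```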

That is really helpful, Aidan; I have been reading up on some of his articles. Useful. Is a file share witness required for the SOFS to be active-active? I followed a YouTube video of yours for the deployment. So far, so good. I think!

As far as benchmarking goes, do you have or know of a blog that has recorded its networking and disk performance? I can then compare and share back! I am deploying a 3-node Hyper-V cluster, 3 SOFS nodes, 3 SuperMicro JBODs, HGST SSDs and 7200 RPM enterprise SAS disks, all hooked up on Mellanox 10/40 Gb switching. It has been a steep learning curve for sure, but I am fully embracing this solution!

As always, thanks Aidan.

Buddy.

Hi Buddy,

Performance varies so much depending on a person’s choice of devices, right down to disk firmware, that you cannot compare one deployment with another. What I will tell you is that SuperMicro JBODs come up again and again in bad conversations about Storage Spaces. Make sure all firmware (disks up) is updated. Make sure you set up MPIO in LB mode.

Your SOFS cluster will require a witness – use a “1 GB” virtual disk from the storage pool as a disk witness. Do not use a FSW for the SOFS. You can use a file share witness for the Hyper-V cluster – use a non-continuously available share on the SOFS.
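
The need for a witness comes down to vote arithmetic. This simplified Python model assumes one vote per node, one vote for the witness, and a witness that stays online. It deliberately ignores dynamic quorum and dynamic witness, which Windows Server 2012 R2 uses to adjust votes as nodes fail:

```python
def quorum(nodes: int, witness: bool) -> tuple[int, int, int]:
    """Static vote math: (total votes, votes needed, node failures tolerated).

    Assumes one vote per node, one for the witness, and that the witness
    stays online; dynamic quorum/dynamic witness are not modeled.
    """
    votes = nodes + (1 if witness else 0)
    majority = votes // 2 + 1
    return votes, majority, votes - majority

print(quorum(2, witness=False))  # (2, 2, 0)
print(quorum(2, witness=True))   # (3, 2, 1)
print(quorum(3, witness=True))   # (4, 3, 1)
```

For a 2-node SOFS, the witness is what lets the cluster survive the loss of one node in this static model.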

Aidan.

Hi Aidan,

For some reason I knew that the SuperMicro comment would come up! I pushed for DataON! Maybe the version 1.0 90-bay SuperMicro JBODs they made for us will be an improvement?! I should put a wager on it in the office! Thanks for the witness verification; that’s how it’s deployed, so that makes me happy.

I get what you mean about benchmarking; I just really don’t know what to expect for read/write speeds and IOPS in general. I haven’t used MPIO before now, and I have just enabled the feature and allowed SAS discovery. Do you have an article you can point me to for further configuration?

Aidan, thank you for your time with replying.

Buddy.

There’s nothing more you need to do with MPIO; just add SAS (in the MPIO console), reboot, and set the policy to LB.
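
Assuming “LB” here refers to the Microsoft DSM’s Least Blocks policy, the idea is that each I/O goes down the path with the fewest data blocks currently outstanding. The toy Python model below only illustrates that selection rule. The real DSM does this inside the storage stack, and the path names are hypothetical:

```python
class LeastBlocksSelector:
    """Toy model of a least-blocks MPIO policy: send each I/O down the path
    with the fewest blocks currently outstanding (ties go to the first path)."""

    def __init__(self, paths: list[str]):
        self.outstanding = {path: 0 for path in paths}

    def submit(self, blocks: int) -> str:
        path = min(self.outstanding, key=self.outstanding.get)
        self.outstanding[path] += blocks
        return path

    def complete(self, path: str, blocks: int) -> None:
        self.outstanding[path] -= blocks

sel = LeastBlocksSelector(["SAS path A", "SAS path B"])
print(sel.submit(8))  # SAS path A (both idle; first path wins the tie)
print(sel.submit(2))  # SAS path B (0 < 8)
print(sel.submit(4))  # SAS path B (2 < 8)
sel.complete("SAS path A", 8)
print(sel.submit(1))  # SAS path A (0 < 6)
```

Unlike plain round robin, a large I/O on one path steers the following smaller I/Os to the other path.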

What to expect on performance is very complicated. It’s a tradeoff: a SAN is predictable because the manufacturer builds it end to end. You are building the SOFS in most cases. Why I like DataON: they recommend disks and can supply them, so their performance is predictable. I heard from a follower on Twitter of a SuperMicro customer with Toshiba SSDs. The SSDs limit write performance, so write benchmarking was turning up lower-than-expected results. And in my experience (and that of many others), SanDisk is a complete nightmare in Storage Spaces (performance, maintenance, life, etc).

Hi Aidan,

Never mind, I found the set-MSDSM PowerShell to set the LB policy! I think that’s all that is needed. Cheers. I have been working with Mellanox to ensure my networking is up to par at line level and, from a PFC point of view, with tagging of SMB traffic. That seems OK; I get great throughput results with RDMA. Less with TCP, obviously.

As far as testing the performance of the deployment goes, what tools would you recommend, and where should they be run from? Something like SQLIO run from my Hyper-V cluster? I have determined that the only way I am going to see what this thing can do is to actually run some tests or workloads and see!

I did an IOMeter comparison from within some VMs on my cluster, but I don’t think that gives me real results because of the hypervisor layers it goes through. I’m close, I can feel it.

Then i will stop bothering you as well!! Thanks again Aidan,

Buddy.

Have a search for Jose Barreto’s posts on DiskSpd. That’s the tool MS uses and has released. It’s designed for what you want to do. In my tests (before DiskSpd), I actually got the best results from within the VM.
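
When you compare benchmark runs, a quick arithmetic sanity check helps. Throughput is IOPS times block size. By Little’s law, average latency is roughly the number of I/Os in flight divided by IOPS. This Python sketch assumes a run that keeps a fixed number of I/Os in flight against a single target, as with DiskSpd’s `-t` (threads) and `-o` (outstanding I/Os per thread) settings. The figures are hypothetical, not measured results:

```python
def throughput_mib_s(iops: float, block_bytes: int) -> float:
    """Throughput implied by an IOPS figure at a fixed block size."""
    return iops * block_bytes / 2**20

def implied_latency_ms(iops: float, threads: int, outstanding_per_thread: int) -> float:
    """Little's law: I/Os in flight = IOPS x latency, so latency = in-flight / IOPS."""
    in_flight = threads * outstanding_per_thread
    return in_flight / iops * 1000

# Hypothetical run: 8 KiB random I/O, 4 threads x 8 outstanding I/Os, 50,000 IOPS.
print(throughput_mib_s(50_000, 8192))              # 390.625
print(round(implied_latency_ms(50_000, 4, 8), 3))  # 0.64
```

If the latency the tool reports is far from the implied figure, check whether the I/O queue depth was really what you configured.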

Hi AFinn.

Thank you for your support and your knowledge. Your advice helped me solve issues with setting up Mellanox equipment.